Svem analysis DOE with replicated runs

In the last DOE discussion forum, SVEM analysis was discussed for a DOE with replicated runs. It was suggested to average the results of the replicated runs. But what if there is significant variation between the replicates? Would it not be better to run SVEM with an extra "replicate run" variable to estimate the within-replicate variation? If the within-replicate variation is important, SVEM will pick it up; in a subsequent mixed-model analysis, the replicate variable can then be assigned as a random effect and separated from the fixed effects that SVEM flagged as important.

Accepted Solutions

Re: Svem analysis DOE with replicated runs

Hi @frankderuyck,

SVEM analysis works great in two situations:

- To build a predictive model with very limited data, by stacking individual models that have been trained and validated on slightly different data (different weights for training and validation).

- To assess practical significance and increase the precision of effect estimates for unique individual experiments (no replicates) in a machine-learning way, thanks to model stacking.

No matter the situation, it's important to remember the context in which SVEM was introduced: to provide a validation framework for small datasets where no other validation technique is possible.

Talks and presentations: Re-Thinking the Design and Analysis of Experiments? (2021-EU-30MP-776) - JMP User Community

SVEM: A Paradigm Shift in Design and Analysis of Experiments (2021-EU-45MP-779) - JMP User Community

So, conceptually, I have mixed feelings about combining SVEM and replicates, as this looks like merging two model-building methodologies that rely on different concepts, objectives and validation approaches: statistical modeling, with screening based on statistical significance and error/noise estimation from replicated designs, vs. predictive modeling, based on predictive accuracy and bootstrapping/model stacking.

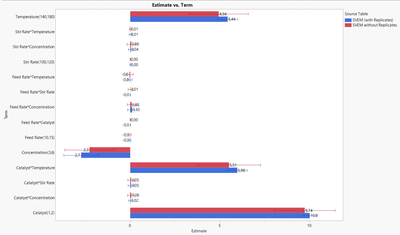

In the presence of replicates, I fear that data leakage may occur when using SVEM for model building: as each run randomly receives training and validation weights (in an anticorrelated way), replicate runs can get different emphasis in training and validation even though they represent the same treatment! So model building may be slightly biased (the same treatment informs both the training and validation sets through replicates with different weights), which may result in "overconfidence" and a big decrease in the standard deviations of the term estimates.
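To make the weighting concern concrete, here is a minimal sketch of anticorrelated training/validation weights. It assumes the fractionally weighted bootstrap scheme as described in Lemkus et al. (2021), where each run draws U ~ Uniform(0, 1) and receives a training weight of -ln(U) and a validation weight of -ln(1 - U). The function name `svem_weight_pairs` and the treatment labels are hypothetical, and this is not JMP's implementation.

```python
import math
import random

def svem_weight_pairs(n_runs, rng):
    """Draw one (training, validation) weight pair per run.

    Assumed scheme: U ~ Uniform(0, 1), training = -ln(U),
    validation = -ln(1 - U), so a run that is heavy in training
    is light in validation and vice versa.
    """
    pairs = []
    for _ in range(n_runs):
        # Clamp away from 0 and 1 so both logarithms stay finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        pairs.append((-math.log(u), -math.log(1.0 - u)))
    return pairs

# Hypothetical design: two treatments, three replicate runs each.
treatments = ["A", "A", "A", "B", "B", "B"]
rng = random.Random(1)
weights = svem_weight_pairs(len(treatments), rng)

for label, (w_train, w_valid) in zip(treatments, weights):
    print(f"{label}: train={w_train:.2f}  valid={w_valid:.2f}")
```

Because every run draws its own U, the three replicates of treatment A generally end up with different training and validation emphasis, which is the situation described above.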

When increasing the variability between replicates on the same example (the Reactor 20 Custom JMP dataset with replicate runs added, up to 30 runs, vs. the original Reactor 20 Custom dataset), you can see that SVEM on the design with replicates and SVEM on the design without replicates give fairly similar estimates and standard errors.

So again, I don't see the benefit of merging these two techniques: you would be combining two (very) different validation approaches, which is not what SVEM was created for.

I'm also skeptical about using SVEM to estimate within-replicate variation: with replicates, a standard analysis already gives you this information, and SVEM, like bootstrapping, might underestimate the standard deviation. See the great interactive visualization of bootstrapping: Seeing Theory - Frequentist Inference
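A small sketch of why a plain bootstrap tends to report a smaller standard deviation on small samples: resampling with replacement reproduces the plug-in variance (divide by n) rather than the unbiased one (divide by n - 1), so the bootstrap standard error of a mean is smaller by roughly a factor of sqrt((n - 1)/n). The sample values are hypothetical, and whether SVEM shows exactly this behavior is an assumption, not something the thread establishes.

```python
import random
import statistics

def bootstrap_se_of_mean(sample, n_boot=2000, seed=0):
    """Standard deviation of the mean across bootstrap resamples."""
    rng = random.Random(seed)
    n = len(sample)
    means = [statistics.fmean(rng.choices(sample, k=n)) for _ in range(n_boot)]
    return statistics.stdev(means)

# Hypothetical replicate measurements for a single treatment.
sample = [9.8, 10.4, 10.1, 9.5, 10.9]
n = len(sample)

classical_se = statistics.stdev(sample) / n ** 0.5   # uses n - 1
plugin_se = statistics.pstdev(sample) / n ** 0.5     # uses n
boot_se = bootstrap_se_of_mean(sample)

print(f"classical SE: {classical_se:.3f}")
print(f"plug-in SE:   {plugin_se:.3f}")
print(f"bootstrap SE: {boot_se:.3f}")
```

With only five observations the gap is about 10%, and it shrinks as n grows.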

For others interested in this discussion, here are the recordings of DoE Club Q4: Recordings DOE Club Q4 2024

Hope this response makes sense; I'm interested in input from other members,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: Svem analysis DOE with replicated runs

I'm sorry I didn't attend the DOE club meeting and am not familiar with SVEM analysis (I did download the file and will review). Analysis of experiments is a personal choice. There are many ways to do this. Before I comment, I want to make sure you mean replicate and not repeat. That is, replicates are independent runs of the identical treatment combination, each run producing its own independent experimental unit.

Here are my uninformed comments:

1. When you summarize data you invariably throw out information. If you are going to average, you should also consider the variation of the data you averaged as a response. IMHO, you throw out information (and DFs) if you average across the replicates. Now, if you are doing repeats, averaging is a great idea; also consider modeling the variation as a response.

2. If these are indeed replicates, it seems that you want to understand what changes between replicates. If you can identify and assign what factors (noise) change between replicates, you can learn a great deal about the robustness of factor effects. This can be an excellent strategy to simultaneously increase inference space and increase the precision of the design.

"Block what you can, randomize what you cannot". G.E.P. Box

Confound what you can identify and assign with the block. For factors you have failed to identify, randomization is the strategy.
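As an illustration of point 1, here is a minimal sketch that summarizes replicates per treatment while keeping the spread as a second response and counting the pure-error degrees of freedom that averaging alone would discard. The data, factor coding and the helper name `summarize_replicates` are hypothetical.

```python
from collections import defaultdict
import statistics

# Hypothetical replicated runs: (coded factor settings, response).
runs = [
    ((-1, -1), 70.0), ((-1, -1), 72.0), ((-1, -1), 74.0),
    ((-1, +1), 75.0), ((-1, +1), 79.0),
    ((+1, +1), 80.0), ((+1, +1), 80.5), ((+1, +1), 81.0),
]

def summarize_replicates(runs):
    """Return n, mean and standard deviation for each treatment."""
    groups = defaultdict(list)
    for treatment, y in runs:
        groups[treatment].append(y)
    summary = {}
    for treatment, ys in groups.items():
        # The SD is undefined for a single run, so report None there.
        sd = statistics.stdev(ys) if len(ys) > 1 else None
        summary[treatment] = {"n": len(ys), "mean": statistics.fmean(ys), "sd": sd}
    return summary

summary = summarize_replicates(runs)

# Degrees of freedom for pure error that are lost if only means are kept.
pure_error_df = sum(s["n"] - 1 for s in summary.values())

for treatment, s in summary.items():
    sd_text = "n/a" if s["sd"] is None else f"{s['sd']:.2f}"
    print(f"{treatment}: n={s['n']}  mean={s['mean']:.2f}  sd={sd_text}")
print(f"pure-error DF: {pure_error_df}")
```

The per-treatment `sd` column can then be modeled as its own response next to the mean.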

Re: Svem analysis DOE with replicated runs

Hi Victor, when the variation between replicates is low, one can expect little influence from increasing the number of replicates. But what if there is quite high variability between the replicates? Would it not be worthwhile to introduce a blocking factor for each replication and include this blocking effect in SVEM?

Re: Svem analysis DOE with replicated runs

Hi Frank,

I would still think this may not be a good idea, as the SVEM method seems to be "design-agnostic": it won't take into account any grouping, blocking structure or presence of replicates, possibly resulting in "overconfidence"/data leakage/a decreased standard deviation for the blocking effect term estimate.

Also, as it is a specific type of bootstrapping, it may decrease the standard deviation estimate, as shown before with the interactive visualization.

EDIT: More info on SVEM:

Overview of Self-Validated Ensemble Models

Lemkus, T., Gotwalt, C., Ramsey, P., and Weese, M. (2021). “Self-Validated Ensemble Models for Design of Experiments.” Chemometrics and Intelligent Laboratory Systems 219:104439.

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: Svem analysis DOE with replicated runs

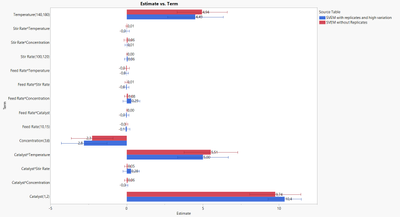

I agree that if the variation between replicates is not too high, averaged results can be used for modeling. This is different when there is significant replicate variation; attached is a study with significant variation between replicates caused by process noise. The mixed model with Replicate as a random effect and SVEM with a Replicate factor show similar results. When the replicate results are averaged, the Generalized Regression (AICc) and SVEM models are also similar to each other, and much better, but their results differ from those of the mixed model and SVEM + Replicate: in the two analyses that use replicate averaging, more effects are significant! This case shows that one needs to be careful when averaging replicates with significant variation; also, SVEM + Replicate picks up the random replicate effect quite well.

Re: Svem analysis DOE with replicated runs

Analysis screenshots below:

1. Mixed model & SVEM + Replicate effect

2. Averaged replicate results: Generalized Regression forward selection (AICc) & SVEM

Re: Svem analysis DOE with replicated runs

Interesting case study, thanks for sharing @frankderuyck.

Comparing the term estimates and their standard deviations, we can see that using SVEM with replicates does indeed influence the standard deviations. In my example with medium replicate variability, SVEM significantly reduced the standard deviations of the term estimates, as data leakage between training and validation weights brought in information about the term estimates during model building.

In your case, as the variability between replicates is very high, the data leakage doesn't carry information between training and validation of the model (in fact, the model may be even more "lost" because of this large replicate variability), so the standard deviations of the term estimates are higher than for the other methods. Using SVEM with average values or a REML model gives very similar information, and Generalized Regression forward selection on average values might give over-optimistic standard deviation estimates.

So I think general advice could be not to use SVEM on raw data involving replicates (because of the biased model fitting that data leakage would create). Either use SVEM with average values per treatment (but you lose information about the within-treatment variability), or use other methods for raw data with replicates (REML/Fit Model approaches).

Interested to have the points of view of @philramsey, @chris_gotwalt1 and any other curious members of the Community,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: Svem analysis DOE with replicated runs

I would propose a two-step approach: (1) use SVEM + replicate factor to screen for the strongest effects and (2) use those effects to build a mixed model with replicate as a random effect.

Re: Svem analysis DOE with replicated runs

When the weight of the replicate effect in SVEM is low, one can use regular GenReg/OLS with replicate averages.
