
Discussions

Solve problems, and share tips and tricks with other JMP users. - JMP User Community


RAM per row

Can someone tell me the calculation for RAM per row?

I'm running JMP 11 on a 32-bit Windows 7 machine with 4.00 GB of RAM, and my data table has 14 variables: 7 numeric and 7 character.

I know a 64-bit machine would be better. As a workaround for my current situation, I'm curious what the largest number of rows is that I can have in a data table and still run an analysis. If I know the memory limit, I can subset my data table into smaller tables, each with an appropriate number of rows.

Thanks!

Accepted Solutions


Re: RAM per row

Our rule of thumb is that you need twice as much memory as the largest data table you'll use. This is true for 32-bit and 64-bit systems.

To get an estimate of the size of a data table in memory, look at the size of the .JMP file on disk.* That's a pretty reliable indicator of how much memory the data table will use.

JMP running on a 32-bit machine can access a maximum of 2GB of memory.

So, on a 32-bit system, the largest data table you can comfortably use is 1GB.

This is only a general guideline as some operations and analyses can require lots of additional memory.

-Jeff

*Assuming you aren't compressing the columns when you save.
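Jeff's rule of thumb can be turned into a rough row estimate. The sketch below is only an illustration, assuming (as Jeff notes) that the uncompressed .JMP file size on disk approximates the table's in-memory size and that the size per row stays roughly constant. The function name `max_rows` and its parameters are hypothetical, not part of JMP.

```python
# Back-of-the-envelope row limit from Jeff's rule of thumb:
# the memory JMP can use should be about twice the size of the largest data table.
# Assumption: the uncompressed .JMP file size on disk approximates its in-memory size.

KIB = 1024        # bytes per KB, as Windows reports it
GIB = 1024 ** 3   # bytes per GB

def max_rows(file_size_kb: int, row_count: int, addressable_gb: int = 2) -> int:
    """Largest row count whose table fits in half of the memory JMP can address.

    file_size_kb   -- size on disk of an existing .JMP file, in KB
    row_count      -- number of rows in that file
    addressable_gb -- memory JMP can address (2 GB for JMP on a 32-bit system)
    """
    file_bytes = file_size_kb * KIB
    table_budget = addressable_gb * GIB // 2   # the rule of thumb leaves the other half free
    # budget / (file_bytes / row_count), kept in integer arithmetic to avoid rounding
    return table_budget * row_count // file_bytes

if __name__ == "__main__":
    # jb's later figures: 200,000 rows saved as about 20,000 KB.
    print(f"{max_rows(20_000, 200_000):,}")  # 10,485,760
```

jb's later measurements (JMP froze at about 200,000 rows while running a control chart, building a journal, and exporting a PDF) suggest the practical limit can sit far below this table-only figure once analyses are involved, which is Jeff's closing caveat.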


Re: RAM per row

jb,

I have not made a calculation, but I have a potential workaround. Columns use the most memory, and character data uses more than numeric data.

All computers are not created equal, and the processes running in the background on your computer may differ from those running on someone else's. To find a practical limit on your own machine:

- Start or restart your computer with no applications open.

- Open Task Manager, select Performance, and check the RAM in use.

- Open JMP and check the RAM in use again.

- Open the data table of interest and scale back the number of rows to find the break-even point on RAM.

- Run a "simple" analysis such as Fit Y by X and check the RAM usage.

The analysis platforms are where the amount of available RAM has the most effect on performance. It comes down to how long you are willing to wait for a given analysis to complete. You can also use a variable shrinkage method such as Partition to help determine which variables are most important to your model, and then use only those columns in your analysis.
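The arithmetic behind Bill's Task Manager procedure is simple subtraction. The sketch below is illustrative only: the functions `table_memory_per_row` and `rows_that_fit` are hypothetical, and it assumes you record memory in use (in KB, as Task Manager reports it) after opening JMP and again after opening the table, with nothing else changing in between.

```python
# Hypothetical helpers for Bill's Task Manager procedure.
# Inputs are memory-in-use readings in KB (1 KB = 1024 bytes), as Task Manager shows them.

def table_memory_per_row(kb_after_jmp: int, kb_after_table: int, row_count: int) -> float:
    """Approximate bytes of RAM each row adds once the table is open."""
    if row_count <= 0:
        raise ValueError("row_count must be positive")
    delta_kb = kb_after_table - kb_after_jmp   # memory attributed to the data table
    return delta_kb * 1024 / row_count

def rows_that_fit(free_kb: int, bytes_per_row: float) -> int:
    """Rows that would fit in the remaining free memory at the measured per-row cost.

    This ignores the extra memory an analysis platform (e.g. Fit Y by X) needs,
    so treat the result as an upper bound.
    """
    return int(free_kb * 1024 // bytes_per_row)
```

Repeating the readings at a few different row counts, as Bill suggests, shows whether the per-row cost stays roughly constant on your machine.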

Hope this helps.

Best,

Bill


Re: RAM per row

Hi jb,

Depending on the format of your character columns and the number of rows, one option you may want to consider is Try to Compress Character Columns, which is available in the Import Settings under Preferences > Text Data Files. For it to work properly, you may also need to enable Allow List Check (it is enabled on my system).

This option scans character columns to identify those with 256 or fewer unique values. This is common in our data, where we have long categorical label names but fewer than 100 unique values. For character columns that meet the 256-or-fewer criterion, JMP can compress the values so that each cell takes only 1 byte. Files take longer to open, but the option can save a lot of memory depending on the structure of your data. Even better, everything looks the same on screen, so it happens rather seamlessly.
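The 1-byte figure follows from the fact that a single byte holds 256 distinct codes (0 to 255), so each cell can store a small code pointing into a list of the column's unique strings. The sketch below illustrates that general dictionary-encoding idea; it is an assumption about the principle, not JMP's internal implementation, and `compress_column`, `decode`, and the sample labels are made up for illustration.

```python
from typing import Dict, List, Optional, Tuple

MAX_LEVELS = 256  # one byte can hold 256 distinct codes (0..255)

def compress_column(values: List[str]) -> Optional[Tuple[List[str], bytes]]:
    """Dictionary-encode a character column into one byte per cell.

    Returns (levels, codes) with levels[codes[i]] == values[i], or None when the
    column has more than 256 unique values and the codes would not fit in a byte.
    """
    levels: List[str] = []
    index: Dict[str, int] = {}
    for v in values:
        if v not in index:
            if len(levels) == MAX_LEVELS:
                return None  # too many unique values; leave the column uncompressed
            index[v] = len(levels)
            levels.append(v)
    return levels, bytes(index[v] for v in values)

def decode(levels: List[str], codes: bytes) -> List[str]:
    """Rebuild the original cell values from the codes."""
    return [levels[c] for c in codes]

if __name__ == "__main__":
    column = ["Long categorical label A", "Long categorical label B"] * 50_000
    levels, codes = compress_column(column)
    print(len(levels), len(codes))  # 2 100000
```

Each long label is stored once, and every cell costs one byte instead of a full copy of the string, which is why the savings grow with label length and row count.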


Re: RAM per row

Interesting idea! Unfortunately, I'm importing from Excel.


Re: RAM per row

Thank you everyone for your suggestions.

I need most of the (long) nominal character columns for grouping. Removing a few ordinal character columns had a negligible effect on performance.

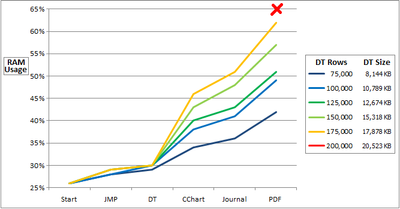

Yes, every machine is different (and runs different background processes). Based on my M's (machine, material/data, and method), JMP shuts down (or freezes) when the machine approaches 65% RAM usage; this was with a data table of 200,000 rows (about 20,000 KB). At that point, Windows Task Manager showed JMP's memory usage at 1,343,424 KB.

Note that I used these steps: (1) open JMP, (2) open the data table and color rows, (3) run CChart, (4) create a journal, and (5) save as PDF.

I would appreciate any help interpreting data table file sizes (converting from KB to GB) for JMP memory management. Thanks!


Re: RAM per row

Interesting thread. Unfortunately, you are working on a 32-bit machine, so you are very restricted. As Jeff mentioned, JMP can access only 2 GB of RAM on a 32-bit system, and that limitation comes from Windows. While a 32-bit system can theoretically address 4 GB, the operating system tends to impose a limit considerably below that because of overhead. I know that in Windows XP the limit was effectively 3 GB, but by the time I was using Vista/7, I was working on 64-bit computers.

Speaking of overhead, there is no direct correlation between file size and memory usage for an application using said file. The memory requirements for the application can be appreciably more than the file size because 1) the application requires memory and 2) executing processes in the application requires memory. An application such as JMP can have considerable overhead due to the nature of the work you are performing.

As for converting KB to GB, that has always been a sore point for me with Windows, one of many things I hate about it. OS X reports file sizes with the appropriate units, but Windows insists on behaving as if it were the 1980s and everything were measured in kilobytes. Storage manufacturers report capacity in base-10 units (1 GB = 1,000,000,000 bytes), but Windows reports memory and file sizes in units that are powers of 1024 (base 2, 2^10). Thus,

- 1 KB = 1024 bytes

- 1 MB = 1024 KB

- 1 GB = 1024 MB
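Applying those factors to jb's numbers means dividing by 1024 once per step. The helper below is a minimal sketch (the name `kb_to` is hypothetical), assuming the KB figures come from Windows Explorer or Task Manager and therefore use 1 KB = 1024 bytes.

```python
# Convert Windows-style KB figures (1 KB = 1024 bytes) to MB or GB.
STEPS = {"KB": 0, "MB": 1, "GB": 2}

def kb_to(kb: float, unit: str = "GB") -> float:
    """Divide by 1024 once for each step from KB up to the target unit."""
    return kb / 1024 ** STEPS[unit]

if __name__ == "__main__":
    # Figures from jb's post: the data table on disk and JMP's memory in Task Manager.
    print(f"{kb_to(20_000, 'MB'):.2f} MB")     # 19.53 MB
    print(f"{kb_to(1_343_424, 'GB'):.2f} GB")  # 1.28 GB
```

By this arithmetic, the 20,000 KB table is only about 0.02 GB, while JMP itself was using about 1.28 GB when it froze, which illustrates the overhead point above.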


Re: RAM per row

Hi, I recently upgraded from JMP 11 to JMP 12.

Once I rerun my performance tests with JMP 12, I can look into these computations. Thanks!


Re: RAM per row

Hi jb,

I agree with all the others regarding RAM, data size, and the other points. In addition, when you look at RAM usage, consider that exporting to PDF makes the system start Acrobat Reader or whichever PDF program you use. This increases RAM usage, as you can see in your graph. Depending on how you save the report in the journal (with the data table in place, or just the script that generates the report with a reference to the data table), you will use more or less memory, and the journal file will be larger or smaller. As a consequence, the PDF file size will also change (simply because a picture is larger than a line of script; but I guess you want the graphics in the PDF as well, not just a script line).

That said, there is a lot of overhead, independent of the actual file size, that you must not forget to consider when calculating the RAM you will need.

It may also help to close data tables and reports as soon as you no longer need them, e.g., after saving to the journal and before saving to PDF. I think it is worth a try. You could also change your system settings (if you are on Windows) to allow 3 GB of RAM usage even on 32-bit systems. You'll find many step-by-step guides for your system on the web.

Cheers,

Martin


Re: RAM per row

Thanks, Martin.

After I created my journal, I closed the data table and the analysis. This freed up 16% of my working memory for creating the PDF, and I was able to create the PDF successfully.

I will also look into the other methods suggested by you and others. Sounds like there are a lot of variables that contribute to this.

A work in progress!


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
