
JMP stability test report questions

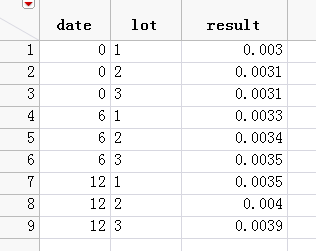

stability test data as follow:

Using the Degradation > Stability Test platform, I assigned result as the response, date as the time, and lot as the label, entered 0.012 for the Upper Spec Limit, and clicked OK.

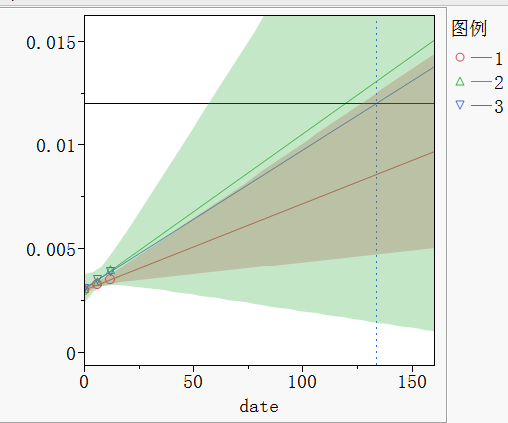

Q1: The report shows different slopes and different intercepts, but the chart (the left one) did not choose the shortest date as the expiration date (as the ICH guideline specifies). Why?

Q2: What is the meaning of "Source A"?

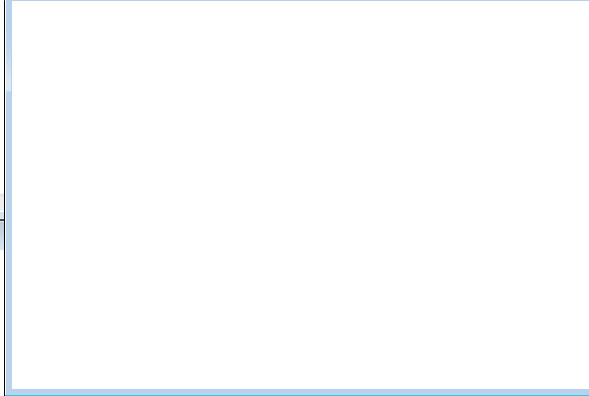

Q3: According to the JMP report, the expiration date is 133. On the other hand, when I use the Minitab 17 "Stability test Platform" to forecast from the same data, it also concludes that the model has different slopes and different intercepts, but it gives an expiration date of 94 (the right chart). What is the difference between JMP and Minitab in the Stability Test platform?

Accepted Solutions


Re: JMP stability test report questions

My data table and analysis attached.


Re: JMP stability test report questions

I get "The best model accepted at the significance level of 0.25 has Different intercepts and Different slopes. The model suggests the earliest crossing time at 57.03571 with 95 percent confidence. ICH Guidelines indicate an expiration time of 57.03571."

Could you save your script to the data table and attach the data table in a reply to this discussion?

Or you could send the data table and your questions to support@jmp.com.

Regarding Q2: Briefly, Source A is explained in the legend. It is part of the model comparison between the different possible models (as per ICH).
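As a rough illustration of the kind of model comparison mentioned here, the sketch below computes F statistics for two nested comparisons that are commonly used for ICH-style poolability testing: equal slopes, and equal intercepts given a common slope. It is only a sketch under stated assumptions. The data are made up, the function names are hypothetical, the full-model mean square is assumed as the denominator of both tests, and nothing here confirms how JMP labels or computes its "Source" rows. Each p-value would come from the F distribution (for example `scipy.stats.f.sf`) and be compared against 0.25.

```python
def centered_sums(xs, ys):
    """Return (n, Sxx, Sxy, Syy) for one group of points."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    syy = sum((y - my) ** 2 for y in ys)
    return n, sxx, sxy, syy


def poolability_f_stats(lots):
    """lots: dict mapping a lot label to (times, results)."""
    k = len(lots)
    per_lot = [centered_sums(t, y) for t, y in lots.values()]
    n_total = sum(s[0] for s in per_lot)

    # Full model: separate intercept and slope for every lot.
    rss_full = sum(syy - sxy ** 2 / sxx for _, sxx, sxy, syy in per_lot)
    df_full = n_total - 2 * k

    # Reduced model 1: common slope, separate intercepts.
    sxx_w = sum(s[1] for s in per_lot)
    sxy_w = sum(s[2] for s in per_lot)
    syy_w = sum(s[3] for s in per_lot)
    rss_common_slope = syy_w - sxy_w ** 2 / sxx_w

    # Reduced model 2: one line through all lots.
    all_t = [t for ts, _ in lots.values() for t in ts]
    all_y = [y for _, ys in lots.values() for y in ys]
    _, sxx, sxy, syy = centered_sums(all_t, all_y)
    rss_common = syy - sxy ** 2 / sxx

    # Assumption: both tests use the full-model mean square as denominator.
    mse_full = rss_full / df_full
    return {
        "F_equal_slopes": (rss_common_slope - rss_full) / (k - 1) / mse_full,
        "F_equal_intercepts": (rss_common - rss_common_slope) / (k - 1) / mse_full,
        "df_numerator": k - 1,
        "df_denominator": df_full,
    }


# Made-up example: two lots measured at the same time points.
example = {
    "lot A": ([0, 3, 6, 9], [1.0, 2.1, 2.9, 4.0]),
    "lot B": ([0, 3, 6, 9], [1.0, 3.1, 4.9, 7.0]),
}
print(poolability_f_stats(example))
```

A large F statistic for equal slopes (small p-value) points towards the different-slopes model, which is the outcome reported in this thread.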




Re: JMP stability test report questions

This is my analysis. The expiration date of 133 is produced automatically by the report.


Re: JMP stability test report questions

Hi,

I suggest you contact technical support (email: support@jmp.com).

I ran your analysis from your table and got the same results as I had previously.

Regards,

Phil


Re: JMP stability test report questions

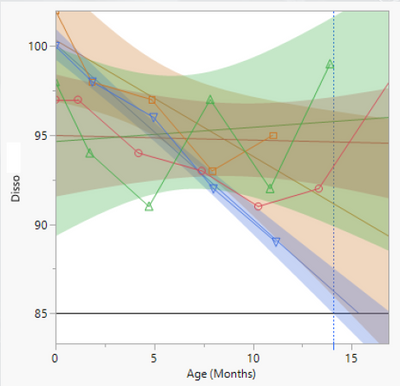

Hi Phil_Kay, I recently got a report from my CMO stating that the shelf life predicted by the JMP stability test platform is 14 months, which is very low. I analysed the input data for the 4 batches and found the variability so high that I would call the data haphazard (I am attaching a snapshot of the regression plot). "Garbage in leads to garbage out." Can you please guide me on how reliable the output is when the input data look like those depicted in the plot? Also, how do I assess the significance of this model? I mean, how do we assess whether the model is valid enough to predict the shelf life?


Re: JMP stability test report questions

Hi @Bhaskar ,

Sorry for the slow response - I have been out of the office for a few weeks.

Purely from the graph above, the result of 14 months seems like a reasonable conclusion.

Clearly there is a lot of variation between your batches. This is reflected in the fact that the stability protocol has determined that the model with different intercepts and different slopes is the most appropriate.

The batch coloured in blue shows a strong downward trend in Disso, which suggests that it would be less than 85 from around 14 months.

The other batches show less clear trends. I can't comment on why that is. That is something for you to think about. It might be that the precision of Disso measurement is poor. You might want to look at the Disso measurement system using Measurement Systems Analysis methods (a topic for another thread). It might also be that there truly are differences in how the product in different batches is degrading.

You ask about statistical significance. The Stability platform uses statistical testing to determine the best model according to the ICH guidelines. Then, for the model that has been determined, it gives you the 95% confidence intervals for the trend, which is effectively another test of statistical significance.

The statistical tests are detailed in the help documentation.
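To make the role of the confidence bound concrete, here is a minimal sketch of how a shelf life can be read off a per-batch regression when the response decreases towards a lower spec limit: fit a line to each batch, compute the one-sided 95% lower confidence bound for the mean trend, find the first time the bound drops below the limit, and take the earliest such time across batches. The data, the function names, the time grid, and the choice to fit each batch with its own residual variance are all assumptions for illustration. The Stability platform may pool the error variance or use different details, so this will not necessarily reproduce its numbers.

```python
import math

# One-sided 95% Student t critical values, indexed by degrees of freedom.
T95_ONE_SIDED = {1: 6.314, 2: 2.920, 3: 2.353, 4: 2.132, 5: 2.015,
                 6: 1.943, 7: 1.895, 8: 1.860, 9: 1.833, 10: 1.812}


def fit_line(times, values):
    """Ordinary least squares fit of values on times for one batch."""
    n = len(times)
    mt = sum(times) / n
    mv = sum(values) / n
    sxx = sum((t - mt) ** 2 for t in times)
    slope = sum((t - mt) * (v - mv) for t, v in zip(times, values)) / sxx
    intercept = mv - slope * mt
    rss = sum((v - intercept - slope * t) ** 2 for t, v in zip(times, values))
    s = math.sqrt(rss / (n - 2))  # residual standard deviation
    return intercept, slope, s, n, mt, sxx


def lower_bound(fit, t):
    """One-sided 95% lower confidence bound for the mean response at time t."""
    intercept, slope, s, n, mt, sxx = fit
    t_crit = T95_ONE_SIDED[n - 2]  # raises KeyError outside the small table
    half_width = t_crit * s * math.sqrt(1 / n + (t - mt) ** 2 / sxx)
    return intercept + slope * t - half_width


def crossing_time(times, values, lower_spec, horizon=60.0, step=0.01):
    """First grid time at which the lower bound falls below lower_spec."""
    fit = fit_line(times, values)
    for i in range(int(horizon / step) + 1):
        t = i * step
        if lower_bound(fit, t) < lower_spec:
            return t
    return None  # no crossing within the horizon


def shelf_life(batches, lower_spec):
    """Earliest crossing time over all batches (different-slopes case)."""
    crossings = [crossing_time(t, v, lower_spec) for t, v in batches.values()]
    crossings = [c for c in crossings if c is not None]
    return min(crossings) if crossings else None


# Made-up dissolution data for two batches (time in months).
batches = {
    "batch 1": ([0, 3, 6, 9, 12, 18], [98.0, 96.5, 95.8, 93.9, 92.7, 90.1]),
    "batch 2": ([0, 3, 6, 9, 12, 18], [97.0, 97.4, 95.1, 96.2, 94.0, 94.8]),
}
print(shelf_life(batches, lower_spec=85.0))
```

With noisy batches the confidence band widens quickly outside the observed time range, so the bound can cross the limit well before the fitted line does. That is one reason a single steeply trending batch can drive a short shelf life.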

I hope this helps.
