- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Discussions

Solve problems, and share tips and tricks with other JMP users.- JMP User Community

- :

- Discussions

- :

- Clean up a csv File

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Clean up a csv File

I have large quatity of csv files that need to be clean up. All of these files have same format which make the task a bit easy. My initial csv file has the following format:

| Item Recipe: (001) 48pt | |||||

| Stage Recipe: 48pt | |||||

| Batch ID: Prod1 | |||||

| Item ID: 42 | |||||

| Date/Time: 5/1/2017 4:28:56 PM | |||||

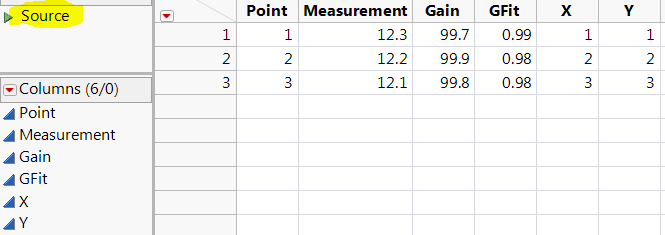

| Point | Measurement | Gain | GFit | X | Y |

| 1 | 12.3 | 99.7 | 0.99 | 1 | 1 |

| 2 | 12.2 | 99.9 | 0.98 | 2 | 2 |

| 3 | 12.1 | 99.8 | 0.98 | 3 | 3 |

The first 6 rows need to be clean up. I'm thinking of getting the first 6 rows into a new table and transpose them. After the traspose, join the table with the other section. My ideal JMP table is the following:

| Item Recipe: | Stage Recipe: | Batch ID: | Item ID: | Date/Time: | Point | Measurement | Gain | GFit | X | Y |

| (001) 48pt | 48pt | Prod1 | 42 | 5/1/2017 4:28:56 PM | 1 | 12.3 | 99.7 | 0.99 | 1 | 1 |

| (001) 48pt | 48pt | Prod1 | 42 | 5/1/2017 4:28:56 PM | 2 | 12.2 | 99.9 | 0.98 | 2 | 2 |

| (001) 48pt | 48pt | Prod1 | 42 | 5/1/2017 4:28:56 PM | 3 | 12.1 | 99.8 | 0.98 | 3 | 3 |

I'm trying a jsl script but it is behond my capability at the moment. Can someone give me an idea how to get this accomplish?

Thank you

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: Clean up a csv File

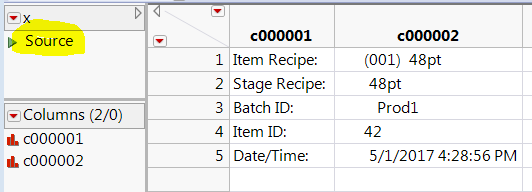

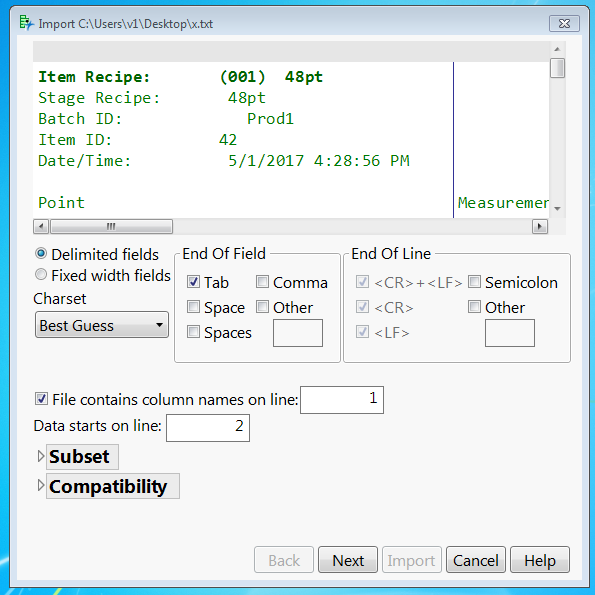

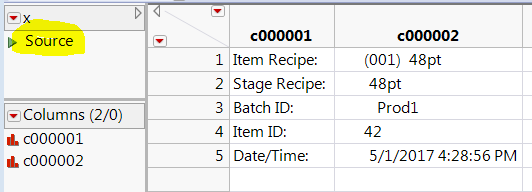

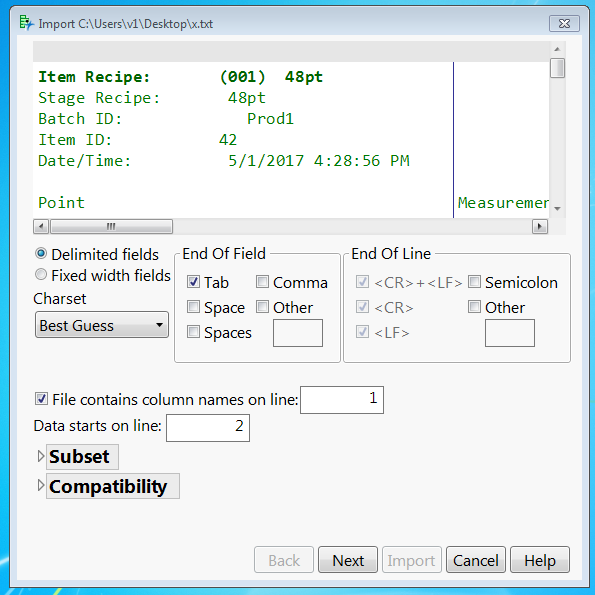

Nice description of the problem, and I think your plan will work. You can use the text import preview wizard to do part of the work. By limiting the number of lines to 5, you can get the header, and by choosing fixed width fields you can get two columns (or maybe spaces, plural, would work even better for the delimiter). (If my cut-and-paste of your data messed up tabs and spaces you might need to tweak the column delimiters a bit). The source script is in the table and you can grab a copy of it for your bigger script:

Open(

"C:\Users\v1\Desktop\x.txt",

columns(

Column( "c000001", Character, "Nominal" ),

Column( "c000002", Character, "Nominal" ),

Omitted Column( . )

),

Import Settings(

Fixed Column Widths( 13, 28, 98 ),

Strip Quotes( 0 ),

Use Apostrophe as Quotation Mark( 0 ),

Use Regional Settings( 0 ),

Scan Whole File( 1 ),

Treat empty columns as numeric( 0 ),

CompressNumericColumns( 0 ),

CompressCharacterColumns( 0 ),

CompressAllowListCheck( 0 ),

Labels( 0 ),

Column Names Start( 1 ),

Data Starts( 1 ),

Lines To Read( 5 ),

Year Rule( "20xx" )

)

)

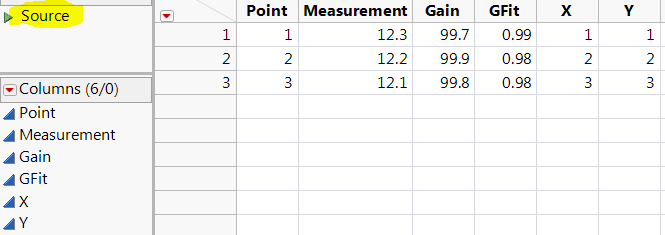

Next, import again, skipping the 5 lines, and grab that script too:

Open(

"C:\Users\v1\Desktop\x.txt",

columns(

Column( "Point", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "Measurement", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "Gain", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "GFit", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "X", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "Y", Numeric, "Continuous", Format( "Best", 12 ) )

),

Import Settings(

End Of Line( CRLF, CR, LF ),

End Of Field( Tab, CSV( 0 ) ),

Strip Quotes( 1 ),

Use Apostrophe as Quotation Mark( 0 ),

Use Regional Settings( 0 ),

Scan Whole File( 1 ),

Treat empty columns as numeric( 0 ),

CompressNumericColumns( 0 ),

CompressCharacterColumns( 0 ),

CompressAllowListCheck( 0 ),

Labels( 1 ),

Column Names Start( 7 ),

Data Starts( 8 ),

Lines To Read( "All" ),

Year Rule( "20xx" )

)

)

All of the JSL above was written by the text import preview wizard and stored in the source script attached to the table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: Clean up a csv File

Nice description of the problem, and I think your plan will work. You can use the text import preview wizard to do part of the work. By limiting the number of lines to 5, you can get the header, and by choosing fixed width fields you can get two columns (or maybe spaces, plural, would work even better for the delimiter). (If my cut-and-paste of your data messed up tabs and spaces you might need to tweak the column delimiters a bit). The source script is in the table and you can grab a copy of it for your bigger script:

Open(

"C:\Users\v1\Desktop\x.txt",

columns(

Column( "c000001", Character, "Nominal" ),

Column( "c000002", Character, "Nominal" ),

Omitted Column( . )

),

Import Settings(

Fixed Column Widths( 13, 28, 98 ),

Strip Quotes( 0 ),

Use Apostrophe as Quotation Mark( 0 ),

Use Regional Settings( 0 ),

Scan Whole File( 1 ),

Treat empty columns as numeric( 0 ),

CompressNumericColumns( 0 ),

CompressCharacterColumns( 0 ),

CompressAllowListCheck( 0 ),

Labels( 0 ),

Column Names Start( 1 ),

Data Starts( 1 ),

Lines To Read( 5 ),

Year Rule( "20xx" )

)

)

Next, import again, skipping the 5 lines, and grab that script too:

Open(

"C:\Users\v1\Desktop\x.txt",

columns(

Column( "Point", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "Measurement", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "Gain", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "GFit", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "X", Numeric, "Continuous", Format( "Best", 12 ) ),

Column( "Y", Numeric, "Continuous", Format( "Best", 12 ) )

),

Import Settings(

End Of Line( CRLF, CR, LF ),

End Of Field( Tab, CSV( 0 ) ),

Strip Quotes( 1 ),

Use Apostrophe as Quotation Mark( 0 ),

Use Regional Settings( 0 ),

Scan Whole File( 1 ),

Treat empty columns as numeric( 0 ),

CompressNumericColumns( 0 ),

CompressCharacterColumns( 0 ),

CompressAllowListCheck( 0 ),

Labels( 1 ),

Column Names Start( 7 ),

Data Starts( 8 ),

Lines To Read( "All" ),

Year Rule( "20xx" )

)

)

All of the JSL above was written by the text import preview wizard and stored in the source script attached to the table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: Clean up a csv File

Hi Craige,

It works as advertised.

Thank you so much

Recommended Articles

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.

- Terms of Use

- Privacy Statement

- Contact Us