- JMP will suspend normal business operations for our Winter Holiday beginning on Wednesday, Dec. 24, 2025, at 5:00 p.m. ET (2:00 p.m. ET for JMP Accounts Receivable).

Regular business hours will resume at 9:00 a.m. EST on Friday, Jan. 2, 2026. - We’re retiring the File Exchange at the end of this year. The JMP Marketplace is now your destination for add-ins and extensions.

Uncharted

- JMP User Community

- :

- Blogs

- :

- Uncharted

- :

- Ray Marching and WebP

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

This post shows a way-too-slow way to do ray marching and a perfectly fine Python way to make an animated WebP (like an animated Gif, but so much nicer!)

Part 1: file formats

This JSL uses the Python PIL library to make some test files to compare size, speed, and quality of PNG, JPEG, and WebP files. GIF is in part 2. If you want to run it, use 0 or 1 on the second line to make each picture. The RMSE values are the average error of the decoded pixels minus the source pixels in 0..255 color channel units. The error gets larger with more compression and makes the artifacts or blurriness in highly compressed images. PNG images use a lossless compression that results in a 0.0 RMSE.

The purple WebP dots are pretty consistently lower RMSE than the red JPEG dots for almost all compression levels. They also take about 20X as long to compress, which might be worth it if the images will be stored forever.

{picLeft, picRight, picTop, picBottom} = If( 1,

picURL = "https://upload.wikimedia.org/wikipedia/commons/1/1e/Rooster_-_Public_Domain.jpg"; // Lisafern, CC0, via Wikimedia Commons -- https://commons.wikimedia.org/wiki/Special:ListFiles/lisafern

{600000, 6000000, 35, 5};//

, //

picURL = "https://upload.wikimedia.org/wikipedia/commons/3/3a/Foster_graph_hamiltonian.png"; // public domain: https://commons.wikimedia.org/wiki/File:Foster_graph_hamiltonian.png

{450000, 10000000, 32, 8};//

);

gTempFiles = Convert File Path( "$temp\pil_webp\", "windows" );

Delete Directory( gTempFiles ); // careful!

Create Directory( gTempFiles ); // the directory exists, and is empty

// load python and make sure PIL is available...

rc = Python Execute(

{},

{},

"\[from PIL import Image, ImageSequence

import numpy as np

import os

import time

def rmse(imageA, imageB):

if imageA.size != imageB.size:

raise ValueError("Images must have the same dimensions to calculate RMSE")

imgA_np = np.asarray(imageA).astype("float")

imgB_np = np.asarray(imageB).astype("float")

err = np.sum((imgA_np - imgB_np) ** 2)

err /= float(imageA.size[0] * imageA.size[1])

return np.sqrt(err)

]\",

echo( 0 )

);

If( rc != 0,

Throw( "python init error rc=" || Char( rc ) ),

"ok"

);

// add a JSL image to a list of files for a .webp and .jpg and .gif

addNewFrame = Function( {filename, img},

img << saveimage( gTempFiles || filename || ".png", "png" ); // png is lossless and the rmse uses this for comparison

File Size( gTempFiles || filename || ".png" );

);

// the saveFrameTo... and saveFramesTo... functions

// reflect the way the formats are typically used;

// you would not normally make a single frame GIF

// and you can't really make a multi-frame JPG.

saveFrameToWebP = Function( {outputfilename, qual},

{filelist, webpname, rc}, //

filelist = Transform Each( {f}, Files In Directory( gTempFiles ), //

Convert File Path( gTempFiles || f, "windows" );//

);

If( N Items( filelist ) != 1, //

Throw( "saveFrameToWebP nitems=" || Char( N Items( filelist ) ) );//

);

webpname = Convert File Path( outputfilename, "windows" );

rc = Python Execute(

{filelist, webpname, qual}, // inputs

{_rmse, _ossize, _timew, _timer}, // outputs

"\[# https://pillow.readthedocs.io/en/stable/handbook/image-file-formats.html#webp

imgs = []

for f in filelist:

imgs.append(Image.open(f).convert('RGB'))

img=imgs[0]

_timew = time.perf_counter()

img.save(webpname,save_all=True,format="WEBP",

minimize_size=True,

lossless=False,quality=int(qual))

_timew = time.perf_counter() - _timew

_timer = time.perf_counter()

ir = Image.open(webpname).convert('RGB')

_timer = time.perf_counter() - _timer

_rmse = rmse(img,ir)

_ossize = os.path.getsize(webpname)

imgs = None

]\",

echo( 0 )

);

If( rc != 0, //

Throw( "saveFrameToWebP error rc=" || Char( rc ) );//

, //

Eval List( {_rmse, _ossize, _timew, _timer} );// return value

);

);

saveFrameToPng = Function( {outputfilename, qual},

{filelist, pngname, rc}, //

filelist = Transform Each( {f}, Files In Directory( gTempFiles ), //

Convert File Path( gTempFiles || f, "windows" );//

);

If( N Items( filelist ) != 1, //

Throw( "saveFrameToPng nitems=" || Char( N Items( filelist ) ) );//

);

pngname = Convert File Path( outputfilename, "windows" );

rc = Python Execute(

{filelist, pngname, qual}, // inputs

{_rmse, _ossize, _timew, _timer}, // outputs

"\[#

imgs = []

for f in filelist:

imgs.append(Image.open(f).convert('RGB'))

img=imgs[0]

_timew = time.perf_counter()

img.save(pngname,save_all=True,format="PNG",

optimize=True,

quality=int(qual))

_timew = time.perf_counter() - _timew

_timer = time.perf_counter()

ir = Image.open(pngname).convert('RGB')

_timer = time.perf_counter() - _timer

_rmse = rmse(img,ir)

_ossize = os.path.getsize(pngname)

imgs = None

]\",

echo( 0 )

);

If( rc != 0, //

Throw( "saveFrameToWebP error rc=" || Char( rc ) );//

, //

Eval List( {_rmse, _ossize, _timew, _timer} );// return value

);

);

saveFrameToJPG = Function( {outputfilename, qual},

{filelist, jpgname, rc}, //

filelist = Transform Each( {f}, Files In Directory( gTempFiles ), //

Convert File Path( gTempFiles || f, "windows" );//

);

If( N Items( filelist ) != 1, //

Throw( "saveFrameToJPG nitems=" || Char( N Items( filelist ) ) );//

);

jpgname = Convert File Path( outputfilename, "windows" );

rc = Python Execute(

{filelist, jpgname, qual}, // inputs

{_rmse, _ossize, _timew, _timer}, // outputs

"\[# https://pillow.readthedocs.io/en/stable/handbook/image-file-formats.html#webp

imgs = []

for f in filelist:

imgs.append(Image.open(f).convert('RGB'))

img=imgs[0]

_timew = time.perf_counter()

img.save(jpgname,format="JPEG",

optimize=True,

quality=int(qual))

_timew = time.perf_counter() - _timew

_timer = time.perf_counter()

ir = Image.open(jpgname).convert('RGB')

_timer = time.perf_counter() - _timer

_rmse = rmse(img,ir)

_ossize = os.path.getsize(jpgname)

imgs = None

]\",

echo( 0 )

);

If( rc != 0, //

Throw( "saveFrameToJPG error rc=" || Char( rc ) );//

, //

Eval List( {_rmse, _ossize, _timew, _timer} );// return value

);

);

// Lisafern, CC0, via Wikimedia Commons -- https://commons.wikimedia.org/wiki/Special:ListFiles/lisafern

img = New Image( picURL );

start = HP Time();

pngSize1 = addNewFrame( "1jsl.png", img );

JSLpngTime = (HP Time() - start) / 1e6;

dt = New Table( //

New Column( "extension", "character" ),

New Column( "size" ),

New Column( "timewrite" ),

New Column( "timeread" ),

New Column( "rmse" )

);

dt << addrows( 1 );

extension = "JSLPng";

size = pngSize1;

rmse = 0;

timewrite = JSLpngTime;

dt << addrows( 1 );

extension = "PILPng";

{rmse, size, timewrite, timeread} = saveFrameToPng( "$temp/1.png", 95 );

For( i = 0, i <= 100, i += 1,

dt << addrows( 1 );

extension = "WebP";

{rmse, size, timewrite, timeread} = saveFrameToWebP( "$temp/1.webp", i );

Wait( .01 );

dt << addrows( 1 );

extension = "Jpg";

{rmse, size, timewrite, timeread} = saveFrameToJpg( "$temp/1.jpg", i );

Wait( .01 );

);

dt << Graph Builder(

Size( 996, 713 ),

Show Control Panel( 0 ),

Legend Position( "Inside Floating" ),

Variables( X( :size ), Y( :rmse ), Overlay( :extension ), Size( :timewrite ) ),

Elements( Points( X, Y, Legend( 47 ) ) ),

SendToReport(

Dispatch( {}, "size", ScaleBox,

{Scale( "Log" ), Format( "Engineering SI", 12 ), Min( 1e4 ), Max( 6.1e6 ), Minor Ticks( 1 ), Label Row(

{Show Major Grid( 1 ), Show Minor Grid( 1 ), Show Minor Labels( 1 )}

)}

),

Dispatch( {}, "rmse", ScaleBox, {Min( -0.5 ), Max( 32 ), Inc( 5 ), Minor Ticks( 0 )} ),

Dispatch( {}, "400", ScaleBox,

{Legend Model(

47,

Type Properties( 0, "Marker", {Marker( "FilledCircle" )} ),

Properties( 1, {Line Color( 3 ), Marker( "FilledCircle" )}, Item ID( "Jpg", 1 ) ),

Properties( 2, {Line Color( 5 ), Marker( "FilledCircle" )}, Item ID( "JSLPng", 1 ) ),

Properties( 3, {Line Color( 6 ), Marker( "FilledCircle" )}, Item ID( "PILPng", 1 ) ),

Properties( 4, {Line Color( 8 ), Marker( "FilledCircle" )}, Item ID( "WebP", 1 ) ),

Properties( -1, {Marker( "FilledSquare" )}, Item ID( "Png", 1 ) )

)}

),

Dispatch( {}, "graph title", TextEditBox, {Set Text( "WebP vs Jpg vs Png" )} ),

Dispatch( {}, "size", TextEditBox, {Set Text( "Size on disk, bytes" )} ),

Dispatch( {}, "rmse", TextEditBox, {Set Text( "RMSE out of 255" )} ),

Dispatch( {}, "Graph Builder", FrameBox,

{Marker Drawing Mode( "Normal" ), Reference Line Order( 6 ), Add Image(

2, // layer 2 above grid lines

image( New Image( picURL ) ),

Bounds( Left( picLeft ), Right( picRight ), Top( picTop ), Bottom( picBottom ) )

), Add Graphics Script( 7, Description( "" ), Empty() )}

),

Dispatch( {}, "400", LegendBox, {Legend Position( {47, [4, 0, 3, 2, 1]} )} )

)

);

dt << Graph Builder(

Size( 638, 370 ),

Show Control Panel( 0 ),

Variables( X( :size ), Y( :timeread ), Overlay( :extension ) ),

Elements( Points( X, Y, Legend( 9 ) ) ),

SendToReport(

Dispatch( {}, "400", ScaleBox,

{Legend Model(

9,

Properties( 0, {Line Color( 3 )}, Item ID( "Jpg", 1 ) ),

Properties( 1, {Line Color( 5 )}, Item ID( "JSLPng", 1 ) ),

Properties( 2, {Line Color( 6 )}, Item ID( "PILPng", 1 ) ),

Properties( 3, {Line Color( 8 )}, Item ID( "WebP", 1 ) )

)}

),

Dispatch( {}, "400", LegendBox, {Legend Position( {9, [0, 3, 2, 1]} )} )

)

);Saving a real-world image. The orange and blue dots beyond a 3MB disk size show how PNG files fail to compress this data well. The original is 1264x2208 = 2,790,912 pixels of 3 bytes each (if uncompressed.) For (almost) any file size, the WebP has a smaller RMSE (better quality) than the same size JPEG, but the time to compress (marker size) is much larger.

Saving a hard-edged computer image. With this data, PNG out-performs JPG for a best-quality image. However many observers will be satisfied with small RMSE values, less than 5 or so, for either image type. The original is 2000x2000 = 4,000,000 pixels of 3 bytes each (if uncompressed.) Again, the WebP is higher quality compared to the same size JPEG but takes longer to compress.

Here's a quick look at the read times; they only apply to the PIL reader in Python, not FireFox or whatever browser might be displaying the images. It looks like WebP is 3X slower than JPEG to load.

None of WebP, JPEG, or PNG are clear winners (yet. Keep reading.) JPEG compresses fast, WebP compresses small, and PNG is lossless. My personal preference is to use PNG for most computer generated graphs and (in the past, at least) JPEG for real world images. JMP 19 image support does not include WebP without using Python.

Part 2: animated file formats

WebP and GIF formats both support storing multiple images that can be played back as a soundless loop. JMP can directly create an animated GIF and, with Python+PIL, an animated WebP. If you want to jump ahead, the much newer WebP significantly outshines the ancient GIF technology...see the two images at the end.

Several years ago I stumbled into https://www.shadertoy.com/ (don't look, you might not make it back for a while!) Shaders are tiny programs that run on graphics cards using the GPUs. Each pixel of the display is colored based on the output of the shader program. More GPUs generate more pixels, faster. The site is fascinating because you can modify the C-like shader program and re-run it in your browser. Your browser has access to the GPUs, and it is really fast.

The shader idea was originally intended to color 3D triangles where each corner has a color (based on material, orientation, lighting, ...) The pixels (usually called fragments) of the triangle are interpolated between the corners. However, there is no requirement that a shader use a 3D triangle; it can compute a color any way it wants. Some of the shadertoy examples compute a color based on the x-y pixel coordinates and the current time (to drive an animation, perhaps of wave-like motion in the ocean.)

The more interesting examples use an idea called ray marching. There are a lot of great tutorials on ray marching and signed distance functions. (An SDF for an object returns a positive distance from its surface unless the point has somehow gone inside, then a negative distance.) Briefly, ray marching puts a virtual screen of pixels in front of your eye/camera and follows a ray from the eye through a pixel into a bunch of signed distance functions, asking each SDF "How far is this point on the ray from you?". A sphere, cube, torus, plane, etc. all have simple SDFs. The magic of the ray march is to find the minimum answer from all the SDFs and move that far along the ray and repeat until the minimum distance is quite small. Each pixel typically requires a dozen iterations to sneak up on an object represented by an SDF. The same style of march can also discover if the point on the SDF is shadowed and what the slope of the surface is (which determines how much light bounces to the eye.)

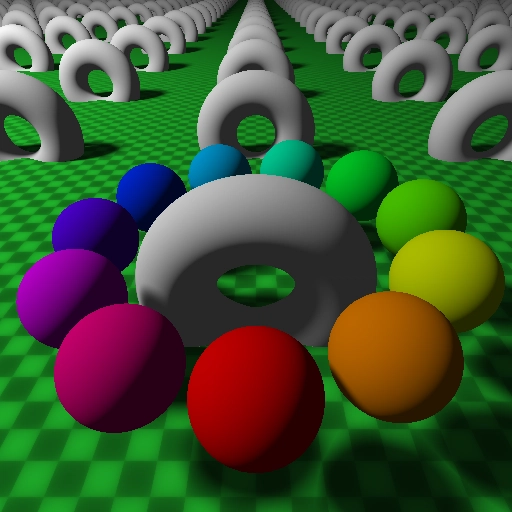

Here's the JSL For making both the animated GIF and the animated WebP from a 3D model with a camera moving around it. The bigger part of it is the code for the ray marching; you won't need that. The functions you are looking for are addNewFrame, which makes a file in a directory from a global variable named bitmap, and saveFrames, which takes all the files from the directory and builds the WebP or the GIF. You'll want to modify them to meet your needs. Everything else is specific to the ray marching and is educational more than useful (because it is slow.)

start = HP Time();

xypix = 512;//32 takes 22s/f; 256 takes 22 minutes/frame, 128 is 6 min/fr

nframes=128;

ambient=.0005; // .0001 barely visible

lightPosition = -40. || -40. || 0; // top left. z=0 is near eye, -1 closer to scene.

lightPower = 50;

filesdir="$desktop/donutpics/"; // not empty()!

makegif = "$desktop/donut.gif"; // empty() or "$desktop/filename.gif"

makewebp = "$desktop/donut.webp"; // empty() or "$desktop/filename.webp" -- requires jpip.bat install Pillow

//deletedirectory(filesdir);createdirectory(filesdir);

if(!Directory Exists(filesdir),throw("stopped because directory does not exist: "||filesdir));

if(nitems(filesindirectory(filesdir))!=0,throw("stopped because directory not empty: "||filesdir));

if(!isempty(makewebp), // need a snip of python to make webp...fail early if not there

rc = Python Execute({},{},

"\[from PIL import Image

]\",echo(0));

if(rc!=0, throw("python init error rc="||char(rc)),"ok");

);

addNewFrame = Function( {filename},{img}, // writes global bitmap to filesdir in .png format

img = New Image( bitmap );

img<<saveimage(filesdir||filename||".png","png");

);

// build .gif or .webp from the .png files in filesdir

saveFrames = Function( {duration,qual=100},{webpname,filelist,finalImage,img,i,rc},

filelist = Transform Each( {f}, Files In Directory( filesdir ),

if(!endswith(f,".png"),throw("stopped because "||f||" is not .png"));

Convert File Path( filesdir || f, "windows" ) );

If( !Is Empty( makewebp ),

webpname = Convert File Path(makewebp,"windows");

rc = Python Execute(

{filelist,webpname,duration,qual}, // inputs

{}, // outputs

"\[# https://pillow.readthedocs.io/en/stable/handbook/image-file-formats.html#webp

imgs = []

for f in filelist:

imgs.append(Image.open(f))

img=imgs[0]

img.save(webpname,save_all=True,format="WEBP",

append_images=imgs[1:],minimize_size=True,

duration=duration,loop=0,lossless=False,quality=qual)

imgs = None

]\", echo( 0 ));

If( rc != 0,Throw( "makewebp error rc=" || Char( rc ) ),"ok");

); // makewebp

/////////////////////////////

if(!isempty(makegif),

for(i=1,i<=nitems(filelist),i+=1,

img = newImage(filelist[i]);

if(i==1, // the first one...

finalImage = img; //

, // all the rest...

finalImage<<addFrame; //

finalImage<<setPixels(img<<getPixels); //

);

finalImage<<setFrameDuration(duration); // same duration every frame

);

finalImage<<saveImage( makegif,"gif");

); // make gif

); // saveframes

// get X,Y,Z rot functions return 4x4 using radians around each single axis; compose them in the order you need.

getXrot = Function( {_xr},

{xr},

xr = Identity( 4 ); // rotate x

xr[2, 2] = Cos( _xr );

xr[2, 3] = -Sin( _xr ); // https://www.brainvoyager.com/bv/doc/UsersGuide/CoordsAndTransforms/SpatialTransformationMatrices.html

xr[3, 2] = Sin( _xr ); // flips the sign of sin for x and y but not z, changing the handedness. trying to match opengl here.

xr[3, 3] = Cos( _xr );

xr;

);

getYrot = Function( {_yr},

{yr},

yr = Identity( 4 ); // rotate y

yr[1, 1] = Cos( _yr );

yr[1, 3] = Sin( _yr );

yr[3, 1] = -Sin( _yr );

yr[3, 3] = Cos( _yr );

yr;

);

getZrot = Function( {_zr},

{zr},

zr = Identity( 4 ); // rotate z

zr[1, 1] = Cos( _zr );

zr[1, 2] = -Sin( _zr );

zr[2, 1] = Sin( _zr );

zr[2, 2] = Cos( _zr );

zr;

);

// rotmat uses degrees and composes x, then y, then z rotation

rotmat = Function( {x, y, z}, // compose euler rotations in degrees, first x, then y, then z

getzrot( Pi() / 180 * z ) * getyrot( Pi() / 180 * y ) * getxrot( Pi() / 180 * x )

);

// scale and translate do x,y,z together, compose scale before/after translate as needed

scalemat = Function( {x, y, z},

((x) || (0) || (0) || (0)) |/ //

((0) || (y) || (0) || (0)) |/ //

((0) || (0) || (z) || (0)) |/ //

((0) || (0) || (0) || (1))

);

tranmat = Function( {x, y, z},

((1) || (0) || (0) || (x)) |/ //

((0) || (1) || (0) || (y)) |/ //

((0) || (0) || (1) || (z)) |/ //

((0) || (0) || (0) || (1))

);

// paralelassign needs to return an RGB with more than 8 bits for each color, stored in an 8-bight double with >51 bits of integer available.

cscale = 2 ^ 17;// 3*17==51bits in 52-53bit double. sign bit is also used elsewhere.

cscaleMax = 2 ^ 3;// biggest rgb packcolor handles

// packcolor is inside the parallel assign to build 17 bit per color doubles; the

// >8 bit per color resolution helps delay the scaling without loss of color.

// unpackcolor is needed after the parallelassign.

unpackcolor = Function( {pc},

{r, g, b},

r = Mod( pc, cscale ) / (cscale / cscaleMax - 1);

pc = Floor( pc / cscale );

g = Mod( pc, cscale ) / (cscale / cscaleMax - 1);

pc = Floor( pc / cscale );

b = Mod( pc, cscale ) / (cscale / cscaleMax - 1);

Eval List( {r, g, b} );

);

finalImage=0; // a flag to recreate the animated gif

// if you are going to use this for more than a simple gif-loop,

// you could repackage this. For now it uses an "time" from 0 to 1

// to make a complete orbit of the camera. Shadertoy uses time "t"

// and makes an angle from that; shadertoy is not typically making

// a loop for a gif, but rather a mouse driven display.

// using a power of 2 is not required for the step but does make the

// begin and end match up; the <1.0 means the end frame is left out.

for(time=0.0,time<1.0,time+=1/nframes,

// y rotation (vertical on screen) is done over the angles. then

// a small tilt on X to make the camera look down at the tiled floor.

// then the camera is pulled back so the scene, which is near 0,0,0

// will be in view. Back means the Z axis; the wording around this

// is always confusing!

angle = time*2*pi();

anim_8=8*angle;

anim_7=7*angle;

anim_3=3*angle;

anim_1=1*angle;

camera = tranmat(0,0,-8)*getXrot(-.1 *2*pi()+0.06*sin(anim_1))*getYrot(anim_1);

// the scene list is a list of lists... and the lists must be evaluated to store an efficient value.

scene = Eval List(

{

// tranmat: Y positive down screen, z positive toward camera

Eval List(

{"torus", Expr(

ptrep = pt[1]-12*round(pt[1]/12)||pt[2]-12*round(pt[2]/12)||pt[3]; // repeat the torus on the X and Z axis (rotated onto Y) https://iquilezles.org/articles/sdfrepetition/

sdTorus( ptrep, [2 1] );

), Eval List( {"solid", RGB Color( "white" )} ), //

Inverse( scalemat( 1, 1, 1 ) * tranmat( 0, 1/*down to the floor*/, 0 ) * rotmat(90,0,0))}

), //

Eval List(

{"floor", Expr(

sdPlane( pt, [0 -1 0], 2.1 )

), Eval List( {"checker", RGB Color( "green" )} ), //

Identity( 4 )}

)//

}

);

nspheres = 11;// add n bouncing spheres in a circle. the Y axis anim8 + p0 phases the bounces

for( p=0,p<nspheres*1.00000001,p+=1,

p0 = (p/nspheres)*2*pi();

scene[nitems(scene)+1] =

Eval List(

{"sphere", Expr( sdSphere( pt, 1 ) ), Eval List( {"solid", hlscolor(p0/(2*pi()),.5,1)} ), // color based on p0

Inverse( rotmat( 0, 0, 0 ) * scalemat( 1, 1, 1 ) * tranmat( 4*sin(p0), -(1.5+sin(anim_8+(p0)))*0.25, 4*cos(p0) ) )} // and position and height

);

);

// choose your pain level; double the x and y is 4 times as long

bitmap = J( xypix, xypix, 0 );

//bitmap = J( 32, 32, 0 );// ~3 seconds for a single image

//bitmap = J( 64, 64, 0 );//

//bitmap = J( 128, 128, 0 );//

//bitmap = J( 256, 256, 0 );// 6 min/image

//bitmap = J( 512, 512, 0 );// 35 min/image

//bitmap = J( 1024, 1024, 0 );//

// JSL's parallelassign function assigns a value to each element of an

// array, using your multiple CPU (not GPU) processors. Each CPU thread

// needs an isolated environment of local data (even the read-only data).

// The first half of this function call is a {list} argument of the local

// variables that each thread can use. The second (smaller, for now) half

// after the local list is the JSL code that is re-executed for each element

// of the array on one of your CPU processors. You have a small number,

// typically 4 to 32. This code was developed on an old machine with 4.

Parallel Assign(

{// locals for each thread

camera = camera, // this is how camera (20 lines above) is copied in to a local of the same name

invCamera = Inverse( camera ), // and the computed inverse

cscale = cscale, //

cscaleMax = cscaleMax, //

xypix = xypix, // the picture size in pixels

nframes=nframes, //

ambient=ambient, //

// anim_8=anim_8, //

// anim_7=anim_7, //

// anim_3=anim_3, //

zdistance = (-42.25) + 9.75 * log(xypix,2) + 1.625 * (log(xypix,2) - 7) * (log(xypix,2) - 7), // see way below

// JSL functions must also be copied in because the JSL interpreter may

// optimize them and it won't do to have two threads simultaneously tweaking

// the same code. Letting the function function build the code as one of

// the locals is an easy way to do it. When packcolor runs, the cscale(Max)

// variable it uses is the local copy, not the global one that was copied in.

// packcolor is the opposite of unpackcolor and lets each array element

// hold 3 "high" resolution color components. I did this because I want to

// scale (white balance?) the colors after an expensive render into the 8-bit

// per color rgb range without losing precision. during the render, depending

// on light brightness and various angles and distances, the maximum color values

// can be smaller or larger than expected.

packcolor = Function( {r, g, b}, //

Floor( Min( cscaleMax, r ) * (cscale / cscaleMax - 1) ) + //

Floor( Min( cscaleMax, g ) * (cscale / cscaleMax - 1) ) * cscale + //

Floor( Min( cscaleMax, b ) * (cscale / cscaleMax - 1) ) * cscale * cscale//

), //

// https://iquilezles.org/articles/distfunctions/

vec2 = Function( {a, b}, a || b ), //

vec3 = Function( {a, b, c},

a || b || c

), //

vlength = Function( {v},

Sqrt( Sum( v ^ 2 ) )

), //

normalize = Function( {v},

v / vlength( v )

), //

clamp = Function( {v, small, large},

If(

v <= small, small,

v <= large, v,

large

)

),

sdPlane = Function( {vec3_p, vec3_n, h},

// vec3_n is the normalized normal vector of the plane

// h is the distance from the origin to the plane

dotProduct( vec3_p, vec3_n ) + h

),

sdSphere = Function( {vec3_p, s},

vlength( vec3_p ) - s

),

sdTorus = Function( {vec3_p, vec2_t},

{q1, q2}, // the Y axis goes through the hole, missing the donut. vec2_2 is [big radius (2" for a 4" donut), small radius (.5" for a 1" thick donut)]

//show(vec3_p, vec2_t);

q1 = vlength( vec3_p[[1 3]] ) - vec2_t[1]; // distance from 000 minus ring center radius is distance from ring center in y==0 plane

q2 = vec2( q1, vec3_p[2] ); // lift out of y=0 plane, vlength(q2) is 3D distance to center of tube

vlength( q2 ) - vec2_t[2]; // ... minus tube radius is distance to surface

),

//sdTorus([2.12132,0,2.12132],[2,1]);

// I really wanted these soft shadows. They are speed-slayers.

softShadow=function({ro,rd,mint,maxt,w},{res,t,i,h},// "nurof3n" https://iquilezles.org/articles/rmshadows/

res = 1.0;

t = mint;

ro=ro[1]||ro[2]||ro[3];

for( i=0, i<256 & t<maxt, i+=1,

h = map(ro + t*rd);

res = min( res, h/(w*t) );

t += clamp(h, 0.005, 0.50);

if( res<-1.0 | t>maxt,i=1e99/*break*/ );

);

res = max(res,-1.0);

0.25*(1.0+res)*(1.0+res)*(2.0-res);

),

// https://github.com/scratchapixel/scratchapixel-code/blob/main/perspective-and-orthographic-projection-matrix/geometry.h

dotProduct = Function( {a, b}, // v2 or v3 x * v.x + y * v.y + z * v.z

Sum( a :* b ) // scalar: how much the vecs a and b point in the same direction

),

crossProduct = Function( {a, b}, // v3 only y * v.z - z * v.y, z * v.x - x * v.z, x * v.y - y * v.x

a[2] * b[3] - a[3] * b[2] || a[3] * b[1] - a[1] * b[3] || a[1] * b[2] - a[2] * b[1]; // vector: perpendicular to both a and b

),

// https://www.shadertoy.com/view/4dSBz3 and https://iquilezles.org/articles/normalsSDF/

// Calculate the normal by taking the central differences on the distance field.

// for spheres there is a simpler way, probably torus too. And plane!

// I'm keeping the code simple for now.

// https://iquilezles.org/articles/normalsSDF/ - tetrahedron technique

calcNormal = Function( {p},

p = p[1] || p[2] || p[3];

normalize( // this is a tetrahedron around p

[1 -1 -1] * map( p + [.001 -.001 -.001] ) + //

[-1 -1 1] * map( p + [-.001 -.001 .001] ) + //

[-1 1 -1] * map( p + [-.001 .001 -.001] ) + //

[1. 1 1.] * map( p + [.0010 .001 .0010] ) //

); //

), //

mapFound = ., // global to remember what object the march ran into.

// the map function tests p against every object in scene and returns the closest.

map = Function( {p},

{i, result, tresult, pt},

p = p[1] |/ p[2] |/ p[3] |/ 1;

result = 1e99;

mapFound = .;

For( i = 1, i <= N Items( scene ), i += 1,

pt = scene[i][sceneDistanceMatrix] * p; // the torus rotate on X happens before the repeat operation, so the Z axis is Y.

pt = pt[1] || pt[2] || pt[3];

tresult = Eval( scene[i][sceneDistanceExpr] );

If( tresult < result,

result = tresult;

mapFound = i; // remember. Not needed on majority of calls.

);

);

result;

), //

//

// the scene list. the sceneDistanceExpr expr() should only use

// functions that are in the parallel assign locals!

//

// first some indexes that explain how to interpret the scene list

sceneDistanceName = 1, //

sceneDistanceExpr = 2, //

sceneDistanceMaterial = 3, //

/**/sceneDistanceMaterial_pattern = 1, //

/**/sceneDistanceMaterial_color = 2, //

sceneDistanceMatrix = 4, //

lightPosition=lightPosition, //

lightPower=lightPower, //

// no comma at end...

scene=scene //

}, // locals for each thread

//

// finally, the JSL that assigns elements of the array. Parallel Assign has

// already chosen an element and supplies a scaled index; the .68 scale was

// empirically chosen to look good. we cannot access the bitmap we are assigning

// into from here, not even the old value about to be overwritten (a bug/flaw

// in the implementation...we could use a copy of course).

//

bitmap[yImage( -.68, .68 ), xImage( -.68, .68 )] = (//

// here begins pretty standard ray marching code; better to look up excellent explanations on the web.

ro = 0 || 0 || 1 ; // ray origin at 0,0 this eye is 1 unit in front of the image plane at z=0

rd = (xImage || yImage || 0) - ro ; // ray direction, image plane is one z unit from the eye_origin

rd = normalize( rd ) ; //normalize to length 1

t = 1 ; resultColor = -1 ; // background if nothing hit

For( i = 0, i < 1024 & t < 1024, i += 1, // <512 is iteration limit, <200 is depth limit for nothing in background

p = ro + rd * t;

pi = invCamera * (p || 1)`;

h = map( pi );

t += h;

If( h < .001,

object = mapFound; // capture before calcNormal reuses map()

light = lightPosition;

if(0,// 1: light attached to camera (needs work)

light = invCamera * (light || 1)`; // light relative to camera

light = light[[1 2 3]]`;

);

// https://www.shadertoy.com/view/4dSBz3 ...

// Calculate diffuse lighting by taking the dot product of

// the light direction (light-p) and the normalVec.

lightVec = normalize( light - pi[[1 2 3]]` /*p*/ );

normalVec = calcNormal( pi[[1 2 3]]` );

dif = clamp( dotProduct( normalVec, lightVec ), 0., 1. );

dif *= softShadow(pi,lightVec, .02/*min t*/, 30/*max t*/, .1/*width of light*/); // <<<< leave this out, get no shadows and a big speedup

// Multiply by light intensity and divide by the square of the distance to the light.

// a post processing step will tweak the lighting to get at least one bright pixel in some color.

// if there is blow-out (light too bright) or banding (light too dark), adjust 1e0 up or down

dif *= lightPower / dotProduct( light - p, light - p ); // 1e-5 ... 1e5

dif += ambient; // add a little ambient. For an early test case, .0001 kept shadows from going completely black.

// https://iquilezles.org/articles/checkerfiltering/ not using his algorithm, rolled my own...

If( scene[object][sceneDistanceMaterial][sceneDistanceMaterial_pattern] == "checker",

// t is the distance to this object with the checker pattern.

// as t gets bigger, the pattern should be less visible

// amplitude is the product of the x and y axis sin waves and forms the tiles; *5

// makes the tile size (bigger makes smaller tiles.) Even raised to ^.4 power there

// is some slope at the transition between tiles. bigdiv is a number between 1 and 2

// with the slope for the transition; smalldiv is always 1. divisor is interpolated

// between smalldiv and bigdiv; t is the distance and chooses smalldiv when the floor is

// far away, bigdiv up close. Smalldiv picks the middle value between the light and dark

// tile color. Bigdiv makes dark darker and light lighter. Far away tiles are the average color,

// and near the tile transitions are the average color and the result is somewhat anti-aliased.

amplitude = sin(pi[1]*5) * sin(pi[3]*5); // 1,3 is the x-z plane the floor lies in <<< unfortunate, we have to know this is the floor.

bigdiv = 1+abs(amplitude)^.4; // empirically, I like .4 better than .3 or .5

smalldiv=1;

// empirical: the useful z-distance before aliasing happens. Formula from fit model.

// 32x32 13

// 128x128 26

// 512x512 52

//zdistance = (-42.25) + 9.75 * log(xypix,2) + 1.625 * (log(xypix,2) - 7) * (log(xypix,2) - 7);

divisor = interpolate(t, -1e99,bigdiv, 3,bigdiv, zdistance,smalldiv, 1e99,smalldiv);

if(amplitude > 0,

dif /= divisor; //

, //

dif *= divisor; //

)

);

resultColor = Max( dif, 0 );

resultColor = resultColor ^ 0.4545; // Gamma correction, not sure where it comes from but several sources use .4545

i = 1e99; /* break */

);

);

If(

resultColor < 0, //

If( resultColor != -1,

Show( resultColor )

); // background, most likely

RGB Color( .4, .5, .6 );// a JSL color is negative

, /*else if */ Is Missing( resultColor ), //

RGB Color( .8, .1, .8 )// error color, negative jsl color

, // else a positive packed color, up to 3 bits of int and 14 bits fraction

{red, green, blue} = Color To RGB( scene[object][sceneDistanceMaterial][sceneDistanceMaterial_color] );

packcolor( red * resultColor, green * resultColor, blue * resultColor ) //

;

) ; //

); // bitmap= expr

);//ParallelAssign

// there are two kinds of values in the bitmap array: positive packed values and negative JSL colors

// leave the negative values alone and don't use them for scaling. Use the biggest value of all the

// packed value to scale up/down to make the biggest 255 on the 8-bit rgb scale. this might be

// brighter than intended.

poscols = Loc( bitmap > 0 );

rmax = gmax = bmax = 0;

// scale for briteness...find the max which might be as small as 1/32 or as large as 8

// negative values are jsl colors already and their intensity is not adjusted

For Each( {i}, poscols,

{red, green, blue} = unpackcolor( bitmap[i] );

rmax = Max( red, rmax );

gmax = Max( green, gmax );

bmax = Max( blue, bmax );

);

maxcol = Max( rmax, gmax, bmax );

Show( maxcol );

// all the frames should use the same adjustment

if(0.140<maxcol<.203, maxcol=.203,show("range check"));

For Each( {i}, poscols,

{red, green, blue} = unpackcolor( bitmap[i] );

bitmap[i] = RGB Color( red / maxcol, green / maxcol, blue / maxcol );

);

addNewFrame("p"||char(1e9+nframes*time)); // save a numbered .png

Show( time,As Date( Today() ) );wait(.01);

);// loop over time

saveFrames(8000/nframes,99);// ms. 8000ms for the full loop.

Show( (HP Time() - start) / 1e6 );

| GIF - 12MB | WebP - 10MB |

|

|

The ancient GIF image compression produced a 20% larger file with severe color banding. The WebP looks great. The torus has one SDF, repeated at very low cost. The colorful spheres each have a separate SDF, and the tiled floor has an SDF. The floor tiles fade into the distance to avoid twinkling alias artifacts. The soft shadows combine two shadowing ideas. The lighting is fixed to the model, and the light and the model are rotating in front of the camera. The spheres are bouncing without ever quite touching down to the floor. The camera angle changes to show the sky during part of the rotation. The GIF was produced by JMP's GIF writer, not PIL's GIF writer. There are a lot of ways to optimize a GIF; JMP and PIL will make different choices for different images.

I'm not planning to do anything else with the ray marching code because it is way too slow without a compiled program running on GPUs. If you really needed it, you might get a 10X improvement by leaving out the shadows, simplifying the floor, and calculating the surface normals for simple primitives directly (without using four additional marches.) You'd still be looking at minutes/frame, not frames/second.

https://www.shadertoy.com/view/4dSBz3 - simple example

https://www.shadertoy.com/view/4dSfRc - tutorial

https://iquilezles.org/articles/rmshadows/ - soft shadows

https://iquilezles.org/articles/checkerfiltering/ - checkerboard

https://iquilezles.org/articles/sdfrepetition/ - repetition

https://www.opengl-tutorial.org/beginners-tutorials/tutorial-3-matrices/ - matrix rotation, etc

https://iquilezles.org/articles/distfunctions/ - list of distance functions

https://www.shadertoy.com/view/Xds3zN - display of distance functions

https://www.shadertoy.com/view/WtGfRV - SIGGRAPH RayMarching tutorial

- © 2025 JMP Statistical Discovery LLC. All Rights Reserved.

- Terms of Use

- Privacy Statement

- Contact Us

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.