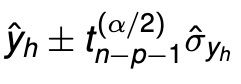

I was looking for more details on how prediction intervals are implemented in the JMP Fit Model Profiler when you use the Loglinear Variance personality in the Fit Model platform. Could you provide more insight than what I can find in the help or on the internet? For instance, does it use the design matrix in its computation, like this:

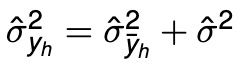

with

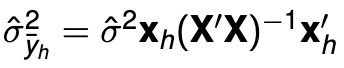

Here (and this is the part I am missing from the documentation), $\hat{\sigma}^2$ is the square of the model RMSE (for loglinear variance, I guess this would be the square of the predicted RMSE at one design condition) and $x_h$ is a new design condition. I hope this is the case, because it would be an amazingly powerful way to do exactly this.
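For reference, the textbook ordinary least squares prediction interval for a single new observation at $\mathbf{x}_h$, with $n$ runs, $p$ model terms and $\hat{\sigma}$ the RMSE, is the first line below. The second line is only a hedged guess at a loglinear variance analogue, not confirmed JMP behavior: it assumes the mean model is fit by weighted least squares with weights $1/\hat{\sigma}_i^2$, that $\hat{\sigma}^2(\mathbf{x}_h)$ is the variance predicted by the variance model at $\mathbf{x}_h$, and it leaves the appropriate degrees of freedom $\nu$ open.

$$
\hat{y}_h \pm t_{1-\alpha/2,\,n-p}\;\hat{\sigma}\sqrt{1 + \mathbf{x}_h^\top(\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{x}_h}
$$

$$
\hat{y}_h \pm t_{1-\alpha/2,\,\nu}\sqrt{\hat{\sigma}^2(\mathbf{x}_h) + \mathbf{x}_h^\top(\mathbf{X}^\top\mathbf{W}\mathbf{X})^{-1}\mathbf{x}_h},
\qquad \mathbf{W} = \operatorname{diag}\!\left(1/\hat{\sigma}_1^2,\ldots,1/\hat{\sigma}_n^2\right)
$$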

Additionally, my point is that prediction intervals are what we need. I suggested in the past (a long, long time ago) that making prediction intervals generally available in the Prediction Profiler would be the ultimate addition to this powerful tool. The default multiple regression personality (Standard Least Squares) is the one most users rely on, and it is unfortunate that prediction intervals are not available there!

It would also be easy to use such a Student t distribution in the Simulator, instead of a Normal distribution that accounts for neither the design matrix nor the degrees of freedom (it even exists in multi-response form as the multivariate Student t distribution)! This matters because most people start with a DoE and then, logically, end up with models that have few residual degrees of freedom, so the Normal approximation does not hold well.
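To make the difference concrete, here is a minimal sketch (plain NumPy/SciPy, not JMP's code) that computes the textbook OLS prediction interval, which uses the design matrix through the leverage $x_h^\top(X^\top X)^{-1}x_h$ and a t quantile with $n-p$ degrees of freedom, next to a Normal interval that ignores both. The function name `compare_intervals` and the toy 2x2 factorial with two center points are hypothetical, chosen only to show a typical low-df DoE model.

```python
import numpy as np
from scipy import stats


def compare_intervals(X, y, x_new, alpha=0.05):
    """Textbook OLS prediction interval vs. a naive Normal interval at x_new."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_new = np.asarray(x_new, dtype=float)
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    df = n - p                                   # residual degrees of freedom
    sigma2 = resid @ resid / df                  # MSE = RMSE**2
    leverage = float(x_new @ np.linalg.solve(X.T @ X, x_new))  # x_h' (X'X)^-1 x_h
    y_hat = float(x_new @ beta)

    # Prediction interval: t quantile and leverage-inflated standard error
    se_pred = np.sqrt(sigma2 * (1.0 + leverage))
    t_crit = stats.t.ppf(1.0 - alpha / 2.0, df)
    t_interval = (y_hat - t_crit * se_pred, y_hat + t_crit * se_pred)

    # Naive interval: Normal quantile and RMSE only
    z_crit = stats.norm.ppf(1.0 - alpha / 2.0)
    rmse = np.sqrt(sigma2)
    normal_interval = (y_hat - z_crit * rmse, y_hat + z_crit * rmse)

    return {"y_hat": y_hat, "leverage": leverage, "df": df,
            "t_interval": t_interval, "normal_interval": normal_interval}


# Hypothetical 2x2 factorial plus two center points (toy numbers, not real data)
A = np.array([-1, 1, -1, 1, 0, 0], dtype=float)
B = np.array([-1, -1, 1, 1, 0, 0], dtype=float)
X = np.column_stack([np.ones_like(A), A, B, A * B])   # intercept, A, B, A*B
y = np.array([10.2, 12.1, 11.0, 14.3, 11.8, 12.0])

res = compare_intervals(X, y, x_new=[1.0, 0.5, 0.5, 0.25])
print(res["df"], round(res["leverage"], 4))  # → 2 0.3073
print(res["t_interval"], res["normal_interval"])
```

With only 2 residual degrees of freedom, $t_{0.975,2} \approx 4.30$ versus $z_{0.975} \approx 1.96$, so the prediction interval here is roughly 2.5 times wider than the Normal one.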

For information, many people cannot really use JMP for their design space computations (in a QbD environment) because the Simulator relies on that Normal distribution to estimate the probabilities of defects. We usually have to go back to R or SAS to do it, which is an unfortunate and huge loss of time.
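As a rough illustration of why this matters for defect probabilities, the sketch below compares the probability that a new response exceeds an upper spec limit at one design point, computed from the Student t predictive distribution and from a Normal distribution with the RMSE alone. It is a single-response simplification with no factor noise, not how the JMP Simulator works; the function names and the numbers (predicted value, RMSE, leverage, degrees of freedom, USL) are hypothetical.

```python
import numpy as np
from scipy import stats


def defect_probability(y_hat, rmse, leverage, df, usl):
    """P(new response > USL) at one design point, computed two ways.

    t-based: y_hat + rmse * sqrt(1 + leverage) * T_df (prediction-interval logic).
    normal:  y_hat + rmse * Z (ignores leverage and degrees of freedom).
    """
    scale_t = rmse * np.sqrt(1.0 + leverage)
    p_t = stats.t.sf((usl - y_hat) / scale_t, df)
    p_normal = stats.norm.sf((usl - y_hat) / rmse)
    return float(p_t), float(p_normal)


def simulate_defect_rate(y_hat, rmse, leverage, df, usl, n_draws=200_000, seed=0):
    """Monte Carlo version: draw new responses from the t predictive distribution."""
    rng = np.random.default_rng(seed)
    draws = y_hat + rmse * np.sqrt(1.0 + leverage) * rng.standard_t(df, size=n_draws)
    return float(np.mean(draws > usl))


# Hypothetical numbers for illustration only
p_t, p_normal = defect_probability(y_hat=12.0, rmse=0.5, leverage=0.3, df=2, usl=13.5)
p_sim = simulate_defect_rate(12.0, 0.5, 0.3, 2, 13.5)
print(f"t: {p_t:.4f}  normal: {p_normal:.5f}  simulated t: {p_sim:.4f}")
```

With these toy inputs the t-based defect probability is about 6%, while the Normal version is about 0.1%, which is the kind of gap that makes a Normal-only simulator risky for low-df DoE models. For several correlated responses, `scipy.stats.multivariate_t` could play the same role.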