Discussions

JMP User Community: solve problems, and share tips and tricks with other JMP users.

Undersampling, Oversampling, and weighing

How can I prepare my data so that it reflects only the undersampling technique, without any modeling? I need to do the same for oversampling and weighting. I am trying to run XGBoost with these three sampling techniques (one at a time, of course), so I need one data set that shows undersampling, a different data set for oversampling, and a third data set for weighting. Thank you.

Tags: windows

Accepted Solutions


Re: Undersampling, Oversampling, and weighing

Hi @NishaKumar ,

There are probably a couple of ways you can do this, and which method is better might depend on what your end goal is.

One option would be to create a validation column that is heavily skewed toward one set, say 10% training and 90% validation. Then make a subset of the training data and do your fit on that. Save the fit formula to the subset table, bring it back to the original data table, and see how well the fit works on the untrained data. You can then do the reverse by treating the validation data as the "training" data, repeating the steps above, and seeing how well it fits the training ("validation") data set. You might choose this option if you want to stratify your validation column based on the output you're modeling. If you don't care about that, you can try the other option.
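For anyone who wants to see the logic of a skewed, stratified validation column outside of JMP, here is a minimal Python sketch. It is not JMP's own algorithm: the function name, the choice of four equal-sized strata, and the stand-in response values are all assumptions made for illustration. It sorts rows by the response, cuts them into strata, and flags roughly 10% of each stratum as training (1) and the rest as validation (0).

```python
import random

def stratified_validation_column(responses, train_fraction=0.10, n_bins=4, seed=1):
    """Return one flag per row: 1 = training, 0 = validation.

    Hypothetical helper: rows are ordered by response, cut into n_bins
    roughly equal strata, and train_fraction of each stratum is flagged
    as training so every response range is represented.
    """
    if not responses:
        return []
    rng = random.Random(seed)
    order = sorted(range(len(responses)), key=lambda i: responses[i])
    flags = [0] * len(responses)
    bin_size = -(-len(order) // n_bins)  # ceiling division
    for start in range(0, len(order), bin_size):
        stratum = order[start:start + bin_size]
        n_train = max(1, round(train_fraction * len(stratum)))
        for i in rng.sample(stratum, n_train):
            flags[i] = 1
    return flags

# Stand-in response values (assumption); replace with the real response column.
responses = [float(v) for v in range(200)]
flags = stratified_validation_column(responses)
train_rows = [i for i, f in enumerate(flags) if f == 1]
```

Swapping the meaning of the flags (fit on the 0 rows, check on the 1 rows) gives the reverse case described above.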

Another option is to use Rows > Row Selection > Select Randomly with either 10% or 90% (or even 1% and 99%), make a subset of each selection, do the fits, and bring the formulas back in to compare how they do with the unused data from the original table.
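The random-selection idea can be sketched in a few lines of Python. This only mimics the row bookkeeping, not the JMP menu command itself; the function name, the seed, and the 500-row table size are assumptions for illustration. Each call returns the randomly selected rows (the subset to fit on) and the unused rows (the ones to check the saved formula against).

```python
import random

def random_split(n_rows, fraction, seed=1):
    """Randomly select round(fraction * n_rows) row indices.

    Hypothetical helper: returns (selected_rows, unused_rows), both sorted.
    """
    rng = random.Random(seed)
    n_selected = round(fraction * n_rows)
    selected = set(rng.sample(range(n_rows), n_selected))
    unused = [i for i in range(n_rows) if i not in selected]
    return sorted(selected), unused

# A 500-row table is assumed here purely for illustration.
small_train, small_holdout = random_split(500, 0.10)  # fit on 10%, check on 90%
large_train, large_holdout = random_split(500, 0.90)  # fit on 90%, check on 10%
```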

As for weighting the data, that depends heavily on the data set you're using and how the data should be treated. If you have what look like outliers but no reason to remove them from the data set, you can weight them less than the other data so they don't bias the fit. You would need to look at those rows individually to decide how to deal with such cases.
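To make the effect of down-weighting concrete, here is a small Python sketch. The values, the flagged row, and the reduced weight of 0.25 are all made up for illustration, and the helper names are hypothetical. It builds a weight column and compares a plain mean with a weighted mean, which is the simplest case of a weight reducing how hard a suspect row pulls on an estimate.

```python
def build_weights(n_rows, flagged_rows, reduced_weight=0.25):
    """Weight 1.0 for ordinary rows, reduced_weight for flagged rows."""
    weights = [1.0] * n_rows
    for i in flagged_rows:
        weights[i] = reduced_weight
    return weights

def weighted_mean(values, weights):
    """Weighted average: sum(w * v) / sum(w)."""
    return sum(w * v for v, w in zip(values, weights)) / sum(weights)

# Made-up values; the last row plays the role of a suspected outlier.
values = [10.0, 11.0, 9.0, 10.0, 50.0]
weights = build_weights(len(values), flagged_rows=[4])

plain = sum(values) / len(values)
weighted = weighted_mean(values, weights)  # pulled less toward the outlier
```

Which rows to flag, and how far to reduce their weight, still has to be decided case by case.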

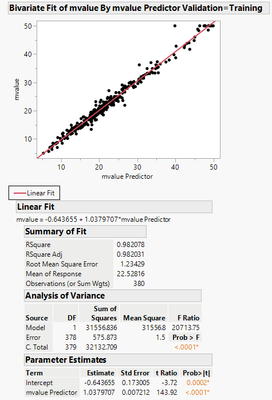

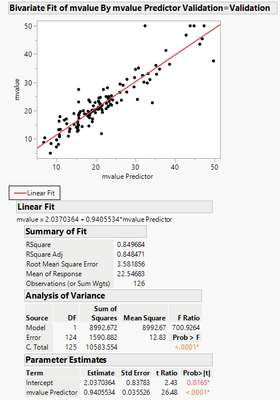

I tried the second approach with the Boston Housing.jmp sample data and it turned out pretty well. The oversampling data set did not quite overfit the data, and its R^2 on the "validation" data was larger than what I got by using a validation column and fitting the data with that.

By the way, I fit the data using the XGBoost platform (response = mvalue; all other columns as X, Factors) and used the default settings with the Autotune box checked.

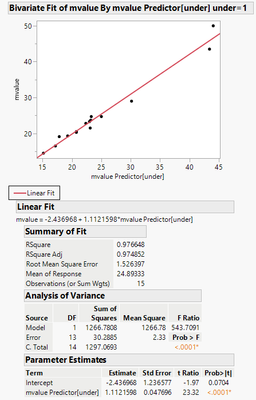

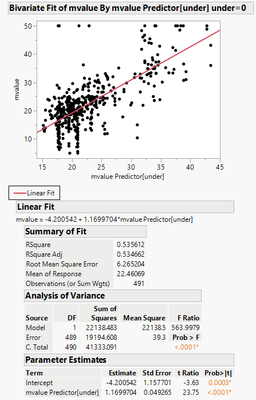

Undersampling results (1 = training, 0 = validation):

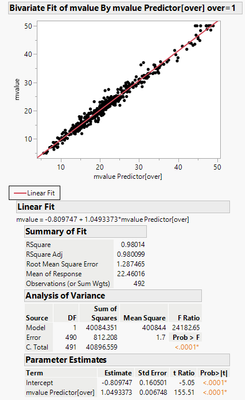

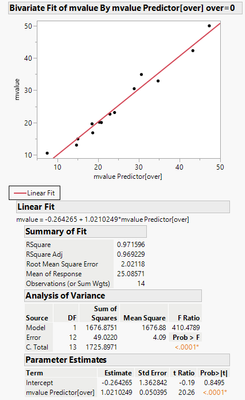

Oversampling results (1 = training, 0 = validation):

Here are the results using a 0.75/0.25 training/validation column that was stratified on mvalue:

The R^2 on the validation data in this last case is not as high as for the oversampling model, but it's likely a more accurate estimate, since more rows were used to validate the model than when nearly all of the rows are taken for training.
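For comparing fits on the unused rows, R^2 can be recomputed by hand from the actual and predicted values. The sketch below assumes the usual definition, R^2 = 1 - SS_res / SS_tot, which may not match every detail of what a given platform reports. The numbers are made up and are not results from the fits above.

```python
def r_squared(actual, predicted):
    """R^2 = 1 - (residual sum of squares) / (total sum of squares)."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot

# Made-up holdout values and predictions, for illustration only.
actual = [24.0, 21.6, 34.7, 33.4, 36.2]
predicted = [25.1, 22.0, 33.0, 32.5, 35.0]
holdout_r2 = r_squared(actual, predicted)
```

With only a handful of holdout rows this number can swing a lot from one random selection to the next, which is the point made above about the size of the validation set.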

Anyway, I hope this gives you some ideas on how to move forward with your specific goal.

Good luck!

DS


Re: Undersampling, Oversampling, and weighing

Thank you so much, I will try out these techniques one by one and provide feedback/questions.
