Discussions

Solve problems, and share tips and tricks with other JMP users. - JMP User Community

Stability prediction headache

Hello,

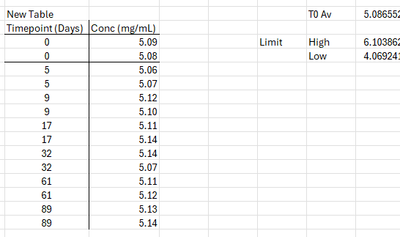

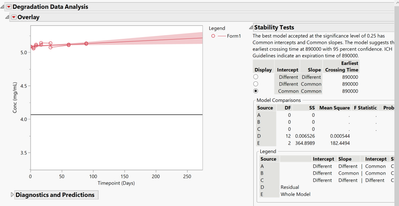

I have an analysis problem I'd like some advice on. Below is a table of some real stability data, from which I'm trying to extract a reasonable stability prediction.

I usually use the degradation profiler for this, and have done so up until now without any issue for multiple other projects, but this dataset is causing me a headache. I believe it's because we had an anomalously low T0, which skewed the trend and made the predictions... optimistic.

Table Linked - with the associated acceptance limits we have.

Notes:

1-This is a real-world example, so it's a fully understood system.

2-We know therefore, that it is impossible for this material to gain activity. i.e. the upward trend is due to assay noise.

3-We also know from previous data and experiments that this material degrades in a roughly linear fashion, and expiries (i.e. when the confidence limits cross the lower limit) in the region of 730-1095 days are not unusual.

My question therefore is how to analyse this. I'd be comfortable using something like a wider confidence interval and expanding that out as the prediction but wouldn't know how to do that.

The next data collection point is in 3 months, so while I'm comfortable that the 'real' trend will emerge over time and the early assay noise will be averaged out, I need to make some sort of realistic prediction now. Any suggestions/guidance (apart from telling off the scientist who did the T0 :) ) would be great!

Jon


Re: Stability prediction headache

Hi @Jon_Armer : A few observations/thoughts/questions...in no particular order.

1. Can evaporation be at play here? I see your response units are mg/mL. Could evaporation make it appear that the trend is increasing?

2. T5 and the 2nd rep of T32 are low as well...

3. Why do you "need to make some sort of realistic prediction now"? Are you (1) trying to get an early read on this batch relative to spec, or (2) trying to get an accurate prediction? If (1), you may be able to simply say that, based on these data, there is no evidence that the spec is in danger. If (2)...well, given your assumptions (from your "Notes"), that is a different animal.

4. Could it be that there is something different about this batch that makes it behave differently from "previous data"?

Re: Stability prediction headache

Hi @MRB3855

Good questions:

1-no, they're sealed to prevent this very thing

2-Agreed. With biological systems it's not uncommon to see a degree of variation in longitudinal studies, especially given the assays etc., but I'd agree the variation is wide.

3-Shelf life prediction for this batch. Need to make an assessment on it and put a 'line in the sand' so we can fill out our yearly schedule.

4-Yes potentially- it's a complex system - so there's scope for variation there that would be hard to capture/identify.

Re: Stability prediction headache

Hi @Jon_Armer : Hmm. You say your next pull point is at 3 months. What is your target (registered?) shelf-life?

Re: Stability prediction headache

Ah, slight correction (apologies if I misled): the next timepoint is after an additional 3 months, which will actually be the 6 month timepoint.

Previous batch had 18 months predicted stability off 8 months data (confirmed at 12 months).

Re: Stability prediction headache

Hi @Jon_Armer : Headache indeed. Again, in no particular order:

1. If your next pull point is at 6 months, then that point will have a disproportionately large influence on the estimated linear regression parameters (slope and intercept), since it is so much farther away from the bulk of the data. So, even if it is lower at 6 months (as you expect), the regression model may have prediction problems; e.g., much of the variability in the data will be due to lack-of-fit, not random error.

2. Is there some science/data that suggests it stays flat for some time, then starts to drop sometime after 3 months? If so, that can be modeled.

3. Any chance to pull before the 6 month time point?

4. These data could be "fit for purpose"; there is no evidence of a trend. So, given the data (mean = 5.11, SD = 0.028), a 95% lower prediction interval bound for one rep is 5.055 (using the Distribution platform). That gives you a margin of 5.055 - spec = 5.055 - 4.1 = 0.955 mg/mL. So, it would have to drop nearly 1 mg/mL over the shelf-life to be at risk. Is this kind of drop consistent with other batches?

5. Setting shelf-life usually involves several batches being put up on stability (as you probably know), and the shelf-life is then set via the worst performing batch. Maybe this is just part of "normal" batch to batch variability; what kind of batch history do you have? i.e., how many batches have you put on stability and how many of those have shown the expected trend?

6. Based on these data and this discussion...I think you may need to revisit your assumptions about what is possible wrt how a given batch could behave. Of course, I could be wrong!

7. There is a lot to think about here; dunno why they call it "simple" linear regression ;) .
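For anyone wanting to check the arithmetic in point 4 outside JMP, here is a minimal Python sketch of a one-sided lower prediction bound for a single future rep, assuming roughly normal data with no time trend. The mean, SD, and spec limit are the values quoted above. The sample size is not stated in the thread, so `n = 9` and the matching t critical value are assumptions chosen purely for illustration; substitute the real values from the data table.

```python
import math

def lower_prediction_bound(mean, sd, n, t_crit):
    """One-sided lower prediction bound for one future observation,
    assuming approximately normal data and no trend over time."""
    return mean - t_crit * sd * math.sqrt(1.0 + 1.0 / n)

# Summary statistics and spec limit quoted in the post above.
mean, sd = 5.11, 0.028
spec_lower = 4.1

# ASSUMPTIONS (not stated in the thread): n = 9 observations and a
# one-sided 95% bound, so t_crit is the 0.95 quantile of Student's t
# with n - 1 = 8 degrees of freedom (about 1.860).
n, t_crit = 9, 1.860

bound = lower_prediction_bound(mean, sd, n, t_crit)
margin = bound - spec_lower
print(f"lower bound = {bound:.3f} mg/mL, margin to spec = {margin:.3f} mg/mL")
```

Under those assumptions the bound comes out at about 5.055 mg/mL and the margin at about 0.955 mg/mL (5.055 - 4.1). A different n or confidence level would shift the bound slightly.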

Re: Stability prediction headache

I often think the term 'simple' is thrown around in these contexts as a way to not frighten timid PhD students from engaging in statistics...!

Ok- so

1.Correct. This is a pretty typical approach used in industry; the first timepoints are close-together, and then they get increasingly spread out as you go along.

Now, often a scoping study would be done to identify any inflection point in the trends, and then the study time points would be designed so as to increase the data density around that point. Sometimes though, especially at the start of a new project or development cycle, you don't have that, so you're flying blind a bit (and tend to fall back on 'tried and tested' approaches).

We are unfortunately 'stuck' with the spread here, at least for this batch.

2.Previous batches had a generally linear drop, with a trend that didn’t really become apparent until later on.

3.Yes, that's an option- though doesn't solve my immediate problem (of needing to assign a preliminary expiry date for this batch)

4-This is the way I'm leaning I think. That sort of drop would be consistent with previous batches (it's usually pretty linear), just takes time.

5-yup again, though I only have 1 other batch in this 'set'- the trend was similar but 'ever so slightly down', allowing me to estimate a lower-level crossing.

6-We're agreeing a lot here- which is always nice (on the internet of all places!). What I think we're seeing here is assay variation superimposed on the (relatively) stable material. The variation-gods aligned with the previous batch to present me with a slightly negative trend which allowed a relatively straightforward stability prediction (with the obvious usual caveats). This time, I've somehow angered the variability gods and no such trend is offered, leading to my headache.

The noise in these data is wider than the previous batch too.

So- this is where my head is currently:

I have limited additional data to draw on here. The previous (and only other) batch gave a slightly negative trend, allowing me to assign an 18-month initial stability at this (t3m) point. I'm obviously aware of the perils of over-extrapolation here, but practical considerations must sometimes intrude.

The spread in this batch doesn't allow me to do that. So, the only option I currently see that would be defensible would be to revert to the previous batch's stability, on the basis that this new batch has not, as yet, offered me any indication that it is worse. As such, using the previous batch's stability could be argued to be worst case, and therefore safe/defensible.

I then monitor and update as appropriate as new data comes in. Unless there's some clever way to use the added variability in this data to widen the Confidence intervals (and thus 'force' the prediction to cross the lower level)

Thoughts?

Re: Stability prediction headache

Hi @Jon_Armer : I think your thinking makes sense here; based on these data, there is no evidence that the spec is in danger or that it is worse than the previous batch...and continue to monitor.

And, if it is true that previous batch(es) had a generally linear drop, with a trend that didn’t really become apparent until later on...then I'd be inclined, if possible, to pull more between now and the next scheduled pull point so that the "knot" (the time that the trend starts down) can be estimated. Then you could model the entire "curve" via introducing another term in your model:

max(0, timepoint - t)

Where t is the timepoint where the trend down starts. So, you'd have Y = b0 + b1*Timepoint + b2*max(0, Timepoint - t). The initial slope is b1, the second slope is b1+b2. You can use the Nonlinear platform to estimate t (and b0, b1, and b2), or you can eyeball t and use Fit Model to estimate b0, b1, and b2. Strictly speaking, the Nonlinear platform is the right thing to do...though eyeballing it can be fit for purpose.
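To make the knot model concrete, here is a rough pure-Python sketch of the "fix t, then fit b0, b1, b2 by least squares" route, with a crude grid search over candidate knots standing in for the Nonlinear platform. The helper names, the time points, and the response values are all made up for illustration (noise-free synthetic data, flat until day 90 and then falling); none of it comes from the batch discussed here.

```python
def solve(a, b):
    """Solve a small linear system a x = b by Gauss-Jordan elimination
    with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_fixed_knot(times, ys, knot):
    """Least-squares fit of Y = b0 + b1*T + b2*max(0, T - knot)
    for a fixed knot; returns ([b0, b1, b2], sum of squared errors)."""
    rows = [[1.0, t, max(0.0, t - knot)] for t in times]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    coef = solve(xtx, xty)
    sse = sum((y - sum(c * x for c, x in zip(coef, r))) ** 2
              for r, y in zip(rows, ys))
    return coef, sse

def fit_knot_by_grid(times, ys, candidates):
    """Try each candidate knot and keep the one with the smallest SSE."""
    best = None
    for k in candidates:
        coef, sse = fit_fixed_knot(times, ys, k)
        if best is None or sse < best[2]:
            best = (k, coef, sse)
    return best

# HYPOTHETICAL data: time in days, response flat at 5.1 mg/mL until
# day 90, then dropping by 0.002 mg/mL per day.
times = [0, 30, 60, 90, 120, 150, 180, 270, 360]
ys = [5.1 - 0.002 * max(0, t - 90) for t in times]

knot, (b0, b1, b2), sse = fit_knot_by_grid(times, ys, [30, 60, 90, 120, 150])
print(f"knot = {knot}, initial slope = {b1:.5f}, second slope = {b1 + b2:.5f}")
```

A grid search like this only picks the best of the candidates it is given and gives no standard error for t, which is why a proper nonlinear fit is the more rigorous choice. With real, noisy data and few points after the knot, the estimate of t can be very unstable.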

Re: Stability prediction headache

Great stuff, thanks for the discussion/advice!
