

RSM Model was inaccurate (Disagrees with Historical Data) - How to Fix & Where it went wrong

Hi Guys,

We have a process that is fairly well characterized at this point, but we are now de-risking potential process variability from the starting material. The goal is to maximize both purity and recovery for this unit operation.

Two parameters (B, C) are known to be significant factors: they have been modeled in previous DoEs and are well understood. A third parameter (A) is under investigation because, in theory, it could be impactful. A is the starting purity of the material, which can vary (and which we cannot fully control).

From happenstance experiments, I have shown successful purification over a wide range of A (~30-50% starting purity). I wanted to find the point of failure (the starting-material purity at which the target final purity can no longer be reached). I also wanted to fully characterize the design space around this so we can learn whether the optimal condition of B and C moves with a change in A.
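Once a model of final purity as a function of A (at fixed B and C) is trusted, the point of failure described above is where the predicted purity crosses the target. A minimal sketch using bisection, assuming the prediction increases monotonically with A over the search range; `predict` is a hypothetical stand-in for whatever fitted model you use:

```python
from typing import Callable


def failure_threshold(
    predict: Callable[[float], float],
    target: float,
    lo: float,
    hi: float,
    tol: float = 1e-6,
) -> float:
    """Return the lowest starting purity A in [lo, hi] at which the
    predicted final purity reaches `target`.

    Assumes predict(A) increases monotonically with A on [lo, hi].
    """
    if predict(lo) >= target:
        return lo  # target is met across the whole range
    if predict(hi) < target:
        raise ValueError("target is not reached anywhere in [lo, hi]")
    # Bisection: keep lo below the target and hi at or above it.
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if predict(mid) >= target:
            hi = mid
        else:
            lo = mid
    return hi
```

For example, with a hypothetical linear model `lambda a: 40 + a` and a 90% target, the threshold comes out at A = 50 (to within `tol`).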

I ran an RSM design that ranged B and C across their normally tested ranges and ranged A from about 5% to ~85% purity. The model did identify A as a significant parameter, but overall its predictions are shifted down from the historical data. For example, after reducing the model, it predicted that 30% starting material would be ~60% pure by the end of the operation, but we have multiple data points showing this is an underestimate and the result should be closer to 85%.

One issue occurred while running this study. The target A levels were 5%, 40%, and 85%. After running the experiment, I found that the intermediate level actually tested was closer to 55%, so this was an execution error. Could this have caused the issue?

Overall, the model fit was very good, with low RMSE. The low-purity conditions were definitely a stress test, and not something we would ever expect to see in production, but it seemed beneficial to explore a wide space for process understanding. My theories are that either the execution error caused the issue, or the range was too wide and the low-A condition, which repeatedly struggled to purify, pulled the model down.
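A low RMSE only says the model fits the runs used to build it; it cannot reveal an offset shared by those runs. Checking predictions against independent points (such as the historical runs) separates random scatter from systematic bias. A minimal plain-Python sketch; note that this `rmse` divides by n, unlike the degrees-of-freedom-adjusted RMSE shown in a regression report:

```python
from math import sqrt


def rmse(observed, predicted):
    """Root-mean-square of (observed - predicted), dividing by n."""
    errors = [o - p for o, p in zip(observed, predicted)]
    return sqrt(sum(e * e for e in errors) / len(errors))


def mean_bias(observed, predicted):
    """Average of (observed - predicted); far from 0 means a systematic offset."""
    errors = [o - p for o, p in zip(observed, predicted)]
    return sum(errors) / len(errors)
```

Passing the historical results as `observed` and the model's predictions at those conditions as `predicted`, a shift like the ~60% vs ~85% described above would show up as a large positive `mean_bias`.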

1.) How can I repair this DOE to improve the accuracy?

I can augment the runs and focus the dataset on the "MFG range" we would expect.

I could add more levels of A (rather than just 3) so that a third-order term in A can be evaluated.
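On the third-order idea: a polynomial of degree d in A needs at least d + 1 distinct levels of A, so three levels support at most a quadratic in A. A minimal NumPy sketch that checks this through the rank of the model matrix; A is coded to [-1, 1] first to keep the powers well scaled, and the levels in the example are illustrative:

```python
import numpy as np


def is_polynomial_estimable(a_values, degree):
    """True if a polynomial in A of the given degree is estimable from
    these A settings, i.e. the model matrix [1, A, A^2, ...] has full
    column rank."""
    a = np.asarray(a_values, dtype=float)
    lo, hi = a.min(), a.max()
    if hi > lo:
        coded = (2 * a - (hi + lo)) / (hi - lo)  # map [lo, hi] to [-1, 1]
    else:
        coded = np.zeros_like(a)  # A never varied
    X = np.vander(coded, N=degree + 1, increasing=True)  # columns 1, A, A^2, ...
    return bool(np.linalg.matrix_rank(X) == degree + 1)
```

`is_polynomial_estimable([5, 5, 55, 55, 85, 85], 3)` returns `False`, while a design with four distinct levels such as `[5, 30, 55, 85]` makes the cubic term estimable.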

2.) How can I avoid an inaccurate model in the future?

If I did not have historical data, I would not have questioned this dataset, because at face value it seems correct.


Re: RSM Model was inaccurate (Disagrees with Historical Data) - How to Fix & Where it went wrong

Thank you for the detailed description. What did the residual analysis show about the model in the recent RSM experiment?

Also, were any of the RSM runs in common with previous experiments or tests, and so might act as controls?


Re: RSM Model was inaccurate (Disagrees with Historical Data) - How to Fix & Where it went wrong

^ I'm sorry, my computer is being glitchy and I accidentally accepted your comment as a solution...

To answer your questions:

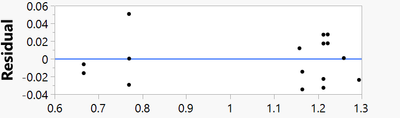

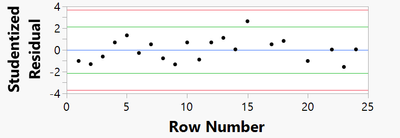

Here are the residual plots for parameter A:

Technically, none of the conditions were true controls. Previous DoEs were performed with ~45% starting material; the closest level in this RSM was 55%.


Re: RSM Model was inaccurate (Disagrees with Historical Data) - How to Fix & Where it went wrong

For clarification, "A" is not controllable by you? Is it a "raw material" from a supplier? If so, it seems to me you should be developing a mixed model with A as a random variable (covariate) in the model.
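A minimal sketch of treating A as a covariate rather than a design factor: the measured A value enters the least-squares fit as a continuous regressor alongside the B and C settings. This is an ordinary fixed-effect (ANCOVA-style) fit in NumPy, not a full mixed model with random effects, which would need a mixed-model tool; the function name is illustrative:

```python
import numpy as np


def fit_with_covariate(B, C, A, y):
    """OLS fit of y on design factors B and C plus measured covariate A.

    Returns coefficients in the order [intercept, B, C, A].
    """
    B, C, A, y = (np.asarray(v, dtype=float) for v in (B, C, A, y))
    X = np.column_stack([np.ones_like(B), B, C, A])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef
```

Here A is whatever purity was actually measured for each run's starting material, so the fit adjusts the B and C effects for it instead of requiring A to sit at planned levels.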

Additional thoughts:

1. Have you assessed the measurement systems for A and for purity?

2. Where was A (e.g., what levels was it at) when you studied B&C in previous experiments? If it interacts with B and/or C then your previous models would be conditional.

3. I don't understand what you mean by "repair this DOE to improve the accuracy". I'm guessing you want to improve the model; the DOE is a means to an end.

4. Models are approximations, hopefully usable. They can be used for explanatory or predictive purposes. How well they do at prediction is a function of how representative your studies (e.g., DOEs) are of the future. DOEs have, by design, a narrow inference space. This is because you are removing/drastically reducing the time series and therefore preventing factors from varying in the DOE. To overcome this handicap, you should exaggerate noise in your experiment and use a strategy to handle the noise appropriately (e.g., repeats, replicates, blocks, split-plots, covariates, etc.)
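One concrete payoff of replicates: runs repeated at the same settings give a pure-error estimate of experimental noise that does not depend on the model form, so it can be compared with the model's RMSE (a model RMSE well above the pure-error standard deviation hints at lack of fit). A minimal plain-Python sketch; `settings` is assumed to be any hashable description of a run's factor levels:

```python
from collections import defaultdict
from statistics import mean


def pure_error_sd(settings, responses):
    """Pooled standard deviation of responses among replicated settings.

    Settings that appear only once contribute no pure-error degrees of
    freedom and are ignored.
    """
    groups = defaultdict(list)
    for setting, y in zip(settings, responses):
        groups[setting].append(y)
    ss, df = 0.0, 0
    for ys in groups.values():
        if len(ys) > 1:
            m = mean(ys)
            ss += sum((y - m) ** 2 for y in ys)
            df += len(ys) - 1
    if df == 0:
        raise ValueError("no replicated settings; pure error is not estimable")
    return (ss / df) ** 0.5
```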


Re: RSM Model was inaccurate (Disagrees with Historical Data) - How to Fix & Where it went wrong

Thanks for your response!

> For clarification, "A" is not controllable by you? Is it a "raw material" from a supplier? If so, it seems to me you should be developing a mixed model with A as a random variable (covariate) in the model.

"A" will not be controllable in MFG, but it is controllable in this experiment. I combined two pools of starting material, one high purity and one low purity (using a weighted-average approach), although my middle level missed its purity target.

> Additional thoughts:
>
> 1. Have you assessed the measurement systems for A and for purity?

The purity measurement here is very precise. We can usually estimate within 5%; it is usually more accurate than that, but 5% is conservative.

> 2. Where was A (e.g., what levels was it at) when you studied B&C in previous experiments? If it interacts with B and/or C then your previous models would be conditional.

"A" in previous DoE experiments was held constant at around 40-45% purity. At-scale runs have gone as low as 30% with no noticeable impact on final purity.

> 3. I don't understand what you mean by "repair this DOE to improve the accuracy". I'm guessing you want to improve the model; the DOE is a means to an end.

Correct, my terminology was wrong. How can I improve the model, and what might explain why it is inaccurate?

> 4. Models are approximations, hopefully usable. They can be used for explanatory or predictive purposes. How well they do at prediction is a function of how representative your studies (e.g., DOEs) are of the future. DOEs have, by design, a narrow inference space. This is because you are removing/drastically reducing the time series and therefore preventing factors from varying in the DOE. To overcome this handicap, you should exaggerate noise in your experiment and use a strategy to handle the noise appropriately (e.g., repeats, replicates, blocks, split-plots, covariates, etc.)

I totally understand this; the issue is that this model suggests "A" is a significant factor in a region where it has been proven not to be. I am trying to determine whether I did something incorrectly in my DoE design, or whether it was an experimental issue, etc.


Re: RSM Model was inaccurate (Disagrees with Historical Data) - How to Fix & Where it went wrong

> "A" will not be controllable in MFG, but it is controllable in this experiment. I combined two pools of starting material, one high purity and one low purity (using a weighted-average approach), although my middle level missed its purity target.

I understand you managed A during the experiment; that is not the question. The question is, are you willing to manage A in the future? By willing, I mean: do you have the capability, money, or desire to manage A? If not, A is noise. Treating A like a design factor is not what I would recommend.

> The purity measurement here is very precise. We can usually estimate within 5%; it is usually more accurate than that, but 5% is conservative.

I'm not sure I understand your terminology. There is a difference between accuracy and precision, but you appear to use them interchangeably. Accuracy is a measure of how close a measurement is to the true or accepted value of the quantity being measured (e.g., a reference standard). Precision is a measure of how close a series of measurements are to one another (i.e., repeatability and reproducibility). But in any case, you suggest measurement is not an issue.
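The distinction can be made concrete with repeated measurements of a reference standard whose accepted value is known: the mean offset from the reference estimates bias (accuracy), and the spread of the repeats estimates repeatability (one component of precision). A minimal standard-library sketch; a full measurement-system study would also cover reproducibility across operators and instruments:

```python
from statistics import mean, stdev


def accuracy_and_precision(measurements, reference_value):
    """Return (bias, repeatability_sd) for repeated measurements of a
    standard with a known reference value."""
    bias = mean(measurements) - reference_value  # accuracy: offset from the accepted value
    repeatability_sd = stdev(measurements)  # precision: scatter among repeats
    return bias, repeatability_sd
```

A method can be very precise (small `repeatability_sd`) and still inaccurate (large `bias`), which is why the two numbers are reported separately.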

> "A" in previous DoE experiments was held constant at around 40-45% purity. At-scale runs have gone as low as 30% with no noticeable impact on final purity.

This is a potential issue. Since A was constant during previous experiments, there is no estimate of potential interaction effects between A and the other factors. Also, your model is contingent on A being constant at the 40-45% purity level. If A changes, we have no idea how your model will perform.
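The point about interactions can be checked directly: when A is held constant, the A column is a multiple of the intercept and the A×B and A×C columns are multiples of B and C, so the model matrix loses rank and those effects cannot be separated. A minimal NumPy sketch; the run layouts in the example are illustrative:

```python
import numpy as np


def interaction_model_rank(A, B, C):
    """Rank of the model matrix [1, B, C, A, A*B, A*C] and its column count.

    A rank below the column count means some effects are aliased
    (not separately estimable).
    """
    A, B, C = (np.asarray(v, dtype=float) for v in (A, B, C))
    X = np.column_stack([np.ones_like(A), B, C, A, A * B, A * C])
    return int(np.linalg.matrix_rank(X)), X.shape[1]
```

With A fixed at 42.5 over a 2x2 factorial in B and C plus a center point, the rank is 3 of 6; with a 2^3 factorial that also varies A, it is 6 of 6.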

> I totally understand this; the issue is that this model suggests "A" is a significant factor in a region where it has been proven not to be. I am trying to determine whether I did something incorrectly in my DoE design, or whether it was an experimental issue, etc.

I'm not sure I follow your logic...How did you "prove" A was not significant in the design space? If you already know A is insignificant, why are you experimenting on it? Whether you ran the appropriate design or there were other issues in your experiment, I can only speculate. If you already had a model for B & C and you just wanted to add A to the model, I'm not sure why you ran a response surface type of design. Also, for optimization type designs, statistical significance is less important. You should have already established the model, now you are just selecting levels for the factors in the model to optimize the response. Some sort of contour mapping is typical. Again, if A is truly noise, then it should not be analyzed and interpreted as a design factor (perhaps as a covariate).
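A minimal sketch of the contour-mapping idea: evaluate the fitted surface over a grid of B and C (in coded units) at a chosen A and pick the best point, which is what a contour plot shows visually. `predict` stands for a fitted model and the quadratic in the example is hypothetical, not a result from this study:

```python
import numpy as np


def grid_optimum(predict, b_range=(-1.0, 1.0), c_range=(-1.0, 1.0), n=21):
    """Evaluate predict(B, C) on an n x n grid and return the (B, C, y)
    point with the largest predicted response."""
    b = np.linspace(b_range[0], b_range[1], n)
    c = np.linspace(c_range[0], c_range[1], n)
    B, C = np.meshgrid(b, c, indexing="ij")
    Y = predict(B, C)  # predict must accept NumPy arrays
    i, j = np.unravel_index(np.argmax(Y), Y.shape)
    return float(B[i, j]), float(C[i, j]), float(Y[i, j])
```

For example, `grid_optimum(lambda B, C: 90 - (B - 0.2) ** 2 - (C + 0.4) ** 2)` returns approximately `(0.2, -0.4, 90.0)`. Repeating the search at several A values is one way to see whether the optimal B and C move with A.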


Re: RSM Model was inaccurate (Disagrees with Historical Data) - How to Fix & Where it went wrong

I'm going to take a different approach from the other answers and focus on the experimental approach and data quality. If I understand correctly, your intermediate condition was 55% rather than the intended 40%? If so, it may be worth taking a step back and assessing how accurately you can control A. You need confidence that you can hit the 5, 40, and 85% levels selected in your design; any inherent variability in A will increase the uncertainty in your model. How many replicates of each point did you run?

What modeling type did you use? Unfortunately, a limitation of standard least squares regression is that it is sensitive to large changes over the factor ranges and to outliers (this often means you miss the 'subtle' changes in the data, possibly leading to the underestimation you describe here). I have had similar issues where the predictive model from an RSM did not match my understanding from theory and historical data, and it came down to outliers in my data set. To help with this, I looked at the Cook's D influence scores of my data points; a good rule of thumb is to flag points with a value > 4/n as potential outliers. Another option is to explore different predictive modeling forms such as GenReg.

Another consideration: A was kept constant in your previous experiments and you are varying it now. Has the quality or type of A changed (what was the time gap between the previous experiment and this one)? Could there also be a 'hidden factor' associated with A that is affecting the process?
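A minimal NumPy sketch of the Cook's D check described above, for an ordinary least-squares fit with model matrix `X` (including an intercept column). The 4/n cutoff is the rule of thumb from this post; other cutoffs are also in use, and flagged points merit a closer look rather than automatic removal:

```python
import numpy as np


def cooks_distance(X, y):
    """Cook's distance for each observation of an OLS fit of y on X."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    # Leverages: diagonal of the hat matrix H = X (X'X)^-1 X'.
    h = np.einsum("ij,jk,ik->i", X, np.linalg.pinv(X.T @ X), X)
    s2 = resid @ resid / (n - p)  # residual mean square
    return (resid ** 2 / (p * s2)) * h / (1 - h) ** 2


def flag_influential(X, y):
    """Indices of observations whose Cook's D exceeds 4/n."""
    d = cooks_distance(X, y)
    return [int(i) for i in np.flatnonzero(d > 4 / len(d))]
```

For example, fitting y = 2 + 3x for x = 0..9 with 30 added to the last response flags only index 9.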


- © 2025 JMP Statistical Discovery LLC. All Rights Reserved.
