
REML mixed model: "Unable to allocate enough memory" error message

Hi,

I'm getting the error message "Unable to allocate memory" while trying to fit a REML (Random Effects) model. Could you please help me figure out what's wrong?

- My dataset is 1.7 MB in size, with only 27K rows and 7 columns.

- My machine runs Windows 10, with 12 GB of RAM and 88 GB of free disk space.

- My model is also pretty simple (only three variables).

- I'm using JMP 13.2.1

I expected this capacity to be more than enough for such a relatively small task, so I suspect something is wrong with my JMP settings.

Could you please help me figure this out?

Thank you in advance!
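Why a 1.7 MB file can still exhaust memory: the file size only measures the stored values, while REML computations are typically organized around matrices sized by the number of observations or random-effect levels. A back-of-the-envelope sketch, assuming 8-byte doubles and, purely for illustration, a dense square matrix with one row and one column per observation (whether JMP actually forms such a matrix is an assumption, not something stated in this thread):

```python
BYTES_PER_DOUBLE = 8  # IEEE 754 double precision


def raw_table_bytes(rows: int, cols: int) -> int:
    """Memory for the data values themselves, stored as doubles."""
    return rows * cols * BYTES_PER_DOUBLE


def dense_square_bytes(n: int) -> int:
    """Memory for a dense n x n double matrix (hypothetical REML working matrix)."""
    return n * n * BYTES_PER_DOUBLE


rows, cols = 27_000, 7
print(f"raw data values:     {raw_table_bytes(rows, cols) / 1e6:.2f} MB")
print(f"dense 27k x 27k:     {dense_square_bytes(rows) / 1e9:.2f} GB")
```

Under that assumption the working memory grows with the square of the row count, so doubling the rows roughly quadruples it, while the raw data grows only linearly.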

Accepted Solutions


Re: REML mixed model: "Unable to allocate enough memory" error message

You've got the single-user license version of JMP which is only 32-bit. Our annual license version is the only version available in 64-bit.


Re: REML mixed model: "Unable to allocate enough memory" error message

Can you say more about the model that you are fitting? Do you have a categorical variable as a random effect? If so, how many levels does it have?

Phil
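For context on why the level count matters: in the standard mixed-model formulation, each level of a random effect contributes one column to the random-effects design matrix (usually written Z), so a factor with thousands of levels produces a very wide matrix. A small Python sketch with made-up data for counting the levels of a nested term such as Store[Geo], treating each distinct Geo/Store pair as one level (an assumption about how the nesting is coded):

```python
def nested_levels(stores: list[int], geos: list[int]) -> int:
    """Count distinct (Geo, Store) pairs, i.e. the levels of a Store[Geo] term."""
    return len(set(zip(geos, stores)))


# Hypothetical example: 4 stores, each observed twice
stores = [1, 1, 2, 2, 3, 3, 4, 4]
geo_fixed = [1, 1, 1, 1, 2, 2, 2, 2]   # each store stays in one location
geo_varies = [1, 2, 1, 1, 2, 2, 2, 1]  # stores 1 and 4 switch location between rows

print(nested_levels(stores, geo_fixed))   # 4 levels -> 4 columns in Z
print(nested_levels(stores, geo_varies))  # 6 levels -> 6 columns in Z
```

If the location column changes from row to row within a store, the nested term picks up extra levels, which is worth checking before a large fit.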


Re: REML mixed model: "Unable to allocate enough memory" error message

Hi,

Thank you so much! I apologize for the delayed response; I was lost in the abyss of solving this thing and on the verge of losing hope.

The model and data are similar to this example offered by JMP. Following the example's specifics, my data has a little over 5400 stores, each with 5 observations (one per time period).

As in the example, my predictor variable and the random effect are categorical (1, 2), nested in another categorical variable for location (1, 2). Time has five intervals (hence the five observations per subject).

I followed the same steps as the JMP example, but I always get the error message. I even tried without the nesting and the error comes again.

Thanks again!


Re: REML mixed model: "Unable to allocate enough memory" error message

Does the script below recreate your analysis, except with fewer stores? On Windows, JMP consumed 5.6 GB of memory and took 6 minutes and 11 seconds, chugging along on one processor, to analyze 60,000 rows (600 stores).

| Rows | Memory Consumed (MB) |
|---:|---:|
| 10,000 | 650 |
| 20,000 | 1,272 |
| 30,000 | 2,294 |
| 40,000 | 3,775 |
| 50,000 | 5,592 |
| 60,000 | 6,662 |

Script:

```jsl
Random Reset( 123 );

// Make some data
dt = New Table( "RandomEffect Example",
    Add Rows( 5400 ), // Set the number of rows here
    New Column( "Store",
        Numeric,
        "Nominal",
        Format( "Best", 12 ),
        Formula( Sequence( 1, 5500, 1, 10 ) )
    ),
    New Column( "Type",
        Numeric,
        "Nominal",
        Format( "Best", 12 ),
        Formula( Sequence( 0, 1, 1, 5 ) )
    ),
    New Column( "Time",
        Numeric,
        "Ordinal",
        Format( "Best", 12 ),
        Formula( Sequence( 1, 5, 1, 1 ) )
    ),
    New Column( "Base Sales",
        Numeric,
        "Continuous",
        Format( "Best", 12 ),
        Formula(
            If( Row() == 1 | :Store != Lag( :Store, 1 ),
                Round( Random Uniform( 1000, 2000 ), 0 ),
                Lag( :Base Sales, 1 )
            )
        )
    ),
    New Column( "Sales",
        Numeric,
        "Continuous",
        Format( "Best", 12 ),
        Formula(
            Round(
                :Base Sales + If( :Type == 1,
                    50 + 50 * :Time,
                    -50 * :Time
                ) + Random Normal( 0, 200 )
            )
        )
    )
);

// Analysis
dt << Fit Model(
    Y( :Sales ),
    Effects( :Type, :Time, :Type * :Time ),
    Random Effects( :Store[:Type] ),
    NoBounds( 1 ),
    Personality( "Standard Least Squares" ),
    Method( "REML" ),
    Emphasis( "Minimal Report" ),
    Run(
        :Sales << {Summary of Fit( 1 ), Analysis of Variance( 0 ),
        Parameter Estimates( 1 ), Lack of Fit( 0 ), Plot Actual by Predicted( 0 ),
        Plot Regression( 0 ), Plot Residual by Predicted( 0 ),
        Plot Effect Leverage( 0 ), {:Type * :Time << {LSMeans Plot( 1 )}}}
    )
);
```
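Reading the table as memory per 1,000 rows shows that the growth is faster than linear: about 65 MB per 1,000 rows at 10,000 rows versus about 111 MB at 50,000 to 60,000 rows. A short Python sketch that computes those ratios and linearly interpolates between the measured points; it only describes the model in this script, which has fewer effects than the original one, so the interpolated value for 27,000 rows (roughly 2,000 MB) is a rough indication, not a prediction:

```python
from bisect import bisect_left

# Rows vs. memory consumed (MB), copied from the table in this post
ROWS = [10_000, 20_000, 30_000, 40_000, 50_000, 60_000]
MEM_MB = [650, 1_272, 2_294, 3_775, 5_592, 6_662]


def interpolate_mb(n: int) -> float:
    """Piecewise-linear interpolation of memory use between measured row counts."""
    if not ROWS[0] <= n <= ROWS[-1]:
        raise ValueError("n is outside the measured range")
    i = max(1, bisect_left(ROWS, n))  # index of the upper bracketing point
    x0, x1 = ROWS[i - 1], ROWS[i]
    y0, y1 = MEM_MB[i - 1], MEM_MB[i]
    return y0 + (y1 - y0) * (n - x0) / (x1 - x0)


for n, mb in zip(ROWS, MEM_MB):
    print(f"{n:>6,} rows: {mb * 1000 / n:6.1f} MB per 1,000 rows")
print(f"27,000 rows (interpolated): about {interpolate_mb(27_000):,.0f} MB")
```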


Re: REML mixed model: "Unable to allocate enough memory" error message

Many thanks! The script runs quite smoothly here. I'm impressed!

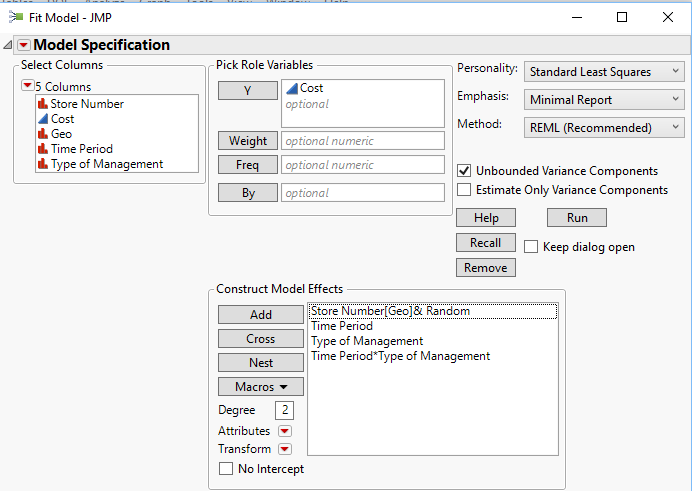

However, allow me to point out that my data has 5400 stores, each with 5 observations, which makes 27K rows. I'm attaching an example of how it looks (first few observations). In each time period, we note the type of management that store had. I want to model cost as the response variable, with type of management as the predictor, over the five time periods. As mentioned above, we have 5400 of these stores, hence 27K observations. My understanding is that this is a case of repeated measures.

This is how I'm doing it through the GUI.

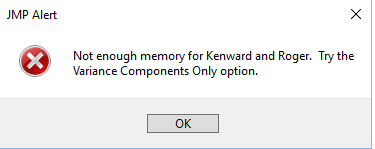

And this is the error message it brings up when using the full-blown data:

I thank you again for your time and help.


Re: REML mixed model: "Unable to allocate enough memory" error message

You have one more variable than I did, but I still completed what I believe to be a similar analysis in 12 minutes with just over 6 GB of memory. Does this table look like yours now?

```jsl
Random Reset( 123 );

// Make some data
dt = New Table( "RandomEffect Example",
    Add Rows( 27000 ), // Set the number of rows here
    New Column( "Store",
        Character,
        "Nominal",
        Format( "Best", 12 ),
        Formula( Char( Sequence( 1, 5500, 1, 5 ) ) )
    ),
    New Column( "Time",
        Numeric,
        "Ordinal",
        Format( "Best", 12 ),
        Formula( Sequence( 1, 5, 1, 1 ) )
    ),
    New Column( "Geo",
        Numeric,
        "Nominal",
        Format( "Best", 12 ),
        Formula( If( Random Normal() > 0, 1, 2 ) )
    ),
    New Column( "Type of Management",
        Numeric,
        "Nominal",
        Format( "Best", 12 ),
        Formula( If( Random Normal() > 0, 1, 2 ) )
    ),
    New Column( "Base Cost",
        Numeric,
        "Continuous",
        Format( "Best", 12 ),
        Formula(
            If( Row() == 1 | :Store != Lag( :Store, 1 ),
                Round( Random Uniform( 1000, 2000 ), 0 ),
                Lag( :Base Cost, 1 )
            )
        )
    ),
    New Column( "Cost",
        Numeric,
        "Continuous",
        Format( "Best", 12 ),
        Formula(
            Round(
                :Base Cost + If( :Type of Management == 1,
                    50 + 50 * :Time,
                    -50 * :Time
                ) + If( :Geo == 1,
                    500 + 10 * :Time,
                    -10 * :Time
                ) + Random Normal( 0, 200 )
            )
        )
    )
);
```

If so, this should be the same analysis you did:

```jsl
// Analysis
dt << Fit Model(
    Y( :Cost ),
    Effects( :Time, :Type of Management, :Time * :Type of Management ),
    Random Effects( :Store[:Geo] ),
    NoBounds( 1 ),
    Personality( "Standard Least Squares" ),
    Method( "REML" ),
    Emphasis( "Minimal Report" ),
    Run(
        :Cost << {Summary of Fit( 1 ), Analysis of Variance( 0 ),
        Parameter Estimates( 1 ), Lack of Fit( 0 ), Plot Actual by Predicted( 0 ),
        Plot Regression( 0 ), Plot Residual by Predicted( 0 ),
        Plot Effect Leverage( 0 )}
    )
);
```
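Both scripts lean on JMP's `Sequence(from, to, step, repeat)` column formula to lay out the stores: each value is held for `repeat` rows before stepping, and the sequence wraps back to `from` after passing `to`. A Python re-creation of that behavior, written from that description rather than from JMP's implementation (so treat it as an approximation), confirms that 27,000 rows with `repeat` = 5 give 5,400 stores with one row per time point:

```python
def jmp_sequence(row: int, start: int, stop: int, step: int = 1, repeat: int = 1) -> int:
    """Value of a Sequence(start, stop, step, repeat)-style formula at a 1-based row (assumed semantics)."""
    n_values = (stop - start) // step + 1     # distinct values before the sequence wraps
    index = ((row - 1) // repeat) % n_values  # each value is held for `repeat` rows
    return start + index * step


n_rows = 27_000
store = [jmp_sequence(r, 1, 5500, 1, 5) for r in range(1, n_rows + 1)]
time_col = [jmp_sequence(r, 1, 5, 1, 1) for r in range(1, n_rows + 1)]

print(len(set(store)))           # 5400 distinct stores
print(store[:7], time_col[:7])   # [1, 1, 1, 1, 1, 2, 2] [1, 2, 3, 4, 5, 1, 2]
```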


Re: REML mixed model: "Unable to allocate enough memory" error message

Yep, that's quite like it. I must say I'm impressed by how you recreated it with a script. Respect!

I've run the model and I'm getting the same error message again:

Before starting the analysis, my computer had about 8 GB of free RAM. If the same experiment consumed only 6 GB on your end, then why does JMP return such an error message here? Is there another memory measure I should keep an eye on?

Many thanks!


Re: REML mixed model: "Unable to allocate enough memory" error message

You provided the specifications of your machine, but are you using 64-bit JMP? Occasionally people are using 32-bit JMP. 32-bit software can address only a limited amount of memory, no matter how much RAM is installed.
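The arithmetic behind that limit: a 32-bit pointer can distinguish only 2^32 bytes, i.e. 4 GiB, per process, and on Windows a 32-bit process gets 2 GB of user address space by default (up to 4 GB on 64-bit Windows for applications built as large-address-aware). A quick Python check against the "just over 6 GB" reported for the 27,000-row run in this thread, treating that figure as 6 × 10^9 bytes (an assumption about the unit):

```python
GIB = 2**30  # bytes in one gibibyte


def address_space_gib(pointer_bits: int) -> float:
    """Largest amount of memory one process can address with pointers of this width."""
    return 2**pointer_bits / GIB


limit_32 = address_space_gib(32)  # 4.0 GiB theoretical ceiling (Windows default is lower)
observed_gib = 6e9 / GIB          # "just over 6 GB", assumed to mean 6 * 10**9 bytes

print(f"32-bit ceiling: {limit_32:.1f} GiB")
print(f"reported usage: {observed_gib:.2f} GiB, fits under the ceiling: {observed_gib <= limit_32}")
```

Whatever the installed RAM, a run of that size cannot fit inside a single 32-bit process.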


Re: REML mixed model: "Unable to allocate enough memory" error message

Hopefully 32-bit is the problem, but funny things seem to happen when you get close to your computer's memory limit. I once added memory to a machine that could not complete an analysis, and after the upgrade the analysis completed using less memory than had initially been installed. Maybe JMP momentarily consumes more than 8 GB when setting up the analysis.

To confirm that you actually run out of memory you might try gradually increasing the number of rows in the data table and watching Windows Task Manager to see how close you get to 100% Physical Memory usage.

If you are short of memory, you are not totally out of luck: you can run your analysis on subsets of the larger table and then average the results. Each iteration might look something like this:

```jsl
dt = Current Data Table();

// Make a list of store numbers
FilterStoreTabulate = dt << Tabulate(
    Add Table( Row Table( Grouping Columns( :Store ) ) )
);

// Get the store numbers as a data table, then close the tabulation
dtFilterStore = FilterStoreTabulate << Make Into Data Table;
FilterStoreTabulate << Close Window();

// Make a column with random numbers
dtFilterStore << New Column( "Rank",
    Numeric,
    "Continuous",
    Format( "Best", 12 ),
    Formula( Random Uniform() )
);

// Flag 2500 randomly chosen stores (ranks 1 through 2500)
dtFilterStore << New Column( "Keep",
    Numeric,
    "Nominal",
    Format( "Best", 12 ),
    Formula( Col Rank( :Rank ) <= 2500 ),
    Set Selected
);

// Join the list to your original data table
dtSubsetWhole = dt << Join(
    With( dtFilterStore ),
    Select( :Store, :Time, :Geo, :Type of Management, :Base Cost, :Cost ),
    SelectWith( :Keep ),
    By Matching Columns( :Store = :Store ),
    Drop multiples( 0, 0 ),
    Include Nonmatches( 1, 0 ),
    Preserve main table order( 1 )
);

// Close the list of stores
dtFilterStore << Close Window();

// Select only the stores flagged to keep
dtSubsetFilter = dtSubsetWhole << Data Filter(
    Location( {938, 189} ),
    Add Filter( columns( :Keep ), Where( :Keep == 1 ) )
);

// Make a data table with only the stores you want to keep.
// Simply excluding rows where Keep = 0 might work as well.
dtSubset = dtSubsetFilter << Show Subset;

// Run the fit model on the subset (same specification as the full analysis)
dtSubset << Fit Model(
    Y( :Cost ),
    Effects( :Time, :Type of Management, :Time * :Type of Management ),
    Random Effects( :Store[:Geo] ),
    NoBounds( 1 ),
    Personality( "Standard Least Squares" ),
    Method( "REML" ),
    Emphasis( "Minimal Report" ),
    Run()
);
```
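The same idea, sampling whole stores so that each store keeps all of its repeated measurements, sketched in Python for anyone who wants to prototype the resampling logic outside JMP. Drawing a random sample of stores without replacement is equivalent to ranking random numbers and keeping the top 2,500, as the JSL does. The row layout (a list of dicts with a `Store` key) and the `estimate` callable are hypothetical stand-ins; in practice `estimate` would be the REML fit on each subset:

```python
import random
from statistics import mean
from typing import Callable

Row = dict  # one data-table row, e.g. {"Store": 1, "Time": 1, "Cost": 1010}


def store_subset(rows: list[Row], n_stores: int, rng: random.Random) -> list[Row]:
    """Keep every row belonging to a random sample of n_stores distinct stores."""
    stores = sorted({r["Store"] for r in rows})
    keep = set(rng.sample(stores, n_stores))  # stores drawn without replacement
    return [r for r in rows if r["Store"] in keep]


def averaged_estimate(rows: list[Row], n_stores: int, n_subsets: int,
                      estimate: Callable[[list[Row]], float], seed: int = 123) -> float:
    """Average a statistic computed on several independently drawn store subsets."""
    rng = random.Random(seed)
    return mean(estimate(store_subset(rows, n_stores, rng)) for _ in range(n_subsets))


def mean_cost(rows: list[Row]) -> float:
    """Stand-in for the REML fit: just the mean Cost of the subset."""
    return mean(r["Cost"] for r in rows)


# Hypothetical data: 5,400 stores x 5 time points, Cost = 1000 + 10 * Time
data = [{"Store": s, "Time": t, "Cost": 1000 + 10 * t}
        for s in range(1, 5401) for t in range(1, 6)]

subset = store_subset(data, 2500, random.Random(1))
print(len(subset))  # 12500 rows: 2,500 stores x 5 time points each
print(averaged_estimate(data, 2500, n_subsets=3, estimate=mean_cost))
```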


Re: REML mixed model: "Unable to allocate enough memory" error message

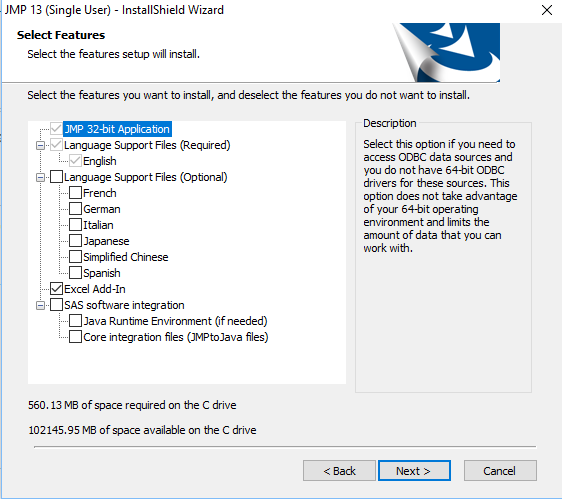

Yes, you nailed it, thank you. JMP was installed as 32-bit.

I've tried uninstalling and reinstalling, yet it still installs itself as 32-bit, with no option to choose 64-bit (the option is greyed out in the screenshot above). Any suggestions would be much appreciated.

------

I can't thank you enough. To your point, I did open Task Manager on a second screen and watched it as I ran the model. It never even reached 50% memory or CPU usage. Still, I was eyeballing the expansion options on Amazon and saying farewell to my paycheck before we learned it was the 32-bit thing :-)

- © 2024 JMP Statistical Discovery LLC. All Rights Reserved.
