Parallel Assign() is slow on certain devices

Hi everybody,

There seems to be a problem with Parallel Assign() on certain systems.

I have access to three Windows 10 PCs running JMP 16.1:

- Laptop: Core i5-8350U (4 cores) @ 1.9 GHz, 16 GB RAM

- Old workstation: Xeon E5-1660 v4 (8 cores) @ 3.2 GHz, 256 GB RAM

- New workstation: Xeon W-2145 (8 cores) @ 3.7 GHz, 128 GB RAM

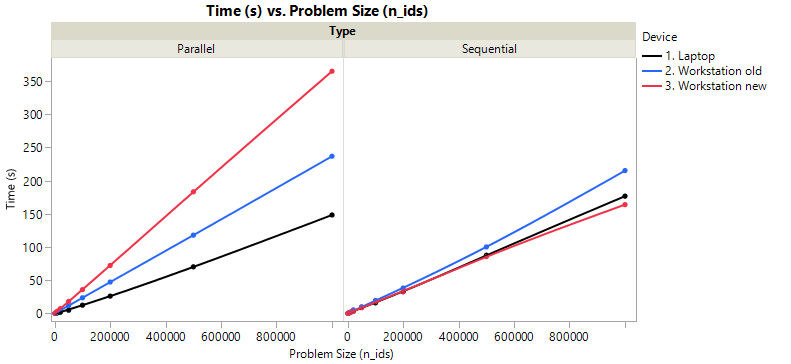

I compared the performance of the parallel and the sequential version of my test script for different problem sizes on all three systems (script below).

The result was surprisingly bad. Only on my laptop is the parallel version slightly faster than the sequential one; on the workstations it is up to more than 2 times slower.

Did anyone experience similar performance issues with Parallel Assign()? Or am I using it wrong? What is going on here?

Rob

    n_different_ids = 100000;

    // Just generate random data. Every 15 rows must be processed at the same time.
    DT_Data = J( 15*n_different_ids, 9, 0 );
    For ( i = 1, i <= n_different_ids, i++,
        DT_Data[15*(i-1)+(1::15),0] = i + J( 15, 9, Random Normal() );
    );

    // some function
    F_percentile = Function( {x , p},
        x = Sort Ascending(x);
        n = N Rows(x);
        index = 1 + (n - 1) * p;
        index_ibelow = Floor(index);
        index_iabove = Ceiling(index);
        h = index - index_ibelow;
        result = (1 - h) * x[index_ibelow] + h * x[index_iabove];
        result;
    );

    // parallel version
    DT_Features = J( n_different_ids, 16, 0 );
    start=tick seconds();
    Parallel Assign( {
            DT_Data = DT_Data,
            F_percentile = Name Expr( F_percentile )
        },
        DT_Features[i,j] = (
            i_same_group = Loc(DT_Data[15*(i-1)+(1::15), 1]);
            data = DT_Data[15*(i-1)+i_same_group, 1+Ceiling(j/2)];
            If (mod(j,2),
                result = F_percentile(data, 0.95);
            ,
                result = F_percentile(data, 0.05);
            );
            result;
        )
    );
    time_parallel = tick seconds()-start;
    Show(time_parallel);
    Wait(0.1);

    // sequential version
    DT_Features = J( n_different_ids, 16, 0 );
    start=tick seconds();
    for(i=1,i<=n_different_ids,i++,
        for(j=1,j<=16,j++,
            i_same_group = Loc(DT_Data[15*(i-1)+(1::15), 1]);
            data = DT_Data[15*(i-1)+i_same_group, 1+Ceiling(j/2)];
            If (mod(j,2),
                result = F_percentile(data, 0.95);
            ,
                result = F_percentile(data, 0.05);
            );
            DT_Features[i,j] =result;
        );
    );
    time_sequential = tick seconds()-start;
    Show(time_sequential);

Re: Parallel Assign() is slow on certain devices

This is a 4-CPU 16GB ~3GHz machine JMP16/win10 under VirtualBox. I see the speed up I'd expect (not 4X, but still good.)

n_different_ids = 100,000;

time_parallel = 13.9833333333333;

time_sequential = 37.7333333333333;

n_different_ids = 1,000,000;

time_parallel = 141.233333333333;

time_sequential = 375.016666666667;

Parallel Assign copies a large matrix for each CPU, but the linear graphs don't suggest a paging issue at these sizes.

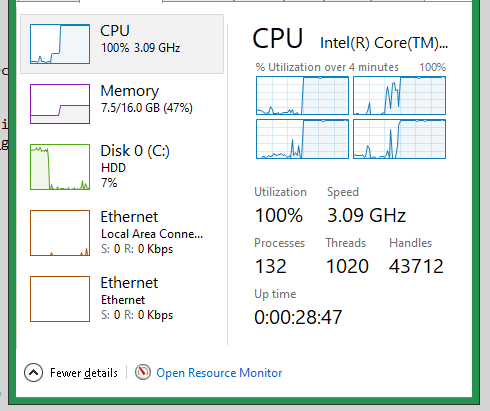

Watch the Windows Task Manager for CPU, memory, and disk usage and see if it explains anything. I saw 100% CPU during Parallel Assign and 25% during the sequential test. I also saw 7 GB of memory used during the bigger Parallel Assign run.

Re: Parallel Assign() is slow on certain devices

Hi Craige,

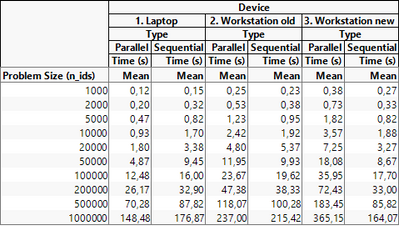

I'm just looking at my worst-performing workstation now (below). I did not see anything suspicious in the Task Manager. Apart from the CPU, nothing is close to full utilization.

What I also noticed is that JMP 15.2.1 is always faster, in both the parallel and the sequential version. For n=100,000, JMP 16.1 takes around 36s/18s (parallel/sequential) and JMP 15.2.1 around 31s/15s, which is a little better but still far from good parallel performance. Unfortunately, I don't have an older version available any more. I wrote this code using JMP 12 and didn't notice performance issues back then (though the PCs I was using were different, too).

Rob

Re: Parallel Assign() is slow on certain devices

I can confirm that behaviour on my Laptop (Win 10, JMP 16.1):

time_parallel = 158.7;

time_sequential = 157.766666666667;

n=1000000

Re: Parallel Assign() is slow on certain devices

Not sure. I sent a note about this thread to JMP; you might want to talk to tech support as well.

My guess (and that's all it is!) is that JMP will need to change something to get the performance back on the new hardware. With only 4 virtual CPUs, I can't see the same thing you see.

Re: Parallel Assign() is slow on certain devices

I also used the Task Manager to limit the number of cores available to JMP to 2, 4 and 8 on the new workstation. The parallel version still remains slower than the sequential one. My guess is that JMP is using outdated libraries in the background that run badly on new hardware architectures.

Thanks Craige, I will contact tech support about it.

Re: Parallel Assign() is slow on certain devices

For my 3.6GHz 4-core machine and N=100k:

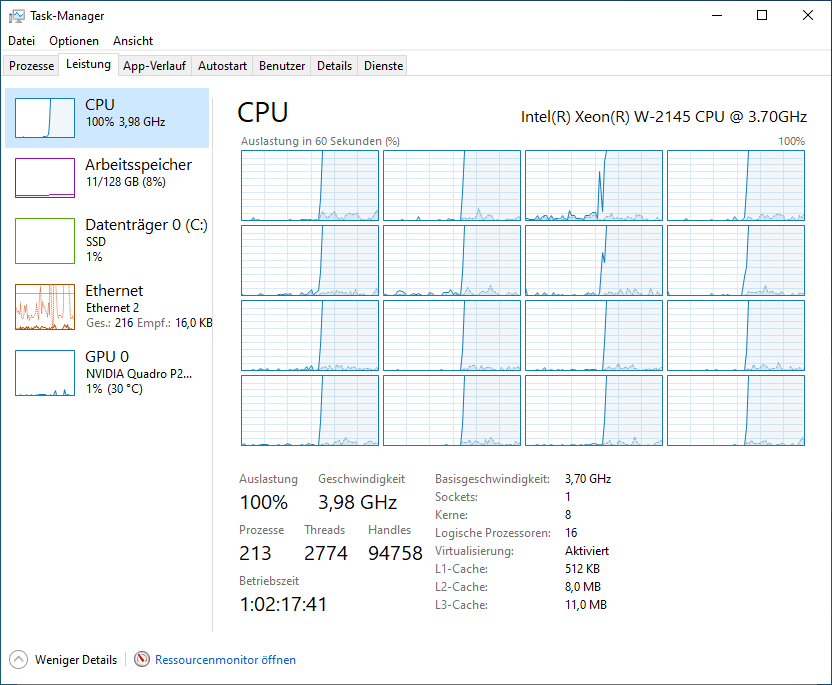

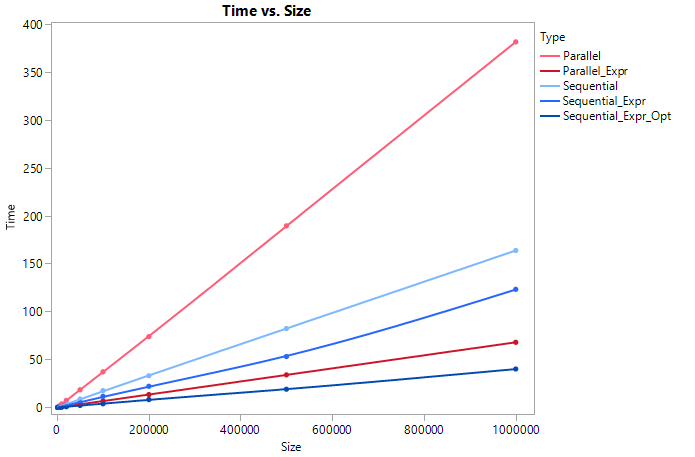

I am getting a 1.4X speedup using the parallel code over sequential (12.8s parallel vs 17.8s sequential). This isn't as good as the 2.7X speedup Craige was seeing, but it is better than the slowdown you were seeing on the workstations. However, I wanted to see if I could get a little more performance out of this, so I made some edits.

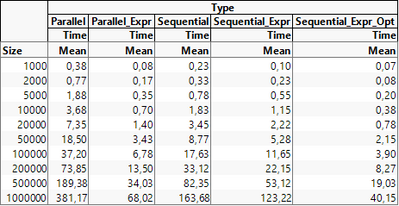

I removed the function call overhead (matrix argument copies, etc) by using expressions instead. This got me up to a 2.5X speedup (4.5s parallel vs 11.3s sequential) and a good bit faster than the original. And finally, I tried rearranging the loops a bit and got the sequential version down to 4s. This is faster than the parallel version but the rearranging is not as easy to do with Parallel Assign. I tested some different N and these patterns appeared to hold but I didn't do a full sweep like in your table above. Find the code attached.

Parallel: 12.78

Sequential: 17.80

Parallel_Expr: 4.50

Sequential_Expr: 11.27

Sequential_Expr_Opt: 3.98
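The attached script is not reproduced in this thread, so the following is only a minimal sketch of what "using expressions instead of a function" can look like for the percentile calculation. The names `F_percentile_fn` and `percentile_expr` are made up for illustration. The expression form reads `x` and `p` from the calling scope and writes `result` there, so no arguments are passed; that is also its main drawback compared with a function.

```jsl
// Function form: arguments are passed in on every call.
F_percentile_fn = Function( {x, p}, {Default Local},
	xs = Sort Ascending( x );
	n = N Rows( xs );
	idx = 1 + (n - 1) * p;
	i_lo = Floor( idx );
	i_hi = Ceiling( idx );
	h = idx - i_lo;
	(1 - h) * xs[i_lo] + h * xs[i_hi]
);

// Expression form: same arithmetic, but it works directly on the
// variables x and p of the caller and leaves its answer in result.
percentile_expr = Expr(
	xs = Sort Ascending( x );
	n = N Rows( xs );
	idx = 1 + (n - 1) * p;
	i_lo = Floor( idx );
	i_hi = Ceiling( idx );
	h = idx - i_lo;
	result = (1 - h) * xs[i_lo] + h * xs[i_hi]
);

x = [5, 1, 4, 2, 3];   // small column vector as test input
p = 0.5;

percentile_expr;                    // evaluating the name runs the stored expression
Show( result );                     // median of 1..5, so 3
Show( F_percentile_fn( x, 0.5 ) );  // should agree with result
```

Because the expression shares its variables with the surrounding script, any name it assigns (`xs`, `n`, `idx`, ...) can silently overwrite a variable of the same name elsewhere, which is part of the readability and safety cost discussed in the replies.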

Re: Parallel Assign() is slow on certain devices

Oh, wow thanks! Running your script on my newer Workstation I get

Parallel: 37.0833333333139

Sequential: 19.7166666666453

Parallel_Expr: 6.93333333331975

Sequential_Expr: 12.5333333333256

Sequential_Expr_Opt: 4.54999999998836

Let us not regard the Sequential_Expr_Opt one because it's an unfair comparison. :)

You just replaced the function with an expression and I get a speedup of approximately 1.5 for the sequential and more than 5 (!!!) for the parallel version? That's a great workaround. So what do I learn from this? Never use functions in JSL? That's a bit unpleasant, because functions make code more readable and easier and safer for others to use and modify. However, I think there is still a problem somewhere behind the scenes, because my original parallel version still takes twice as long as the original sequential one, and this is not the case on Craige's virtual machine.
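The ratios quoted here follow directly from the timings listed above. As a quick check, with the reported values rounded to two decimals:

```jsl
// Timings in seconds, as reported for the newer workstation (rounded).
t_par = 37.08;       // Parallel
t_seq = 19.72;       // Sequential
t_par_expr = 6.93;   // Parallel_Expr
t_seq_expr = 12.53;  // Sequential_Expr

Show( t_seq / t_seq_expr );  // gain from the expression, sequential: about 1.57
Show( t_par / t_par_expr );  // gain from the expression, parallel: about 5.35
Show( t_par / t_seq );       // original parallel vs sequential: about 1.88 times slower
```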

Update: Here is the full scan on the new Workstation:

Re: Parallel Assign() is slow on certain devices

Hi @Robbb, two thoughts:

Programming for speed

The goal of your code can have a big effect on how you write it. For the vast majority of scripts the additional time it takes to call a function or copy a symbol doesn't matter, so yes, you should use functions and make the code easy to interpret. On the other hand, if you are working on a project that is large and will be repeated often enough that you care how long it takes, then yes, you might want to start trading some niceties for raw performance. I encourage you to only spend time optimizing things that actually matter though. Don't spend an extra hour coding something now, and then another hour a year later figuring out what you did because you made it more complex trying to speed it up, just to save users an extra 20 seconds once per day.

Parallel trade off

When deciding whether to execute in parallel you need to balance the overhead of setting up that 'child' thread against the work it is going to do. Imagine that you were going to run parallel jobs by opening another copy of JMP, opening a data table, and clicking run. If you leave it running for an hour then great, you will save a bunch of time over just letting one script do everything, but if that script is going to run for 20 seconds then you probably spent more time starting it than you saved. Yes, the overhead here is a lot smaller, but copying symbols and allocating memory does take time. Make sure the work your script will do after the environment is set up is substantial. One way to help make this happen is to break your loop up into just 4 parts and have each thread work on a quarter of the values at once. So, break your list into four parts, each with a quarter of the rows, and then have your script sequentially process each sub-list. That way you reduce the number of times a child thread is set up from one per row to just 4.
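A minimal sketch of the index arithmetic behind that suggestion, assuming 4 chunks and the 100,000 ids of the test script. It only shows how the work could be split; how each chunk is handed to a thread is not shown here and is not specified in the thread. The names `n_ids`, `n_chunks`, `id_start` and `id_end` are made up for illustration.

```jsl
n_ids = 100000;   // same size as n_different_ids in the test script
n_chunks = 4;     // assumption: one chunk per worker
chunk_size = Ceiling( n_ids / n_chunks );

For( c = 1, c <= n_chunks, c++,
	id_start = (c - 1) * chunk_size + 1;
	id_end = Min( c * chunk_size, n_ids );  // last chunk may be shorter
	// A worker would process ids id_start..id_end sequentially here.
	Show( c, id_start, id_end );
);
```

With these numbers every chunk covers 25,000 ids (1 to 25000, 25001 to 50000, and so on); the `Min()` only matters when `n_ids` is not a multiple of `n_chunks`.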

Re: Parallel Assign() is slow on certain devices

Hi @ih ,

Agreed.

The overhead of setting up a child thread does not matter much in my case, so I didn't spend much time making this as efficient as possible. This script is not meant to run for just a few minutes. Before I started this topic I did exactly what you suggested: I broke my dataset down into 8 parts and it was finished within 0.5 days. Out of interest I later tried Evan's "Sequential_Expr_Opt" version, too, and it took somewhere between 1.5 and 4 days to finish on my dataset (I started it Thursday morning; it wasn't finished Friday afternoon but was by Monday morning).

The point of this thread was to show that there is something wrong behind the scenes in general. In the past I noticed bad parallel performance in JMP every now and then, and it bothered me. I like JMP and I like trying things with it that other people say can't be done with JMP. And I am more than happy that developers are looking into it.

However I must say there are better tools to deal with really large data sets than JMP. At least at the moment. ;)
