Discussions

JMP User Community: Solve problems, and share tips and tricks with other JMP users.

Neural nets_validation sets get higher model fits than training sets

Problem: Why did my validation sets fit better than the training sets?

Data: 200 data points; 90% for training and 10% for validation

Statistical procedure:

1. Make a validation column:

```
Make Validation Column(
	Training Set( 0.90 ),
	Validation Set( 0.10 ),
	Test Set( 0.00 ),
	Formula Random
);
```

2. Fit the neural net:

One hidden layer with 3 TanH nodes

Boosting: number of models (10), learning rate (0.1)

Fitting options: Number of Tours( 10 )

3. Repeat the above steps 1000 times.

4. Calculate the mean R square for the training sets and for the validation sets.
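The four steps above can be sketched outside JMP as a repeated hold-out loop. This is only an illustration under stated assumptions: the data are synthetic, a one-predictor least-squares line stands in for the boosted neural net, and the names `fit_line`, `r_square`, and `repeated_holdout` are hypothetical helpers, not JMP functions.

```python
import random
from statistics import fmean, stdev


def fit_line(xs, ys):
    """Least-squares fit of y = intercept + slope * x (stand-in for the neural net)."""
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def r_square(xs, ys, intercept, slope):
    """1 - SSE/SST, with SST taken around the mean of the set being evaluated."""
    my = fmean(ys)
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    sst = sum((y - my) ** 2 for y in ys)
    return 1.0 - sse / sst


def repeated_holdout(xs, ys, train_frac=0.90, n_repeats=1000, seed=1):
    """Re-split the rows at random before every fit and record both R squares."""
    rng = random.Random(seed)
    n = len(xs)
    n_train = round(train_frac * n)
    r2_train, r2_val = [], []
    for _ in range(n_repeats):
        idx = list(range(n))
        rng.shuffle(idx)
        tr, va = idx[:n_train], idx[n_train:]
        tx, ty = [xs[i] for i in tr], [ys[i] for i in tr]
        vx, vy = [xs[i] for i in va], [ys[i] for i in va]
        a, b = fit_line(tx, ty)
        r2_train.append(r_square(tx, ty, a, b))
        r2_val.append(r_square(vx, vy, a, b))
    return r2_train, r2_val


# Synthetic stand-in for the 200-row table (assumed linear signal plus noise).
data_rng = random.Random(0)
xs = [data_rng.uniform(0, 10) for _ in range(200)]
ys = [2.0 + 0.5 * x + data_rng.gauss(0, 1.5) for x in xs]

r2_train, r2_val = repeated_holdout(xs, ys)
print("train: mean", fmean(r2_train), "sd", stdev(r2_train))
print("valid: mean", fmean(r2_val), "sd", stdev(r2_val))
```

With only 20 validation rows per split, the validation R square varies far more from split to split than the training R square, which is worth keeping in mind when comparing the two means.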

Description of the problem:

The mean R square for the validation sets was much higher than that for the training sets. Why would that happen? Even when we fit without a penalty method, the validation sets still fit better than the training sets.

How does JMP optimize the parameter estimates for the validation sets?

Why is this not the case with bootstrap forests? For bootstrap forests, the training sets fit better than the validation sets.

Thanks.

Accepted Solutions

Re: Neural nets_validation sets get higher model fits than training sets

I forgot to mention that K-fold cross-validation is specifically for cases with small sample sizes. Did you try this method instead of a validation hold out set?

Re: Neural nets_validation sets get higher model fits than training sets

No, the Fit Least Squares platform in JMP does not provide any cross-validation. The Generalized Regression platform in JMP Pro, which includes the standard linear regression model, provides both hold-out cross-validation in the launch dialog box and K-fold cross-validation as a platform validation option.

You might consider using AICc for model selection in place of cross-validation because of the small sample size.

You might also consider using open source software if it provides the flexibility you demand. You can connect JMP to R or Python, for example, and work with both.

Re: Neural nets_validation sets get higher model fits than training sets

You are not bothering anyone here! Questions like yours are one of the main reasons for having the Discussions area in the JMP Community! On the other hand, some discussions might be beyond the scope or capability of this format.

NOTE: we are discussing predictive models, not explanatory models. As such, we only care about the ability of the model to predict the future. The future is unavailable while training the model and selecting a model.

Cross-validation of any kind is not the result of a theory. It is a solution to the problem of how to perform an honest assessment of the performance of models before and after selecting one model. The hold out set approach was developed first for cases of large data sets. Inference is not helpful to model selection in such cases. The future is not available. What to do? Set aside, or hold out, a portion of the data (validation). These data are not available to the training procedure. Then apply the fitted model to the hold out set to obtain model predictions. How did the model do? How do other models do?

Since the validation data was used to select the model, some modelers hold out a third portion (test) to evaluate the selected model.

Hold-out cross-validation is a very good solution but relies on a large original set of observations. What to do when the sample size is small? The K-fold approach was developed specifically for such cases. Some modelers take it to the extreme and set K to the sample size. This version is known as 'leave one out.' K-fold cross-validation works better than hold-out sets in such cases because it uses the data more efficiently.
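To make the contrast with a single hold-out split concrete, here is a minimal sketch of how K-fold partitions the rows. It is not JMP's implementation; `k_fold_indices` is a hypothetical helper, and the 200-row size is taken from the original question.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, val_idx) pairs; every row is held out exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k disjoint folds covering all rows
    for i, val in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, val


# 10-fold on 200 rows: ten different 180/20 partitions instead of one.
splits = list(k_fold_indices(200, 10))
print(len(splits), len(splits[0][0]), len(splits[0][1]))

# Leave-one-out is the special case K = n.
loo = list(k_fold_indices(200, 200))
print(len(loo), len(loo[0][1]))
```

Each fold has the same 180/20 sizes as a single 90%/10% hold-out split, but across the ten folds every row serves as validation data exactly once, so the assessment does not hinge on which 20 rows happened to be held out.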

Re: Neural nets_validation sets get higher model fits than training sets

You are right that this is not a typical result, although it can and does happen. I suspect, in this case, that it is predominantly the result of your initial split. A 10% validation set, particularly with such a small number of observations, means that the validation results are for a model applied to a very small number of data points. Neural networks may compound the problem, but even an OLS model will do a good job of fitting 20 data points. I believe that if you change the training/validation split to 70%/30% (and particularly if you also have more data), this anomaly will disappear.

Re: Neural nets_validation sets get higher model fits than training sets

Please confirm my understanding of your study. You re-assigned rows to the training and validation hold out sets before each of the 1000 training events?

JMP does not optimize the training for the validation hold out set. It only evaluates the validation hold out set after the training is complete.

What are the mean and standard deviation of the R square sample statistics for the training and validation hold out sets?

What is the nature of the response? Is it continuous? Is it categorical? If so, how many levels in the response?

Re: Neural nets_validation sets get higher model fits than training sets

Thank you. To further explain my study:

For each iteration I used a new split into training and validation sets.

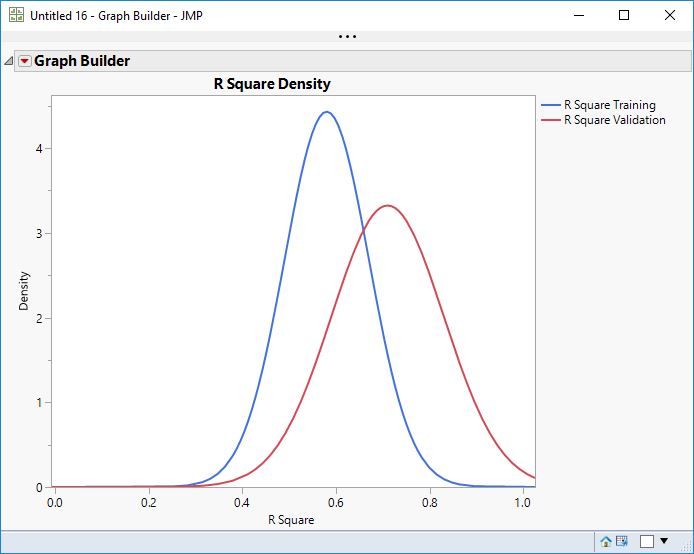

These are the results for training and validation: mean R2train = .58, SDtrain = .09; mean R2val = .71, SDval = .12

The response parameter is a continuous variable.

The pattern is the same for a 70%/30% split. So I do not really understand what happens in validation. Is there more to it than just testing the model?

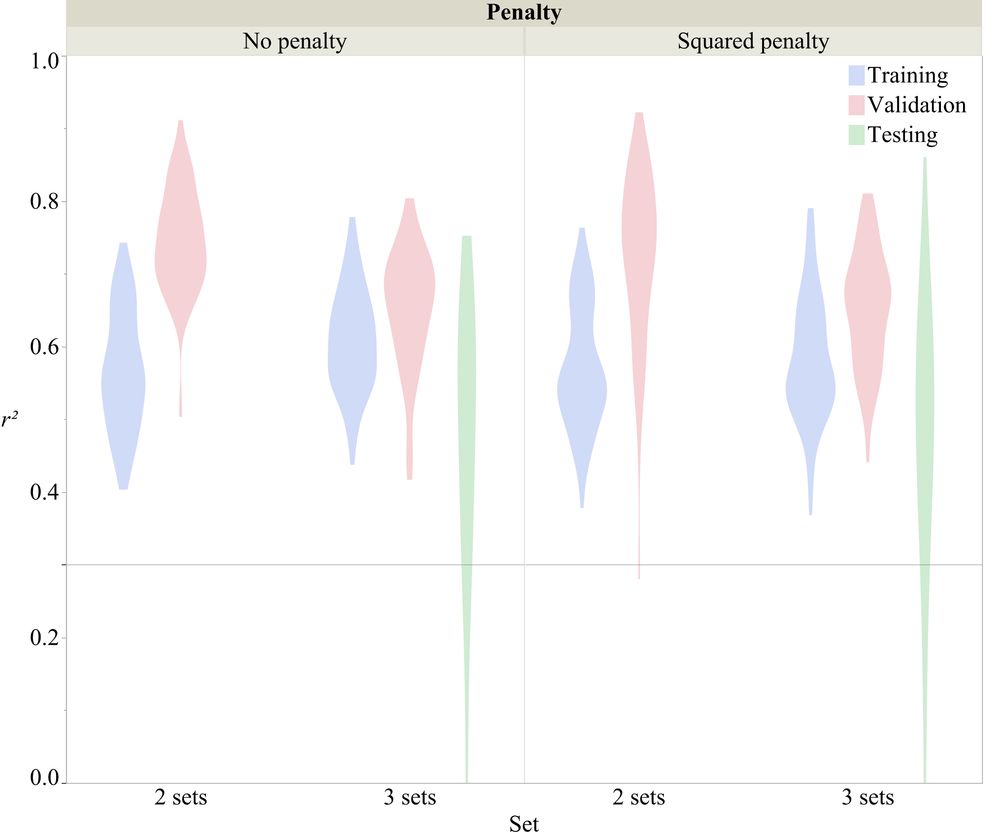

For example, when I use a three-way split (training, validation, and test), the mean R square for the validation sets is again higher than that for training, while it drops for the test sets. So what is the difference between test and validation?

Re: Neural nets_validation sets get higher model fits than training sets

How many features do you have? I am still suspicious about the small sample size. Notice that both the R-square and Std dev are larger for the validation data. I think, if you have enough features, that you can get a good "fit" to the relatively small validation set (even with 30% split) that will not carry over to the test data. Perhaps mbailey can shed some light on this - but my impression is that the validation data is used in tuning the neural net, so it is not the same as the test data which is not at all used in the modeling process. So, with few data points and enough features, you can fit the validation data very well, but with poor performance on the test data.

Re: Neural nets_validation sets get higher model fits than training sets

Thank you for your suggestions. We have seven features used as predictors. In some cases the SD is not larger for the validation sets: mean R2train = .56, SDtrain = .11; mean R2val = .70, SDval = .11.

We also tried twelve predictors. The pattern is the same: the mean R square of the validation sets over 1000 iterations was always higher than that of the training sets.

Re: Neural nets_validation sets get higher model fits than training sets

If I understand your description then I am a bit puzzled. By 1000 iterations, do you mean you ran the neural net on 1000 random splits of the data set? If so, then I think it is strange if every one of those splits resulted in better performance on the validation data than on the training data. My concerns about the sample size and number of features would account for a number (even many) of the iterations behaving that way, but probably not all 1000. If I am understanding you correctly, then it may be something particular to your data and the particular features you have. If you are willing to post the data, I might be able to help more, but I think this exhausts my thoughts based on what you have said - unless I do not understand what you have done.

Re: Neural nets_validation sets get higher model fits than training sets

I am not sure but I think that the claim is not of superiority. It is based on the difference in the mean R square between the training and the validation sets. In fact, one can't be superior because the sampling distributions overlap. Using a normal distribution to approximate the sampling distribution of R square, I get these two graphs of the normal PDF using the stated distribution parameter values:

NOTE: I know that R square is not normally distributed because of its bounds [0,1] but this case is far enough away from both boundaries for the approximation to work well enough.
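The overlap can also be put into numbers with the same normal approximation. The sketch below uses the reported means and standard deviations (.58/.09 for training, .71/.12 for validation). Treating the two R squares as independent is an assumption made only for illustration; in the actual study both values come from the same split, so they are likely correlated.

```python
from statistics import NormalDist

# Normal approximations to the two sampling distributions (values from the thread).
train = NormalDist(mu=0.58, sigma=0.09)
val = NormalDist(mu=0.71, sigma=0.12)

# Shared area under the two density curves (0 = disjoint, 1 = identical).
overlap = train.overlap(val)

# Difference of two normals; NormalDist subtraction ASSUMES independence.
diff = val - train
p_val_higher = 1.0 - diff.cdf(0.0)

print("overlap area:", round(overlap, 3))
print("difference: mean", round(diff.mean, 3), "sd", round(diff.stdev, 3))
print("P(validation R square > training R square):", round(p_val_higher, 3))
```

Under these assumptions the difference has mean 0.13 and standard deviation 0.15, so validation would come out ahead in roughly four out of five splits rather than in every split.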

Re: Neural nets_validation sets get higher model fits than training sets

Thanks for the suggestions. Yes, 1000 iterations means that we have 1000 random splits of the data set. And it is true that the R squares for the validation sets are not always bigger than those for the training sets (see the Figure). But the mean of the R squares of the validation sets is always bigger than that of the training sets for all our response parameters. I am doing the analyses with K-fold now. Let's see what happens.

As Mr. Bailey mentioned:

"The training set is used to estimate the model parameters. The validation set is used to select from the candidate models. The test set is used to assess the selected model."

I have two questions related to the above sentences:

- During training, how many candidates are prepared? How are the candidates calculated? Is this determined by the boosting (“fit an additive sequence of models scaled by the learning rate”)?

- How does the software choose the best candidate? What is the criterion for the best candidate? Is it the candidate which gives the best R Square for the validation sets?

As for the k-fold, I also have two questions:

- If we have 200 data points and we used a division of 90%/10% for the training and validation set (no overlap), then we will probably get a training set of 180 data points and a validation set of 20 data points. This is the same if we use a 10-fold validation method. Is that right? What is the difference between k-fold validation and 90%/10% validation?

- In K-fold cross-validation, again, what is the criterion for the best candidate? How is the optimized model found? Is it the same method as for the 90%/10% validation?

In the manual I read “When K-fold crossvalidation is used, the procedure described is applied to each of the K training-holdback partitions of the data for each trial value of the penalty parameter. After the model has been fit to all the folds, the validation set likelihood is summed across the folds using each of the individual fold's parameter estimates. This is the value that is optimized in the selection of the penalty parameter. The fold whose parameter estimates give the best value on the complete dataset are the parameter estimates that are kept and used by the platform. The training-holdback pair that led to the model that was the best are the pair whose model diagnostics are given in the report.” Does this mean that the model with the biggest summed likelihood is the best?
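The penalty-selection step in the quoted passage can be sketched as follows. This is a rough illustration, not JMP's algorithm: a one-predictor ridge line stands in for the neural net, the summed squared validation error stands in for the (negative) validation likelihood, the data and the penalty grid are made up, and `fit_ridge_line` and `summed_validation_loss` are hypothetical helpers. The later step of keeping one fold's parameter estimates is not shown.

```python
import random
from statistics import fmean


def fit_ridge_line(pts, lam):
    """Fit y = a + b*x with an L2 penalty lam on the slope only."""
    mx = fmean([x for x, _ in pts])
    my = fmean([y for _, y in pts])
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    b = sxy / (sxx + lam)
    return my - b * mx, b


def sse(pts, a, b):
    """Sum of squared prediction errors on a set of (x, y) rows."""
    return sum((y - (a + b * x)) ** 2 for x, y in pts)


def summed_validation_loss(data, lam, k):
    """Fit on each training partition, score its held-back fold, sum over folds."""
    folds = [data[i::k] for i in range(k)]
    total = 0.0
    for i, val in enumerate(folds):
        train = [p for m, fold in enumerate(folds) if m != i for p in fold]
        a, b = fit_ridge_line(train, lam)
        total += sse(val, a, b)
    return total


# Synthetic 200-row data set (assumed linear signal plus noise).
rng = random.Random(0)
xs = [rng.uniform(0, 10) for _ in range(200)]
data = [(x, 2.0 + 0.5 * x + rng.gauss(0, 1.5)) for x in xs]

penalties = [0.0, 1.0, 10.0, 100.0]  # illustrative trial values
losses = {lam: summed_validation_loss(data, lam, k=10) for lam in penalties}
best_lam = min(losses, key=losses.get)
print(losses)
print("selected penalty:", best_lam)
```

In this reading, the quantity summed over folds is used to compare trial values of the penalty, and the penalty with the best summed value is the one selected.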

Thank you again.

Re: Neural nets_validation sets get higher model fits than training sets

The training set is used to estimate the model parameters. The validation set is used to select from the candidate models. The test set is used to assess the selected model.

I agree with @dale_lehman that your data set is too small to use the hold-out cross-validation method. I recommend K-fold cross-validation.
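The three roles (train to estimate, validation to select, test to assess) can be illustrated with a small simulation. It is a sketch under assumptions, not a description of JMP's internals: the data are synthetic, the 160/20/20 split is chosen for illustration, and each "candidate" is a line fit to a random 15-row subsample of the training rows, loosely standing in for the different fits that separate tours might produce. `run_once` is a hypothetical helper.

```python
import random
from statistics import fmean


def fit_line(pts):
    """Least-squares fit of y = a + b*x on a list of (x, y) rows."""
    mx = fmean([x for x, _ in pts])
    my = fmean([y for _, y in pts])
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    b = sxy / sxx
    return my - b * mx, b


def r_square(pts, a, b):
    """1 - SSE/SST on the rows being evaluated."""
    my = fmean([y for _, y in pts])
    sse = sum((y - (a + b * x)) ** 2 for x, y in pts)
    sst = sum((y - my) ** 2 for _, y in pts)
    return 1.0 - sse / sst


def run_once(rng, n_candidates=10):
    """One data set: fit candidates on train, select on validation, assess on test."""
    xs = [rng.uniform(0, 10) for _ in range(200)]
    data = [(x, 2.0 + 0.5 * x + rng.gauss(0, 1.5)) for x in xs]
    train, val, test = data[:160], data[160:180], data[180:]
    candidates = [fit_line(rng.sample(train, 15)) for _ in range(n_candidates)]
    val_scores = [r_square(val, a, b) for a, b in candidates]
    best = max(range(n_candidates), key=lambda i: val_scores[i])
    test_score = r_square(test, *candidates[best])
    return val_scores, best, test_score


rng = random.Random(0)
runs = [run_once(rng) for _ in range(500)]
mean_val_selected = fmean([scores[best] for scores, best, _ in runs])
mean_test_selected = fmean([t for _, _, t in runs])
print("selected model, validation R square:", round(mean_val_selected, 3))
print("selected model, test R square:      ", round(mean_test_selected, 3))
```

Because the winning candidate is chosen for its validation score, that score is an optimistic estimate in expectation, while the test rows played no part in the choice. This is one reason a validation R square can look better than an honest assessment would suggest.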

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
