- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Discussions

Solve problems, and share tips and tricks with other JMP users.- JMP User Community

- :

- Discussions

- :

- Re: MS SQL query with timestamp / datetime / date data

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

MS SQL query with timestamp / datetime / date data

Hello everyone,

we want to save a data table to a database and later query the database (using MS SQL) to retrieve the table. Since we run into some problems transferring the timestamp to the database, we set up a workaround. First of all, the table in JMP has a "date" column named "SecondsSince1904", where the datetime is stored as elapsed seconds since the 1904 epoche (01.01.1904 00:00:00). This column is formated as "Fixed Dec (30,0)". To transfer the data table to the database, we use the following script:

Names Default To Here(1);

dt = Current Data Table();

// This will delete the table "yourTable" in the database. BE CAREFUL!!!

Open Database(

"yourDSNconnectionString",

"DROP TABLE dbo.yourTable"

);

// This will upload the table "dt = Current Data Table" to the database, where it will be saved as "yourTable".

dt << Save Database("yourDSNconnectionString",

"dbo.yourTable"

);

// After saving the table to the database, we find the column "SecondsSince1904" formatted as FLOAT.

// Now we want to manipulate the table "yourTable" and add a computed column named "timestamp", where we will display the correct timestamp,

// i. e. something like "2015-09-26 00:00:02.000".

// We do this by calculating the correct timestamp from the column "SecondsSince1904". We also add an additional column named "SecondsAfterMidnight" just for fun.

dbc = Create Database Connection(

"yourDSNconnectionString"

);

Execute SQL(dbc,

"

ALTER TABLE dbo.yourTable ADD timestamp AS

DATEADD(ss, (CAST(yourTable.SecondsSince1904 AS BIGINT)%86400),

DATEADD(dd, (CAST(yourTable.SecondsSince1904 AS BIGINT)/86400), '1904-01-01' ) );

ALTER TABLE dbo.yourTable ADD SecondsAfterMidnight AS

(CAST(yourTable.SecondsSince1904 AS BIGINT)%86400)

"

);

Close Database Connection(dbc);

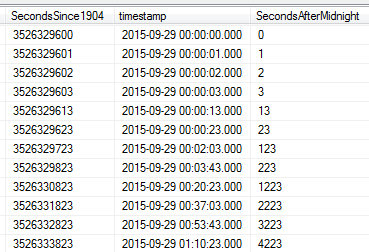

So far so good. No problem up to this point. The "SecondsAfter1904" are correctly transferred to the database and the timestamp is also calculated correctly. Have a look in the database:

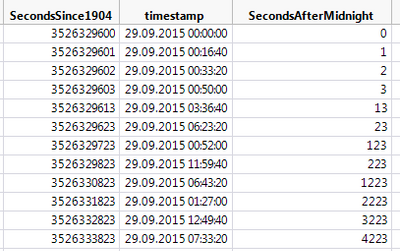

Now we query the database and retrieve the table back into JMP by going to File > Database > Open Table. And this is the point where things get messed up. Have a look in JMP:

The timestamp is corrupt. Some error happend. Maybe a bitshift or something like that. To draw conclusions, i incremented the SecondsSince1904 "bitwise".

Does anyone know what happened here? Is this a bug? Any help is appreciated.

Cheers,

Victor

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

So, finally it turned out, that there must be a conversion issue within the database when a query is started. I try to explain this:

Let´s assume that some timestamps are stored in the "datetime" format in a database. When i then run a query, the timestamps become erroneous upon transfer, like in the example above. The same is happening when the timestamps are stored in the "datetime2" format, which is by default "datetime2(7)".

However, if i cast a column in the database from, let´s say "datetime2(7)" to "smalldatetime", i get the same error. Therefore i speculate, that the issue is not within JMP, but within the database. I think, that JMP is sending some CAST or CONVERT command to the column with the timestamps before downloading them. And i guess that this is the moment where the problem is occuring.

To overcome this is issue, it is possible to CONVERT the column with the timestamps to the "datetime2(0)" format. This is the format JMP reads properly.

Here is the complete code:

Names Default To Here(1);

dt = Current Data Table();

dt << Save Database("yourDSNconnectionString",

"dbo.yourTable"

);

dbc = Create Database Connection(

"yourDSNconnectionString"

);

Execute SQL(dbc, // Here we calculate the correct timestamp/date from the Macintosh or JMP epoche (01.01.1904 00:00:00)

"

ALTER TABLE dbo.yourTable ADD timestamp AS

DATEADD(ss, (CAST(yourTable.SecondsSince1904 AS BIGINT)%86400),

DATEADD(dd, (CAST(yourTable.SecondsSince1904 AS BIGINT)/86400), '1904-01-01 00:00:00' ) );

"

);

Execute SQL(dbc,

"

ALTER TABLE dbo.yourTable ADD Date datetime2(0);

"

);

Execute SQL(dbc,

"

UPDATE dbo.yourTable

SET Date = CONVERT(datetime2(0), timestamp);

"

);

Execute SQL(dbc,

"

ALTER TABLE dbo.yourTable DROP COLUMN timestamp, SecondsSince1904

"

);

Close Database Connection(dbc);

Maybe this is helpful for somebody

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

I take a slightly different approach to dates/datetimes when reading from a database. I convert them to a character string, and then once I'm in JMP I convert back to date or datetime. That way I know it will be correct. I'm using to_char in Oracle. I see that SQL Server uses CONVERT or CAST to do something similar. I have functions in JMP that convert my string dates to JMP dates.

Going the other way (JMP to SQL Server) I'm not sure why you're having that problem. Is the default date format in SQL Server something worth investigating?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

Well, doing like you suggested (having the dates in the database as a string) was one of the options i considered. But i thought it would be convenient to have the dates in the database stored in the datetime format. However, if i save the JMP table to the database, the dates are stored as a string formatted in char. So i don´t need to CONVERT from datetime to char and i don´t know why the dates are not stored in the datetime format in the database by default. That´s why i wrote the above script...

I guess that i will also end up with your solution, i. e. having the dates in the database as strings formatted in char. But that´s a little disapointing to me.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

You miss understand. Save the date in the database as a DateTime format. What PMroz is suggesting is to convert everything to date strings before sending them to a differently platform. This is a handy tip when dealing with different epochs.

You are having issues going from JMP to SQL Server b/c you drop the data table. You should just clear it with "TRUNCATE TABLE table_name". There isn't a date/time column in JMP, they are only numeric columns that are formatted as datetime. As such, when you use << Save Database, JMP just creates a numeric column since it's oblivious to time. However, if you already have a SQL Table with a column formatted as a datetime and insert into it, then you should be fine.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

Thank you msharp. I will try your suggestion with the TRUNCATE command and INSERT INTO an exisiting table. But i currently don´t know how to specify correctly from which data table in JMP the data should be taken. Maybe your helpful contribution in this thread will lead me to a solution...

However, i was able to solve the original problem by using the datetime2(0) in the database, as i described below.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

This is conjecture, but it looks like every Second is adding 1000 seconds. Then after 86.4 seconds it resets to 0. It seems to be an issue with the way the formula is interacting JMP when pulled.

Are you using the JMP epoch when you insert into SQL? SQL is 1-1-1900. Might be the reason you're having trouble inserting into SQL correctly.

diff = num("1-1-1904") - num ("1-1-1900");

time = (num("7-14-1914 14:14:14") + diff)/86400;

//In SQL

db = Create database connection("DRIVER=SQL SERVER;SERVER=BLAH\SQLEXPRESS;Database=Test_DB");

Execute SQL(db,

"SELECT CAST("||char(time)||" AS DATETIME) AS 'timestamp'"

);

close database connection(db);

This returns the right value for me.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

Although conjecture, i think you are correct. It really seems like every second adds 1000 seconds. Also the reset is happening somewhere between 23 and 123 seconds. I could imagine, that the issue is that the datetime format in SQL features milli, micro and nanoseconds (dd.mm.yyyy hh:mm:ss.mmmmmmn). Continuing the speculation, it could be, that up on import from the database to JMP, JMP thinks that 1000 milliseconds is 1000 seconds instead of 1 second.

I played with a few formats and styles but couldn´t teach JMP how to import the table from the database correctly. Here is my latest code, which assures that the timestamp format in the database is the same as in JMP. The format is called "smalldatetime" and looks like "dd.mm.yyyy hh:mm:ss".

...

Execute SQL(dbc,

"

ALTER TABLE dbo.berstTry01 ADD timestamp AS

DATEADD(ss, (CAST(berstTry01.SecondsSince1904 AS BIGINT)%86400), CAST(

DATEADD(dd, (CAST(berstTry01.SecondsSince1904 AS BIGINT)/86400), '1904-01-01 00:00:00' ) AS smalldatetime) ) ;

ALTER TABLE dbo.berstTry01 ADD SecondsAfterMidnight AS

(CAST(berstTry01.SecondsSince1904 AS BIGINT)%86400);

"

);

...

Unfortunately this also didn´t work. And right now i have no more clue what to do to make it work

The formula applied in the database is actually not interacting with JMP at all. And the calculation is obviously correct, as can be inferred from the first picture. What is happening is, that an INTEGER is transferred to the database and the values of the INTEGER happens to be the elapsed seconds since the "JMP epoche" (seconds since 1904).

If i run your example, i get the correct date, but a wrong time (18:07:17 instead of 14:14:14)...

The more i investigate this issue, the more i have the feeling this is actually a bug. I am running JMP 12.2.0.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Get Direct Link

- Report Inappropriate Content

Re: MS SQL query with timestamp / datetime / date data

So, finally it turned out, that there must be a conversion issue within the database when a query is started. I try to explain this:

Let´s assume that some timestamps are stored in the "datetime" format in a database. When i then run a query, the timestamps become erroneous upon transfer, like in the example above. The same is happening when the timestamps are stored in the "datetime2" format, which is by default "datetime2(7)".

However, if i cast a column in the database from, let´s say "datetime2(7)" to "smalldatetime", i get the same error. Therefore i speculate, that the issue is not within JMP, but within the database. I think, that JMP is sending some CAST or CONVERT command to the column with the timestamps before downloading them. And i guess that this is the moment where the problem is occuring.

To overcome this is issue, it is possible to CONVERT the column with the timestamps to the "datetime2(0)" format. This is the format JMP reads properly.

Here is the complete code:

Names Default To Here(1);

dt = Current Data Table();

dt << Save Database("yourDSNconnectionString",

"dbo.yourTable"

);

dbc = Create Database Connection(

"yourDSNconnectionString"

);

Execute SQL(dbc, // Here we calculate the correct timestamp/date from the Macintosh or JMP epoche (01.01.1904 00:00:00)

"

ALTER TABLE dbo.yourTable ADD timestamp AS

DATEADD(ss, (CAST(yourTable.SecondsSince1904 AS BIGINT)%86400),

DATEADD(dd, (CAST(yourTable.SecondsSince1904 AS BIGINT)/86400), '1904-01-01 00:00:00' ) );

"

);

Execute SQL(dbc,

"

ALTER TABLE dbo.yourTable ADD Date datetime2(0);

"

);

Execute SQL(dbc,

"

UPDATE dbo.yourTable

SET Date = CONVERT(datetime2(0), timestamp);

"

);

Execute SQL(dbc,

"

ALTER TABLE dbo.yourTable DROP COLUMN timestamp, SecondsSince1904

"

);

Close Database Connection(dbc);

Maybe this is helpful for somebody

Recommended Articles

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.

- Terms of Use

- Privacy Statement

- Contact Us