
Discussions

Solve problems, and share tips and tricks with other JMP users. (JMP User Community)


If we see "Training" and "Validation" results in the output even when we apply the model separately to the test data and to the training data in JMP Pro, what should we do to obtain results for only the test and training data?


Re: If we are seeing "Training" and "Validation" results in the output even when we are applying the model to the test data and training data separately in JMP pro. then what to do in order to obtain the results of only testing and training data

You do not provide many details, so I will make some guesses. My first guess is that you are fitting a neural network. The Neural platform requires a validation set, so even if your table contains only training data, a validation set will be created (for example, by holding back part of the rows). You should have seen those validation options before requesting a fit.

My second guess is that you have two tables: one with training data and another with validation data. If that is the case, combine them into a single table with a column indicating whether each row belongs to training or validation. When you fit the model, put that indicator column into the Validation field of the model launch dialog. The output will then report statistics for the two sets, as you want.
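The logic of the combined table can be sketched in plain Python. This is not JMP or JSL code; in JMP you would do this with the table tools. The sketch assumes each table is a list of row dictionaries, and the indicator column name `Validation`, coded 0 = training and 1 = validation, is an illustrative assumption.

```python
# Plain-Python sketch, not JSL: each "table" is a list of dicts (one per row).
# The indicator column name "Validation" and the 0/1 coding are assumptions
# chosen to mirror a JMP validation column (0 = Training, 1 = Validation).

def combine_with_indicator(training_rows, validation_rows, indicator="Validation"):
    """Stack two tables and tag each row with the set it came from."""
    combined = []
    for code, rows in ((0, training_rows), (1, validation_rows)):
        for row in rows:
            tagged = dict(row)        # copy so the input tables are not modified
            tagged[indicator] = code  # 0 for training rows, 1 for validation rows
            combined.append(tagged)
    return combined


train = [{"x": 1.0, "y": 2.1}, {"x": 2.0, "y": 3.9}]
valid = [{"x": 3.0, "y": 6.2}]
table = combine_with_indicator(train, valid)
for row in table:
    print(row)
```

Copying each row keeps the original tables untouched, which matches the idea of building a new combined table rather than editing the two source tables.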

If my guesses are wrong, please provide more details so we can help.


Hi @MathBayesWhale4,

Welcome to the Community!

To help other members of this forum answer your question quickly and precisely, it's a good idea to provide as much context as possible: the platforms and JMP version you use, screenshots of the situation you encounter, and, if possible, a small (anonymized or JMP sample) dataset that reproduces the situation you're facing.

You can read more about how to frame your question in the post from @jthi: Getting correct answers to correct questions quickly

Coming back to your question, here are some reminders about the terms used, so that the role of each set is clear:

- Training set: used for the actual training of the model(s).

- Validation set: used for model optimization (for example, hyperparameter fine-tuning and feature or threshold selection) and for model selection.

- Test set: used to assess the generalization and predictive performance of the selected model on new, unseen data.

It is extremely rare (if not unheard of) not to use a validation strategy when you are in a predictive mindset and using a machine learning algorithm. You need some data to tune the learning (through hyperparameter settings, feature selection, etc.) and/or to compare the candidate models and select one (if you have trained several). There are many validation strategies available, but I won't dive deeper into this topic, as it doesn't seem directly related to your question. If needed, you can read this answer: Solved: Re: CROSS VALIDATION - VALIDATION COLUMN METHOD - JMP User Community
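To make the roles of the three sets concrete, here is a small sketch in plain Python (not JMP). It assumes a simple 1-D k-nearest-neighbours regressor on simulated data, with the number of neighbours `k` as the only hyperparameter; none of this comes from the thread. It only illustrates why the validation set is used to tune and select, while the test set is kept aside for the final assessment.

```python
# Plain-Python sketch of the training / validation / test workflow, not JMP code.
# Assumptions: a 1-D k-nearest-neighbours regressor stands in for "the model",
# k is its only hyperparameter, and the data are simulated.
import math
import random


def knn_predict(train, x, k):
    """Average the y-values of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def rmse(train, data, k):
    """Root mean squared error on `data` of a k-NN model built from `train`."""
    errors = [(y - knn_predict(train, x, k)) ** 2 for x, y in data]
    return math.sqrt(sum(errors) / len(errors))


rng = random.Random(42)
data = [(x, math.sin(x) + rng.gauss(0, 0.2))
        for x in (rng.uniform(0, 6) for _ in range(90))]
training, validation, test = data[:60], data[60:75], data[75:]

# 1. Training set: every candidate model only learns from these rows.
candidates = [1, 3, 5, 9, 15]
# 2. Validation set: compare the candidates and keep the best k.
best_k = min(candidates, key=lambda k: rmse(training, validation, k))
# 3. Test set: report the chosen model's error on rows never used above.
test_rmse = rmse(training, test, best_k)
print(f"chosen k = {best_k}, test RMSE = {test_rmse:.3f}")
```

Because the test rows play no part in choosing `k`, the reported test RMSE is an honest estimate for new data. The validation RMSE of the winning `k` would be optimistically biased, since it was used for the selection.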

Regarding your question, there is very little information about the algorithm or platform you used and which version of JMP you're using (JMP or JMP Pro, version number, ...).

If @Dan_Obermiller's guesses are right and you're using the Neural platform, a validation method is required so that the weights of the neural network can be fitted to your data while limiting the risk of overfitting. Standard JMP offers some built-in validation options, but JMP Pro provides a more flexible option through a validation column: Validation Methods for Neural

- So if you have a JMP (non-Pro) licence, you could split your data into two parts: one table for training and validation, and one table for testing. Fit the Neural model on the first table, save the prediction formula columns, and copy the formulas to the test table. You can then evaluate the model fit graphically and statistically on the test table (for example, by creating a graph with Graph Builder that displays predicted vs. actual values, residuals, etc.).

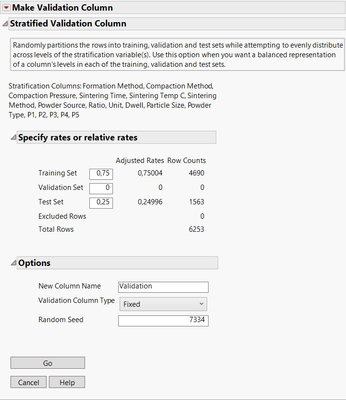
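Once the prediction formula has been copied to the test table, comparing predicted and actual values comes down to a few statistics. The plain-Python sketch below (not JMP code) computes residuals, RMSE, and R² using one common definition (1 - SSE/SST, with the test-set mean). The numbers are made up for illustration.

```python
# Plain-Python sketch, not JSL: fit statistics on a test table once each row
# has an actual value and a predicted value from the saved formula.
# The values below are made up for illustration only.
import math


def fit_statistics(actual, predicted):
    """Return residuals, RMSE, and R-squared for paired actual/predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    residuals = [a - p for a, p in zip(actual, predicted)]
    sse = sum(r * r for r in residuals)                   # sum of squared errors
    mean_actual = sum(actual) / len(actual)
    sst = sum((a - mean_actual) ** 2 for a in actual)     # total sum of squares
    rmse = math.sqrt(sse / len(actual))
    r_squared = 1 - sse / sst if sst > 0 else float("nan")
    return residuals, rmse, r_squared


actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 7.0, 9.0]
residuals, rmse, r2 = fit_statistics(actual, predicted)
print(residuals, round(rmse, 4), round(r2, 4))  # [0.5, -0.5, 0.0, 0.0] 0.3536 0.975
```

The residuals list is what you would plot against the predicted values in Graph Builder to look for structure that the model missed.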

You could also keep all the data in one table and use "Hide and Exclude" on the rows reserved for the test set, so that the Neural platform doesn't use them for training or validation. Saving the prediction formula to the table will also give you predicted values for the hidden and excluded rows.

- If you have JMP Pro, you can create a validation column: a column containing the values 0, 1, and 2 with the corresponding labels "Training", "Validation", and "Test". If for any reason you don't want a validation set, you can also set up only training and test sets.
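What such a validation column contains can be sketched in plain Python (not JSL). The function name `make_validation_column`, the default 60/20/20 proportions, and the fixed seed are illustrative assumptions. In JMP Pro you would create the column with JMP's own tools.

```python
# Plain-Python sketch, not JSL: one code per row, 0 = Training,
# 1 = Validation, 2 = Test. Proportions and seed are illustrative assumptions.
import random

LABELS = {0: "Training", 1: "Validation", 2: "Test"}


def make_validation_column(n_rows, proportions=(0.6, 0.2, 0.2), seed=1):
    """Randomly assign each row a code 0, 1 or 2 in roughly the given proportions.

    proportions = (training, validation, test). Set the validation share to 0
    to get a Training/Test split only.
    """
    if abs(sum(proportions) - 1.0) > 1e-9:
        raise ValueError("proportions must sum to 1")
    n_train = round(proportions[0] * n_rows)
    n_valid = min(round(proportions[1] * n_rows), n_rows - n_train)
    n_test = n_rows - n_train - n_valid  # any rounding remainder goes to Test
    codes = [0] * n_train + [1] * n_valid + [2] * n_test
    random.Random(seed).shuffle(codes)   # random order, reproducible via the seed
    return codes


codes = make_validation_column(10)
print([LABELS[c] for c in codes])
print(codes.count(0), codes.count(1), codes.count(2))  # 6 2 2

train_test = make_validation_column(10, proportions=(0.7, 0.0, 0.3))
print(sorted(set(train_test)))  # [0, 2]
```

Fixing the counts first and then shuffling guarantees the requested split sizes exactly, whereas drawing a random code independently for each row would only match them on average.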

I hope this additional answer helps,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
