
Discussions

Solve problems, and share tips and tricks with other JMP users. - JMP User Community


Difference between nodes in same layer of neural network?

I am currently wrapping up the final practices of 7.3 Neural Networks in the Predictive Modeling and Text Mining module of the JMP STIPS course. Fitting a neural network, and understanding the basic function of a hidden-layer node as a regression model, seems intuitively easy. Still, I have some questions left after this very basic training, and I hope someone in the community with more insight can answer them. For the example below, I used the file GreenPolymerProduction.jmp and the standard version of JMP. A copy of the file, including the model, is attached.

The diagram of the model is shown below. We can see that data for each of the eight predictors is fed into each of the three nodes in the hidden layer. Each node in the layer uses a TanH activation function.

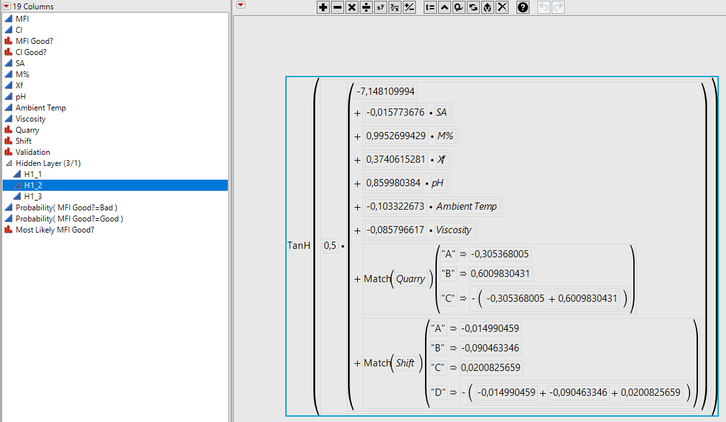
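A minimal sketch of what each hidden node computes, assuming the usual form of a TanH node: a bias plus a weighted sum of all inputs, passed through tanh. The eight predictor values, the weights, the biases, and the name `hidden_layer` are hypothetical placeholders, not values taken from the GreenPolymerProduction.jmp model. All three nodes receive the same row of inputs, yet each returns a different value because each node has its own weight row and bias.

```python
import math

def hidden_layer(x, weights, biases):
    """Return the TanH output of every hidden node for one row of inputs x."""
    outputs = []
    for w_row, b in zip(weights, biases):
        # Node-specific linear combination of the SAME inputs x.
        z = b + sum(w_i * x_i for w_i, x_i in zip(w_row, x))
        outputs.append(math.tanh(z))
    return outputs

# One row with eight hypothetical (already scaled) predictor values.
x = [0.2, -1.0, 0.5, 0.0, 1.3, -0.4, 0.8, 0.1]

# Hypothetical parameters: one row of eight weights and one bias per hidden node.
weights = [
    [0.3, -0.2, 0.1, 0.0, 0.5, -0.1, 0.2, 0.4],
    [-0.6, 0.4, 0.0, 0.3, -0.2, 0.7, -0.1, 0.0],
    [0.1, 0.1, -0.5, 0.2, 0.0, 0.3, 0.6, -0.3],
]
biases = [0.05, -0.2, 0.1]

H = hidden_layer(x, weights, biases)
print(H)  # three different values from the same input row
```

Writing each node's weighted sum out symbolically gives three formulas that share the TanH shape but differ in their coefficients, which matches the pattern of three different node formulas.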

I've saved the formulas for the model to the table. The screenshots of the formulas used in each of the three nodes are shown below.

Question #1: How should I interpret the fact that each node has a different formula for the same activation function? Intuitively, it seems naive to expect every node to use the same formula, but I still wonder how JMP arrives at the results shown below. One interpretation I considered is that each node receives different input data, which would explain the different formulas shown below, but this assumption may be grossly wrong.
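One generic reason nodes in the same layer end up with different formulas is that training typically starts from different (usually random) starting weights. If two nodes started with identical weights, gradient-based training would give them identical updates, and they would stay identical forever. The sketch below illustrates this with plain stochastic gradient descent on a toy network (one input, two TanH nodes, linear output) fitted to sin(x). It is a hypothetical stand-in, not JMP's actual fitting algorithm; the names `forward`, `train`, and `rand_pair` and all parameter values are illustrative assumptions.

```python
import math
import random

def forward(p, x):
    # Two TanH hidden nodes fed the same single input x, then a linear output node.
    h = [math.tanh(p["w"][j] * x + p["b"][j]) for j in range(2)]
    y = p["c0"] + sum(p["c"][j] * h[j] for j in range(2))
    return h, y

def train(p, data, lr=0.02, epochs=300):
    # Plain stochastic gradient descent on squared error (hypothetical, not JMP's optimizer).
    for _ in range(epochs):
        for x, t in data:
            h, y = forward(p, x)
            err = y - t  # derivative of 0.5 * (y - t)**2 with respect to y
            for j in range(2):
                # Gradient reaching node j's linear part; d tanh(z)/dz = 1 - tanh(z)**2.
                # Computed before c[j] is updated so it uses the current output weight.
                dz = err * p["c"][j] * (1 - h[j] ** 2)
                p["c"][j] -= lr * err * h[j]
                p["w"][j] -= lr * dz * x
                p["b"][j] -= lr * dz
            p["c0"] -= lr * err
    return p

def rand_pair():
    # Two independent random starting values, one per hidden node.
    return [random.uniform(-1, 1), random.uniform(-1, 1)]

data = [(i / 10, math.sin(i / 10)) for i in range(-20, 21)]

# Identical start: both nodes get identical updates and never separate.
same = train({"w": [0.5, 0.5], "b": [0.1, 0.1], "c": [0.3, 0.3], "c0": 0.0}, data)

# Random start breaks the symmetry, so the nodes end up with different formulas.
random.seed(1)
diff = train({"w": rand_pair(), "b": rand_pair(), "c": rand_pair(), "c0": 0.0}, data)

print("identical start:", same["w"], same["b"])
print("random start:   ", diff["w"], diff["b"])
```

With the identical start, the two nodes remain exact copies of each other, so the network effectively has only one useful node; with random starting values, each node can specialize in a different part of the fit.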

Question #2: Can anyone share good references for beginners reading up on neural networks (preferably with phenomenological explanations)?

Accepted Solutions


Re: Difference between nodes in same layer of neural network?

Hi @Ressel,

Happy New Year to you!

Concerning your questions:

- The Universal Approximation Theorem (https://en.m.wikipedia.org/wiki/Universal_approximation_theorem) states that a neural network with enough hidden neurons can approximate any continuous function, linear or non-linear, to arbitrary accuracy over a bounded input range (in principle, even a single hidden layer is sufficient). Neural networks can approximate such a wide variety of functions/profiles because they rely on a weighted combination of many simple mathematical functions (TanH, sigmoid, ...). Each node contributes a useful piece of the puzzle (the function the network is trying to learn): the same data comes into every node, but each node's own weights turn it into a different piece, and these pieces are combined to produce the desired output.

In your screenshots, each of the three TanH nodes applies its own weights to the inputs (hence the different formulas), and the three node outputs are then combined with different weights to produce the final prediction formula.
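To make the "pieces of the puzzle" idea concrete, here is a minimal sketch assuming a single input and hand-picked (hypothetical) weights rather than fitted ones; `predict` and the `(w, b, c)` node tuples are illustrative names, not JMP's formula syntax. With one input, a single TanH node scaled by an output weight can only produce a monotonic S-shaped step. Two steps with opposite output weights, however, combine into a bump that neither node can produce alone. Adding more nodes adds more such pieces, which is the intuition behind the Universal Approximation Theorem.

```python
import math

def predict(x, nodes, c0=0.0):
    """Output node: c0 plus a weighted sum of TanH hidden nodes, each given as (w, b, c)."""
    return c0 + sum(c * math.tanh(w * x + b) for w, b, c in nodes)

# Hypothetical hand-picked weights: a rising step at x = -1 and a falling step at x = +1.
nodes = [
    (5.0, 5.0, 0.5),    # 0.5 * tanh(5 * (x + 1))
    (5.0, -5.0, -0.5),  # -0.5 * tanh(5 * (x - 1))
]

for x in [-3, -1, 0, 1, 3]:
    print(f"x = {x:+d}  prediction = {predict(x, nodes):.4f}")
```

The printed predictions are close to 0 far from the origin, about 0.5 at x = -1 and x = +1, and close to 1 at x = 0: a bump assembled from two steps.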

About the resources:

- This website explains in great detail an example of reconstructing a function with neural networks: http://neuralnetworksanddeeplearning.com/chap4.html#:~:text=No%20matter%20what%20the%20function,m)%2...

- I also really like the YouTube channel StatQuest, where Josh Starmer gives a really good explanation of the inner workings of a neural network on a simple use case: https://youtu.be/CqOfi41LfDw?si=leA0KeTcgSY3YW70

- There is also a dedicated learning page on neural networks by the MLU Explain team, really well done and easy to follow: https://mlu-explain.github.io/neural-networks/

- Finally, you can try different neural network configurations on various classification/regression use cases in this interactive neural network playground, to see how the weights are trained and fitted: https://playground.tensorflow.org/#activation=tanh&batchSize=10&dataset=circle&regDataset=reg-plane&...

I hope this first answer will help you :)

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)


Re: Difference between nodes in same layer of neural network?

Cheers @Victor_G, I have no doubt that this will improve my understanding. I appreciate it.


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
