
Discussions

Solve problems, and share tips and tricks with other JMP users. (JMP User Community)


Decision Tree question

Hi,

I created a simple DOE table (attached) and tried to build a decision tree, but JMP does not produce the result I expected.

Any input is appreciated.

Thanks,

Long

Accepted Solutions


Re: Decision Tree question

Hi @LGH,

Welcome to the Community!

I recommend reading the post from @jthi, Getting correct answers to correct questions quickly, before posting in the Forum: you give no explanation of the result you expected, nor of the one you obtained from the Decision Tree.

If your dataset comes from a designed experiment (DoE), why not use the least squares regression model that JMP provides by default?

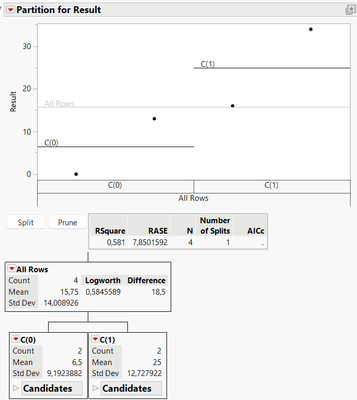
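For readers outside JMP, the least squares idea can be sketched in a few lines of Python. This is a minimal illustration, not JMP's implementation, and it assumes a hypothetical coded (-1/+1) two-level full factorial in three factors A, B, C with a made-up response driven only by C. Because the coded columns of a full factorial are orthogonal, each least squares main-effect coefficient reduces to the mean of x_j * y.

```python
from itertools import product


def main_effects_fit(design, y):
    """Least squares intercept and main-effect coefficients for a coded
    (-1/+1) two-level full factorial. The coded columns are orthogonal,
    so X'X = n*I and each coefficient is simply mean(x_j * y)."""
    n = len(y)
    intercept = sum(y) / n
    k = len(design[0])
    coefs = [sum(row[j] * yi for row, yi in zip(design, y)) / n for j in range(k)]
    return intercept, coefs


# Hypothetical 2^3 full factorial in factors A, B, C (coded -1/+1).
design = [list(run) for run in product([-1, 1], repeat=3)]
# Hypothetical response that depends only on factor C: y = 10 + 3*C.
y = [10 + 3 * run[2] for run in design]

intercept, (a, b, c) = main_effects_fit(design, y)
print(intercept, a, b, c)  # 10.0 0.0 0.0 3.0
```

With a non-orthogonal design (missing runs, extra center points with interactions, etc.) the shortcut no longer holds and a full least squares solve is needed, which is what the Fit Model platform does.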

Is your problem that the sample size is too small, which prevents the Decision Tree from splitting the data (so no results, because no split is possible when using the Decision Tree "as is")? If so, click the red triangle next to Decision Tree to open the options, and change Minimum Size Split (5 by default) to a value that allows the tree to grow in your case (2 could work and gives you one split):

The default value of this Decision Tree parameter is chosen to avoid overfitting. A tree that splits the samples perfectly according to the variables gives a "perfect" model whose leaves contain very few samples, but that model is practically useless and non-robust when used to predict new, unseen samples (a test dataset). In practice, to use Machine Learning models like this one, you need a validation strategy with training, validation, and test sets. Some methods avoid setting aside too much of your dataset from model training (such as k-fold cross-validation or leave-one-out), but in the end you still need a test set to confirm that prediction performance on the test set is consistent with the training/validation sets. You can read some discussions on this topic in the forum: CROSS VALIDATION - VALIDATION COLUMN METHOD / How to perform k-fold cross-validation on ML models (e.g. neural network and random forest) and chec... / ...
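To see why a minimum split size can block any split on a small table, here is a toy Python sketch of a single regression-tree split search. It is not JMP's Partition algorithm; it assumes that Minimum Size Split means each child node must keep at least that many rows, and it uses a hypothetical 4-row table (`c_levels`, `response`) rather than the attached data.

```python
def sse(values):
    """Sum of squared deviations from the mean of a node."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split(x, y, min_size):
    """Return (threshold, total_sse) for the best binary split x <= t,
    or None if no split leaves at least min_size rows in both children."""
    best = None
    for t in sorted(set(x))[:-1]:  # candidate thresholds between distinct levels
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        if len(left) < min_size or len(right) < min_size:
            continue  # split rejected: a child node would be too small
        total = sse(left) + sse(right)
        if best is None or total < best[1]:
            best = (t, total)
    return best


# Hypothetical 4-run table: coded factor C and a response.
c_levels = [-1, -1, 1, 1]
response = [7.0, 7.5, 13.0, 12.5]

print(best_split(c_levels, response, 5))  # None: 4 rows cannot yield two children of 5
print(best_split(c_levels, response, 2))  # (-1, 0.25): one split on C
```

Lowering `min_size` toward 1 lets a tree isolate individual rows, which is exactly the overfitting risk the default guards against.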

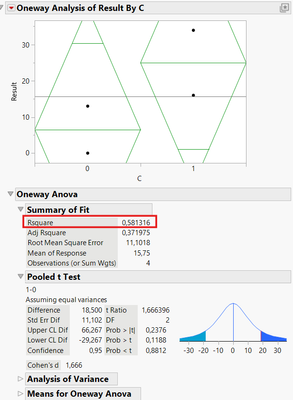
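The validation strategy described above (hold out a test set, then rotate validation folds over the remaining rows) can be sketched in plain Python. This is a minimal illustration with hypothetical helper names (`train_test_split`, `k_fold`) and a hypothetical 20-row table; in JMP itself the equivalent is usually set up with a validation column.

```python
import random


def train_test_split(n_rows, test_fraction, seed=0):
    """Shuffle row indices and hold out a fraction as the final test set."""
    rng = random.Random(seed)
    idx = list(range(n_rows))
    rng.shuffle(idx)
    n_test = round(n_rows * test_fraction)
    return idx[n_test:], idx[:n_test]


def k_fold(indices, k):
    """Yield (train, validation) index lists; every index lands in
    exactly one validation fold. k = len(indices) gives leave-one-out."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


# Hypothetical 20-row table: 20% test set, 4-fold CV on the rest.
train_idx, test_idx = train_test_split(20, 0.2, seed=1)
for train, valid in k_fold(train_idx, 4):
    print(len(train), len(valid))  # 12 4 for each fold
```

The test rows never enter the cross-validation loop, so they can still be used at the end to check that test performance is consistent with the training/validation results.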

Note that in this particular case, because of the small dataset and the linear relationship between the results and factor C, you would get the same results with a linear regression model or with the Fit Y by X platform.

Hope this answer gives you some ideas,
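Why the two approaches agree can be checked with a short Python sketch, assuming (hypothetically) that C is a two-level factor and reusing a made-up 4-row table. A single tree split on C predicts the mean of each group, and a least squares line through two x levels passes through those same two group means, so the fitted values coincide.

```python
def least_squares_line(x, y):
    """Ordinary least squares intercept and slope for y = b0 + b1*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope


def split_means(x, y, threshold):
    """Leaf predictions of a one-split tree: the mean response on each side."""
    left = [b for a, b in zip(x, y) if a <= threshold]
    right = [b for a, b in zip(x, y) if a > threshold]
    return sum(left) / len(left), sum(right) / len(right)


# Hypothetical two-level factor C and response.
c_levels = [-1, -1, 1, 1]
response = [7.0, 7.5, 13.0, 12.5]

b0, b1 = least_squares_line(c_levels, response)
print(b0 + b1 * -1, b0 + b1 * 1)              # 7.25 12.75 (regression fit)
print(split_means(c_levels, response, -1))    # (7.25, 12.75) (tree leaves)
```

With three or more levels of C, or a nonlinear response, the tree and the regression would generally give different predictions.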

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)


Re: Decision Tree question

Hi Victor,

Thank you very much for your reply. Yes, my problem was that the tree did not split because of the small sample size. Your instructions worked.

Regards,

LGH


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
