
How to perform k-fold cross-validation on ML models (e.g. neural network and random forest) and check model performance on fixed test data?

I have a classification dataset on which I want to fit a neural network and a random forest model. I am using k-fold cross-validation to select the best model.

I also have a separate, fixed test dataset on which I want to evaluate model performance. How can I pass that data to JMP and get a classification report?

Accepted Solutions


Re: How to perform k-fold cross-validation on ML models (e.g. neural network and random forest) and check model performance on fixed test data?

Hi @abhinavsharma91,

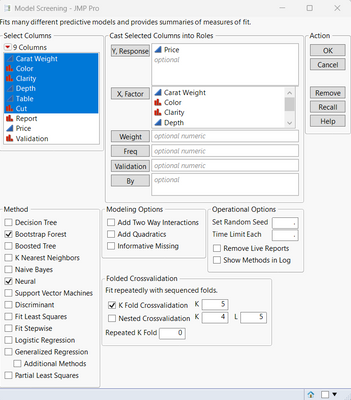

In order to use K-folds crossvalidation on several Machine Learning models, you can use the platform "Model Screening" (accessible in "Analyze", "Predictive Modeling", "Model Screening"), and select which algorithms you would like to try (in your example Neural Networks and Random Forest), and the number of folds used for crossvalidation (example here on toy dataset "Diamonds Data" with a 5-folds crossvalidation):
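For readers less familiar with what k-fold cross-validation does to the rows, here is a minimal conceptual sketch in plain Python. It is not how JMP implements it internally; the function names `k_fold_indices` and `train_validation_splits`, the row count of 20, and the seed are all assumptions made for illustration. Each row ends up in the validation set exactly once, and every model is fit on the remaining k-1 folds.

```python
import random

def k_fold_indices(n_rows, k, seed=0):
    """Split row indices 0..n_rows-1 into k shuffled, near-equal folds."""
    rows = list(range(n_rows))
    random.Random(seed).shuffle(rows)
    # Fold i takes every k-th shuffled row, starting at position i.
    return [rows[i::k] for i in range(k)]

def train_validation_splits(n_rows, k, seed=0):
    """Yield (train_rows, validation_rows) once per fold."""
    folds = k_fold_indices(n_rows, k, seed)
    for i, validation in enumerate(folds):
        # Training rows are all rows from the other k-1 folds.
        train = [r for j, fold in enumerate(folds) if j != i for r in fold]
        yield train, validation

# Hypothetical example: 20 rows, 5 folds.
splits = list(train_validation_splits(n_rows=20, k=5))
for train, validation in splits:
    print(len(train), "training rows,", len(validation), "validation rows")
```

The model with the best average validation performance across the folds is the one you would then keep.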

For your test data, there are several options:

- If your data are split into two data tables (one for training, one for testing), you can run the models on your training data table with cross-validation, save the formula for the best-performing model, and then copy and paste the model's formula column into your test data table. Because the test data are never seen during training, there is no data leakage and the assessment of the model's performance on the test data is unbiased.

- You can also keep all your data in the same data table, but "hide and exclude" the rows that will be used for testing. The models then won't use your test data for training and cross-validation, but by saving the formulas you will still get predictions for the hidden and excluded rows (in the same table).
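The logic behind the second option can be sketched in plain Python. This is only a conceptual illustration under stated assumptions, not JMP's mechanism: the toy table, the `excluded` flag, and the nearest-centroid classifier (standing in for a saved prediction formula) are all hypothetical.

```python
from statistics import mean

# Hypothetical toy table: one feature, a class label, and an "excluded" flag
# that plays the role of JMP's hidden and excluded row state.
rows = [
    {"x": 1.0, "label": "A", "excluded": False},
    {"x": 1.2, "label": "A", "excluded": False},
    {"x": 3.0, "label": "B", "excluded": False},
    {"x": 3.4, "label": "B", "excluded": False},
    {"x": 0.9, "label": "A", "excluded": True},
    {"x": 3.1, "label": "B", "excluded": True},
]

def fit_centroids(train_rows):
    """Fit a toy nearest-centroid classifier: one mean of x per class."""
    labels = sorted({r["label"] for r in train_rows})
    return {lab: mean(r["x"] for r in train_rows if r["label"] == lab)
            for lab in labels}

def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids, key=lambda lab: abs(x - centroids[lab]))

# Only non-excluded rows are used for fitting.
train_rows = [r for r in rows if not r["excluded"]]
centroids = fit_centroids(train_rows)

# The fitted "formula" is applied to every row, including the excluded ones.
for r in rows:
    r["predicted"] = predict(centroids, r["x"])

# Performance is then assessed on the excluded (test) rows only.
test_rows = [r for r in rows if r["excluded"]]
test_accuracy = sum(r["predicted"] == r["label"] for r in test_rows) / len(test_rows)
print("test accuracy:", test_accuracy)
```

The key point is the same in both options: the test rows contribute nothing to fitting or model selection, and are only scored afterwards.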

I hope this answer will help you,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

