Adding data distributions in JMP profiler

The profiler is useful for understanding black-box models, but it does not show the distribution of the underlying data.

How can we add the data distributions to the profiler in JMP 14 or 15?

Accepted Solutions

Re: Adding data distributions in JMP profiler

Here is the proposal to include this feature in future versions:

Re: Adding data distributions in JMP profiler

I am very curious to see what you are trying to do. Your image file did not come through; could you re-post it?

Re: Adding data distributions in JMP profiler

Sure.

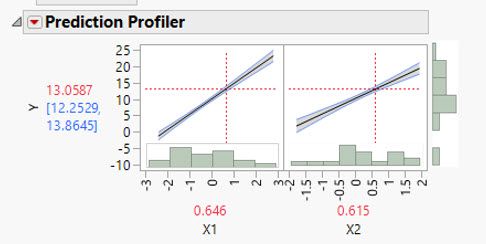

This example shows the prediction with the data distributions overlaid. It uses partial dependence plots, but it illustrates what the profiler could show.

Re: Adding data distributions in JMP profiler

Thank you for sharing. Just a few thoughts to keep in mind on this.

First, and probably most important, JMP 15 is already out, so this feature won't be in version 15! You can look in the JMP Wish List area to see whether someone has already suggested it, and if so, vote for it. If it is not there, you can always add it yourself.

I looked at the link you provided, and there is no real text explaining what I am looking at. Still, good graphs should be self-explanatory, and I think I see what is going on. Full disclosure, though: I might still be missing something, so keep that in mind as you read the rest of my comments.

When JMP creates the profiler, the ranges for the input variables are determined by the data, so extrapolation requires extra intervention by the user. Because the input ranges together form a cuboidal (or hyper-cuboidal) region, extrapolation is only possible when relationships among the inputs leave parts of that region without data, so that a combination of settings can lie outside the data but still inside the cuboid. The plots you show (I think) would suffer from the same issue.
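To make the cuboid point concrete, here is a minimal Python sketch (not JSL, and not how JMP computes anything internally). It assumes two hypothetical, strongly correlated inputs X1 and X2, takes each input's min/max as the profiler box, and checks a corner of that box: the corner is inside every per-factor range, yet far from any observed row, so a prediction there would be an extrapolation.

```python
import math
import random

def make_correlated_inputs(n=200, noise=0.2, seed=1):
    """Hypothetical data: X2 closely tracks X1, so the rows fill a thin diagonal band."""
    rng = random.Random(seed)
    x1 = [rng.uniform(-3, 3) for _ in range(n)]
    x2 = [a + rng.gauss(0, noise) for a in x1]
    return list(zip(x1, x2))

def factor_box(points):
    """Per-factor (min, max) ranges, analogous to the ranges a profiler uses."""
    xs, ys = zip(*points)
    return (min(xs), max(xs)), (min(ys), max(ys))

def in_box(pt, box):
    """True if the point lies within every per-factor range."""
    (lo1, hi1), (lo2, hi2) = box
    return lo1 <= pt[0] <= hi1 and lo2 <= pt[1] <= hi2

def nearest_distance(pt, points):
    """Euclidean distance from pt to the closest observed row."""
    return min(math.dist(pt, p) for p in points)

data = make_correlated_inputs()
box = factor_box(data)
(lo1, hi1), (lo2, hi2) = box
corner = (lo1, hi2)   # low X1 with high X2: inside the box, against the correlation
center = (0.0, 0.0)   # on the diagonal band where the data actually are

print("corner in box:", in_box(corner, box), "nearest row:", round(nearest_distance(corner, data), 2))
print("center in box:", in_box(center, box), "nearest row:", round(nearest_distance(center, data), 2))
```

Both points pass the per-factor range check, but only the center is near the data, which is why range limits alone do not rule out extrapolation when inputs are related.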

The profiler plots the relationships from the model, which actually makes things much cleaner. For example, suppose I fit the regression model Y = B0 + B1*X1 + B2*X2 + B12*X1*X2. You will get a profiler showing the modeled relationships. If you tried to put the data on that graph, I'm not sure where they would go. In the profiler for X1? X2? Repeated in both? If the X1 panel were just a scatterplot of Y versus X1, the graph could be misleading: because of the X1*X2 interaction, the relationship between X1 and Y depends on X2, so a plain Y-vs-X1 scatterplot can hide it. In other words, you might look at the picture and conclude the model is doing poorly when in fact it is doing great, because the interaction is an important part of the model.
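A small simulation can illustrate the interaction point. This sketch assumes hypothetical coefficients B0 = B1 = B2 = 0 and B12 = 1, with X1 and X2 spread uniformly over -3 to 3. The marginal Y-vs-X1 correlation comes out near zero, while the slope of Y in X1 (B1 + B12*X2) flips sign depending on where X2 is held.

```python
import random

def pearson(xs, ys):
    """Sample Pearson correlation, i.e. what a plain scatterplot of ys vs xs summarizes."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

# Hypothetical coefficients for Y = B0 + B1*X1 + B2*X2 + B12*X1*X2
B0, B1, B2, B12 = 0.0, 0.0, 0.0, 1.0

def model(x1, x2):
    return B0 + B1 * x1 + B2 * x2 + B12 * x1 * x2

rng = random.Random(3)
x1 = [rng.uniform(-3, 3) for _ in range(2000)]
x2 = [rng.uniform(-3, 3) for _ in range(2000)]
y = [model(a, b) for a, b in zip(x1, x2)]

# The marginal Y-vs-X1 relationship looks like noise...
print("corr(X1, Y):", round(pearson(x1, y), 3))

# ...yet X1 matters a lot: its slope is B1 + B12*X2, which changes sign with X2.
def slope_in_x1(x2_value):
    return B1 + B12 * x2_value

print("slope at X2=-2:", slope_in_x1(-2.0), "slope at X2=+2:", slope_in_x1(2.0))
```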

Another way to see how well the model predicts the response, while still showing the actual response data, is the observed versus predicted plot. It shows the range of the observed data, the predictions from the model (even for very complicated models with many factors), and how well the model is predicting.

I am including a small data table I created to illustrate my point. The scatterplots of X1 and X2 versus Y show little, if any, relationship. But the model with the interaction is highly significant, and the observed versus predicted plot shows that very clearly. Also, notice the ranges for X1 and X2 in the profiler: they are roughly -3 to 3, the same ranges found in the data.
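The data table mentioned above is not reproduced in this thread, so the following sketch simulates a similar one under assumed settings (X1 and X2 uniform on -3 to 3, Y = 2*X1*X2 plus noise; all values hypothetical). It fits ordinary least squares with and without the X1*X2 term and reports R². For a least-squares fit with an intercept, R² equals the squared correlation between observed and predicted values, which is what the observed versus predicted plot displays.

```python
import random

def simulate(n=100, seed=7):
    """Hypothetical table: Y is driven only by the X1*X2 interaction (assumed B12 = 2)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x1, x2 = rng.uniform(-3, 3), rng.uniform(-3, 3)
        rows.append((x1, x2, 2.0 * x1 * x2 + rng.gauss(0, 1)))
    return rows

def solve(a, b):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit(rows, terms):
    """Ordinary least squares via the normal equations X'X b = X'y; returns (coefs, predictions)."""
    X = [[t(x1, x2) for t in terms] for x1, x2, _ in rows]
    y = [r[2] for r in rows]
    k = len(terms)
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    beta = solve(xtx, xty)
    return beta, [sum(b * v for b, v in zip(beta, row)) for row in X]

def r_squared(y, pred):
    mean = sum(y) / len(y)
    ss_res = sum((a - p) ** 2 for a, p in zip(y, pred))
    ss_tot = sum((a - mean) ** 2 for a in y)
    return 1 - ss_res / ss_tot

rows = simulate()
y = [r[2] for r in rows]
main_only = [lambda a, b: 1.0, lambda a, b: a, lambda a, b: b]
with_interaction = main_only + [lambda a, b: a * b]

_, pred_main = fit(rows, main_only)
beta_int, pred_int = fit(rows, with_interaction)
print("R^2, main effects only:", round(r_squared(y, pred_main), 3))
print("R^2, with X1*X2:", round(r_squared(y, pred_int), 3))
```

The main-effects fit explains almost nothing, mirroring the flat scatterplots, while adding the interaction term recovers the structure.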

Finally, since the link you provided calls them "partial dependence plots", you may want to look at the leverage plots JMP provides. A leverage plot is calculated differently (and I am explaining it in a non-technical way), but it shows the relationship between the response and each input after adjusting for the impact of the other variables, which is what a partial dependence plot also needs to do. I think that plot might get closer to what you want.
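For comparison, here is a minimal sketch of how a generic partial dependence curve is computed: fix X1 at each grid value and average the model's predictions over the observed X2 values. This is not JMP's leverage plot calculation, and the model (Y = X1*X2) and data are hypothetical. It also shows a limitation related to the interaction point earlier: averaging over a symmetric X2 makes the X1 curve nearly flat, while a profiler-style trace with X2 held at 2 has a clear slope.

```python
import random

def model(x1, x2):
    """Hypothetical fitted model with only an X1*X2 interaction."""
    return x1 * x2

def partial_dependence(f, data, grid):
    """Generic partial dependence for X1: fix X1 at each grid value, average f over observed X2."""
    return [sum(f(g, x2) for _, x2 in data) / len(data) for g in grid]

def conditional_curve(f, grid, x2_fixed):
    """Profiler-style trace: X1 varies while X2 is held at one setting."""
    return [f(g, x2_fixed) for g in grid]

rng = random.Random(5)
data = [(rng.uniform(-3, 3), rng.uniform(-3, 3)) for _ in range(1000)]
grid = [-3.0, -1.5, 0.0, 1.5, 3.0]

pd_curve = partial_dependence(model, data, grid)
print("partial dependence:", [round(v, 2) for v in pd_curve])
print("trace at X2 = 2:", conditional_curve(model, grid, 2.0))
```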

Re: Adding data distributions in JMP profiler

Thanks for the example. This is what I would like to see in that case:

Sometimes long tails or outliers in the initial data can be misleading in the profiler. Starting with partition-based models (e.g., bootstrap forest) is a fast way to discriminate among factors based on how robust they are across those models. Because the profiler does not show the data, checking for these issues can take many iterations of plotting and visualizing.

Another example: if the data have two distant modes, showing the distribution would help you spot Simpson's paradox situations.
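To illustrate the Simpson's paradox case, here is a hypothetical two-mode example in Python (all numbers assumed). Within each mode Y increases with X, but because the high-X mode sits at a lower Y level, the pooled slope is negative. A model trace alone would not reveal the two modes; a distribution overlay of X would make them visible.

```python
import random

def slope(xs, ys):
    """Least-squares slope of y on x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

rng = random.Random(11)
groups = {}
# Hypothetical data: two distant modes in X. Within each mode Y rises with X,
# but the high-X mode sits at a much lower Y level.
for name, x_center, y_offset in [("A", 0.0, 10.0), ("B", 10.0, 0.0)]:
    xs = [x_center + rng.uniform(-2, 2) for _ in range(200)]
    ys = [y_offset + 0.5 * (x - x_center) + rng.gauss(0, 0.3) for x in xs]
    groups[name] = (xs, ys)

pooled_x = groups["A"][0] + groups["B"][0]
pooled_y = groups["A"][1] + groups["B"][1]

for name, (xs, ys) in groups.items():
    print(name, "within-mode slope:", round(slope(xs, ys), 2))
print("pooled slope:", round(slope(pooled_x, pooled_y), 2))
```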

Re: Adding data distributions in JMP profiler

This picture helps me tremendously, as I certainly was missing how you wanted this graph to look. This is a good suggestion that I would encourage you to put on the JMP Wish List.

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
