
- Byron Wingerd


- Fantastic Trends in FDA’s 483s and How to Find Them


If you are involved in making a product that is regulated by the Food and Drug Administration (FDA) and have had a site inspection, it is very likely that you are familiar with the FDA’s Form 483 and the consequences of receiving one. During an inspection of your facility, the FDA representatives check to make sure regulations are being followed correctly. When I was the one being inspected, I found most observations to be nearly completely irrational; however, as a consumer reading other people's observations, I am really happy to have the agency looking out for me.

Each year there are numerous articles pontificating about new trends in FDA inspections. As a result, every year, depending on which of the rumors your regulatory team subscribes to, there is a flurry of activity to try to beat the next round of inspections. I've wondered: did the FDA really change its focus each year? More specifically, is there evidence of an annual focus shift? Because of the Open Government Initiative that started in 2009, the FDA began to provide the public with some of the findings and results from site inspections. The goal was to help improve the public's understanding of the FDA’s enforcement actions. The citation data is provided in easy-to-access spreadsheets that are pulled directly from the FDA’s electronic inspection tools. There are some fairly stern caveats that go along with the data: not every record is included, manual records aren't included, and these might just be preliminary results, not the final form.

I think there is a lot that can be learned with the partial data set that is available. In this article, I'm going to discuss how I dug into the inspection database and what I discovered using JMP Pro.

The data I'm going to talk about is here:

https://www.fda.gov/ICECI/Inspections/ucm250720.htm

And my data dictionary comes from here:

https://www.fda.gov/iceci/inspections/ucm250729.htm

Table 1. Data Dictionary

| Field | Description |
| --- | --- |
| Center Name | Program Area |
| Cite ID | Cite ID is a unique identifier for each citation |
| CFR Reference No. | The Code of Federal Regulations reference for the citation |
| Short Description | Short or category type description |
| Long Description | The long description is entered on to the FDA Form 483 |
| Frequency | The number of times during the fiscal year the citation was used on a 483 for the Center Program Area |
| Year | Derived Term, for year of table |

The first step was getting all the data together. I imagine one could just download all the Excel workbooks and then open all the worksheets and then combine them all together, in kind of a copy and paste approach. That is way too tedious and error prone for me, so I used JMP.

I encountered several snags when working with the files. There are two types of Excel files (.xls and .xlsx), the column names change when the file type changes, and the newer .xlsx files have about 7 million empty rows to deal with. If you run my JMP script to do the data import it takes a few minutes because of the size of those large files. The JMP script for opening the data cleans up the empty rows, adds a year column, fixes the changing column names and generally makes the first step easy. (Script attached below)
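For readers without JMP, the cleanup steps described above (drop empty rows, harmonize column names, add a year column) can be sketched in plain Python. This is not the attached JMP script. It assumes each worksheet has already been read into a list of dictionaries keyed by header; `clean_rows` and the header-matching rule are hypothetical, and the canonical names are taken from the data dictionary in Table 1.

```python
# Canonical column names, taken from the data dictionary (Table 1).
CANONICAL = [
    "Center Name", "Cite ID", "CFR Reference No.",
    "Short Description", "Long Description", "Frequency",
]


def _key(name):
    """Reduce a header to lowercase letters and digits so variants compare equal."""
    return "".join(ch for ch in str(name).lower() if ch.isalnum())


CANONICAL_BY_KEY = {_key(name): name for name in CANONICAL}


def clean_rows(rows, year):
    """Drop empty rows, rename headers to canonical names, and add a Year column.

    rows: list of {header: value} dicts for one worksheet (assumed input shape).
    year: the year that worksheet covers.
    """
    cleaned = []
    for row in rows:
        # Skip rows where every cell is missing or only whitespace.
        if all(v is None or str(v).strip() == "" for v in row.values()):
            continue
        fixed = {}
        for header, value in row.items():
            # Unknown headers are kept unchanged rather than guessed at.
            fixed[CANONICAL_BY_KEY.get(_key(header), header)] = value
        fixed["Year"] = year
        cleaned.append(fixed)
    return cleaned


# Two made-up worksheets whose headers differ only in case and punctuation.
old_sheet = [
    {"Cite Id": 1, "Long Description": "a", "Frequency": 3},
    {"Cite Id": None, "Long Description": "", "Frequency": None},
]
new_sheet = [{"CITE_ID": 2, "long description": "b", "frequency": 5}]
combined = clean_rows(old_sheet, 2006) + clean_rows(new_sheet, 2017)
```

Matching headers on letters and digits only is a design choice for this sketch; if the real column names differ by more than case and punctuation, an explicit alias table would be needed.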

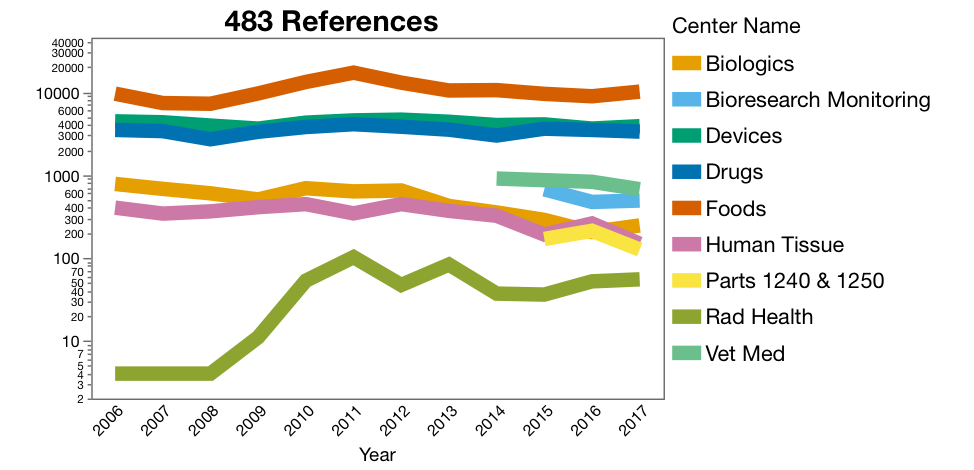

The raw data alone, with 18,737 rows, is accessible and interesting to look at (Figure 1). I adjusted the y-axis to a log scale to visualize all the trends together. The construction of the graph requires the use of the frequency column. There are a couple of potential trends across the Center Program Areas; for example, Radiation Health citations jumped up around 2009 and 2010, and citations for Human Tissue and Biologics are trending down. Be very careful in assigning cause to trends. Biologics might be down because blood product suppliers have consolidated and there are fewer sites to inspect, or maybe the industry has gotten better (I hope so).

Figure 1. Counts of 483s by Center for 2006 to 2017. Graph of the raw data imported from the database files.

I mostly work with pharmaceutical companies, so I am most interested in the 483s for drugs, and I made a subset of only the citations from the Drug Center. I would like to group all the similar long descriptions together. If many of them were unique, that wouldn't be a problem, but that's not the case. I began to read through the long descriptions so that I could begin to categorize them, but it quickly became clear that with 4,167 records, that would be a heroic task. One way to categorize documents is to use a text mining tool called latent class analysis. This method scores word frequencies within documents so that similar documents can be clustered together.

Text mining isn't some massively automatic process unless you have access to the SAS Text Mining Solution and a couple of experts, but even then it takes some work to clean up documents before doing analysis.

The terminology changes a bit when dealing with text. In text analysis, each long description is called a document, and each “word,” or token, is a term. I started with a little text cleanup. For example, with the JMP Recode tool, I:

- Removed “ . , ; : [ ] * -- - ”

- Removed (s), (S), ‘s

- Removed “Specifically” from the end of the descriptions

- Recoded to lower case, trimmed white space, collapsed white space

- Changed “word/word” to “word / word”
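The cleanup list above was done with JMP Recode. As a rough equivalent outside JMP, the same steps can be sketched with Python regular expressions. The function name `clean_description` is hypothetical, and two details are assumptions: removed punctuation and dashes are replaced by a space (so "in-process" becomes "in process"), and "(s)" and possessives are dropped outright.

```python
import re


def clean_description(text):
    """Apply the cleanup steps from the list above to one long description."""
    # Remove a trailing "Specifically" plus any punctuation or spaces after it.
    text = re.sub(r"\bSpecifically\W*$", "", text)
    # Remove "(s)" / "(S)" and possessive 's (straight or curly apostrophe).
    text = re.sub(r"\([sS]\)", "", text)
    text = re.sub(r"['’]s\b", "", text)
    # Change "word/word" to "word / word".
    text = re.sub(r"\s*/\s*", " / ", text)
    # Replace . , ; : [ ] * and dashes with a space (assumption: space, not nothing).
    text = re.sub(r"[.,;:\[\]*-]", " ", text)
    # Lower case, collapse runs of white space, trim the ends.
    return re.sub(r"\s+", " ", text.lower()).strip()


# A made-up description, not one taken from the FDA data.
example = "Procedure(s) for the firm's in-process/finished  testing are not written, [followed].  Specifically, "
cleaned = clean_description(example)
```

The order matters a little: "Specifically" is removed before lower-casing so the match stays case-sensitive, and slashes are spaced before the punctuation pass so they survive it.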

The next step was a more extreme application of Recode. I wanted to use Recode on a word-by-word basis, not on the whole string together, so I separated all the words into separate columns using space as a delimiter and then combined the words with a comma delimiter. I set this column to the “Multiple Response” data type so that Recode would let me look at all the words separately. This let me fix some broken words and words that had more than one spelling, along with a few other things. The goal was to decrease the dissimilarity between terms that was based on characteristics that wouldn't be relevant to the text analysis.
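The idea of recoding word by word, instead of recoding whole strings, can be illustrated with a small lookup table. This is only a sketch of the concept, not how JMP's Multiple Response recode works internally; `recode_tokens` and the misspellings in the example map are made up for illustration.

```python
def recode_tokens(text, recode_map):
    """Replace each space-delimited token that appears in recode_map."""
    return " ".join(recode_map.get(token, token) for token in text.split())


# Hypothetical fixes; the real list of broken or variant words is not given in the post.
fixes = {"writen": "written", "establised": "established"}
fixed = recode_tokens("writen procedure are not establised", fixes)
```

Because the lookup is per token, one entry in the map corrects every description that contains that token.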

This isn't a complete or perfect clean up but it was good enough to get started with the next level of "cleaning" for text exploration. The JMP Text Explorer tool includes a Stemming option that lets me make similar words become the same word. For example, "adulterate", "adulterated", "adulterating" all become "adulter-". Each word is substituted with its stem word so that verb tense and plurals of a word are all the same word. JMP also finds frequent phrases so they can be added as terms. Some phrases appear quite often, like, “container closure”, “quality control”, or “written procedures.”
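To give a feel for how frequent phrases can be found, here is a minimal sketch that counts adjacent word pairs across documents. It is not JMP's phrase detection, which may use longer phrases and different rules; `frequent_phrases` and its `min_count` threshold are hypothetical, and the documents are assumed to be already cleaned and space-delimited.

```python
from collections import Counter


def frequent_phrases(documents, min_count=2):
    """Count two-word phrases and keep those seen at least min_count times."""
    counts = Counter()
    for doc in documents:
        tokens = doc.split()
        # Pair each token with its right-hand neighbor.
        counts.update(zip(tokens, tokens[1:]))
    return {" ".join(pair): n for pair, n in counts.items() if n >= min_count}


# Made-up documents for illustration only.
docs = [
    "written procedures are not followed",
    "written procedures for container closure",
    "container closure systems",
]
phrases = frequent_phrases(docs)
```

Phrases that pass the threshold could then be treated as single terms in the later analysis.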

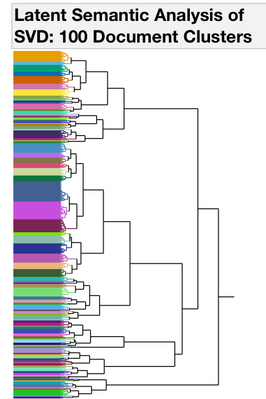

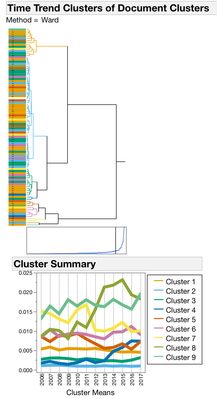

I started with Text Exploration. This is a word frequency (bag-of-words) approach to trying to understand what is in the documents. To group similar documents, I used a Singular Value Decomposition for a Latent Semantic Analysis (Latent Class Analysis or LCA). I centered and scaled the vectors, used TF-IDF weighting, and then used a hierarchical clustering method to classify the documents into 100 clusters. To find trends over time within each of the 100 clusters, I used a second round of clustering where I used the year as the attribute ID and the LCA cluster as the object ID, and clustered by the frequency of the LCA cluster. This hierarchical clustering approach finds groups of documents with the same frequency trends over time (Figures 2 and 3).
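The pipeline above was run in JMP Pro. To make two of its pieces concrete, here is a standard-library Python sketch of TF-IDF weighting and of the second-round clustering on yearly frequencies. Several things are assumptions and not the author's settings: the TF-IDF formula is tf × ln(N/df), the SVD and the centering and scaling are skipped entirely, and the trend clustering uses average linkage on Euclidean distance. All function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def tfidf(documents):
    """Return one {term: weight} dict per document, weight = tf * ln(N / df)."""
    token_lists = [doc.split() for doc in documents]
    doc_freq = Counter(t for tokens in token_lists for t in set(tokens))
    n_docs = len(documents)
    return [
        {t: c * math.log(n_docs / doc_freq[t]) for t, c in Counter(tokens).items()}
        for tokens in token_lists
    ]


def trend_table(records, years):
    """Sum (cluster, year, frequency) triples into {cluster: [frequency per year]}."""
    totals = defaultdict(lambda: defaultdict(int))
    for cluster, year, freq in records:
        totals[cluster][year] += freq
    return {c: [by_year.get(y, 0) for y in years] for c, by_year in totals.items()}


def cluster_trends(table, n_groups):
    """Agglomerative clustering (average linkage) of clusters by yearly trend."""
    groups = [[name] for name in sorted(table)]

    def linkage(g1, g2):
        # Mean Euclidean distance over all cross-group pairs of trend vectors.
        total = sum(math.dist(table[a], table[b]) for a in g1 for b in g2)
        return total / (len(g1) * len(g2))

    while len(groups) > n_groups:
        pairs = ((i, j) for i in range(len(groups)) for j in range(i + 1, len(groups)))
        i, j = min(pairs, key=lambda ij: linkage(groups[ij[0]], groups[ij[1]]))
        merged = groups.pop(j)  # j > i, so index i is still valid
        groups[i].extend(merged)
    return groups


# Made-up inputs for illustration only.
weights = tfidf(["aseptic processing area", "aseptic technique", "record review"])
table = trend_table([(46, 2015, 2), (46, 2016, 5), (46, 2016, 1), (11, 2015, 3)], [2015, 2016])
groups = cluster_trends({"a": [1, 1, 1], "b": [1, 1, 2], "c": [1, 5, 9], "d": [1, 6, 9]}, 2)
```

In the toy example, the flat series `a` and `b` end up in one group and the rising series `c` and `d` in the other, which is the kind of grouping the second round of clustering is meant to surface.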

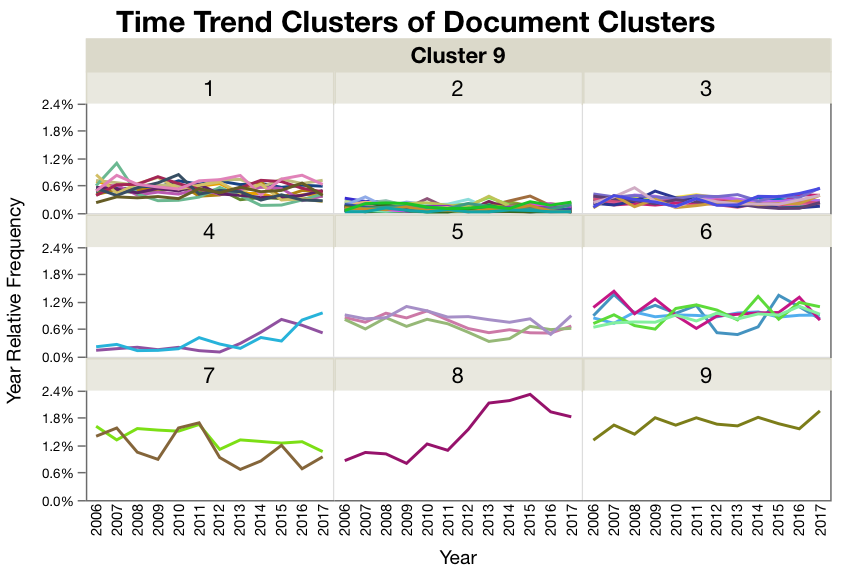

Using this classification and clustering approach, we have narrowed down our search for trends from 4,167 documents to 283, and we can classify those documents with three Time Trend Clusters and a total of seven Document Cluster (LCA) groups (Table 2).

With the documents grouped by time trend, the next problem involves trying to understand the contents of the documents we have identified. I could read the 283 long descriptions, and again, that might be interesting but tedious. Instead, I'm going to go back to text analysis to try to understand what these citations are about. As it turns out, word frequency, often represented in a word cloud, is a quick method for deriving insights and then letting our brains work out the meaning based on our familiarity with the context. In these word clouds, the size of a word is proportional to how frequently it occurs, and the darkness of a word indicates how many documents it appears in. If the word is darker, it was in more documents, but if it is bigger, then it occurs more frequently in documents. Text analysis used in this context is imperfect, but it is more practical than the alternative of manually processing each document individually (Figures 4, 5, and 6).
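The two quantities that drive the word clouds, how often a term occurs overall (size) and how many documents contain it (darkness), are easy to compute directly. The sketch below is an illustration in Python, not JMP's word cloud implementation; `word_cloud_stats` is a hypothetical name, and the documents are assumed to be already cleaned and space-delimited.

```python
from collections import Counter


def word_cloud_stats(documents):
    """Return {term: (term_frequency, document_frequency)}.

    term_frequency: total occurrences across all documents (word size).
    document_frequency: number of documents containing the term (word darkness).
    """
    term_freq = Counter()
    doc_freq = Counter()
    for doc in documents:
        tokens = doc.split()
        term_freq.update(tokens)
        # A set counts each term at most once per document.
        doc_freq.update(set(tokens))
    return {term: (term_freq[term], doc_freq[term]) for term in term_freq}


# Made-up documents for illustration only.
stats = word_cloud_stats(["sterile sterile area", "sterile gown", "area record"])
```

Here "sterile" would be drawn larger than "area" because it occurs three times against two, while both would be equally dark because each appears in two documents.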

Conclusion

The goal of this analysis exercise was to try to discover whether or not there are trends in enforcement during site inspections. In this analysis, the most prominent increasing trend was related to aseptic processing and contamination. This trend may represent an increased focus by the agency, or it may represent an increase in aseptic processing by the drug industry, or even an increase in the number of aseptically processed drugs. Differentiating between increased enforcement and increased opportunity for error isn't possible without substantial additional research; however, the message for those doing aseptic processing should be clear. Be careful with your processes, your validation activities, and how those activities are designed to monitor and prevent contamination. (As a consumer, I really think this should be a high priority too!)

The agency seems to be generally consistent in enforcement across the entire set of 21 CFR 210 and 211 regulations (where most drug-specific regulations are). Looking at all of the other document clusters, it is clear that annual spikes in citations for one area over another are not occurring. This tends to provide evidence against the legendary “focus of the year” hypothesis.

Figure 2. Dendrograms from Clustering Operations. All of the 4167 documents from the Drug Center were clustered into 100 Document Groups using Latent Class Analysis (LCA) (1st panel). To find groups of documents that occur with similar frequencies, a second round of clustering was performed. The 100 document groups were summarized into 9 Time Trend groups (2nd panel). Clusters 3, 4 and 8 have patterns that begin to increase in the last years on the graph.

| Time Trend Cluster | Document Count | Document Cluster | Document Count |
| --- | --- | --- | --- |
| 3 | 146 | 26 | 55 |
| | | 45 | 39 |
| | | 56 | 35 |
| | | 90 | 17 |
| 4 | 97 | 11 | 72 |
| | | 74 | 25 |
| 8 | 39 | 46 | 39 |

Table 2. Contents of the Time Trend and Document clusters. The actual number of documents to process is fairly large, but by using the Latent Class document clustering followed by the Time Trend Clustering the problem is substantially reduced.

Figure 3. Document clusters within each time trend cluster. Most of the time trend clusters are pretty flat or have low frequencies, like clusters 1 and 2. But clusters 3, 4, and 8 have some interesting upward trends in the last couple of years. (Note that the lines are colored by document cluster, and the color has nothing to do with cluster distance.)

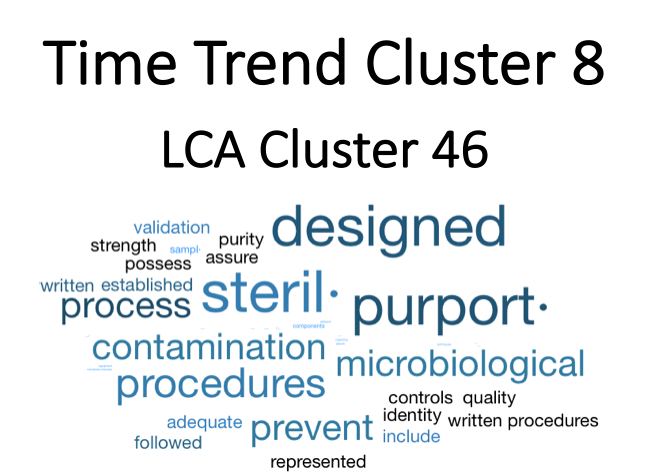

Figure 4. Time Trend Cluster 8. The largest trend is in Time Trend Cluster 8 where the documents are related to sterile processing and contamination.

Figure 5. Time Trend Cluster 4. Time Trend Cluster 4 has the next largest increase in frequency of citations. The documents in document cluster 11 are again related to aseptic processing. The documents in document cluster 74 are related to appropriate levels of authorization and control of controlled records.

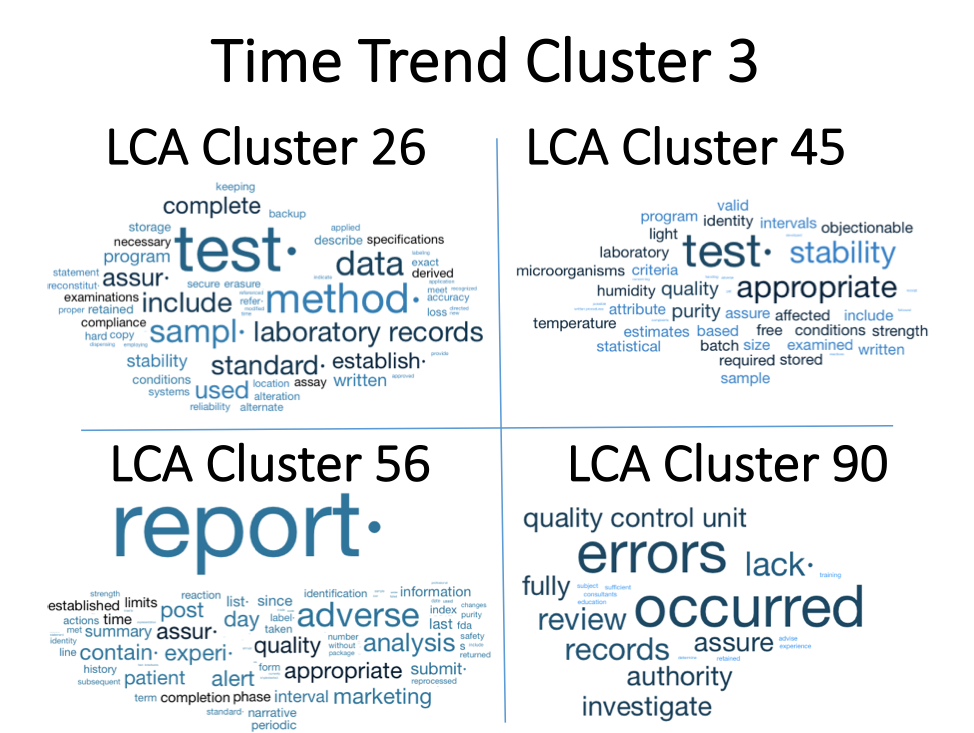

Figure 6. Time Trend Cluster 3. The time trend cluster with the smallest upward trends is cluster 3. Document clusters 26 and 45 are related to test methods and their general lack of robustness with respect to procedures, sample handling, and standards and controls. Document cluster 56 is related to citations around reporting things like adverse events at multiple stages of a drug's development and licensure. Finally, document cluster 90 is related to the lack of control of errors and how they were dealt with.

The Drug subset of the data is attached. Note that some of the analysis requires JMP Pro.

The Data is also on public.jmp.com here
