
Uncharted


Get AI Generated Content with New HTTP Request


Use JMP's Run Program and HTTP Request to drive AI tools and FFmpeg and make a video.

Grab a book from https://www.gutenberg.org/ebooks/67121 and save the text to disk. Write JSL to put the text in a data table, one paragraph per row.
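The extraction and paragraph-splitting steps in the JSL below can be sketched in Python for comparison. This is a minimal sketch that assumes the file has Project Gutenberg's usual `*** START OF ... ***` and `*** END OF ... ***` marker lines. The function name `gutenberg_paragraphs` is hypothetical. Unlike the JSL, it skips the length check and drops empty paragraphs.

```python
import re

START = re.compile(r"\*\*\* START OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*\s*")
END = re.compile(r"\*\*\* END OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*")


def gutenberg_paragraphs(raw: str) -> list:
    """Return the book body as a list of one-line paragraphs."""
    start, end = START.search(raw), END.search(raw)
    if start is None or end is None:
        raise ValueError("Project Gutenberg START/END markers not found")
    body = raw[start.end():end.start()]
    paragraphs = []
    # A blank line (CR LF CR LF in the downloaded file) separates paragraphs.
    for part in re.split(r"\s*\r?\n\r?\n\s*", body):
        part = part.replace("_", " ")          # _italic_ markers become spaces
        part = re.sub(r"[\r\n]+", " ", part)   # join the wrapped lines
        if part.strip():                       # skip empty pieces
            paragraphs.append(part)
    return paragraphs
```

The split pattern accepts either CR LF or bare LF, so it also works if the file was read with newline translation.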

txt = Load Text File( "f:/PolarisAndTheGoddessGlorian.txt" );//

txt = Regex(

txt,

"\*\*\* START OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*\s*(.*?)\*\*\* END OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*",

"\1"

);

If( Length( txt ) != 438066,

Throw( "text?" )

);

// look for paragraphs separated by ~0D~0A~0D~0A

txt = Regex( txt, "\s*\r\n\r\n\s*", "❡", globalreplace );

txt = Regex( txt, "_", " ", globalreplace ); // italic indicated with _this_ style

txt = Regex( txt, "[\n\r]+", " ", globalreplace ); // all on one line

dtStory = New Table( "story", New Column( "paragraph", Character, "Nominal" ) );

rc = Pat Match(

txt,

Pat Repeat(

(Pat Pos( 0 ) | "❡") + //

(Pat Break( "❡" )) >> p + Pat Test(

dtStory << addrows( 1 );

dtStory:paragraph[N Rows( dtStory )] = p;

//Wait( 0.01 );

,

1

)

) + Pat R Pos( 0 )

);

dtStory << New Column( "OriginalSort", values( 1 :: N Rows( dtStory ) ) );

dtStory << New Column( "OriginalLength", formula( Length( paragraph ) ) );

dtStory << runformulas;

dtStory:OriginalLength << deleteformula;

To experiment with more than one reader voice, create some heuristics to decide whether the quoted text is spoken by a male or a female character. Skipping this step and just using the best narrator voice might be a good choice too; switching voices is a bit jarring.

// speaker identification...just enough to choose a narrator vs female vs male voice

TalkingVerbs = "said" | "asked" | "repeated" | "exclaimed" | "muttered" | "called" | "continued" | "queried" |

"cried" | "comment" | "thought" | "say" | "shouted" | "awestruck" | "questioned" | "turned" | "replied" |

"answered" | "rejoined " | "screamed" | "gasped" | "bowed" | "answered" | "smiled" | "laughed" | "answer" |

"spoke" | "interposed" | "sobbed" | "enjoined" | "warned" | "shook" | "lowered" | "grew" | "grumbled" | "smile" |

"whispered" | "put" | "interposed" | "hiccoughed" | "informed" | "added" | "frowned" | "nodded" | "questioned" |

"gasped" | "pointed" | "broke" | "inquired" | "spoke" | "looked" | "barked" | "interrupted" | "shouted" |

"addressed" | "continued" | "told" | "suggested" | "directed" | "cautioned" | "comment" | "concluded" | "pleaded"

| "spoke" | "grinned" | "drew" | "snarled" | "volunteered" | "counsel" | "persisted" | "spat" | "counseled" |

"lifted" | "shout" | "roared" | "prayed" | "howled" | "shrieked" | "told" | "mused" | "growled" | "shouting" |

"groaned" | "grunted" | "rambled" | "directed" | "went" | "breathed" | "called" | "commanded" | "shuddered" |

"began" | "rejoined";

MaleSpeakerNames = "he" | "everson" | "commander" | "polaris" | "coxswain" | "zenas" | "wright" | "scotchman" |

"his" | "mackechnie" | "geologist" | "lieutenant" | "scot" | "stranger" | "oleric" | "captain" | "brunar" |

"ensign" | "brooks" | "minos" | "mordo" | "king" | "bel-ar" | "raissa" | "priest" | "man" | "melton" | "janess" |

"men" | "jastla" | "scientist" | "wright" | "voice" | "zoar" | "soldier" | "son" | "broddok" | "albar" |

"engineer" | "zind" | "chief" | "vedor" | "master" | "they" | "heralds";

FemaleSpeakerNames = "she" | "her" | "girl" | "rose" | "emer" | "lady" | "memene" | "queen" | "goddess" |

"glorian";

gapchars = ":; ,.?!\!n\!r"; // gaps between words ... for example he reluctantly said

gap = Pat Span( gapchars ) | Pat Pos( 0 ) | Pat R Pos( 0 );

distanceBetween = 1;

femalePattern = (gap + FemaleSpeakerNames >> spkr + gap + Pat Repeat(

Pat Break( gapchars ) + GAP,

0,

Expr( distanceBetween ),

RELUCTANT

) + TalkingVerbs >> verb + gap) //

| (gap + TalkingVerbs >> verb + gap + Pat Repeat(

Pat Break( gapchars ) + GAP,

0,

Expr( distanceBetween ),

RELUCTANT

) + FemaleSpeakerNames >> spkr + gap);

malePattern = (gap + MaleSpeakerNames >> spkr + gap + Pat Repeat(

Pat Break( gapchars ) + GAP,

0,

Expr( distanceBetween ),

RELUCTANT

) + TalkingVerbs >> verb + gap) //

| (gap + TalkingVerbs >> verb + gap + Pat Repeat(

Pat Break( gapchars ) + GAP,

0,

Expr( distanceBetween ),

RELUCTANT

) + MaleSpeakerNames >> spkr + gap);

// add a column for the speaker: narrator, male, female. The words above and algorithm below are

// specific to this story (and the time it was written, 1917)

// a paragraph is either all narrator or narrator + male speaker or narrator + female speaker

// usually there is a talkingverb and male or female speaker name in the paragraph. if not,

// the previous paragraph might end with

// he said:

// etc in the same format. if not, and the paragraph does not have a trailing matching quotation mark,

// the male/female speaker will continue at the next open quotation mark. if not, there are two places where

// the speaker should alternate male/female.

// for most of the book, male speaker will be the correct guess.

dtstory << New Column( "speaker", Character, "Nominal" );

prevspeaker = "narrator";

currspeaker = "narrator";

quotationmark = "\!"";

missingEndQuote = 0;

quotation = quotationmark + (//

(Pat Break( quotationmark ) + quotationmark + Pat Test(

missingEndQuote = 0;

1;

)) //

| //

(Pat Rem() + Pat Test(

missingEndQuote = 1;

1;

)));

Those patterns, malePattern and femalePattern, identify structures like

"Now in all these happenings I think I see my way at last," he muttered.

or

"You have done well, indeed, good Oleric," she said quickly. "My king shall forgive you for the lost fademe, the losing of which was surely no fault of yours. And these—these be worth many fademes to me." She selected two of the pearls of fair size and goodly sheen and gave them to Oleric.

where he and she can also be the various names and nicknames of characters, and said can be any verb from that big list of verbs...the author must have taken some joy in cycling through them.
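The name-near-verb idea can be sketched in Python. The sketch uses short excerpts of the JSL word lists (the full lists are above) and a simple word tokenizer in place of JSL's Pat Span/Pat Break gaps. Like the JSL loop that follows, it removes the quoted speech first, tries increasing word distances, and gives a female match precedence at each distance. All names here are hypothetical.

```python
import re

# Small subsets of the JSL word lists, for illustration only.
TALKING_VERBS = {"said", "asked", "muttered", "cried", "replied", "persisted", "whispered"}
MALE_NAMES = {"he", "his", "polaris", "oleric", "captain", "king"}
FEMALE_NAMES = {"she", "her", "girl", "lady", "queen", "glorian"}

# A quoted passage; the close quote may be missing at the end of a paragraph.
QUOTED = re.compile(r'"[^"]*(?:"|$)')


def name_near_verb(words, names, verbs, max_between):
    """True if a name and a talking verb have at most max_between words between them."""
    for i, word in enumerate(words):
        if word in names:
            lo = max(0, i - max_between - 1)
            hi = min(len(words), i + max_between + 2)
            if any(words[j] in verbs for j in range(lo, hi) if j != i):
                return True
    return False


def explicit_speaker(paragraph, max_distance=5):
    """Return 'female', 'male', or None, checking the tightest pairings first."""
    narration = QUOTED.sub(" ", paragraph)            # ignore the spoken words themselves
    words = re.findall(r"[a-z-]+", narration.lower())
    for between in range(max_distance):
        if name_near_verb(words, FEMALE_NAMES, TALKING_VERBS, between):
            return "female"                           # female first, as in the JSL
        if name_near_verb(words, MALE_NAMES, TALKING_VERBS, between):
            return "male"
    return None
```

Removing the quotation first matters. In the second example above, "My king" sits inside the quote and would otherwise look like a male speaker tag.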

Next, apply the patterns row by row, with some logic for alternating speakers and a special case for row 865, where the heuristic failed. (The chapter numbers will be used in a bit to make Roman numerals like IX say "nine." I don't think Roman numerals were part of the training data.)
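Here is a Python sketch of the Roman-numeral-to-word step. It parses the numeral, whereas the JSL below simply counts CHAPTER headings and indexes its `chaps` list. The function names are hypothetical, and, as in `chaps`, only one through ten are covered.

```python
ROMAN = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100}
WORDS = ["zero", "one", "two", "three", "four", "five",
         "six", "seven", "eight", "nine", "ten"]


def roman_to_int(numeral: str) -> int:
    total = 0
    for i, ch in enumerate(numeral):
        value = ROMAN[ch]
        # A smaller numeral in front of a larger one is subtracted (IX = 9).
        if i + 1 < len(numeral) and ROMAN[numeral[i + 1]] > value:
            total -= value
        else:
            total += value
    return total


def spoken_chapter_heading(heading: str) -> str:
    """'CHAPTER IX.' -> 'Chapter nine.' (chapters one through ten only)."""
    numeral = heading.split()[1].rstrip(".")
    return "Chapter " + WORDS[roman_to_int(numeral)] + "."
```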

iCHAPTER = 0;

chaps = {"one", "two", "three", "four", "five", "six", "seven", "eight", "nine", "ten"};

For( irow = 1, irow <= N Rows( dtstory ), irow += 1,

p = dtstory:paragraph[irow];

continueSpeaker = missingEndQuote; // from previous paragraph

hasquote = Pat Match( p, quotation, "" );

While( Pat Match( p, quotation, "" ), 0 ); // remove all quoted bits before looking for "he said"

explicitSpeaker = 0;

For( distanceBetween = 0, distanceBetween < 5 & explicitSpeaker == 0, distanceBetween += 1,

If(

Pat Match( p, femalePattern ), // try giving female precedence around 1322

prevspeaker = currspeaker;

currspeaker = "female";

explicitSpeaker = 1;//

,

Pat Match( p, malePattern ),

prevspeaker = currspeaker;

currspeaker = "male";

explicitSpeaker = 1;//

)

);

If( irow == 865,

Show( irow, currspeaker, prevspeaker );

prevspeaker = "male";

);

If( hasquote,

If(

explicitSpeaker, dtstory:speaker[irow] = currspeaker || " explicit", /* else if*/continueSpeaker,

dtstory:speaker[irow] = currspeaker || " unquote", /*else if*/

Ends With( dtstory:paragraph[irow - 1], ":" ), dtstory:speaker[irow] = currspeaker || " leading", // else alternating speakers

{prevspeaker, currspeaker} = Eval List( {currspeaker, prevspeaker} );

dtstory:speaker[irow] = currspeaker || " alternate";

)

, //

dtstory:speaker[irow] = "narrator" || " " || currspeaker || Char( explicitSpeaker )

);

);

The author (writing in 1917) followed rigid rules for quotations that probably differ from today's conventions, and the JSL takes advantage of that rigidity. A trailing close quotation mark is always omitted when the same speaker continues in the next paragraph. When two speakers alternate, the he said/she said tags can be omitted once the sequence is established. And the end of the previous paragraph can determine the speaker in the next paragraph.

When the hungry men had finished their meal, the girl spoke up again:

"Say, man from the sea, I have heard that there is a beautiful lady who waits for you in a prison in Adlaz town. Is that true?"

"Yes, lady, it is true," Polaris said; and he sighed.

"And you lead a great host thither to set her free?" the girl persisted.

"Yes, if I may."

"But to get on the way to Adlaz, you must take this fortress of Barme; and you find it a hard nut to crack. Is that not so?"

"That is true, also, lady."

"Well, hark you, man." The girl stood up and came to the table. "You who are true to a woman as few men are ever true; perhaps the poor, despised, cast-off Raula may aid you somewhat in this undertaking."

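The speaker-assignment rules can be sketched as a small state machine in Python. This simplified sketch assumes explicit he said/she said detection has already run for each paragraph and produced the `explicit` list. It also recomputes the missing close quote for each paragraph by counting quotation marks, whereas the JSL carries that state over from its quotation pattern. The labels mirror the JSL (explicit, unquote, leading, alternate); the function name is hypothetical.

```python
def assign_speakers(paragraphs, explicit):
    """explicit[i] is 'male', 'female', or None when no speaker tag was found."""
    prev, curr = "narrator", "narrator"
    labels = []
    open_quote = False                       # previous paragraph ended inside a quotation
    for i, (text, tag) in enumerate(zip(paragraphs, explicit)):
        if tag:
            prev, curr = curr, tag
        if '"' not in text:
            labels.append("narrator")
        elif tag:
            labels.append(curr + " explicit")
        elif open_quote:                     # same speaker keeps talking
            labels.append(curr + " unquote")
        elif i > 0 and paragraphs[i - 1].rstrip().endswith(":"):
            labels.append(curr + " leading")   # "...the girl spoke up again:"
        else:
            prev, curr = curr, prev          # speakers take turns
            labels.append(curr + " alternate")
        open_quote = text.count('"') % 2 == 1
    return labels
```

Run against a shortened version of the passage above (tags: female, None, male, female, then None), it produces leading, explicit, explicit, and then alternating male/female labels. That matches the dialogue.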
The audio is stored in an expression column with an event handler that plays the audio when clicked. The RiffUtil:WavWriter function is JSL in another file, included further below.
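The storage format, Matrix To Blob( ..., "int", 2, "big" ) followed by Gzip Compress, is easy to reproduce outside JMP. This Python sketch assumes integer samples in the 16-bit range; `pack_audio` and `unpack_audio` are hypothetical names.

```python
import gzip
import struct


def pack_audio(samples):
    """16-bit big-endian integers, gzip-compressed (like Matrix To Blob + Gzip Compress)."""
    raw = struct.pack(f">{len(samples)}h", *samples)
    return gzip.compress(raw)


def unpack_audio(blob):
    """Inverse of pack_audio (like Gzip Uncompress + Blob To Matrix)."""
    raw = gzip.decompress(blob)
    return list(struct.unpack(f">{len(raw) // 2}h", raw))
```

A second of silence, which is what an empty "* * *" paragraph becomes, compresses to a tiny blob.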

dtStory << New Column( "audio",

expression,

Set Property(

"Event Handler",

Event Handler(

Click(JSL Quote(

Function( {thisTable, thisColumn, iRow},

RiffUtil:WavWriter( "$temp/yyy.wav", Blob To Matrix( Gzip Uncompress( eval(thisTable:thisColumn[iRow]) ), "int", 2, "big", 1 ), 22050 )

);

) )

)

)

);

dtStory << New Column( "nsamples" );

For( irow = 1, irow <= N Rows( dtstory ), irow += 1,

p = dtStory:paragraph[irow];

// do some fix-ups * * *, Chapter eye, --, "single"

pprev=p;

p = Regex( p, "\!"([a-zA-Z,]+)\!"", "\1", GLOBALREPLACE ); // he was "all" alone ==> he was all alone don't switch voice "Ivanhoe,"

If( p != pprev,

Show( "quoteword", irow, pprev, p )

);

pprev=p;

p = Regex( p, "\!"--", ",\!" ", GLOBALREPLACE ); // "go away"--he said--"or else" ==> "go away," he said, "or else" don't start segment with comma?

If( p != pprev,

Show( "quotehyphens", irow, pprev, p )

);

pprev=p;

p = Regex( p, "--", ", ", GLOBALREPLACE ); // -- does not slow down, but comma does

If( p != pprev,

Show( "hyphens", irow, pprev, p )

);

pprev=p;

p = Regex( p, "( [A-Z][a-z]?)\.", "\1", GLOBALREPLACE ); // _Mr. brown ==> _Mr brown

If( p != pprev,

Show( "Mr. B.", irow, pprev, p )

);

pprev=p;

If( Pat Match( p, Pat Pos( 0 ) + Pat Span( " *\!n\!r" ) + Pat R Pos( 0 ), "" ), // * * * ==> empty; JSL strings use \!n and \!r for CR/LF

Show( "asterisk", irow, pprev, p )

);

pprev=p;

If( Pat Match( p, Pat Pos( 0 ) + "CHAPTER " ), // roman ==> one, two, ... ten

iCHAPTER += 1;

p = "Chapter " || chaps[iChapter] || ".";

Show( "chapno", irow, pprev, p );

);

if(trim(p)!="",samples = paragraphToSamples( p, dtStory:speaker[irow] ),samples=j(22050,1,0));//* * * paragraph

dtStory:audio[irow] = Gzip Compress( Matrix To Blob( samples, "int", 2, "big" ) );

dtStory:nsamples[irow] = N Rows( samples );

Wait( .1 );

);

Much of the JSL above applies additional fix-ups to the text. The AI narrator does not pause at --, so the code replaces -- with a comma to get the desired pause. That in turn required fixing some quotations that would otherwise begin with a comma. A lot of this is specific to the book and the narrator. At the end, paragraphToSamples makes the 22.05 kHz audio, which is then compressed and stored as a blob in the expression column.
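The fix-ups can be sketched as one Python function that applies the same regular expressions in the same order. This is an illustration, not the author's code. It leaves out the chapter-number replacement. As with the original regex, the Mr. rule also drops the period after a lone capital such as " I.".

```python
import re


def tts_fixups(p: str) -> str:
    """Apply the narrator-specific text fix-ups from the JSL, in the same order."""
    # he was "all" alone -> he was all alone (don't switch voices for one word)
    p = re.sub(r'"([a-zA-Z,]+)"', r"\1", p)
    # "go away"--he said -> "go away," he said (avoid a segment starting with a comma)
    p = p.replace("\"--", ",\" ")
    # the voice doesn't pause at "--", but it does pause at a comma
    p = p.replace("--", ", ")
    # " Mr. Brown" -> " Mr Brown"
    p = re.sub(r"( [A-Z][a-z]?)\.", r"\1", p)
    # a "* * *" section break becomes an empty paragraph (rendered as silence)
    if re.fullmatch(r"[ *\r\n]*", p):
        p = ""
    return p
```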

include("f://redwindows/TTS_wav_support.jsl");

/* on dell 780 main,

python tts2.py

to start the server for text-to-speech

*/

getsamples = Function( {text, speaker, rate},

{fields, req, b, c},

fields = Associative Array();

fields["text"] = text;

fields["speaker_id"] = speaker;

fields["language_id"] = "en";

req = New HTTP Request( URL( "http://192.168.0.57:8080/api/tts" ), Method( "GET" ), Query String( fields ) ); // route is /api/tts on the Flask server

b = req << send;

(Save Text File( "$temp/xxx.wav", b ));

c = (RiffUtil:loadWav( "$temp/xxx.wav" ));

If( speaker == "jenny" & rate != 48000,

c = RiffUtil:resample( c, 48000, rate )

);

If( speaker != "jenny" & rate != 22050,

c = RiffUtil:resample( c, 22050, rate )

);

c;

);

/****

speakers=

{"jenny", "ED\!n", "p225", "p226", "p227", "p228", "p229", "p230", "p231", "p232", "p233", "p234", "p236", "p237", "p238", "p239", "p240", "p241",

"p243", "p244", "p245", "p246", "p247", "p248", "p249", "p250", "p251", "p252", "p253", "p254", "p255", "p256", "p257", "p258", "p259", "p260",

"p261", "p262", "p263", "p264", "p265", "p266", "p267", "p268", "p269", "p270", "p271", "p272", "p273", "p274", "p275", "p276", "p277", "p278",

"p279", "p280", "p281", "p282", "p283", "p284", "p285", "p286", "p287", "p288", "p292", "p293", "p294", "p295", "p297", "p298", "p299", "p300",

"p301", "p302", "p303", "p304", "p305", "p306", "p307", "p308", "p310", "p311", "p312", "p313", "p314", "p316", "p317", "p318", "p323", "p326",

"p329", "p330", "p333", "p334", "p335", "p336", "p339", "p340", "p341", "p343", "p345", "p347", "p351", "p360", "p361", "p362", "p363", "p364",

"p374", "p376"};

bestMaleSpeakers={"p226","p251"/*best*/,"p254","p256"/*alternate*/,"p299","p302"/*alternate*/,"p330"};

bestFemaleSpeakers={"p225","p243","p250","p273"/*best*/,"p274"/*alternate*/,"p294","p300"/*alternate*/};

****/

finalSampleRate = 22050;

malevoice = "p256"/*male*/;

narratorvoice = "p273"/*narrator*/;

femalevoice = "p300"/*female*/;

quotationmark = "\!"";

paragraphToSamples = Function( {paragraph, speaker},

{all, part, parta, rc, currentSpeaker, quoteSpeaker},

quoteSpeaker = Match( Substr( speaker, 1, 3 ), "mal", malevoice, "fem", femalevoice, narratorvoice );

all = J( 0, 1, 0 );// start with empty matrix to join the voice segments together

currentSpeaker = narratorvoice;

// quotation marks toggle between narrator and speaker, always start with narrator

// before first quotation mark. process whole paragraph. possible missing close

// quotation mark at end.

While( Length( paragraph ) > 0,

// break at quotation mark and remove from paragraph. take remainder if no more quotation marks.

rc = Pat Match( paragraph, (Pat Break( quotationmark ) >> part + quotationmark) | Pat Rem() >> part, "" );

If( rc != 1,

Throw( "patmatch?" )

);

If( Length( part ), // leading quotation mark (len==0) will switch from narrator to speaker

parta = getsamples( part, currentSpeaker, finalSampleRate ); // convert text to audio

all = all |/ parta;

);

// alternate speaker/narrator. Typically (ralph said) "Let's go" said ralph, "they are waiting."

If( currentSpeaker == narratorvoice,

currentSpeaker = quoteSpeaker//

, //

currentSpeaker = narratorvoice

);

);

all // return the joined samples

);

The Python code for the local server looks like this (it matches the way getsamples, above, calls it):

# modified from https://github.com/coqui-ai/TTS/discussions/2740#discussion-5367521 , thanks @ https://github.com/masafumimori

import io

import json

import os

from typing import Union

from flask import Flask, request, Response, send_file

from TTS.utils.synthesizer import Synthesizer

from TTS.utils.manage import ModelManager

from threading import Lock

app = Flask(__name__)

manager = ModelManager()

#model_path = None

#config_path = None

speakers_file_path = None

vocoder_path = None

vocoder_config_path = None

#model_name = "tts_models/en/vctk/vits"

#model_name = "tts_models/en/ek1/tacotron2"

# model_name = "tts_models/en/jenny/jenny"

model_path1, config_path1, model_item1 = manager.download_model("tts_models/en/vctk/vits")

# load models

synthesizer1 = Synthesizer(

tts_checkpoint=model_path1,

tts_config_path=config_path1,

tts_speakers_file=speakers_file_path,

tts_languages_file=None,

vocoder_checkpoint=vocoder_path,

vocoder_config=vocoder_config_path,

encoder_checkpoint="",

encoder_config="",

use_cuda=False,

)

model_path2, config_path2, model_item2 = manager.download_model("tts_models/en/jenny/jenny")

# load models

synthesizer2 = Synthesizer(

tts_checkpoint=model_path2,

tts_config_path=config_path2,

tts_speakers_file=speakers_file_path,

tts_languages_file=None,

vocoder_checkpoint=vocoder_path,

vocoder_config=vocoder_config_path,

encoder_checkpoint="",

encoder_config="",

use_cuda=False,

)

print(

" > Available speaker ids: (Set --speaker_idx flag to one of these values to use the multi-speaker model."

)

print(synthesizer1.tts_model.speaker_manager.name_to_id)

lock = Lock()

@app.route("/api/tts", methods=["GET"])
def tts():
    with lock:
        text = request.args.get("text")
        speaker_idx = request.args.get("speaker_id", "")
        language_idx = request.args.get("language_id", "")
        style_wav = request.args.get("style_wav", "")
        style_wav = style_wav_uri_to_dict(style_wav)
        print(f" > Model input: {text}")
        print(f" > Speaker Idx: {speaker_idx}")
        print(f" > Language Idx: {language_idx}")
        if speaker_idx == "jenny":
            wavs = synthesizer2.tts(text, language_name=language_idx, style_wav=style_wav)
            out = io.BytesIO()
            synthesizer2.save_wav(wavs, out)
        else:
            wavs = synthesizer1.tts(text, speaker_name=speaker_idx, language_name=language_idx, style_wav=style_wav)
            out = io.BytesIO()
            synthesizer1.save_wav(wavs, out)
    return send_file(out, mimetype="audio/wav")


def style_wav_uri_to_dict(style_wav: str) -> Union[str, dict]:
    if style_wav:
        if os.path.isfile(style_wav) and style_wav.endswith(".wav"):
            return style_wav  # style_wav is a .wav file located on the server
        style_wav = json.loads(style_wav)
        return style_wav  # style_wav is a gst dictionary with {token1_id : token1_weight, ...}
    return None


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=8080)

The Python code runs on a Linux computer; the TTS support is not available on Windows. HTTP Request doesn't care! Thanks to @masafumimori for the code; it saved me from relearning Flask.
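On the client side, paragraphToSamples splits a paragraph at quotation marks and alternates narrator and speaker voices, and getsamples sends one GET per piece. Here is a Python sketch of that request planning. It assumes the voices chosen in the JSL (p273, p256, p300) and the server address shown in the log below, and it only builds URLs; it does not fetch audio. The function names are hypothetical.

```python
from urllib.parse import urlencode

NARRATOR, MALE, FEMALE = "p273", "p256", "p300"   # voices picked in the JSL above


def voice_segments(paragraph: str, speaker_label: str):
    """Split at quotation marks; the pieces alternate narrator / quoted speaker."""
    if speaker_label.startswith("mal"):
        quoted = MALE
    elif speaker_label.startswith("fem"):
        quoted = FEMALE
    else:
        quoted = NARRATOR
    segments = []
    for k, part in enumerate(paragraph.split('"')):
        voice = NARRATOR if k % 2 == 0 else quoted
        if part:                      # a leading quote yields an empty narrator piece
            segments.append((voice, part))
    return segments


def tts_url(host: str, text: str, voice: str) -> str:
    """GET URL with the query fields the Flask /api/tts route reads."""
    query = urlencode({"text": text, "speaker_id": voice, "language_id": "en"})
    return f"http://{host}/api/tts?{query}"
```

To actually fetch a piece, `urllib.request.urlopen(url).read()` would return the WAV bytes the Flask route sends.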

$ python tts2.py

> tts_models/en/vctk/vits is already downloaded.

> Using model: vits

> Setting up Audio Processor...

| > sample_rate:22050

...

> Setting up Audio Processor...

| > sample_rate:48000

...

| > win_length:2048

> Available speaker ids: (Set --speaker_idx flag to one of these values to use the multi-speaker model.

{'ED\n': 0, 'p225': 1, 'p226': 2, 'p227': 3, 'p228': 4, 'p229': 5, 'p230': 6, 'p231': 7,

...

106, 'p374': 107, 'p376': 108}

* Serving Flask app 'tts2'

* Debug mode: off

INFO:werkzeug:WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:8080

* Running on http://192.168.0.57:8080

INFO:werkzeug:Press CTRL+C to quit

Without any CUDA support, the TTS server runs fast enough on old hardware with the voices chosen above: Windows did not time out waiting for the GET request to return an answer. Answers typically come back in about the time it takes the audio to play, though other voices can take longer. If that became a problem, the Python TTS server could be rewritten to use a polling query, similar to the way the Easy Diffusion server works (below).

(If I had NVIDIA graphics cards with lots of memory and CUDA cores, plus newer computers with bigger power supplies, I'd probably get a 10X to 100X performance boost.)

The TTS server depends on this package:

pip install -U TTS

https://docs.coqui.ai/en/latest/installation.html

Pictures

As of early 2024, a number of sites will generate pictures from text. The free sites tend to be slow, taking one to five minutes per picture. You can also download the software and trained models for Stable Diffusion, which many of those sites use; Easy Diffusion (https://github.com/easydiffusion/easydiffusion) works on Windows and Linux. The code below assumes a set of four Linux machines without any CUDA support and makes a picture every two minutes (faster or slower, depending...). There seem to be several APIs for Stable Diffusion's REST-based web versions; the code below works with the Easy Diffusion version.

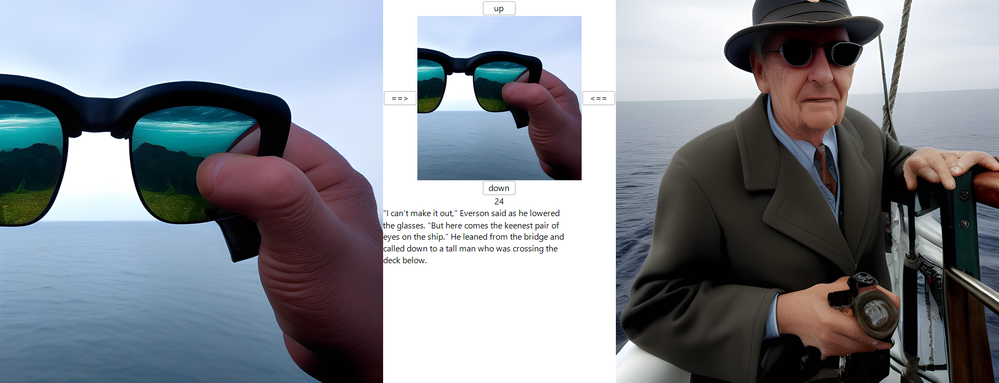

Pictures are created for each paragraph by using the text of the preceding, current, and following paragraphs. Some other keywords are tossed in (color, Dramatic, HD, Landscape, Realistic, Fantasy, Cinematic) and some negative keywords (nsfw, comic, pokemon, text, caption, words, letters, album, panel, religion, poem, prose). Negative words like text, caption, words, pokemon, comic, etc. were an attempt to avoid images like

A few of these wound up in the videos anyway; the negative words are only suggestions that steer the AI model away from such images.

Way above, the TTS API used HTTP GET to retrieve audio samples (a .wav- or .mp3-like file). That worked because the server could compute the answer and return it within a few seconds. If it had taken a minute or so, many tools (Windows, JMP, browsers) would have assumed the connection was broken and produced an error message instead of a result. The Easy Diffusion API below triggers a longer process on the server, several minutes on my slow machines. I did not find a good-enough description of the API, so I used the F12 developer tools in Firefox and Chrome to study the interaction between the browser and the server. In the JSL you'll see where JMP, acting like a browser, POSTs a request with keywords and parameters, then GETs status from the server until the picture is ready. On the initial POST, the server replies with a status URL unique to the request. The JSL is further complicated by keeping a list of servers and status URLs for four machines running in parallel, each independently working on a different row of the data table.
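The POST-then-poll pattern can be sketched in Python with the network call passed in as a function, so the logic can be tested without a server. The sketch assumes the status reply shapes that the JSL below checks (`status`, `output`, `data`, `seed`). The JSL throws on progress replies that contain `step` and `output`; this sketch just keeps waiting. `wait_for_image` is a hypothetical name.

```python
import base64
import re
import time

DATA_URI = re.compile(r"data:image/jpeg;base64,(.*)", re.S)


def wait_for_image(get_status, poll_seconds=1.0, max_polls=600, sleep=time.sleep):
    """Poll get_status() until status == 'succeeded'; return (jpeg_bytes, seed)."""
    for _ in range(max_polls):
        report = get_status()
        if isinstance(report, dict) and report.get("status") == "succeeded":
            output = report["output"]
            if len(output) != 1:
                raise ValueError("unsupported multiple items")
            match = DATA_URI.match(output[0]["data"])
            if match is None:
                raise ValueError("expected a base64 JPEG data URI")
            return base64.b64decode(match.group(1)), output[0]["seed"]
        sleep(poll_seconds)   # not ready yet, or an empty reply: ask again later
    raise TimeoutError("image not ready")
```

In real use, `get_status` would perform the HTTP GET on the host plus the `stream` path returned by the initial POST, then parse the JSON reply.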

The addImage routine was originally designed to add a new image column to the table whenever the row's existing image columns were all filled in; the idea was to leave it running for...a while. In the end, I used images from two columns of different tables made with slightly different parameters.

addImage = Function( {img, irow, seed, request},

{c1, c2, icol},

For( icol = 2, icol < N Cols( dt ), icol += 2,

If( Is Empty( dt[irow, icol] ),

c1 = Column( dt, icol );

c2 = Column( dt, icol + 1 );

Break();

)

);

If( icol >= N Cols( dt ),

c1 = dt << New Column( "pict",

expression,

"None",

Set Property(

"Expression Role",

Expression Role(

"Picture",

MaxSize( 512, 512 ),

StretchToMaxSize( 0 ),

PreserveAspectRatio( 1 ),

Frame( 0 )

)

),

Set Display Width( 522 )

);

c2 = dt << New Column( "meta",

expression,

"None",

Set Property( "Expression Role", ),

Set Display Width( 20 )

);

);

c1[irow] = img;

c2[irow] = Eval Insert( "seed: ^seed^\!nrequest: ^request^" );

);

The multiple servers began as a single localhost. The server table also holds each server's status URL and target row number, so when the status URL eventually returns a picture, it can be stored in the right row.
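The bookkeeping in LHtable and getLH amounts to a small dispatcher. The Python sketch below is a hypothetical restatement, not the author's code. Job submission and status polling are passed in as functions, rows are positive integers, and a target row of 0 means idle, as in the JSL. A real loop should also sleep between polling passes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Host:
    address: str
    status_url: Optional[str] = None
    target_row: int = 0          # 0 means idle, as in LHtable


def idle_host(hosts: List[Host]) -> Optional[int]:
    """Index of the first idle host, or None (getLH returns 0 instead)."""
    for i, host in enumerate(hosts):
        if host.target_row == 0:
            return i
    return None


def dispatch(rows: List[int], hosts: List[Host],
             start_job: Callable[[Host, int], str],
             poll_job: Callable[[str], Optional[object]]) -> Dict[int, object]:
    """Keep every host busy until all rows have a result.

    start_job(host, row) returns a status URL; poll_job(url) returns a result or None."""
    results: Dict[int, object] = {}
    pending = list(rows)
    while pending or any(h.target_row for h in hosts):
        i = idle_host(hosts)
        if pending and i is not None:
            row = pending.pop(0)
            hosts[i].status_url = start_job(hosts[i], row)
            hosts[i].target_row = row
            continue
        for host in hosts:       # all hosts busy, or nothing left to start: poll
            if host.target_row:
                result = poll_job(host.status_url)
                if result is not None:
                    results[host.target_row] = result   # store in the row that asked
                    host.target_row, host.status_url = 0, None
    return results
```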

// these servers need to run the easydiffusion server...more would be better, cuda, etc

// with 4 I get a picture a minute; 1800/60 -> 30 hours to fill in the table.

// my old hardware does not support cuda cards good enough to use them. bummer.

LHtable = [// originally "localhost", now there are N hosts

1 => ["host" => "192.168.0.38:9000", // thinkd.local

"statusURL" => 0, "targetRow" => 0], 2 => ["host" => "192.168.0.37:9000",

// thinkc.local

"statusURL" => 0,

"targetRow" => 0], 3 => ["host" => "192.168.0.36:9000",

// thinkb.local

"statusURL" => 0,

"targetRow" => 0], 4 => ["host" => "192.168.0.35:9000",

// dell9010.local

"statusURL" => 0,

"targetRow" => 0]];

getLH = Function( {},

{i},

For( i = 1, i <= N Items( LHtable ), i += 1,

If( LHtable[i]["targetRow"] == 0,

Return( i )

)

);

Return( 0 );

);

A separate step combines each triple of paragraphs and removes some undesirable characters. I'm not sure why the prompt column is an expression column; it should be character, but it works anyway. This version uses If( 1, ... ) to bypass the test for modest-length paragraphs.

There is no memory in the Stable Diffusion model from one picture to the next. The triple paragraph is a poor attempt to simulate some long-term memory. The characters in the pictures will change hair color, clothing, etc. from image to image. There may be better models on the horizon for this.
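The prompt assembly in the loop below can be sketched in Python. It uses the same character filter and the same " ( current ) previous next" ordering. At the ends of the story, the missing neighbor is replaced by the paragraph itself, just as Max( 1, ... ) and Min( N Rows( ... ), ... ) do in the JSL. The function names are hypothetical.

```python
import re

JUNK = re.compile(r"[^a-zA-Z,'.?]+")   # same character filter as the JSL


def clean(text: str) -> str:
    return JUNK.sub(" ", text)


def triple_prompt(paragraphs, i):
    """Prompt for paragraph i (0-based): ' ( current ) previous next'."""
    prev = paragraphs[max(0, i - 1)]
    nxt = paragraphs[min(len(paragraphs) - 1, i + 1)]
    return " ( " + clean(paragraphs[i]) + " ) " + clean(prev) + " " + clean(nxt)
```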

// pre-pass the story into the table.

dt = New Table( "Untitled", New Column( "prompt", expression ), Set Cell Height( 522 ) );

For( iom = 1, iom <= N Rows( dtStory ), iom += 1,

txtprev = dtStory:paragraph[Max( 1, iom - 1 )];

txtprev = Regex( txtprev, "[^a-zA-Z,'.?]+", " ", GLOBALREPLACE );

txt = dtStory:paragraph[iom];

txt = Regex( txt, "[^a-zA-Z,'.?]+", " ", GLOBALREPLACE );

txtnext = dtStory:paragraph[Min( N Rows( dtStory ), iom + 1 )];

txtnext = Regex( txtnext, "[^a-zA-Z,'.?]+", " ", GLOBALREPLACE );

txt = " ( " || txt || " ) " || txtprev || " " || txtnext;

If( 1 /*300 < Length( txt ) < 700*/, // choose modest length paragraphs

dt << Add Rows( 1 );

//iom += 20;

dt:prompt[N Rows( dt )] = txt;

);

);

This last, monolithic block does all the heavy lifting. It uses the LHtable structure above to keep several servers busy. It was also originally written to experiment with models; notice that the model list is now just sd-v1-5. Similarly, the number of inference steps is just 30, and the outer loop simply grinds through the rows.

The query for this image was similar to all the others, but rerun 30 times with the same random seed.

So, jump in at the While( (iLH = getLH()) == 0, ... ) loop, around line 7 of the block. While there is no idle server, it sends a GET to each server for status, stores the image if it is ready, and marks that server idle. Then, much further down, if there is an idle server, it POSTs a request to start generating the next image. In the HTTP POST request you can see how a parameter was hidden by renaming it with leading Xs: the server won't recognize the renamed key and will most likely use a default value instead. In my case I decided the face correction was not helping...that might have been a bad decision.
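Building the render request as a dictionary and serializing it with `json.dumps` makes the renamed-key trick explicit. It also escapes any quotation marks in the prompt, which Eval Insert would not do. This Python sketch includes only a subset of the fields in the JSL's request, and `render_payload` is a hypothetical name. It assumes, as described above, that Easy Diffusion ignores a key it does not recognize.

```python
import json
import random


def render_payload(prompt_text, model="sd-v1-5", steps=30, seed=None,
                   hide=("use_face_correction",)):
    """JSON body for the /render POST (subset of the fields used in the JSL)."""
    payload = {
        "prompt": " color, Dramatic, HD, Landscape, Realistic, Fantasy, Cinematic, " + prompt_text,
        "seed": seed if seed is not None else random.randint(1, 4294967295),
        "negative_prompt": "nsfw, comic, pokemon, text, caption, words, letters, "
                           "album, panel, religion, poem, prose",
        "num_outputs": 1,
        "num_inference_steps": steps,
        "guidance_scale": 7.5,
        "width": 512,
        "height": 512,
        "use_face_correction": "GFPGANv1.4",
        "use_stable_diffusion_model": model,
    }
    for key in hide:
        # Renaming with leading Xs "comments out" the setting: the server
        # (presumably) ignores the unknown key and falls back to its default.
        payload["XXXX" + key] = payload.pop(key)
    return json.dumps(payload)
```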

While( 1,

For( irow = 1, irow <= N Rows( dt ), irow += 1,

For Each( {modelname},

{/*"wd-v1-3-full", "animagine-xl-3.0", "dreamlike-photoreal-2.0", */"sd-v1-5"/*, "sd21-unclip-h", "sd21-unclip-l"*/

},

For Each( {infsteps}, {30},

While( (iLH = getLH()) == 0,

For( iLH = 1, iLH <= N Items( LHtable ), iLH += 1,

request = New HTTP Request(

url( LHtable[iLH]["statusURL"] ),

Method( "GET" ),

Headers( {"Accept: application/json"}, {"Content-Type: application/json"} )

);

StatusHandle = request << Send;

If( !Is Empty( StatusHandle ),

statusReport = Parse JSON( StatusHandle );

If(

Is Associative Array( statusreport ) & Contains( statusreport, "status" ) &

statusreport["status"] == "succeeded",

// unpack final image data(s)

If( N Items( statusReport["output"] ) != 1,

Throw( "unsupported multiple items" )

);

For( i = 1, i <= N Items( statusReport["output"] ), i++,

addImage(

Open(

Decode64 Blob(

Regex(

statusReport["output"][i]["data"],

"data:image/jpeg;base64,(.*)",

"\1"

)

),

"jpg"

),

LHtable[iLH]["targetRow"],

Char( statusReport["output"][i]["seed"] ),

Char( statusReport["render_request"] )

)

);

Beep();

LHtable[iLH]["targetRow"] = 0;

LHtable[iLH]["statusURL"] = 0;//

, /*else if*/Is Associative Array( statusreport ) & Contains( statusreport, "step" ) &

Contains( statusreport, "output" ),

Throw( "needs work" );

); //

, //else no status update...

Wait( 1 )

);

)

);

If( iLH > 0, // found idle host...

txt = Regex( dt:prompt[irow], "jean", "john", globalreplace, ignorecase ); // try name tweak

txt = Regex( txt, "angelica", "susan", globalreplace, ignorecase ); // ditto

request = New HTTP Request(

url( LHtable[iLH]["host"] || "/render" ),

Method( "POST" ),

Headers( {"Accept: application/json"}, {"Content-Type: application/json"} ),

// Cinematic, Dynamic Lighting, Serene, by Salvador Dalí, Detailed and Intricate, Realistic, Photo, Dramatic, Polaroid, Pastel, Cold Color Palette, Warm Color Palette

JSON(

Eval Insert(

"\[

{"prompt":" color, Dramatic, HD, Landscape, Realistic, Fantasy, Cinematic, ^txt^",

"seed":^char(randominteger(1,4294967295))^,

"negative_prompt":"nsfw, comic, pokemon, text, caption, words, letters, album, panel, religion, poem, prose",

"num_outputs":1,

"num_inference_steps":^char(infsteps)^,

"guidance_scale":7.5,

"width":512,"height":512,

"XXXXuse_face_correction":"GFPGANv1.4",

"use_upscale":"RealESRGAN_x4plus",

"upscale_amount":"4",

"vram_usage_level":"balanced",

"sampler_name":"euler_a",

"use_stable_diffusion_model":"^modelname^",

"clip_skip":false,

"use_vae_model":"",

"stream_progress_updates":true,

"stream_image_progress_interval":2,

"stream_image_progress":false,

"show_only_filtered_image":true,

"enable_vae_tiling":true

}]\"

)

)

);

// occasionally the first send seems to happen when the server is ... asleep? The 2nd always works?

For( itry = 1, itry <= 10, itry += 1,

GenHandle = request << Send;

If( Is Empty( GenHandle ),

If( itry == 10,

Throw( "failed to connect, stable diffusion not running?" )//

, // else

Write( "\!nwaiting" );

Wait( 1 );

)

, //else

Break()

);

);

// above requested a picture. it is not ready yet, but it gave a URL to ask if ready. Below, ask status once/second.

LHtable[iLH]["statusURL"] = LHtable[iLH]["host"] || Parse JSON( GenHandle )["stream"];

LHtable[iLH]["targetRow"] = irow; //

);

)

)

)

);

When that is all done, there may be several data tables of images to combine into a single table. I used a bit of JSL to help me click through 1729 pairs of images, rejecting objectionable ones or choosing the better of each pair.

// interactive picture selection

dt = open("f://redwindows/story.jmp");

dta = open("f://redwindows/pic1024A.jmp");

dtb = open("f://redwindows/pic1024B.jmp");

if(nrows(dt) !=1729 |nrows(dtA) !=1729 |nrows(dtB) !=1729, throw("rows") );

irow=1;

newwindow("chooser",

hlistbox(

vlistbox(abox=mousebox(textbox())),

vlistbox(spacerbox(size(300,1)),

hcenterbox(buttons=vlistbox(

hcenterbox(buttonbox("up",irow=max(1,irow-1);updateRow())),

hcenterbox(hlistbox(

vcenterbox(buttonbox("==>",dt:pict[irow]=dtA:pict[irow];updateRow())),

cbox=mousebox(textbox()),

vcenterbox(buttonbox("<==",dt:pict[irow]=dtB:pict[irow];updateRow()))

)),

hcenterbox(buttonbox("down",irow=min(nrows(dt),irow+1);updateRow()))

)),

hcenterbox(rbox = textbox()),

tbox=textbox()

),

vlistbox(bbox=mousebox(textbox()))

)

);

abox<<setClickEnable( 1 );

abox<<setClick( Function( {this, clickpt, event}, if(event == "Pressed",dt:pict[irow]=dtA:pict[irow];updateRow());if(event == "Released",irow=min(nrows(dt),irow+1);updateRow())));

bbox<<setClickEnable( 1 );

bbox<<setClick( Function( {this, clickpt, event}, if(event == "Pressed",dt:pict[irow]=dtB:pict[irow];updateRow());if(event == "Released",irow=min(nrows(dt),irow+1);updateRow())));

cbox<<setClickEnable( 1 );

cbox<<setClick( Function( {this, clickpt, event}, if(event == "Pressed",irow=min(nrows(dt),irow+1);updateRow())));

updateRow = function({},

(abox<<child)<<delete;

apic=newimage(dtA:pict[irow]);

apic<<scale(.35);

abox<<append(apic);

(bbox<<child)<<delete;

bpic=newimage(dtB:pict[irow]);

bpic<<scale(.35);

bbox<<append(bpic);

(cbox<<child)<<delete;

if("Picture"!=type(dt:pict[irow]),dt:pict[irow]=dtA:pict[irow]);

cpic=newimage(dt:pict[irow]);

cpic<<scale(.15);

cbox<<append(cpic);

rbox<<settext(char(irow));

tbox<<settext(dt:paragraph[irow]);

);

updateRow();

Once the pictures, audio, and paragraphs are all in the same table, they can be combined into a video by driving FFmpeg with Run Program. FFmpeg easily accepts image data on stdin, supplied through Run Program's write mechanism. So build the complete audio file first, then feed the images in one at a time. I chose to make a 30 FPS video with custom transitions between pictures. The bandwidth for uncompressed 4K pictures is...a lot, so I added a small Python program to the Linux pipe to avoid resending the exact same image 30 times a second. Toward the end, I also chose to inject an extra slide at the beginning of each chapter/video.
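The audio-to-video timing rests on simple arithmetic. At 22,050 samples per second and 30 frames per second, each frame covers 22050 / 30 = 735 samples. This Python sketch is a hypothetical helper, not the author's code. It converts per-paragraph sample counts into per-paragraph frame counts by flooring the running total, as the JSL below does with Floor( iRunningSamples / 735 ), so rounding errors don't accumulate over a chapter.

```python
SAMPLE_RATE = 22050
FPS = 30
SAMPLES_PER_FRAME = SAMPLE_RATE // FPS     # 735


def frames_per_paragraph(nsamples):
    """Frames to hold each paragraph's picture so the video stays in sync with the audio."""
    counts, running, shown = [], 0, 0
    for n in nsamples:
        running += n
        total_frames = running // SAMPLES_PER_FRAME   # floor of the running total
        counts.append(total_frames - shown)
        shown = total_frames
    return counts
```

Flooring each paragraph separately would turn [1000, 1000, 205] into 1 + 1 + 0 frames and fall one frame behind the audio; flooring the running total gives 1 + 1 + 1. The extra 30-frame lead-in and trailing entries in frameCounts sit outside these counts.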

chapter0based = 9; // 0..10, 0 is introduction

Include( "f://redwindows/TTS_wav_support.jsl" );

frame = J( 1080 * 2, 1920 * 2, 0 );

dtstory = Open( "/F:/redWindows/story.jmp", invisible );

dtpict = dtstory;//Open( "/F:/redWindows/pic1024B.jmp", invisible );

nn = 0;

imgrows = (N Rows( frame ) - 2048) / 2;

imgrows = imgrows :: (imgrows + 2047);

imgcols = imgrows;

textTop = 200;

textLeft = imgcols[N Cols( imgcols )] + 100;

textRight = N Cols( frame ) - imgcols[1];

textWide = textRight - textLeft;

dtstory << New Column( "position", formula( Col Cumulative Sum( :nsamples ) ) );

dtstory << runformulas;

//

chapters = 1 |/ (dtstory << getrowswhere( Starts With( paragraph, "CHAPTER " ) )) |/ (1 + N Rows( dtstory ));

audioSampleLength = Sum( dtstory:nsamples[chapters[chapter0based + 1] :: (chapters[chapter0based + 1 + 1] - 1)] ); // this chapter's rows only; the next chapter's first row is excluded

nParagraphRows = chapters[chapter0based + 1 + 1] - chapters[chapter0based + 1];

//dtStory = open("y://story.jmp");

sps = 22050;

//dtStory = Open( "f://redwindows/story.jmp" );

allsamples = J( sps + sps + audioSampleLength + sps, 1, 0 );// sps: title slide, sps:lead gray, ... , sps: trail gray

nSamps = sps + sps; // 1 sec silence before and after. extra second before for title

// 22050/30==735 audio samples/frame

// pre-calculate the durations for each frame. there is an extra black frame

// for the second of lead in audio, and an extra at the end.

frameCounts = J( 2 + nParagraphRows, 1, 0 );

pictureRow = J( 2 + nParagraphRows, 1, 0 );

frameCounts[1] = 30;

frameCounts[2 + nParagraphRows] = 30;

iFrameCount = 2;

iRunningSamples = 0;

For( irow = chapters[chapter0based + 1], irow < chapters[chapter0based + 1 + 1], irow += 1,

allsamples[nSamps + 1 :: nSamps + dtStory:nsamples[irow]] = Blob To Matrix( Gzip Uncompress( Eval( dtStory:audio[irow] ) ), "int", 2, "big", 1 );

nSamps += dtStory:nsamples[irow];

If( Mod( irow, 10 ) == 0,

Show( irow );

Wait( 0 );

);

iRunningSamples += dtStory:nsamples[irow];

nframes = Floor( iRunningSamples / 735 );

iRunningSamples -= nframes * 735;

pictureRow[iFrameCount] = irow;

frameCounts[iFrameCount++] = nframes;

);

Show( frameCounts, pictureRow );

f = RiffUtil:WavWriterHeader(

Eval Insert( "Y:\farm\PolarisAudio_Chapter_^chapter0based+1-1^.wav" ),

N Rows( allsamples ),

N Cols( allsamples ),

sps

);

File Size( f );

Save Text File( f, Matrix To Blob( allsamples, "int", 2, "little" ), Mode( "append" ) );

File Size( f );

curFrame = 1;

maxFrame = N Rows( frameCounts );

/* repeater.py - the frames are 33MB each, and repeated many times. This is a pipe program to repeat at the far end without retransmitting.

#!/usr/bin/python3
import sys

source = sys.stdin.buffer
dest = sys.stdout.buffer

while True:
    binary = source.read(4)
    if not binary:
        break  # eof
    reps = int(binary.decode("utf-8"))
    block = source.read(2160*3840*4)
    for i in range(reps):
        dest.write(block)

*/

tran = New Namespace(

"tran"

);

tran.setup = Function( {ir1, ir2},

{p1, p2, red1, green1, blue1, red2, green2, blue2, gray1, gray2, rank1, rank2},

If( ir1,

p1 = Eval( dtpict:pict[ir1] );

tran.dest = p1 << getpixels;//

, //

tran.dest = J( 2048, 2048, RGB Color( "gray" ) );

p1 = New Image( tran.dest );

);

If( ir2,

p2 = Eval( dtpict:pict[ir2] );//

, //

p2 = New Image( J( 2048, 2048, RGB Color( "gray" ) ) )

);

{tran.nc, tran.nr} = p1 << getsize;

tran.firsthalf = (1 :: 4011707)`;

tran.secondhalf = (4011708 :: (tran.nr * tran.nc))`;

{red1, green1, blue1} = p1 << getpixels( "rgb" );

gray1 = Shape( red1 + green1 + blue1, tran.nr * tran.nc, 1 );

{red2, green2, blue2} = p2 << getpixels( "rgb" );

gray2 = Shape( red2 + green2 + blue2, tran.nr * tran.nc, 1 );

rank1 = Rank( gray1 );

tran.y1 = Floor( (rank1 - 1) / tran.nc ) + 1;

tran.x1 = Mod( (rank1 - 1), tran.nc ) + 1;

tran.r1 = red1[rank1];

tran.g1 = green1[rank1];

tran.b1 = blue1[rank1];

rank2 = Rank( gray2 );

tran.y2 = Floor( (rank2 - 1) / tran.nc ) + 1;

tran.x2 = Mod( (rank2 - 1), tran.nc ) + 1;

tran.r2 = red2[rank2];

tran.g2 = green2[rank2];

tran.b2 = blue2[rank2];

);

tran.next = Function( {itrans},

{alpha, beta, x3, y3, xy, r3, g3, b3, firstz, secondz, nframes, fract},

nframes = 30;

// For( itrans = 0, itrans < nframes, itrans += 1,

fract = (itrans + 1) / (nframes); // 0 is already transitioning, 29 is complete

alpha = (Cos( Pi() * fract ) + 1) / 2;// ease in, ease out

beta = 1 - alpha;

x3 = Round( alpha * tran.x1 + beta * tran.x2 );

y3 = Round( alpha * tran.y1 + beta * tran.y2 );

xy = (y3 - 1) * tran.nc + x3;

r3 = alpha * tran.r1 + beta * tran.r2;

g3 = alpha * tran.g1 + beta * tran.g2;

b3 = alpha * tran.b1 + beta * tran.b2;

// workaround problem at 4011707 (works) and 4011708 (fails)

firstz = RGB Color( r3[tran.firsthalf], g3[tran.firsthalf], b3[tran.firsthalf] );

secondz = RGB Color( r3[tran.secondhalf], g3[tran.secondhalf], b3[tran.secondhalf] );

tran.dest[xy[tran.firsthalf]] = firstz;

tran.dest[xy[tran.secondhalf]] = secondz;

//x=[1 2,3 4];x[[2,3,2,3]]=[55,66,77,88];x

//New Window( "", New Image( Shape( dest, nr, nc ) ) );

// should not need shape? Shape( tran.dest, tran.nr, tran.nc );

// rp << Write( Right( Char( 1e6 + 1 ), 4 ) ); //repeater.py expects 4-char repeat count, just 1 of each transition frame

// Wait( 0.1 );

// );

);

New Window( "", bb = Border Box( Text Box( "" ) ) );

rp = Run Program(

executable( "plink" ),

options(

{"dell9010",

// idea from https://stackoverflow.com/questions/34167691/pipe-opencv-images-to-ffmpeg-using-python

"\!"/mnt/nfsc/farm/repeater.py 2>repeater.stderr.txt|ffmpeg", "-y", // overwrite output

"-f", "rawvideo", // tell ffmpeg the stdin data coming in is

"-vcodec", "rawvideo", // "raw" pixels, uncompressed, no meta data

"-s", Eval Insert( "^ncols(frame)^X^nrows(frame)^" ), // raw video needs this meta data to know the size of the image

"-pix_fmt", "argb", // the JSL color values are easily converted to argb with MatrixToBlob

"-r", "30", // input FPS

"-thread_queue_size", "256", // 128 was slow. applies to the following input. is this in frames or some other unit? 8 produces a warning, 1024 is too big?

"-i", "-", // this is the pipe input. everything before this is describing the input data

//"-an", // disable audio, or supply a sound file as another input

"-i", Eval Insert( "/mnt/nfsc/farm/PolarisAudio_Chapter_^chapter0based+1-1^.wav" ), //

"-vcodec", "mpeg4", // encoding for the output

"-b:v", "5000k", // bits per second for the output

Eval Insert( "/mnt/nfsc/farm/polaris_Chapter_^chapter0based+1-1^.mp4" ), // the name of the output

Eval Insert(">ffmpeg.stdout^chapter0based+1-1^.txt 2>ffmpeg.stderr^chapter0based+1-1^.txt\!"")}

),

WriteFunction( // the WriteFunction is called when FFMPEG wants JMP to write some data to FFMPEG's stdin

Function( {this},

//Show( curframe, maxFrame, chapter0based + 1, chapters[chapter0based + 1] );

//Show( rp << canread, rp << isreadeof, rp << canwrite );

//Wait( 0.1 );

write(curframe,"/", maxFrame," ",hptime()/1e6," ");

Try(

If( curFrame < maxFrame,

If( curFrame == 1, // inject title slide

t = Text Box(

"\[

Built with JMP software, FFmpeg, Stable Diffusion

and TTS voices p273 (narrator), p256 (male), and p300 (female)

https://github.com/easydiffusion/easydiffusion

https://pypi.org/project/TTS/

https://github.com/coqui-ai/TTS

Polaris and the Goddess Glorian by Charles B. Stilson

available at https://www.gutenberg.org/ebooks/67121

Compilation Copyright © 2024 Craige Hales

]\",

<<setwrap( 3000 ),

<<setfontsize( 64 ), // larger font for the title slide (paragraphs use 50pt)

<<backgroundcolor( "black" ),

<<fontcolor( "green" )

);

frame[0, 0] = 0;

t = (t << getpicture) << getpixels;

frame[400 :: (400 + N Rows( t ) - 1), 700 :: (700 + N Cols( t ) - 1)] = t;

this << Write( Right( Char( 1e6 + 30 ), 4 ) );

data = Matrix To Blob( -frame, "int", 4, "big" ); // return the argb data (negative JSL color)

If( Length( data ) != 2160 * 3840 * 4,

Throw( "length mis-match " || Char( Length( data ) ) )

);

this << Write( data );

);

// clear old text

frame[0, 0] = 0;

// add new text

t = Text Box(

If( curFrame < N Rows( pictureRow ) & pictureRow[curFrame + 1],

dtstory:paragraph[pictureRow[curFrame + 1]];//

, //

""

),

<<setwrap( textWide ),

<<setfontsize( 50 ), // 50pt handles the largest paragraph ok

<<backgroundcolor( "black" ),

<<fontcolor( "green" )

);

t = (t << getpicture) << getpixels;

frame[textTop :: (textTop + N Rows( t ) - 1), textLeft :: (textLeft + N Cols( t ) - 1)] = t;

// add 30 transition frames. the last one repeats...more.

tran.setup( pictureRow[curFrame], pictureRow[curFrame + 1] );

For( i = 0, i < 30, i += 1, // i == 0 begins a 30-frame (1 second) transition; on the first pass it goes from gray to the first picture during the lead-in silence

Show( curframe, i );

tran.next( i );

this << Write( // frames 0..28 last 1/30 second. the 29th frame sticks for the next cycle, except the last is done

Right( Char( 1e6 + If( i < 29, 1, If( curFrame < N Rows( frameCounts ), frameCounts[curFrame + 1] - 29, 1 ) ) ), 4 )

);

frame[imgrows, imgcols] = tran.dest;

data = Matrix To Blob( -frame, "int", 4, "big" ); // return the argb data (negative JSL color)

If( Length( data ) != 2160 * 3840 * 4,

Throw( "length mis-match " || Char( Length( data ) ) )

);

this << Write( data );// send the data

(bb << child) << delete;

pp = New Image( frame );

pp << scale( .4 );

bb << append( pp );

bb << inval << updatewindow;

Wait( 0 );

);

curFrame += 1;//

, //

Show( "sending eof" );

this << writeEOF;

)

,

Show( exception_msg ); // oops. what went wrong?

this << writeEOF;// tell FFMPEG there is no more data

);

)

),

readfunction( Function( {this}, Write( this << read ) ) )

);

While( rp << canread | rp << canwrite | !(rp << isreadeof),

Show( "waiting" );

Wait( 1 );

);

Show( rp << canread, rp << isreadeof, rp << canwrite );

The attached files are mostly explained above. They will not reproduce this project as-is; expect to put a bit of thought into adapting them. If I left something out, which seems likely, fire away in the comments.
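The custom transition in `tran.setup` and `tran.next` is a brightness-rank morph: both pictures' pixels are ranked by red + green + blue, the k-th darkest pixel of the outgoing picture slides toward the position of the k-th darkest pixel of the incoming one while its color blends toward that pixel's color, and a cosine curve eases the motion in and out over 30 steps. Below is a minimal pure-Python sketch of that technique on a flat, row-major list of (r, g, b) tuples; the function names and image format are assumptions for illustration, and it rounds colors to integers where the JSL keeps fractional values.

```python
import math


def brightness_order(pixels):
    """Indices of the pixels sorted from darkest to brightest (by r + g + b)."""
    return sorted(range(len(pixels)), key=lambda i: sum(pixels[i]))


def transition_frame(src, dst, width, step, nframes=30, canvas=None):
    """One frame of a rank-based morph from src to dst (hypothetical helper).

    src and dst are flat, row-major lists of (r, g, b) tuples of equal size.
    step runs 0..nframes-1; at the last step the result equals dst exactly.
    canvas is the previous frame: positions no moving pixel lands on keep
    their old color. It defaults to a copy of src.
    """
    order1 = brightness_order(src)
    order2 = brightness_order(dst)
    fract = (step + 1) / nframes
    alpha = (math.cos(math.pi * fract) + 1) / 2  # eases from ~1 down to 0
    beta = 1 - alpha
    out = list(src) if canvas is None else list(canvas)
    for i1, i2 in zip(order1, order2):
        y1, x1 = divmod(i1, width)  # position of k-th darkest pixel in src
        y2, x2 = divmod(i2, width)  # position of k-th darkest pixel in dst
        x = round(alpha * x1 + beta * x2)
        y = round(alpha * y1 + beta * y2)
        color = tuple(round(alpha * a + beta * b) for a, b in zip(src[i1], dst[i2]))
        out[y * width + x] = color
    return out
```

Passing each result back in as `canvas` mimics the way `tran.dest` accumulates across the 30 calls to `tran.next`: pixels that no moving point lands on keep their previous color, and the final step overwrites every position, so the last frame is exactly the incoming picture.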

- © 2025 JMP Statistical Discovery LLC. All Rights Reserved.
