JMP Blog

A blog for anyone curious about data visualization, design of experiments, statistics, predictive modeling, and more- JMP User Community

- :

- Blogs

- :

- JMP Blog

- :

- Lessons learned in modeling Covid-19 (Pt 1)

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

We are living in strange times. As of the writing of this post, I’ve been #WFH for two whole weeks now. Once bustling cities now stand like ghost towns. The media, both TV and social, are saturated with one topic: Covid-19, more colloquially referred to as the coronavirus (even though it’s just one of several).

While this pandemic has certainly brought with it a lot of anxiety and hardship, it has also brought with it some interesting challenges. Thanks to researchers like those at Johns Hopkins University and others, we have daily access to data on the number of confirmed cases from countries around the world. This is a boon for data junkies such as myself! But it can also be a curse. Many of us are going to take that data and run with it, recklessly creating plots and charts and models of all kinds, then going on to use those results to make outlandish claims about the extent and severity of the pandemic. My Twitter feed in recent days has been filled with experts calling out charts and articles that do more harm than good and help spread fear faster than any virus could. To paraphrase one statement I found, “a lot of data viz folks are going to have to do some soul-searching after all this is done" (https://twitter.com/wisevis/status/1237021575926472704).

Now this is not to say that plotting and analyzing the data is wrong by any means. I work at JMP, a place filled with data junkies, many of whom are tracking and plotting the data as it comes in. A quick glance through JMP Public will reveal many amazing charts, carefully prepared to present the data in an informative manner without causing alarm. In fact, our very own Byron Wingerd recently gave a webinar and wrote his own blog post on proper plotting of the Covid-19 data. I strongly recommend you check it out as he makes several excellent points and has had experience with this type of data before. The one commonality among all of these is that they are only visualizing the current data. No modeling, no predictions. All very safe.

Well, fearless reader, I must confess that I was not safe. As a certain famous cartoon duck would put it, I “got dangerous”. That’s right… I made predictive models.

Life on the Edge

Now before you grab your torches and pitchforks, hear me out. In making these predictive models, I had no intention to make them public before now. They were intended for my private use only. In fact, the reason I am sharing them now is to illustrate precisely why you shouldn’t put so much confidence in them to begin with. As George Box so eloquently put it, “All models are wrong, but some are useful”. And yes, I know that quote has been used and abused too often to count, but I hope what I’m about to show you puts it in a new light. Just because you fit a model and all the metrics point towards it being highly accurate does not mean it is correct.

Before we dive in, a bit of background info. The data I’ve been using is the cumulative daily count of confirmed cases as recorded by Johns Hopkins University at their GitHub site starting on Jan. 22, 2020. As many have said before, the counts are only as accurate as the testing is comprehensive. In other words, limited testing means less trustworthy data. Furthermore, I have not, nor do I intend to, model the cumulative death counts for what I hope are obvious reasons.

Lesson 1: Know Your Limits

When choosing how to model the cumulative confirmed counts, I chose my model based upon my very, very rudimentary knowledge of epidemiology. You’ve no doubt heard about people using an exponential model. This model fits the rapid acceleration in confirmed cases we’ve been experiencing well. However, I did not use such a model for one very good reason: It is simply not physically accurate.

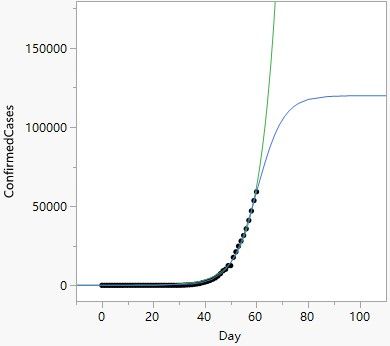

Allow me to illustrate with some real data. Below is a figure showing exponential (in green, assuming this is the model people are talking about) and logistic (in blue) fits to the confirmed counts of Italy (as of March 22, 2020).

Notice that both models fit the data very well. However, the exponential model continues increasing without bound while the logistic model eventually levels off at some higher value. The simplest models epidemiologists use to describe the behavior of illnesses spreading throughout a population is the SIR model. The name comes from how the population is divided: Susceptible to the disease, Infected with the disease, and Removed from the disease. The basic idea is that there is some fixed population of individuals who are susceptible to some new disease and a good portion of these individuals will transition through the phases of Infected and Removed (either through recovery or…). If you implement such a model and keep track of the cumulative counts of infected individuals, you will end up with something that looks a lot like that logistic curve. This is all because of one key fact: There is a fixed population size. Note that the exponential model continues up, up and away like a superhero, oblivious to any so-called population threshold that might seek to oppose it. This clearly does not match reality, where the cumulative number of infected will have to level out at some point either because methods were taken to stop the infections or the entire population was infected.

You can also see this behavior clearly illustrated from several other sources. The recorded counts from China clearly follow this trend. Even the plots shown in the original Flatten the Curve and the simulations of the now famous Washington Post article or even this amazing extension show this trend in the daily counts (which translates to the behavior in cumulative counts I showed in the figure above; think first derivative of that curve). So, in summary, I did not use an exponential model. Instead I started with the logistic model.

This is not to say that a logistic model, or any other sigmoid model (sigmoid = S-shaped), is not without its weaknesses too. Where the exponential errs on the side of being too inflammatory, the logistic would err on the side of being too optimistic. Any sigmoid model can be broken down into roughly three basic parts: the rate of increase, the inflection point, and the asymptote.

The first part is self-explanatory: how fast do the cumulative totals increase from 0. This part is the only one common to both models. The second part, the inflection point, is the point of the model where the rate of increase starts to transition from fast to slow. In the figure above, the inflection point for the logistic model is about where that last point is located, right in the middle of the curve. In terms of daily counts, it’s where the number of cases peaks then starts to fall. The final part, the asymptote, is the maximum possible cases that will have occurred. In terms of daily counts, it’s where the number of cases finally drops to zero (or at least a very small number).

Obviously, the asymptote and the rate of increase are probably the two most popular aspects of the model as they tell you how many total cases to expect and how soon. In fact, the asymptote may be more favored as it tells you how bad you should expect things to be. However, it is also the greatest weakness. Too low of an asymptote will lull you into a false sense of security and understanding. In fact, I would argue that the inflection point is more important. If you don’t know where the inflection point is, you’re in no better position then if you were using an exponential model. I’ll explain more when we look at the data.

In summary, the first lesson when building models is knowing the limits not only of your data, but also your model. If you understand the working parts of your model, you’ll understand its strengths and weakness and, most importantly, where it has the potential to break down. Also, it is important to understand the context in which your model will be operating. A model that is more in line with subject matter expertise should be preferred whenever possible over a quick out-of-the-toolbox model. And finally, understand how your model will be interpreted, used, and possibly abused. In the context of this pandemic, an exponential model says there’s no hope for recovery; a logistic model says it will subside. It’s just a matter of time.

Lesson 2: No Plan Survives the Battlefield

The day: March 23, 2020. The time: early morning I guess? Doesn’t matter. The previous weekend, I had already been playing around with the data and trying out logistic model fits using the Fit Curve platform in JMP (that’s the Logistic 3P model for those following at home). That Monday, I decided to put my models to the test.

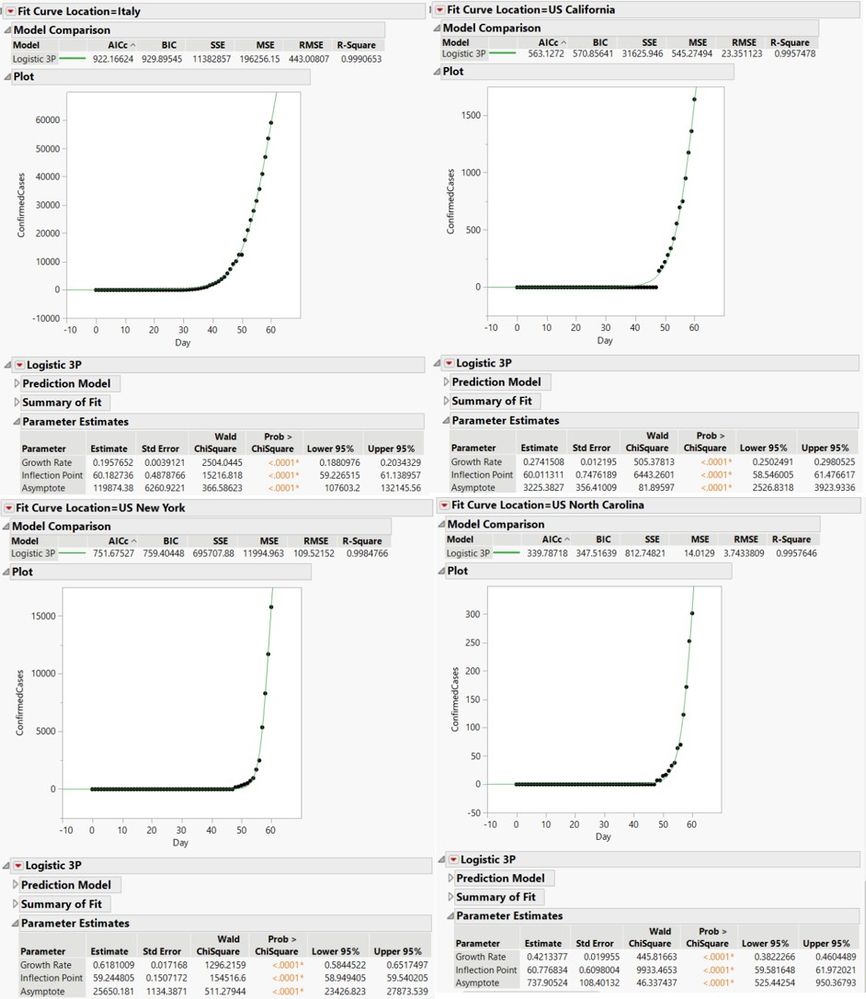

Now, I was only modeling data from four locations: Italy (second most cases behind China at the time), New York, California (two states with large numbers of confirmed cases), and North Carolina (because of course I would). While I used the same model form, I made sure that the individual locations each had their own parameter estimates (using location as a By Group). This is my natural tendency when fitting models within a natural grouping. It also reflects the fact that each location is unique and should be treated differently from one another. How testing and treatment was performed in Italy is very different from here in the US, and there are differences even among individual states. The modeling of the data should reflect this fact.

Below are the fits I got using the daily cumulative counts up to March 22, 2020, roughly two months worth of data:

Look at how amazing those fits are! I can just hear the report saying “What’s this?! Such clean data all lined up and so well-behaved. And that near-perfect trend?!! Oh what a glorious day! You sir are to be commended for such exquisite data! Bravo, good sir, bravo!” I bet many data analysts would see this and seethe with jealousy over how near-perfect these fits are. And who’s to blame them? Just look at how clean the data looks! I'd be surprised if I didn't get such great fits! With such low p-values coupled with R^2 values oh so close to 1, these models should be spot on, right? Well…

As you can probably guess from my overtly sarcastic tone, these models are not the gems the metrics purport them to be. Our first warning sign that something may be amiss is to look at the parameter estimates themselves. Note the estimates of the inflection point (recall I said it was the most important parameter) for each location. They are all roughly the same…and they are all the very last day of recorded data. Yep, the model says that you just happened to have caught the very moment at which the rate of cases will start to slow down and cases should start decreasing very soon! How fortunate! Apologies for the sarcastic tone again, but it should be obvious that this is a fluke of the model estimation procedure. In fact, I didn’t even notice it until I was writing this blog. It’s that easy to miss.

But let’s pretend that you were just like me that day and missed that important fact. Having estimated the model, I then realized that I would have a chance to test it out by keeping track of the model predictions vs. actual counts over the next few days. So, that’s precisely what I did.

Come Tuesday morning (note that there’s a day lag in getting the count data…that’s just the nature of it), I punched in the counts from the previous day and compared them to my model predictions, along with pointwise 95% confidence intervals (because predictions without uncertainty are a quick way to lose my statistics practicing license). So how did I fare?

|

Location |

Actual Count |

Prediction |

95% Lower |

95% Upper |

|

Italy |

63927 |

64721.72 |

63662.40 |

65781.04 |

|

US California |

2108 |

1829.92 |

1758.63 |

1901.21 |

|

US New York |

20884 |

19171.40 |

18616.80 |

19726.00 |

|

US North Carolina |

353 |

386.29 |

367.21 |

405.37 |

One day. One day out of the gate and my accuracy drops to a measly 25%. All of my US models bombed almost immediately. The California and New York models are underestimating the true counts while the North Carolina model has overestimated the true counts. The Italy model has also technically overestimated the true counts, but the lower uncertainty bound still captures it. But hey, maybe it’s all just a fluke? Maybe there’s actually more noise to the data than at first glance and I just need to give the models time to kick in, right?

Here’s the data for the rest of the week up to Thursday’s count.

|

Location |

Day |

Actual Count |

Prediction |

95% Lower |

95% Upper |

|

Italy |

Tuesday |

69176 |

70487.69 |

68933.02 |

72042.36 |

|

Wednesday |

74386 |

76058.94 |

73906.42 |

78211.46 |

|

|

Thursday |

80589 |

81345.24 |

78513.24 |

84177.24 |

|

|

US California |

Tuesday |

2538 |

2041.74 |

1925.52 |

2157.95 |

|

Wednesday |

2998 |

2238.73 |

2067.96 |

2409.51 |

|

|

Thursday |

3899 |

2415.94 |

2184.81 |

2647.07 |

|

|

US New York |

Tuesday |

25681 |

21698.12 |

20692.37 |

22703.87 |

|

Wednesday |

30841 |

23357.28 |

21946.15 |

24768.41 |

|

|

Thursday |

37877 |

24361.26 |

22648.06 |

26074.46 |

|

|

US North Carolina |

Tuesday |

495 |

461.98 |

422.16 |

501.77 |

|

Wednesday |

590 |

530.14 |

463.68 |

596.59 |

|

|

Thursday |

738 |

586.96 |

491.78 |

682.14 |

Soooo…the outlook definitely doesn’t look good. At least for a few days, I had 2 out of 4 correct. But, in general, my models performed horribly. Especially the California and New York models, which are severely underestimating the true counts.

“How did I respond?”, you might ask. “And by I, I meant you”, you also might clarify. Did I crumple into a heap, watching daily as most of my models crashed and burned, smoke and flames rising from the wreckage? Actually, I was quite impressed at the results.

Contradictory as it may sound, I found this to be a perfect example of why you shouldn’t blindly trust the metrics we use in model-assessment. It also presents an excellent reason why you should always validate and test your models, no matter how perfect the metrics make them look. That is why techniques such as cross-validation have become so popular in the statistical modeling community. You could have the most beautiful model, which fits the data almost perfectly. But once it fails to make accurate predictions, it is complete garbage. A model is only as good as its ability to predict beyond the data.

Stay Tuned…

So what happens next? How will our hero rise from this tragedy? What new challenges await him? What new lessons will he learn? And, most importantly, when will I get to go back to the office?

Check out the next part of this series, where I discuss more lessons learned and hopefully answer all your questions. Except that last one. As you can clearly see, I’m in no position to give you an honest answer.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.

- Terms of Use

- Privacy Statement

- Contact Us