Validation for Continuous Processing Data

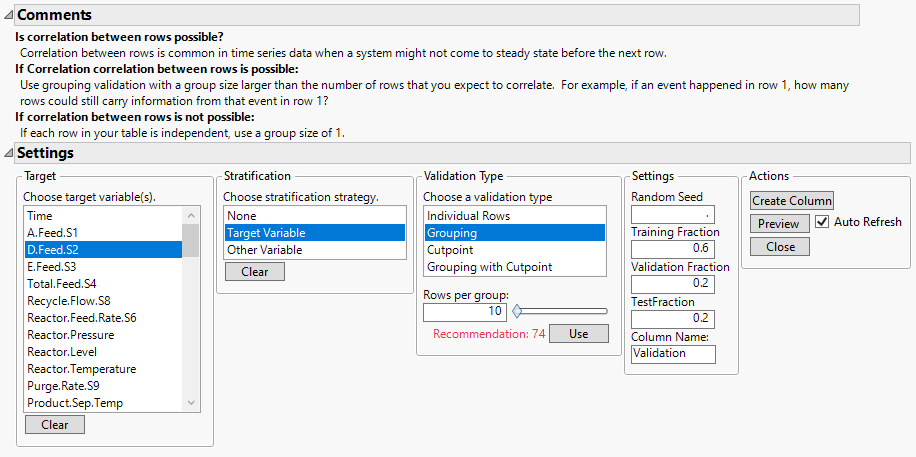

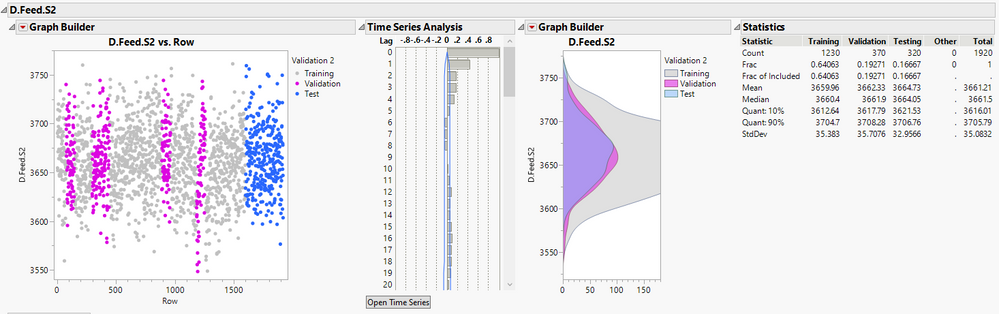

This add-in helps create validation columns for data that may include correlation between rows, whether from autocorrelation or from other factors such as noise that affects sequential rows. Data is grouped by row, and each group is then assigned to training or validation. There is also an option to use grouping and cutpoint validation together, so that validation groups are spread across time while the last rows form the test set. The user is presented with a default recommendation that is likely to be more useful for continuous process data than the standard validation platform. If target variables are selected, graphs are presented to help the user visualize the training/validation split.
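The grouping idea can be sketched in a few lines of Python. This is only an illustration of the concept, not the add-in's JSL code: the function name `grouped_validation`, its parameters, and the label strings are all hypothetical, and the add-in's actual rules for choosing groups may differ.

```python
import random

def grouped_validation(n_rows, group_size, validation_fraction=0.25,
                       test_fraction=0.0, seed=0):
    """Return one label per row: 'Training', 'Validation' or 'Test'.

    Hypothetical helper for illustration only.
    """
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    # Cutpoint part: the last rows of the table become the test set.
    n_test = int(round(n_rows * test_fraction))
    n_model = n_rows - n_test
    # Grouping part: consecutive rows share a group (0,0,0,1,1,1,...).
    n_groups = -(-n_model // group_size)  # ceiling division
    n_val = int(round(n_groups * validation_fraction))
    rng = random.Random(seed)
    val_groups = set(rng.sample(range(n_groups), n_val))
    labels = []
    for row in range(n_model):
        group = row // group_size
        labels.append("Validation" if group in val_groups else "Training")
    labels.extend(["Test"] * n_test)
    return labels

labels = grouped_validation(n_rows=100, group_size=10,
                            validation_fraction=0.3, test_fraction=0.2, seed=1)
```

Because whole groups move together, neighboring rows that share the same noise end up on the same side of the split, while the validation groups are still scattered through the earlier part of the table.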

This was created with JMP Pro versions 16 and 17. It was not tested on standard or older versions of JMP.

Find this project on GitHub, and feel free to submit issues or pull requests there.

Motivation

By default the validation platform assigns rows to training and validation randomly and individually. When rows are independent this is ideal. However, if a row depends on the previous row, this split leads to unrealistic expectations of a model's predictive power, because information in the training set is essentially being used to validate and test the model. In time series this is typically solved using cutpoint validation; in fact @ian_jmp and @julian developed a great add-in for this: Create Time Series Validation Column. For many exploratory models, though, holding out the latest rows is not the best approach, particularly when recent changes prompted the analysis. Picking groups of rows from within the training set increases the likelihood that recent data is still available to the model, while minimizing the chance that individual sources of noise are present in both training and validation groups.

Alternatives

Two other ways to analyze data with correlation between rows are better in some situations but are not always feasible:

- Reduce the data table to truly independent rows, eliminating the possibility that one row is correlated with the previous row. Sometimes it is tricky or time consuming to summarize each column effectively into rows that are independent. As an example, if a user doesn't already know that a 15-minute event in one variable at the start of a process with a 2-day residence time affects their target, the user might not know how to summarize that variable over 2 or more days in order to get independent rows.

- Model or predict the autocorrelation. The time series platform is a great place to start, and including series of lagged variables in multivariate models can provide a lot of additional insight into the process. For complex processes with hundreds of variables this is time consuming and complex, and it tends to be beyond the skill set of new users.
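As a small illustration of the second alternative, lagged copies of a variable can be built by shifting the column down by a fixed number of rows. The helper name `add_lags` is hypothetical and the sketch uses plain Python lists rather than a JMP data table.

```python
def add_lags(column, lags):
    """Return {lag: shifted_values}, where shifted_values[i] == column[i - lag].

    Rows with no earlier value available are filled with None.
    Hypothetical helper for illustration only.
    """
    n = len(column)
    lagged = {}
    for lag in lags:
        pad = [None] * min(lag, n)              # first `lag` rows have no history
        kept = list(column[:max(n - lag, 0)])   # remaining rows shift down
        lagged[lag] = pad + kept
    return lagged

temperature = [10, 20, 30, 40]
lagged = add_lags(temperature, lags=[1, 2])
# lagged[1] is [None, 10, 20, 30]; lagged[2] is [None, None, 10, 20]
```

With hundreds of process variables and several candidate lags each, the number of lagged columns grows quickly, which is part of why this approach becomes time consuming.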

The User Interface

The user selects target variables, if applicable. By default the first of these is used for stratification, although depending on the group and table sizes this doesn't always affect the split. The default validation type is Grouping, and a recommended group size is presented.
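One way to picture stratification at the group level is sketched below. The source does not describe how the add-in stratifies, so this scheme is an assumption: groups are ranked by their mean target value, split into strata of neighboring ranks, and one group per full stratum is sent to validation. The names `stratified_group_split` and `strata_size` are hypothetical.

```python
import random

def stratified_group_split(target, group_size, strata_size=4, seed=0):
    """Label each row 'Training' or 'Validation', one validation group per stratum.

    Assumed scheme for illustration; not taken from the add-in.
    """
    n = len(target)
    n_groups = -(-n // group_size)  # ceiling division
    # Mean target value of each group of consecutive rows.
    means = []
    for g in range(n_groups):
        block = target[g * group_size:(g + 1) * group_size]
        means.append(sum(block) / len(block))
    # Rank groups from lowest to highest mean target.
    order = sorted(range(n_groups), key=lambda g: means[g])
    rng = random.Random(seed)
    val_groups = set()
    for start in range(0, n_groups, strata_size):
        stratum = order[start:start + strata_size]
        if len(stratum) == strata_size:  # skip a trailing partial stratum
            val_groups.add(rng.choice(stratum))
    return ["Validation" if i // group_size in val_groups else "Training"
            for i in range(n)]

labels = stratified_group_split(list(range(80)), group_size=10, strata_size=4)
```

With few groups there are few strata, so the stratified choice differs little from a purely random one, which is consistent with stratification not always affecting the split.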

Some information on target variables is displayed to help the user identify an appropriate group size.

'Under the Hood' Details

The recommended group size is based on a time series analysis of each target variable. The platform picks a lag time where the next several rows have an autocorrelation smaller than 0.1, then adjusts the result by ensuring a minimum number of groups in small tables and increasing the group size in large tables.
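A rough Python sketch of this kind of rule follows. It is not the add-in's implementation, and several details are assumptions: the run length of 3 lags, the use of the absolute autocorrelation, the minimum of 5 groups, and the fallback to the largest lag examined when no quiet run is found. The adjustment for large tables is left out because the source does not say how it works. All function names are hypothetical.

```python
import random

def autocorrelation(values, lag):
    """Sample autocorrelation of a sequence at a positive lag."""
    n = len(values)
    if n == 0:
        return 0.0
    mean = sum(values) / n
    denom = sum((v - mean) ** 2 for v in values)
    if denom == 0:
        return 0.0
    num = sum((values[i] - mean) * (values[i + lag] - mean)
              for i in range(n - lag))
    return num / denom

def first_quiet_lag(acf, threshold=0.1, run_length=3):
    """First lag whose next `run_length` autocorrelations are all below threshold.

    acf[0] holds lag 1. Returns None if no such run exists.
    """
    for start in range(len(acf) - run_length + 1):
        window = acf[start:start + run_length]
        if all(abs(r) < threshold for r in window):
            return start + 1
    return None

def cap_group_size(size, n_rows, min_groups=5):
    """Shrink the group size so a small table still yields min_groups groups."""
    return max(1, min(size, n_rows // min_groups))

def recommend_group_size(values, threshold=0.1, run_length=3,
                         min_groups=5, max_lag=40):
    max_lag = min(max_lag, len(values) // 2)
    acf = [autocorrelation(values, lag) for lag in range(1, max_lag + 1)]
    lag = first_quiet_lag(acf, threshold, run_length)
    if lag is None:
        lag = max(1, max_lag)  # assumed fallback: largest lag examined
    return cap_group_size(lag, len(values), min_groups)

# Example: noise smoothed with a 10-row moving average, so nearby rows correlate.
rng = random.Random(0)
noise = [rng.gauss(0, 1) for _ in range(310)]
series = [sum(noise[i:i + 10]) / 10 for i in range(300)]
size = recommend_group_size(series)
```

Requiring a run of quiet lags, rather than a single one, avoids stopping at a lag where the autocorrelation dips below the threshold only briefly.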

Changes

Here are the updates in the latest version. A full list of changes is available in CHANGELOG.md on GitHub.

- View change log from UI on About screen

- Calculates group size limits using only rows that are not excluded.

- Only show numeric columns in list of potential Target and Stratification variables.

- Target columns sometimes reset to selected columns in the table.

- Correct validation column name was not always used.

- If Auto-refresh option is turned off some values are not updated.

Feedback

We are looking for suggestions and ideas, both related to this user interface and for other ways to solve this problem. For philosophical discussions, feel free to make a new post in the Discussion forum and tag @ih and @DrewLuebe to get our attention. That will give plenty of room for discussion.

This is great and very well explained. I believe JMP needs to better support sensor data.

For example, K-fold is again a random split.

While having a time-series split will also help:

https://scikit-learn.org/stable/modules/cross_validation.html#time-series-split

@FN I agree, this would make the built-in cross validation much more useful for this type of data. You haven't already put in a wish list item for this have you?
