
JMP Add-Ins: Download and share JMP add-ins (JMP User Community)


Machine Learning with XGBoost

Purpose

Here is my first application built with JMP Application Builder. It uses the connection between JMP and Python to run a complex machine learning algorithm.

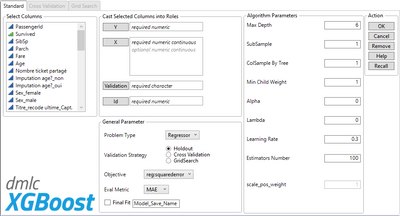

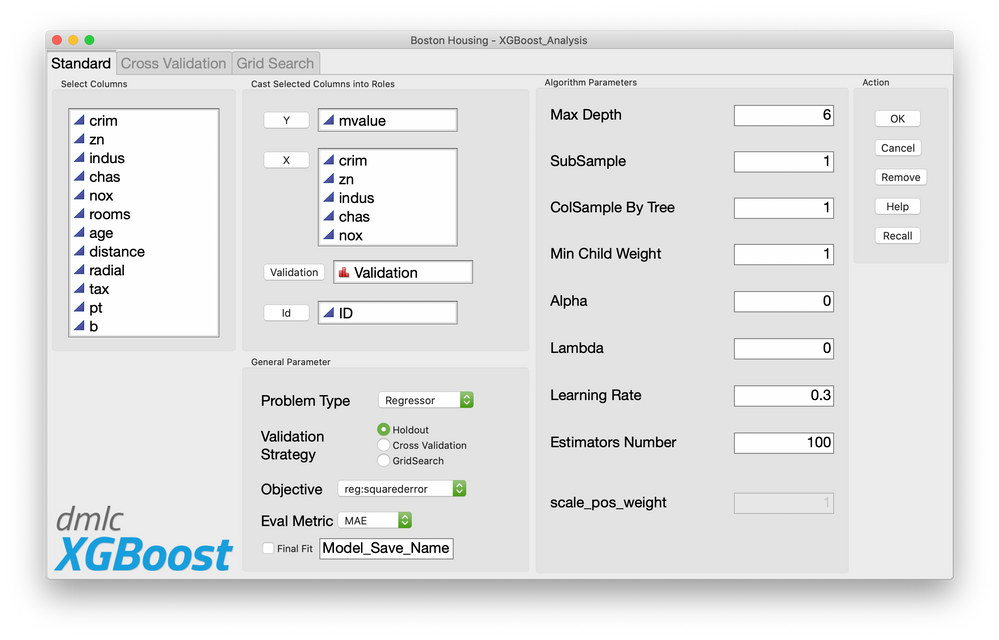

The app launches a graphical user interface written in JSL and connects that interface to the XGBoost machine learning API.

How to Use

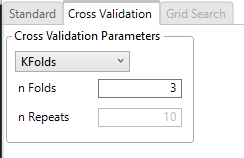

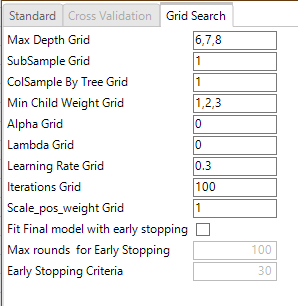

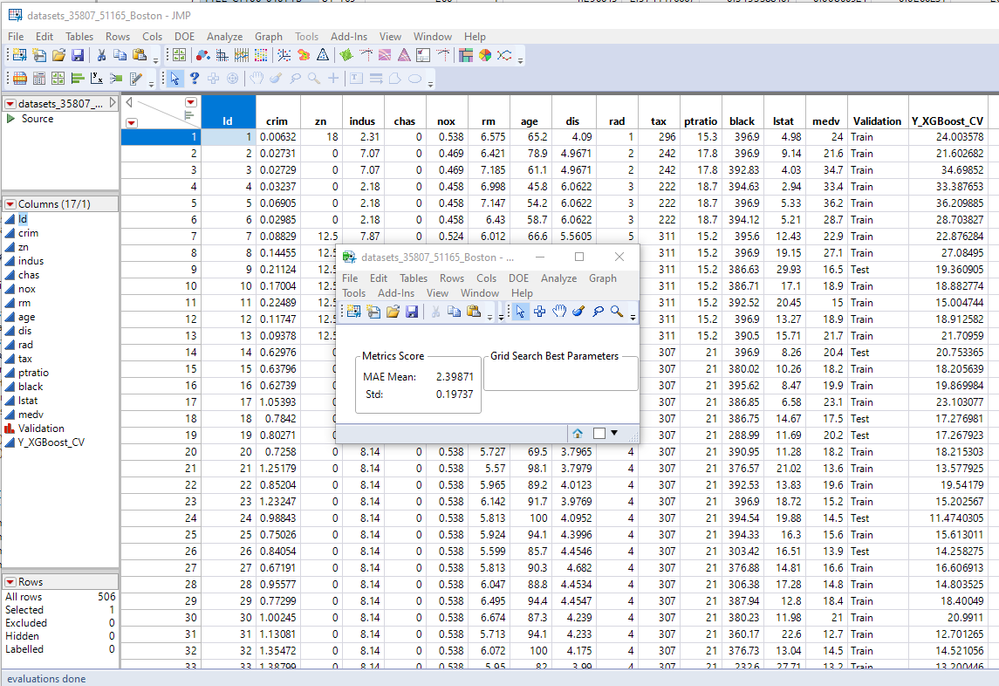

I have implemented three validation schemes: holdout, cross-validation and grid search. Both regression and classification are supported, with a few objectives and the classical metrics.
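The three validation schemes can be illustrated with a small dependency-free Python sketch. It is not the add-in's (private) code: `holdout_split`, `kfold_splits` and `param_grid` are hypothetical helpers, and in practice scikit-learn's `train_test_split`, `KFold` and `GridSearchCV` cover the same ground.

```python
import itertools
import random


def holdout_split(n_rows, valid_fraction=0.2, seed=0):
    """Shuffle row indices once and cut them into train / validation sets."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    n_valid = round(n_rows * valid_fraction)
    return idx[n_valid:], idx[:n_valid]


def kfold_splits(n_rows, k=5, seed=0):
    """Yield (train, validation) index lists; every row is validated exactly once."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, valid in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, valid


def param_grid(grid):
    """Expand {name: [candidate values]} into every hyperparameter combination."""
    names = sorted(grid)
    for values in itertools.product(*(grid[name] for name in names)):
        yield dict(zip(names, values))


# Grid search: score every combination (typically with k-fold CV) and keep the best one.
grid = {"max_depth": [3, 6], "learning_rate": [0.05, 0.1]}
for params in param_grid(grid):
    print(params)
```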

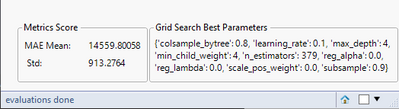

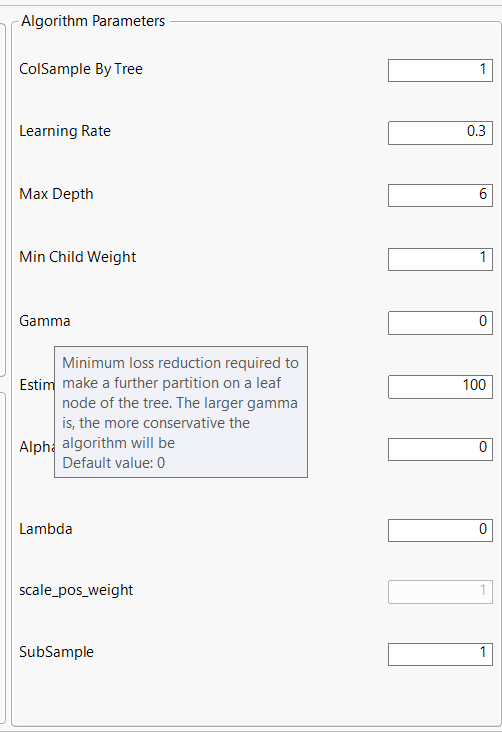

At the end of training, a report gives the metric values on both the training and validation sets, or their mean and standard deviation for cross-validation. For grid search training, the report gives the best hyperparameters, and early stopping can optionally be used. You can also export the Python model to predict new data without retraining it (this must be done in a Python environment).
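For cross-validation, the report summarises the metric across folds. Below is a minimal sketch of that summary. It assumes RMSE as the metric and the sample standard deviation, and the add-in may use a different convention. `rmse` and `cv_report` are hypothetical names.

```python
import math
import statistics


def rmse(y_true, y_pred):
    """Root mean squared error for one set of predictions."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def cv_report(folds):
    """folds: one (y_true, y_pred) pair per validation fold (at least two folds).
    Returns the mean and sample standard deviation of the per-fold RMSE."""
    scores = [rmse(y_true, y_pred) for y_true, y_pred in folds]
    return statistics.mean(scores), statistics.stdev(scores)


folds = [([0, 0], [1, 1]), ([0, 0], [2, 2]), ([0, 0], [3, 3])]
mean, std = cv_report(folds)
print(f"RMSE = {mean:.3f} +/- {std:.3f}")  # → RMSE = 2.000 +/- 1.000
```

For the export option, the Python code reproduced in the log further down this page saves the fitted model with `joblib.dump`. In a separate Python session, the file can presumably be reloaded with `joblib.load(path)` and its `predict` method applied to new data that has the same feature columns.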

You should therefore check that JMP can handle the link with Python before using the app.

For the new 2024 release:

Many updates were needed following upgrades of the Python libraries, so I took the opportunity to add features I was missing in practical use and to fix quite a few bugs.

- Column names are now forced to follow a fixed pattern to avoid conflicts between Python and JMP (I remove all special characters, punctuation and spaces). I also use the option introduced in JMP 16 to force a dot as the decimal separator, which avoids some crashes I had previously.

- I now also check that responses are in the correct format for classification problems. They must be numeric and start at 0 because of changes made to XGBoost, presumably linked to NumPy changes. I also fix a class problem with binary responses that could occur in some cases with the first version of the add-in.

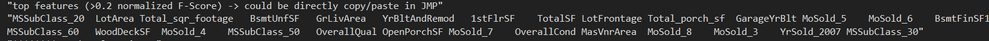
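The column-name clean-up can be sketched in Python as below. The add-in does this on the JMP side and its exact rule is not published, so several details here are assumptions:

- the regular expression keeps only ASCII letters, digits and underscores, which also drops accented letters;
- names that collapse to the same string get `_2`, `_3` suffixes;
- `sanitize_column_names` is a hypothetical helper.

```python
import re


def sanitize_column_names(names):
    """Strip spaces, punctuation and special characters from column names.
    If two names collapse to the same string, append _2, _3, ... (assumed rule)."""
    used = set()
    result = []
    for name in names:
        # Keep ASCII letters, digits and underscores only; fall back to "col" if nothing is left
        base = re.sub(r"[^0-9A-Za-z_]", "", name) or "col"
        candidate, n = base, 1
        while candidate in used:
            n += 1
            candidate = f"{base}_{n}"
        used.add(candidate)
        result.append(candidate)
    return result


print(sanitize_column_names(["Sale Price ($)", "Lot-Area", "Sale.Price"]))
# → ['SalePrice', 'LotArea', 'SalePrice_2']
```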

- Added a checkbox to keep the generated train and test datasets. You can then export them as CSV files and work on the same basis when you combine this add-in with classic Python (and therefore other ML algorithms, for even more fun ^^).

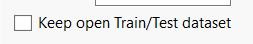
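Once the kept train and test tables have been exported from JMP as CSV files, the same split can be reloaded in plain Python and fed to another algorithm. Below is a standard-library sketch with several assumptions:

- the file name and contents are invented;
- `load_xy` is a hypothetical helper;
- all feature and response columns are assumed to be numeric.

With pandas, `pd.read_csv` would be the usual shortcut.

```python
import csv
import os
import tempfile


def load_xy(path, target, features):
    """Read an exported CSV into a feature matrix X (list of rows) and a response list y."""
    X, y = [], []
    with open(path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            X.append([float(row[c]) for c in features])
            y.append(float(row[target]))
    return X, y


# Tiny stand-in for a train table exported from JMP (hypothetical content)
train_csv = os.path.join(tempfile.gettempdir(), "train_export.csv")
with open(train_csv, "w", newline="", encoding="utf-8") as f:
    f.write("Id,RM,LSTAT,MEDV\n1,6.5,4.98,24.0\n2,6.4,9.14,21.6\n")

X_train, y_train = load_xy(train_csv, target="MEDV", features=["RM", "LSTAT"])
print(X_train, y_train)
```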

- Added the 'gamma' hyperparameter (a.k.a. 'min_split_loss'). It controls tree regularisation by specifying the minimum reduction in the loss function required to justify splitting a node further.

- The model's hyperparameters are now always displayed after fitting, in the same order as in the add-in. This makes it easier to relate them to the model's performance and to copy them back into the add-in (when going from grid search to cross-validation, for example).

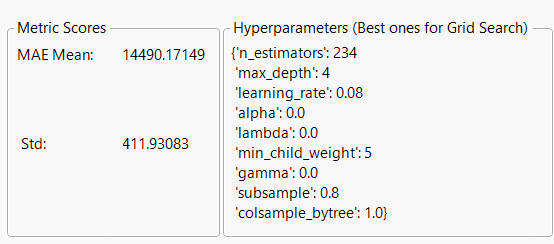

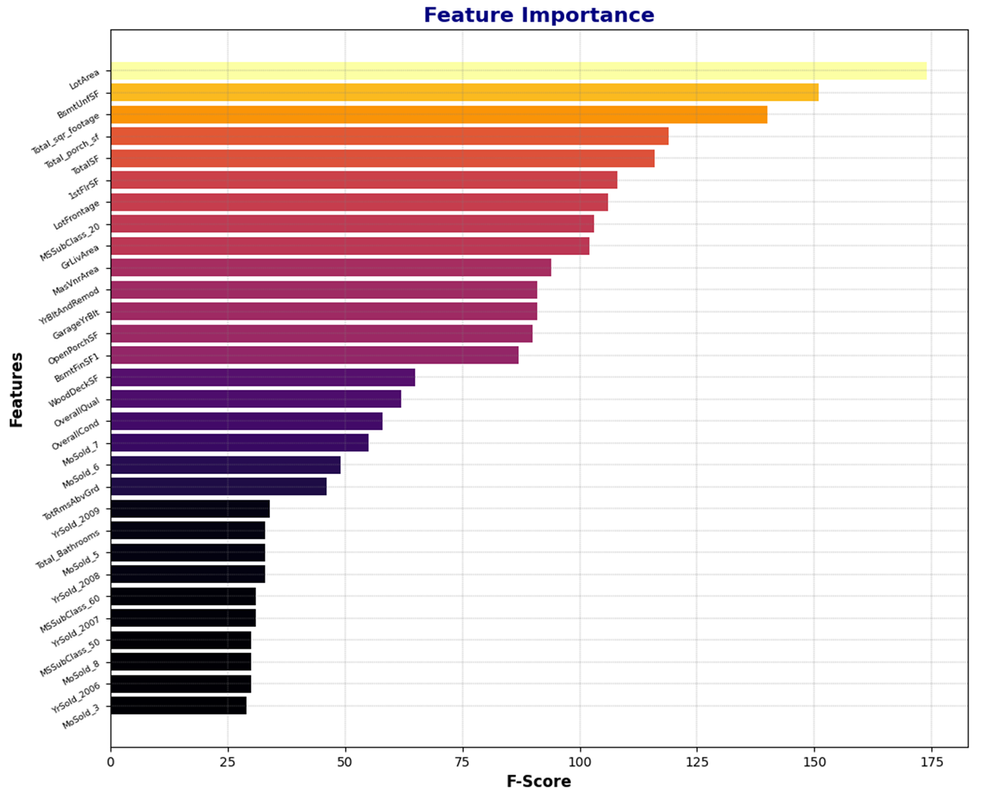
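The XGBoost paper (Chen & Guestrin, 2016) gives the gain of splitting a node into left and right children as

½ [G_L²/(H_L+λ) + G_R²/(H_R+λ) − (G_L+G_R)²/(H_L+H_R+λ)] − γ

where:

- G and H are the sums of the loss gradients and Hessians in each child;
- λ is the L2 regularisation weight;
- γ is `gamma`.

A split is only kept when this value is positive, that is, when the loss reduction exceeds `gamma`. The sketch below is illustrative; `split_gain` is a hypothetical name and the gradient values are made up.

```python
def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Loss reduction obtained by a split, minus the gamma (min_split_loss) penalty."""
    def score(g, h):
        return g * g / (h + lam)

    return 0.5 * (score(g_left, h_left) + score(g_right, h_right)
                  - score(g_left + g_right, h_left + h_right)) - gamma


# The same candidate split evaluated with increasing gamma (lambda set to 0 for readability)
for gamma in (0.0, 1.0, 3.0):
    gain = split_gain(2.0, 2.0, -2.0, 2.0, lam=0.0, gamma=gamma)
    print(gamma, gain, "keep" if gain > 0 else "prune")
```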

- The add-in now retrieves the list of features used by the trained model, normalised by F score, and keeps those whose normalised value is greater than 0.20. It prints them in the log in a format that can be copied and pasted directly into JMP, so they are easy to reuse in another analysis. The list is sorted by decreasing F score.

- Added a matplotlib output, so the model's feature importance can be displayed directly without exporting it and importing it into a Python IDE. This was planned for my initial release, but JMP 15 systematically crashed as soon as matplotlib was imported. That seems to be resolved in JMP 17 (I haven't tested JMP 16). The graph adapts to the number of features displayed (font size and margins).
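The feature-list step (normalise the F scores, keep those above 0.20, sort in decreasing order, print something pasteable into JMP) could look like the sketch below. It rests on assumptions not confirmed by the add-in:

- the raw scores are XGBoost's "weight" importance, which `plot_importance` labels "F score" by default and which a fitted model exposes via `model.get_booster().get_score(importance_type="weight")`;
- normalisation divides by the largest score;
- the output is a JSL list of column references.

`jmp_feature_list` is a hypothetical helper.

```python
def jmp_feature_list(scores, threshold=0.20):
    """Normalise importance scores by the maximum, keep those above the threshold,
    sort them in decreasing order and format them as a JSL list of column references."""
    if not scores:
        return "{}"
    top = max(scores.values())
    normalised = {name: value / top for name, value in scores.items()}
    kept = sorted((name for name, v in normalised.items() if v > threshold),
                  key=lambda name: normalised[name], reverse=True)
    # Plain :Name references work because column names were sanitised beforehand
    return "{" + ", ".join(":" + name for name in kept) + "}"


# In practice: scores = my_model.get_booster().get_score(importance_type="weight")
scores = {"LSTAT": 50, "RM": 40, "CRIM": 5, "AGE": 15}
print(jmp_feature_list(scores))  # → {:LSTAT, :RM, :AGE}
```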

Example on a classic dataset ("Boston house prices": https://www.kaggle.com/competitions/home-data-for-ml-course/data):

System Configuration

Add-in developed and tested using the following system configuration:

JMP 17

Python 3.11 or 3.12 with numpy, pandas, pathlib, multiprocess, scikit-learn, xgboost

Tested on classic datasets (Titanic, Boston housing, ...)
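You can check the Python side of this configuration by running a small script in the Python installation JMP is set up to use. It lists which of the required packages can be found and their versions. This is a sketch; `check_packages` is a hypothetical helper. `multiprocess` is checked under the name given above; it is a separate PyPI package from the standard-library `multiprocessing`.

```python
from importlib import metadata
from importlib.util import find_spec

# Import name -> distribution name used by pip/conda (they differ for scikit-learn).
# pathlib is omitted: it has been part of the standard library since Python 3.4.
REQUIRED = {
    "numpy": "numpy",
    "pandas": "pandas",
    "multiprocess": "multiprocess",
    "sklearn": "scikit-learn",
    "xgboost": "xgboost",
}


def check_packages(required=REQUIRED):
    """Return {module: version string, or None if the module cannot be found}."""
    report = {}
    for module, dist in required.items():
        if find_spec(module) is None:
            report[module] = None
            continue
        try:
            report[module] = metadata.version(dist)
        except metadata.PackageNotFoundError:
            report[module] = "installed (version unknown)"
    return report


for module, version in check_packages().items():
    print(f"{module}: {version or 'MISSING'}")
```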

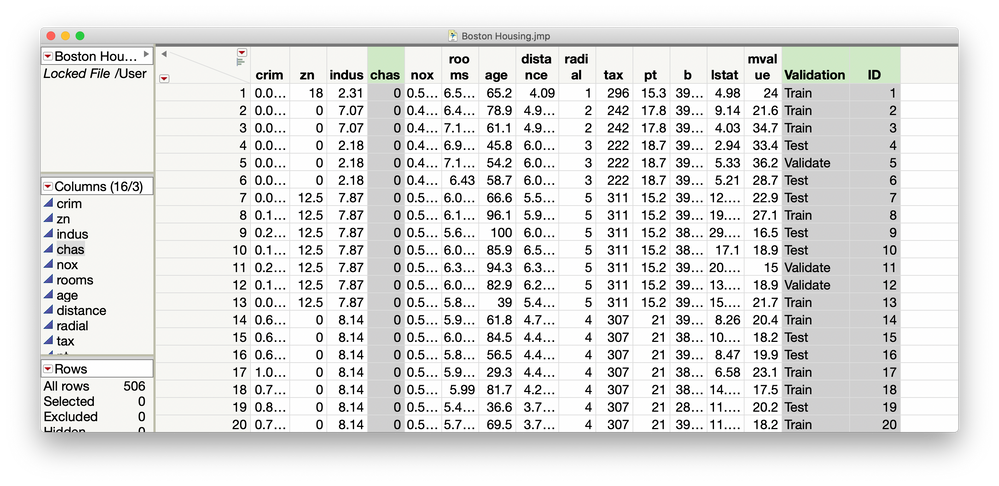

Thanks for making this available! I am attempting to run it on the Boston Housing data, adjusted slightly to include an appropriate validation column and a numeric ID column and with the "chas" variable as a numeric indicator. (See screenshot below.) I am running JMP Pro 15.1 on MacOS 10.15.3 with Python 3.8.2 and numpy 1.18.2, pandas 1.0.3, scikit-learn 0.23.1, and XGBoost 1.1.0. I am copying the log below. Obviously something is working for a bit, but then Python crashes. In the end, I get the warning that "Send Expects Scriptable Object in access or evaluation of 'Send'." I imagine this error happens because Python crashed and didn't create the object to be sent.

Any ideas on what might be going wrong? I'm no scripting expert, and on top of that I don't understand some of the French output in the log ;)

Thanks!

Ruth

```
"*** DEBUG Report: Start"
"******* Update function OK"
"**** Values initialized"
2
"**** OK before launching the algorithm"
"***** The working table is:"
DataTable("Boston Housing.jmp")
/Library/Frameworks/Python.framework/Versions/3.8/lib/python38.zip
/Library/Frameworks/Python.framework/Versions/3.8/lib/python3.8
/Library/Frameworks/Python.framework/Versions/3.8/lib/python3.8/lib-dynload
/Library/Frameworks/Python.framework/Versions/3.8/lib/python3.8/site-packages
/Library/Frameworks/Python.framework/Versions/3.8/lib/python3.8/site-packages/aeosa
/**********/
```

```python
# Import the Python libraries
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_absolute_error
from sklearn.metrics import mean_squared_error
from xgboost import XGBRegressor
from joblib import dump, load
from pathlib import Path

# Optional
# from xgboost import plot_tree

# Do not display Python warnings
import warnings
warnings.simplefilter("ignore")

try:
    # Build DataFrames from the JMP data tables
    # (dt_train, dt_valid, dt_test, colId, colY, liste_colonne and the
    # hyperparameter variables used below are expected to be sent from JMP)
    data_frame_train = pd.DataFrame(dt_train)
    data_frame_valid = pd.DataFrame(dt_valid)
    data_frame_test = pd.DataFrame(dt_test)

    # Keep the Id column to reuse it when building the prediction DataFrames
    Id_train = data_frame_train[colId]
    Id_valid = data_frame_valid[colId]
    Id_test = data_frame_test[colId]

    # Set the DataFrame index to Id
    # (set_index returns a new DataFrame: without assignment these calls have no effect)
    data_frame_train.set_index(colId)
    data_frame_valid.set_index(colId)
    data_frame_test.set_index(colId)

    # Keep an unmodified copy of the train table, indexed by Id (same remark)
    data_frame_train_initial = pd.DataFrame(dt_train)
    data_frame_train_initial.set_index(colId)

    # Response y
    y_train = data_frame_train[colY]
    y_valid = data_frame_valid[colY]
    y_test = data_frame_test[colY]

    # Features: the columns selected in JMP, passed in liste_colonne
    X_train = data_frame_train[liste_colonne]
    X_valid = data_frame_valid[liste_colonne]
    X_test = data_frame_test[liste_colonne]

    # MODELLING: the model is an XGBoost regressor
    # my_model = XGBRegressor(n_estimators=100, learning_rate=0.05, random_state=0)
    # param_dist = {'n_estimators': 2, 'learning_rate': 0.05, 'random_state': 0}

    # WARNING: variable transfer is tricky because an integer arrives as a decimal,
    # and XGBoost does not always accept decimals for these parameters,
    # so they are converted to integers with int()
    Int_Nb_Iterations = int(Nb_Iterations)
    Int_Max_Depth = int(Max_Depth)
    Int_Min_Child = int(Min_Child)

    # Assign the parameters
    max_depth = Int_Max_Depth
    min_child_weight = Int_Min_Child
    subsample = Sub_Sample
    colsample_bytree = Col_Sample
    objective = Objective
    num_estimators = Int_Nb_Iterations
    learning_rate = Learning_Rate

    my_model = XGBRegressor(
        max_depth=max_depth,
        min_child_weight=min_child_weight,
        subsample=subsample,
        colsample_bytree=colsample_bytree,
        objective=objective,
        n_estimators=num_estimators,
        learning_rate=learning_rate,
    )

    # Fit the model
    my_model.fit(X_train, y_train)

    # Predictions on the train and validation sets
    predictions_sur_train_XGBoost = my_model.predict(X_train)
    predictions_sur_validation_XGBoost = my_model.predict(X_valid)

    # Compute the score (scikit-learn metrics take y_true first, then y_pred)
    if Type_Score == 'MAE':
        score_train = mean_absolute_error(y_train, predictions_sur_train_XGBoost)
        score_valid = mean_absolute_error(y_valid, predictions_sur_validation_XGBoost)
    elif Type_Score == 'RMSE':
        # mean_squared_error returns the MSE: take the square root to get the RMSE
        score_train = mean_squared_error(y_train, predictions_sur_train_XGBoost) ** 0.5
        score_valid = mean_squared_error(y_valid, predictions_sur_validation_XGBoost) ** 0.5
    else:
        print("Unknown score type:", Type_Score)

    # Export predictions on the train and validation tables
    output_train_XGBoost = pd.DataFrame({colY: predictions_sur_train_XGBoost, colId: Id_train})
    output_validation_XGBoost = pd.DataFrame({colY: predictions_sur_validation_XGBoost, colId: Id_valid})

    # Concatenate train and validation to retrain on all the data before predicting y_test
    X_full = pd.concat([X_train, X_valid])
    y_full = pd.concat([y_train, y_valid])

    # Retrain on train + validation and predict the test set
    my_model.fit(X_full, y_full)
    predictions_sur_test_XGBoost = my_model.predict(X_test)
    output_test_XGBoost = pd.DataFrame({colY: predictions_sur_test_XGBoost, colId: Id_test})

    # ------------------------- Graphical output attempts: crash because this does not work in JMP 15.1
    # fit = alg.fit(dtrain[ft_cols].values, dtrain['y'].values)
    # ft_weights = pd.DataFrame(fit.feature_importances_, columns=['weights'], index=ft_cols)
    # model = xgb.train(params, d_train, 1000, watchlist)
    # fig, ax = plt.subplots(figsize=(12,18))
    # xgb.plot_importance(model, max_num_features=50, height=0.8, ax=ax)
    # plt.show()
    # ft_weights = my_model.feature_importances_
    # import matplotlib.pylab as plt
    # from matplotlib import pyplot
    # from xgboost import plot_importance
    # plot_importance(model, max_num_features=10)  # top 10 most important features
    # plt.show()
    # -------------------------

    # Optionally save the fitted model to disk
    if int(File_Save_Check) == 1:
        data_folder = Path(File_Save_Directory)
        file_to_save = data_folder / File_Save_Name
        dump(my_model, file_to_save)

except Exception as exc:
    # Print the actual error instead of hiding it behind a generic message
    print("Python has crashed, check your parameters:", repr(exc))
```

```
/**********/
Send Expects Scriptable Object in access or evaluation of 'Send'
```

Hi,

Yes, the problem is indeed in the Python part, sorry about that.

It is odd, because the Python code has not been executed at all: it crashed before the "try" statement! I have never had that kind of crash.

But it is tricky to debug from your log, because JMP doesn't write any of Python's error messages; it just echoes the Python code without explanation. When Python runs into a problem inside JMP, there is no explicit error message. That is why I had to develop all of the Python code outside JMP and then integrate it into JMP.
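One way to recover the missing error text is to catch the exception inside Python and store the formatted traceback in a string variable. JMP could then read that variable with `Python Get( error_message )`, as in the Scripting Index example. This is a sketch: `error_message` and `run_model` are hypothetical names, and the `Python Get` step on the JMP side is an assumption that is not exercised here.

```python
import traceback

error_message = ""  # stays empty when the script succeeds


def run_model():
    """Placeholder for the real script body (data preparation, fit, predict)."""
    return 1 / 0  # deliberately fails to demonstrate the error capture


try:
    run_model()
except Exception:
    # Keep the full traceback as a string instead of a generic "crashed" message
    error_message = traceback.format_exc()

print(error_message)
```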

This dataset works fine on my computer, but I am on Windows 10 with Python 3.7.

The first thing to check is whether JMP 15.1 can handle Python 3.8; I am really not sure it can. There were some difficulties with Python 3.7, for example; see https://community.jmp.com/t5/Discussions/JMP-14-Python-abilities-working-I-m-confused/td-p/184515. Try running the example code from the Scripting Index:

```jsl
Names Default To Here( 1 );
Python Init();
Python Submit(
	"\[
str = 'The quick brown fox jumps over the lazy dog';
a = 200;
]\"
);
getStr = Python Get( str );
getNum = Python Get( a );
Show( getStr, getNum );
Python Term();
```

Next, are there any missing values in your dataset? I do not check for missing values, so the add-in can crash if it is a reworked Boston dataset with some missing values.
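You can answer that question on the Python side by counting missing cells per column in the pandas DataFrame built from the JMP table. This is a minimal sketch: `columns_with_missing` is a hypothetical helper and the sample values are made up.

```python
import pandas as pd


def columns_with_missing(df):
    """Return {column: number of missing cells} for the columns that have any."""
    counts = df.isna().sum()
    return {col: int(n) for col, n in counts.items() if n > 0}


df = pd.DataFrame({
    "crim": [0.1, None, 0.3],
    "rm": [6.5, 6.4, 7.1],
    "chas": [0, 1, None],
})
print(columns_with_missing(df))  # → {'crim': 1, 'chas': 1}
```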

I am also not sure my code works on macOS: I have no way to check, and the Python integration may be a bit different from Windows.

Here is the same dataset running without problems on my computer:

Thanks for looking at my issue, @Franck_R. My Python installation is working with JMP for other code (including the test code you suggested), but there may still be an issue with a package or some other missing component needed for your Add-In that I haven't yet discovered. I'm totally stumped for now. When I get a chance, I'll try setting this up on a Windows machine to give it another try.

Thanks again for putting this out in the community -- it's a very useful application!

Hi Ruth

I am very sorry, I have no clue what's happening. I suspected some trouble with the way macOS handles directories differently from Windows, but the crash is located in the Python part, not before it. Moreover, the crash happens even though you did not use the file export option.

Perhaps it is related to a package issue, as you mention. I will upgrade my packages as soon as possible to check whether the same package versions still work on Windows 10.

Thank you for trying my add-in! ;)

Franck

This is great! Thanks for sharing it!*

Regarding Python on Windows, Anaconda with Python 3.6 works out of the box with JMP 15, but you need to update all the packages, since that distribution is two years old. I hope JMP improves its Python support in future versions.

(*Note: the interface you have built could be used for other Python APIs. Have you published the source code as well?)

Regards.

Thank you!

Yes, I also hope that Python support will be improved over time.

As for the interface, sorry: I made this add-in almost entirely in my free time, but with a JMP licence paid for by my company. I therefore consider that publishing the source code must be approved by my employer, who wishes it to remain private.

No problem Franck. It is a great contribution, thanks for sharing.

Ciao Franck. Your add-in is really well done.

Just out of curiosity: why, when I choose the classification task, is the response variable required to be numeric? I was expecting a categorical variable (bad vs good, baseline vs experiment, cat vs dog, etc.).

Rgds. Felice

Hi Felice and thank you for your feedback!

I don't remember exactly what the problem was, but I had an issue processing non-numeric data during the transfer to Python.

JMP gives no error report once it is executing Python code, so I did not manage to identify the root cause and solve it. The Python code for categorical responses worked on its own, and so did the JMP code, but running the JMP code followed by the Python code crashed the script.

One day I'll have to look into it again!

You just have to recode the values, for example yes = 1 and no = 2, or setosa = 1, versicolor = 2, virginica = 3, etc. It is a bit cumbersome, but it was a way to get around this bug.
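The recoding above starts at 1. Since the 2024 release, however, the add-in requires classification responses to be numeric and to start at 0 (see the release notes at the top of this page). Below is a minimal Python sketch of such a recoding, for example to prepare a column before bringing the table back into JMP. `encode_labels` is a hypothetical helper; scikit-learn's `LabelEncoder` does the same job. Codes follow the sorted order of the labels, so the labels must be mutually comparable.

```python
def encode_labels(values):
    """Map each distinct class label to an integer code 0, 1, 2, ...
    (in sorted label order) and return the codes plus the mapping."""
    mapping = {label: code for code, label in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


codes, mapping = encode_labels(["setosa", "virginica", "versicolor", "setosa"])
print(codes)    # → [0, 2, 1, 0]
print(mapping)  # → {'setosa': 0, 'versicolor': 1, 'virginica': 2}
```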

Ok Franck

thanks for your reply.

As I said, I was just curious. Now it is clear.

Rgds. Felice

Hi

I have updated the add-in: compatibility with new Python versions, new features and bug fixes.

I can comment on why you had crashes with matplotlib. JMP's main-window event loop and matplotlib's main-window event loop clash. Because the Python shared library is loaded into JMP's process space, JMP already owns the main window, while matplotlib, called the usual way, believes it owns the main-window loop. Whether this happens depends entirely on the matplotlib backend chosen. After JMP 14 we forced matplotlib to the non-interactive 'Agg' backend, and it is fully usable if you just tell matplotlib to save the figure rather than show it.

The other issue you might have seen is that JMP wasn't always crashing but exiting instead. Closing the last matplotlib window called a C-level exit() outside of JMP's control. JMP 18.0 is a little more lenient, in that it allows matplotlib on Windows without forcing the Agg backend. On the Mac, however, the default Cocoa backend does indeed still crash; I have an open bug report with the matplotlib developers. On the Mac I have had some success with a PySide or PyQt backend, but that's still hit or miss. The best way to use matplotlib is to save the figure as an image.
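Following that advice, here is a minimal sketch of a feature-importance chart that selects the non-interactive Agg backend and saves the figure to a file instead of calling `plt.show()`. Several parts are assumptions, not the add-in's code: the sizing rule (figure height and label size adapting to the number of features, as the add-in's release notes describe), the names `save_importance_plot` and `importance.png`, and the sample scores.

```python
import os
import tempfile

import matplotlib

matplotlib.use("Agg")  # non-interactive backend: renders to files, starts no GUI event loop
import matplotlib.pyplot as plt


def save_importance_plot(features, scores, path):
    """Draw a horizontal bar chart of feature importances and save it as an image."""
    n = len(features)
    # Assumed sizing rule: taller figure and smaller labels as the feature count grows
    height = max(3.0, 0.35 * n)
    fontsize = max(6, 12 - n // 5)
    fig, ax = plt.subplots(figsize=(8, height))
    ax.barh(features, scores)
    ax.invert_yaxis()  # first feature in the list is drawn at the top
    ax.tick_params(axis="y", labelsize=fontsize)
    ax.set_xlabel("F score")
    fig.tight_layout()  # adjust margins so long feature names fit
    fig.savefig(path, dpi=100)
    plt.close(fig)  # free the figure; no plt.show(), so no window is ever opened
    return path


out = save_importance_plot(
    ["LSTAT", "RM", "AGE"], [50, 40, 15],
    os.path.join(tempfile.gettempdir(), "importance.png"),
)
print("saved to", out)
```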

Hi Paul,

Thanks for the follow-up and explanations! Glad to see that the integration with python is continuing in JMP 18...


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
