JMP User Community > Discussions

Why are ANOVA tables giving different results?

Hello,

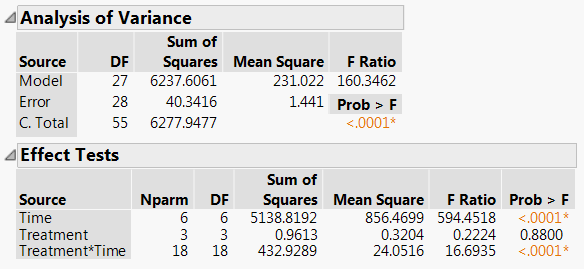

I am trying to build a linear model where X1 = Time and X2 = Treatment. When I use the Fit Model tool, I see a discrepancy between the Analysis of Variance table and the Effect Tests table (see the screenshot). The Sum of Squares columns should add to the same total (in this case, 6277.9477), correct? The SS under Effect Tests does not sum to this value. Why would this be? Am I misunderstanding the calculations here? Or is this a bug in JMP?

Many thanks!

Re: Why are ANOVA tables giving different results?

This is not a JMP bug. The Sums of Squares will add up if you are using what is called Type I Sum of Squares (Type I SS). The problem with Type I SS is that they are order dependent: fitting the terms in the order A, B, A*B can give different results than fitting them in the order B, A, B*A. Therefore, Type I SS are not usually used except in "by hand" calculations.

What JMP is using is the Type III Sum of Squares (Type III SS). That removes the order dependency by calculating the SS for a term only AFTER all other terms have been put in the model. This provides stable estimates, but they do not, in general, add up to the model SS.
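To make the distinction concrete, here is a minimal sketch in plain Python (not JMP output, and not how JMP computes its tables). It assumes a main-effects-only model with two made-up, correlated predictors `x1` and `x2`, so that the "entered last" SS for a term is simply the drop in residual SS when that term is added after the other one. The data values and the helper `rss` are hypothetical and exist only for illustration.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def rss(columns, y):
    """Residual SS after least-squares regression of y on the given columns.

    Uses Gram-Schmidt: each column is orthogonalized against the earlier
    ones, then its component is removed from the residual.
    """
    resid = list(y)
    basis = []
    for col in columns:
        v = list(col)
        for q in basis:
            c = dot(v, q)
            v = [a - c * b for a, b in zip(v, q)]
        norm = dot(v, v) ** 0.5
        if norm < 1e-12:  # column adds nothing new, skip it
            continue
        q = [a / norm for a in v]
        basis.append(q)
        c = dot(resid, q)
        resid = [r - c * b for r, b in zip(resid, q)]
    return dot(resid, resid)

# Hypothetical data: x1 and x2 are correlated, as happens in unbalanced designs.
x1 = [1, 2, 3, 4, 5, 6]
x2 = [1, 1, 2, 2, 3, 4]
y = [2.1, 2.9, 4.2, 4.8, 6.5, 8.1]
ones = [1.0] * len(y)

rss_null = rss([ones], y)
rss_full = rss([ones, x1, x2], y)
model_ss = rss_null - rss_full

# Sequential (Type I): a term is credited with the drop in residual SS
# at the moment it enters, so the answer depends on the order.
type1_x1_first = {"x1": rss_null - rss([ones, x1], y),
                  "x2": rss([ones, x1], y) - rss_full}
type1_x2_first = {"x2": rss_null - rss([ones, x2], y),
                  "x1": rss([ones, x2], y) - rss_full}

# Partial ("entered last"): every term is tested after all the others.
partial = {"x1": rss([ones, x2], y) - rss_full,
           "x2": rss([ones, x1], y) - rss_full}

print("Model SS:            ", model_ss)
print("Type I, x1 then x2:  ", type1_x1_first)
print("Type I, x2 then x1:  ", type1_x2_first)
print("Partial (each last): ", partial)
```

Both sequential orderings add up to the model SS but disagree about how much belongs to each term, while the partial values are the same regardless of order and do not add up to the model SS.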

Re: Why are ANOVA tables giving different results?

Thanks Dan. If I can ask a follow-up...

This experiment is a full factorial with 7 time points, 4 treatments, and 2 replicates. My understanding is that, in a balanced design (which this is), the factors are orthogonal, so Type I, Type II, and Type III Sums of Squares should all be identical. So why would they be different?

Re: Why are ANOVA tables giving different results?

That is not what orthogonal means. Orthogonal means that the SS would stay the same when removing terms (regardless of which type of SS are being used). Orthogonal has nothing to do with the order that terms are entered into the model.
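The "SS stay the same when removing terms" property can be checked numerically. The sketch below is plain Python with made-up data, not JMP output: it assumes a small 2x2 design with two replicates, factors `a` and `b` coded as -1/+1, and a hypothetical helper `rss` that returns the residual SS of a least-squares fit. It compares the SS for `a` with and without `b` in the model, first for the balanced design and then after dropping one run.

```python
def dot(u, v):
    return sum(p * q for p, q in zip(u, v))

def rss(columns, y):
    """Residual SS after least-squares regression of y on the given columns
    (Gram-Schmidt projection, adequate for small illustrative data)."""
    resid = list(y)
    basis = []
    for col in columns:
        v = list(col)
        for q in basis:
            c = dot(v, q)
            v = [p - c * r for p, r in zip(v, q)]
        norm = dot(v, v) ** 0.5
        if norm < 1e-12:  # column adds nothing new, skip it
            continue
        q = [p / norm for p in v]
        basis.append(q)
        c = dot(resid, q)
        resid = [p - c * r for p, r in zip(resid, q)]
    return dot(resid, resid)

def ss_for_a(a, b, y):
    """Return (SS for a with b absent, SS for a with b already in the model)."""
    ones = [1.0] * len(y)
    without_b = rss([ones], y) - rss([ones, a], y)
    with_b = rss([ones, b], y) - rss([ones, a, b], y)
    return without_b, with_b

# Hypothetical balanced 2x2 design, two replicates per cell.
a = [-1, -1, -1, -1, 1, 1, 1, 1]
b = [-1, -1, 1, 1, -1, -1, 1, 1]
y = [10, 11, 14, 15, 20, 21, 25, 26]

bal_without_b, bal_with_b = ss_for_a(a, b, y)

# Same design with the last run missing: no longer balanced.
unb_without_b, unb_with_b = ss_for_a(a[:-1], b[:-1], y[:-1])

print("Balanced:   SS(a) =", bal_without_b, " SS(a | b) =", bal_with_b)
print("Unbalanced: SS(a) =", unb_without_b, " SS(a | b) =", unb_with_b)
```

In the balanced case the two values agree, so removing `b` leaves the SS for `a` unchanged; once a run is dropped, the two values differ.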

Re: Why are ANOVA tables giving different results?

One more thing. If you WANT the Type I Sum of Squares, click the red triangle next to the response name and choose Estimates > Sequential Tests.

Re: Why are ANOVA tables giving different results?

Thank you for that feedback, Dan - greatly appreciated.

Re: Why are ANOVA tables giving different results?

Thanks for the clarification, Dan. May I ask a follow-up question? If I want to know the % of variance explained by each term (A, B, and A*B), can I use the "Sum of Squares" of each term as the numerator and the total of the three "Sum of Squares" values as the denominator, instead of using the "Model SS"?

Re: Why are ANOVA tables giving different results?

No, I would not add up the Type III Sums of Squares for the denominator. That denominator has no real meaning.

How you calculate your "% Variance Explained" will depend on the information that you are trying to convey. Typically, people will use the Type I SS divided by the model SS since the Type I SS will add up to the Model SS. Your percentages will then add to 100%. This is a "% Variance Explained", but these results will depend on the order in which you put your model terms.

You COULD use the Type III SS divided by Model SS. That will result in a total percentage different from 100% (often less than 100%, although it can also come out above 100%). In that case your result is really the "Additional % Variance Explained after accounting for all other model terms".
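The two ways of computing a "% Variance Explained" can be compared side by side. This is a rough sketch in plain Python under simplifying assumptions: made-up numeric predictors named `A` and `B`, no interaction term, and an "entered last" SS standing in for the Type III SS. The helpers `rss`, `sequential_ss`, `partial_ss`, and `percent` are hypothetical names for this example, not JMP functions.

```python
def dot(u, v):
    return sum(p * q for p, q in zip(u, v))

def rss(columns, y):
    """Residual SS of a least-squares fit of y on columns (Gram-Schmidt)."""
    resid, basis = list(y), []
    for col in columns:
        v = list(col)
        for q in basis:
            c = dot(v, q)
            v = [p - c * r for p, r in zip(v, q)]
        norm = dot(v, v) ** 0.5
        if norm < 1e-12:  # column adds nothing new, skip it
            continue
        q = [p / norm for p in v]
        basis.append(q)
        c = dot(resid, q)
        resid = [p - c * r for p, r in zip(resid, q)]
    return dot(resid, resid)

def sequential_ss(terms, y):
    """SS credited to each (name, column) term in the order given."""
    entered = [[1.0] * len(y)]
    prev, out = rss(entered, y), {}
    for name, col in terms:
        entered.append(col)
        cur = rss(entered, y)
        out[name] = prev - cur
        prev = cur
    return out

def partial_ss(terms, y):
    """SS for each term when it is entered after all the other terms."""
    ones = [1.0] * len(y)
    full = rss([ones] + [c for _, c in terms], y)
    return {name: rss([ones] + [c for n, c in terms if n != name], y) - full
            for name, _ in terms}

def percent(ss, model_ss):
    return {name: 100.0 * value / model_ss for name, value in ss.items()}

# Hypothetical, correlated predictors and response.
A = [1, 2, 3, 4, 5, 6, 7, 8]
B = [2, 1, 4, 3, 6, 5, 8, 8]
y = [3.0, 3.9, 7.2, 7.8, 11.1, 11.0, 15.2, 16.1]

ones = [1.0] * len(y)
model_ss = rss([ones], y) - rss([ones, A, B], y)

pct_type1_ab = percent(sequential_ss([("A", A), ("B", B)], y), model_ss)
pct_type1_ba = percent(sequential_ss([("B", B), ("A", A)], y), model_ss)
pct_partial = percent(partial_ss([("A", A), ("B", B)], y), model_ss)

for label, pct in [("Type I, A then B", pct_type1_ab),
                   ("Type I, B then A", pct_type1_ba),
                   ("Entered last", pct_partial)]:
    print(label, pct, "total:", sum(pct.values()))
```

The sequential percentages total 100% for either ordering but assign different shares to `A` and `B`, whereas the "entered last" percentages do not total 100%.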

Because of these issues, I am not a big fan of determining the percent variance explained for each term. You can rank-order the importance of the terms in other ways, which JMP provides, such as the Effect Summary table which also shows the magnitude of the term's impact.

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.