
Discussions

Solve problems, and share tips and tricks with other JMP users. - JMP User Community


Simulating data to generate larger data set for building models

Dear JMP community,

I'm trying to work out a way to generate simulated data to better train a model I'm building.

I have a decent-sized data set (a few thousand data points), but I would like to generate simulated data that maintain a structure similar to that of the original data in order to improve the model. I'd like to have somewhere around 20K-50K data points with a similar structure, so that I have a larger set to train, validate, and test the prediction model.

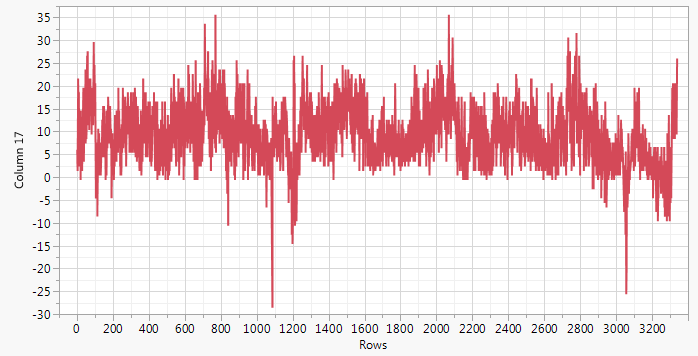

Just for visual purposes, the data looks something like this:

I've tried to work with the "Simulator" feature from the Profiler platform, but the data generated there don't keep a structure (variation) similar to the original data; they get too "washed out" by the standard deviations of the inputs and response. When I build a model on this set and compare it to the original model from the source data, the original model outscores it because that model maintains the structure of the data in its predictions. The model built from the simulated data set doesn't, since all structure is essentially lost.

Also, the "Simulate" feature (right-clicking a column in a report window) doesn't really get me where I want either.

I've done a bit of research into what people have done with JMP and found a few potential leads; however, so far none of them really address what I'm looking for. If anyone out there has a solution to this, or can point me in the right direction on how to code it, I would be grateful.

Thanks


Re: Simulating data to generate larger data set for building models

So the data are not plotted in time order? They are not from the same source (single source)?

When you say that each measurement is independent, have you estimated the auto-correlation, even just for a lag of 1? While 'independent' might mean that the data were collected separately, I am talking about statistical independence, or lack of correlation.
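To make the lag-1 check concrete, here is a minimal Python sketch of the usual sample autocorrelation estimator: lagged cross-products of deviations from the overall mean, divided by the total sum of squares. JMP's Time Series platform shows an autocorrelation plot directly. The function name and the two example series below are made up for illustration.

```python
import statistics


def autocorrelation(series, lag=1):
    """Sample autocorrelation of `series` at the given lag.

    Standard estimator: sum of lagged cross-products of deviations from the
    overall mean, divided by the total sum of squared deviations.
    """
    n = len(series)
    if not 0 < lag < n:
        raise ValueError("lag must be between 1 and len(series) - 1")
    mean = statistics.fmean(series)
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    numer = sum(dev[t] * dev[t + lag] for t in range(n - lag))
    return numer / denom


if __name__ == "__main__":
    # Hypothetical series: one drifts upward, the other flips sign every row.
    drifting = [1.0, 1.2, 1.5, 1.7, 2.0, 2.1, 2.4, 2.6]
    alternating = [1.0, -1.0] * 4
    print(round(autocorrelation(drifting), 3))     # clearly positive
    print(round(autocorrelation(alternating), 3))  # -0.875
```

A lag-1 value near zero is consistent with statistical independence. A value well away from zero means consecutive measurements are related, even if they were collected separately.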

The simulated data is what I would expect. There is a single population with a stable mean and variance. The Monte Carlo simulation includes random perturbations to both the factors and the response.

The 'structure' that you see in your data but not in the simulation is the result of other 'assignable causes.' The causes can occur randomly in time and in magnitude. The simulation must include their contribution. Do you know what they are? How they occur? How to model them?

I understand that you cannot disclose the true nature and detail of the measurements, but perhaps you could use an analogy to give us a sense of the data and what you are doing. For example, perhaps you are measuring the pressure in three torpedo tubes inside a nuclear submarine from time to time...

Assuming that we can help you to simulate the real data, what kind of analysis or modeling do you plan to use with it?

Peter is very helpful, but as we learn more about your problem, I doubt that those suggestions will help. Functional data analysis (FDA) is for functions, profiles, or curves. Imagine a sample of hundreds of data series where you want to model the shape and either how the shape is influenced by factors or how the shape predicts outcomes. You apparently have a single data series that you want to extend. FDA does not predict beyond the original domain. The ARIMA model assumes equally spaced points. It can model the auto-correlation, perturbations, and seasonality to predict ahead, but not far. On the other hand, a Monte Carlo simulation based on the ARIMA model is possible.


Re: Simulating data to generate larger data set for building models

I agree with @Mark_Bailey's last paragraph above. As I stated in an earlier post, from what you've shared so far, I doubt that FDE or time series analysis is the most appropriate approach for your goals.


Re: Simulating data to generate larger data set for building models

Hi @Peter_Bartell and @Mark_Bailey,

I really appreciate the discussion on this matter, as it's giving me some ideas on how to improve my analysis.

The data come from a process, but not in real time. They are collected in a database, which is accessed later. I can't guarantee that each point directly follows the previous one in sequence. There is a typical time spacing between points, but if there is a pause in the process, which could last hours or days, the database just picks up where it left off. Hence, strictly speaking, it's not a time series. However, in practice, if the ARIMA model can reproduce a response similar to the real data, this should be sufficient (I think).

The data are from the same source. Samples are pulled from the process and a measurement is done on them.

I have not tried estimating the autocorrelation yet. A Monte Carlo simulation might be a way to generate input/output data that mimic the real data. I didn't know JMP could do Monte Carlo simulations. I've done those before on other problems, but not in JMP. These are things I'll have to look into.

The goal is to develop a model that predicts the response well. With that, we could monitor just the factors (which is easier). If the model predicts a warning, then the more challenging efforts can be undertaken.

I still think it would be worth reading about FDE and ARIMA; they might spark some ideas. I hope my somewhat vague explanations make sense. Thanks for understanding the disclosure concerns.

Thanks


Re: Simulating data to generate larger data set for building models

It is not clear that simulating more data will help. If you can simulate the data, then you have the model of the data. The 'structure' is a shift in the population (not reproduced by the Monte Carlo simulation with a constant mean).

Are you simultaneously measuring the candidates for predictor variables (factors) with this response? Have you explored correlations, associations, and possible models?


Re: Simulating data to generate larger data set for building models

Hi @Mark_Bailey,

Ultimately, I'd like to build a strong model that predicts future values given the incoming factors. I have looked into ARIMA and FDE. I agree that FDE is probably not a suitable approach. However, I think ARIMA modeling might provide a pathway for generating new data for training the model.

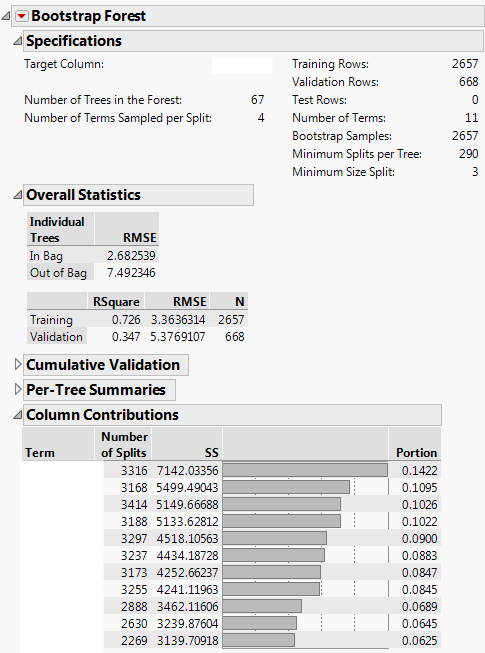

Several factors are measured more or less in parallel with the response. All measurements use up source material, but a large enough quantity is sampled to run the other measurements. We have identified a set of factors with the strongest predictive capability. I have used those factors and the response to check several different models using generalized regression, boosted tree, bootstrap forest, neural nets, and other partitioning methods. Comparing the models, I find that the bootstrap forest consistently gives better results than the others. I can improve this model a little further by holding back data for testing, as well as by running the model with a tuning table to optimize the fit parameters.

What I still find, though, is that the model doesn't really capture the highs and lows of the data as much as we believe it should (or could). It does capture some of the structure, but it's not so great at getting those highs and lows. Hence, I'd like to simulate a larger data set that includes more of those events in order to build a better model. And since it takes a long time to actually build up a data set of real values, simulation of data to train a model would be helpful.

I do think that the ARIMA platform can be of help in this regard. I've tested it out to generate predicted values of the factors and response. This generates a new set of values for the factors and responses that mimic the real data. I can then concatenate the simulated and real data into a new data table to generate a new data set that is twice as big.
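The concatenation step described here can be sketched as follows. One design choice worth considering, which is an assumption and not something proposed in the thread, is to tag every row with its origin before stacking. That way, a test set can later be restricted to real measurements. The column names `x1`, `y`, and `source` are hypothetical.

```python
import random


def combine_real_and_simulated(real_rows, simulated_rows, seed=0):
    """Stack real and simulated rows, tag each with a 'source', and shuffle.

    Rows are dicts keyed by column name; copies are made, so the inputs
    are left unchanged.
    """
    combined = [dict(row, source="real") for row in real_rows]
    combined += [dict(row, source="simulated") for row in simulated_rows]
    random.Random(seed).shuffle(combined)
    return combined


def real_only(rows):
    """Keep only measured rows, e.g. to form a test set."""
    return [row for row in rows if row["source"] == "real"]


if __name__ == "__main__":
    # Hypothetical columns x1 and y.
    real = [{"x1": 1.0, "y": 2.1}, {"x1": 2.0, "y": 3.9}, {"x1": 3.0, "y": 6.2}]
    simulated = [{"x1": 1.5, "y": 3.0}, {"x1": 2.5, "y": 5.1}]
    table = combine_real_and_simulated(real, simulated)
    print(len(table), len(real_only(table)))  # 5 3
```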

Unfortunately, ARIMA modeling does not do a good job of predicting many new values for the factors or response, so I can't really use it to generate forecast periods. Still, I think this alternative approach to building a simulated data set might prove useful.


Re: Simulating data to generate larger data set for building models

First of all, ARIMA and other time series models assume short-term perturbations and some periodic trends, so they are not good for long-range predictions.
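For the simplest stationary case, AR(1), the reason is visible in closed form:

- The h-step-ahead forecast is mu + phi^h (x_t - mu), which decays geometrically toward the long-run mean.
- The forecast standard error grows toward the process standard deviation.

The parameter values below are hypothetical. Models with differencing behave differently: their forecast uncertainty keeps growing with the horizon.

```python
def ar1_forecast(last_value, mu, phi, sigma, horizon):
    """h-step-ahead point forecasts and standard errors for a stationary AR(1).

    point(h) = mu + phi**h * (last_value - mu)
    se(h)    = sigma * sqrt((1 - phi**(2h)) / (1 - phi**2))
    """
    out = []
    for h in range(1, horizon + 1):
        point = mu + phi ** h * (last_value - mu)
        se = sigma * ((1 - phi ** (2 * h)) / (1 - phi ** 2)) ** 0.5
        out.append((h, point, se))
    return out


if __name__ == "__main__":
    # Hypothetical: the last observation sits 2 units above the long-run mean.
    for h, point, se in ar1_forecast(12.0, mu=10.0, phi=0.7, sigma=1.0, horizon=10):
        print(f"h={h:2d}  forecast={point:6.3f}  se={se:5.3f}")
```

Within about ten steps, the forecast is essentially the mean, and the interval is as wide as the process itself. That is why such models add little for long-range prediction.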

The 'lack of fit' issue with your present models (prediction lacks the extreme excursions of the observed response) might not be aided by more data. You might be lacking sufficient predictors. One direction to reduce bias is to include more terms in the basis expansion using the current feature set. You mention several modeling techniques but you don't say anything about the complexity of these models that you explored. Higher complexity, with the risk of over-fitting addressed by cross-validation, might solve the bias problem. The other direction to reduce bias is to search for missing features that would finally explain the departures from the observed response.
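To illustrate the trade-off, here is a small, self-contained Python sketch. A single predictor is expanded into polynomial terms, which is one simple kind of basis expansion, and 5-fold cross-validation picks how many terms to keep. The data are synthetic and the helper names are hypothetical. This is not how any JMP platform is implemented.

```python
import random


def solve(a, b):
    """Solve the square system a @ coef = b (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [list(row) + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coef = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * coef[c] for c in range(r + 1, n))
        coef[r] = (m[r][n] - s) / m[r][r]
    return coef


def fit_poly(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (fine for low degrees)."""
    rows = [[x ** p for p in range(degree + 1)] for x in xs]
    k = degree + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(xtx, xty)


def predict(coef, x):
    return sum(c * x ** p for p, c in enumerate(coef))


def cv_mse(xs, ys, degree, folds=5, seed=0):
    """Mean squared prediction error on held-out folds."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    sq_err = 0.0
    for f in range(folds):
        held_out = set(idx[f::folds])
        train = [i for i in idx if i not in held_out]
        coef = fit_poly([xs[i] for i in train], [ys[i] for i in train], degree)
        sq_err += sum((ys[i] - predict(coef, xs[i])) ** 2 for i in held_out)
    return sq_err / len(xs)


if __name__ == "__main__":
    rng = random.Random(1)
    # Synthetic data: a curved signal that a straight line cannot follow.
    xs = [rng.uniform(-2, 2) for _ in range(200)]
    ys = [x ** 3 - 2 * x + rng.gauss(0, 0.5) for x in xs]
    for d in range(1, 7):
        print(d, round(cv_mse(xs, ys, d), 3))
```

Too few terms leave bias, shown by the large cross-validated error at degree 1. Beyond the true complexity, the cross-validated error stops improving and can start to rise. That is the over-fitting risk that cross-validation guards against.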


Re: Simulating data to generate larger data set for building models

Hi @Mark_Bailey,

There might well be some deficiencies in the predictors. However, based on the other analyses we've done, the set we have definitely appears better suited than many of the others we've looked into.

With regard to complexity and cross-validation, I definitely take that into account. I generate a train/validation/test column to help with the risk of overfitting. The bootstrap forest generates a rather complex decision-tree prediction formula that is much more extensive than those of, e.g., the generalized regression or neural net models. It also performs better when I compare the models to one another. Typically, after running the bootstrap forest platform with a tuning table, I find on the order of 60+ trees, with ~4 terms sampled per split. The number of splits per term can vary, but is often in the 1,000 to 3,000 range.


Re: Simulating data to generate larger data set for building models

Based on the discussion, it sounds like you have an outcome variable that you want to model with other predictor variables, where time (the order in which the data are generated) is not a predictor variable. You may want to simulate your N x P matrix (N = rows, P = variables, i.e. y and the x's) using a multivariate normal distribution. You will need to modify the script based on your estimated covariance matrix, but it should work if your data follow a multivariate normal distribution. This link provides JSL to simulate multivariate normal distributions: http://www.jmp.com/support/notes/36/140.html


Re: Simulating data to generate larger data set for building models

Hi @GM,

Thanks for the input and suggestion. You are correct about the intended goal and the description of the multivariate data. The order in which the data are generated does not appear to be a good predictor. There are other properties that are measured which, when used collectively, provide a reasonable prediction of the "y" I want to model/predict. The difficulty comes when trying to train the model to fit better. I'd like to do this by simulating data, but this is proving more challenging than I anticipated.

The MVN approach certainly does a better job of preserving some of the multivariate correlations in the data than the Simulator option in the Profiler does. That option just generates values around an average with a given standard deviation.

Unfortunately, when I try to build a model using the MVN script and generated data, it performs much worse than a similar model built off of just the original data.

So far, I find that the ARIMA model does a better job of capturing the short-term swings in the data, as well as some of the longer-range structure. It does a poor job of forecasting, but what I'm thinking is to use it to generate new data that are added to the original data to build a larger data set, and then train a model on that larger data set.

What I think I'll try now is to "Save Columns" from the ARIMA report, extract out the predicted values and then concatenate that with the original data table. Then, I'd like to randomize the rows in the new data table and build a model with that larger data set. I guess another option might be to generate multiple validation columns, run the same model with different cross validation rows and average the resulting models.

I appreciate all the contributions to this discussion. Although I haven't found an appropriate solution yet, I'm learning a lot and coming up with some new ideas to try.

Thanks!


Re: Simulating data to generate larger data set for building models

Hi @Mark_Bailey and @Peter_Bartell,

Just to provide some feedback and continue the discussion on this topic: I was able to get some reasonable model data using the ARIMA platform. It does a good job of recreating a similar data set, although it is not good at forecasting, but that's OK. Applying the prediction formula from this model works fairly well with my actual data set, so it does provide a potentially useful path forward.

I'm not convinced this is ultimately how I wish to proceed, so I want to compare it to the other simulation options within JMP. I know that the stochastic simulation from the profiler doesn't help for reasons we've discussed before. Additionally, it's not the best approach for tuning the model for improved fitting, which I would like to do.

For my specific modeling, I'm using a bootstrap forest model and would like to improve it by tuning. I have done so in part with a tuning table. There are some limitations in this regard, though, particularly the number of runs I can afford (memory-wise) in the tuning table. I typically run my modeling with as many runs in my tuning table as I can afford. This narrows down the number of trees in the forest, the number of terms sampled per split, the bootstrap samples, the minimum splits per tree, and the minimum size split. This might help to optimize the platform's parameters for finding a solution, but it doesn't necessarily yield an optimized model for the data I have.
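When the run budget is the constraint, one way to build the tuning table is to sample each run's settings at random within ranges. A fixed number of runs then covers all five settings at once, instead of a full grid whose size multiplies with every setting added. The sketch below writes such a table to CSV. The ranges are hypothetical. The column names are placeholders that would need to be renamed to whatever the Bootstrap Forest platform expects in a tuning table.

```python
import csv
import io
import random

# Hypothetical search ranges; column names are placeholders to be renamed.
# Keep terms_per_split no larger than the number of predictors you have.
RANGES = {
    "n_trees": (20, 200),              # integer
    "terms_per_split": (1, 8),         # integer
    "bootstrap_portion": (0.5, 1.0),   # continuous
    "min_splits_per_tree": (5, 50),    # integer
    "min_size_split": (5, 50),         # integer
}


def random_tuning_table(n_runs, seed=0):
    """One row per run, each setting drawn uniformly within its range."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_runs):
        row = {}
        for name, (lo, hi) in RANGES.items():
            if isinstance(lo, int) and isinstance(hi, int):
                row[name] = rng.randint(lo, hi)  # inclusive integer range
            else:
                row[name] = round(rng.uniform(lo, hi), 3)
        rows.append(row)
    return rows


def to_csv(rows):
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(RANGES))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    print(to_csv(random_tuning_table(5)))
```

Purely random sampling can leave gaps or clusters. A space-filling design spreads the same number of runs more evenly.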

When I build the model, I create a validation column that splits the data into a training set and a test set, stratified by my response column. I then build the model on only the training data, with a certain % held back for validation. The model output depends on the validation column I choose (I've made several). A typical output is seen below (sorry, I can't share what the response or factor columns are). The details of the bootstrap forest specifications change depending on how the original training and validation data were stratified.
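Stratifying on a continuous response usually means binning it first. Here is a minimal sketch, assuming quantile bins:

1. Sort the rows by the response.
2. Cut them into equal-count bins.
3. Split each bin in the same proportions.

Every set then sees a similar share of low, middle, and high responses. That matters here because the extremes are what the model tends to miss. The bin count, proportions, and labels are hypothetical. The sketch assigns all three sets in one pass rather than using the two-stage split described above.

```python
import random


def stratified_validation_column(y, fractions=(0.6, 0.2, 0.2), n_bins=5, seed=0,
                                 labels=("Training", "Validation", "Test")):
    """Assign each row a label, splitting every quantile bin of y in the same
    proportions so all sets see a similar share of low, middle, and high values."""
    if len(fractions) != len(labels):
        raise ValueError("need one fraction per label")
    rng = random.Random(seed)
    order = sorted(range(len(y)), key=lambda i: y[i])  # row indices, lowest y first
    column = [None] * len(y)
    bin_size = len(y) / n_bins
    for b in range(n_bins):
        members = order[round(b * bin_size):round((b + 1) * bin_size)]
        rng.shuffle(members)
        start = 0
        for k, (label, frac) in enumerate(zip(labels, fractions)):
            # the last label takes whatever is left, so every row is assigned
            last = k == len(labels) - 1
            stop = len(members) if last else start + round(frac * len(members))
            for i in members[start:stop]:
                column[i] = label
            start = stop
    return column


if __name__ == "__main__":
    rng = random.Random(3)
    # Hypothetical skewed response with a few large values.
    y = [rng.expovariate(1.0) for _ in range(500)]
    col = stratified_validation_column(y)
    print({lab: col.count(lab) for lab in ("Training", "Validation", "Test")})
```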

What I'm interested in doing now is tuning the model for an improved fit, possibly by using the Simulate option for a model statistic. I could do this by changing the N for the training and validation sets, or via the RMSE of the individual trees, etc. I know that I won't be able to use this approach to change the specifications of the bootstrap forest (the tuning table does that), but I would like to use it to tune the model, e.g., the number of samples used for training and validation, or the number of splits, in order to maximize R^2.

To summarize: I would like to use a simulation method to help train the model. I know the Simulator in the Profiler doesn't work, which leaves me with ARIMA (possible) or the bootstrap Simulate option for a model statistic. I'm not sure how best to use the latter simulation method for tuning the model.

Any thoughts or feedback you might have on tuning models through simulations would be appreciated.

Thanks in advance for your time!


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
