Pulling data from a webpage with multiple table pages

Hey Folks,

I am pulling data from a webpage but running into a problem. I can't provide the web address for IP reasons.

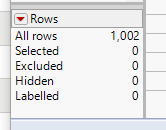

When I pull the page, it opens a table, but not with all the rows. It opens only the first 1000 rows:

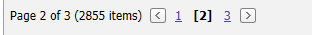

`open("URL", HTML Table(2));`

The problem is that the table has 3 pages, if that makes sense (see the screenshot).

My question is: how do I pull the next two pages?

Thank you

Re: Pulling data from a webpage with multiple table pages

When you select the next page on the website in a browser, does the URL change in a way that you can decipher? If so, you could run your open script multiple times, once for each page/URL, then use the Concatenate() command to bring all the tables together.
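As a rough illustration of the one-request-per-page idea, here is a minimal Python sketch (Python rather than JSL, since the thread later mentions routing JSL through Python). Everything named here is an assumption for illustration: the `page` query parameter, the `example.invalid` address, and the helper names `page_url`, `TableRows`, and `concatenate_pages`. It assumes each page's HTML has already been downloaded, that the table of interest is the second one on the page (as in `HTML Table(2)`), that every page repeats the header row, and that tables are not nested.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode


class TableRows(HTMLParser):
    """Collect cell text for every <tr> of the table at a 1-based index."""

    def __init__(self, table_index):
        super().__init__()
        self.table_index = table_index
        self.tables_seen = 0
        self.in_target = False
        self.in_cell = False
        self.rows = []

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables_seen += 1
            self.in_target = self.tables_seen == self.table_index
        elif self.in_target and tag == "tr":
            self.rows.append([])
        elif self.in_target and tag in ("td", "th") and self.rows:
            self.in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag == "table":
            self.in_target = False
        elif tag in ("td", "th"):
            self.in_cell = False

    def handle_data(self, data):
        if self.in_target and self.in_cell:
            self.rows[-1][-1] += data.strip()


def page_url(base_url, page):
    """Build the URL for one page; 'page' is a hypothetical parameter name."""
    return f"{base_url}?{urlencode({'page': page})}"


def concatenate_pages(html_pages, table_index=2):
    """Stack the rows of every page, keeping the header row only once."""
    combined = []
    for i, html in enumerate(html_pages):
        parser = TableRows(table_index)
        parser.feed(html)
        # Assumption: each page repeats the header as its first row.
        combined.extend(parser.rows if i == 0 else parser.rows[1:])
    return combined
```

In use, you would fetch `page_url(base, n)` for each page number, pass the resulting HTML strings to `concatenate_pages`, and hand the combined rows back to JMP. This only helps when the site really does expose a per-page URL.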

Re: Pulling data from a webpage with multiple table pages

I tried this, but the URL remains the same. I also tried page=2. Unfortunately, as it's an internal system, I cannot share it.

Re: Pulling data from a webpage with multiple table pages

There are several approaches, in addition to the @Jed_Campbell idea (which is the easiest if the browser's address bar shows the address changing in a predictable way).

0. If the site provides an API, possibly a REST service, that will be the best choice. Maybe you can ask the owner of the system for an API.

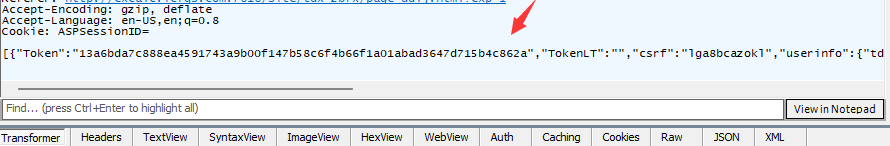

or, 1. Use F12 in your browser (I'm using Firefox, but Chrome and Edge are similar). Pick the Network tab, which shows you what is sent over the network. Click the next-page button on the website and start studying the requests that are sent. It's hard to say for sure what you are looking for, but it will often be JSON data. You'll see whether it was a POST or a GET and what sort of headers were used. If there is a choice, JSON will likely be better than HTML.

or, 2. This will be significantly harder, but will often work when other choices fail: Browser Scripting with Python Selenium.

Scraping data from web pages is hard in the best case. In the worst case, some sites are actively trying to prevent automated bots from working, generally by detecting that the client is not a real browser or not a real human. Sites that don't update the address bar are using some sort of AJAX-like protocol with JavaScript; that will show up in the F12 window, perhaps with an easy-to-decode URL and headers, and sometimes with a cookie, encoded parameters, or a password.

JMP has special handling for HTML tables, but that might not work for you unless there is a simple URL that returns each page as HTML with a table. JMP also has JSON, XML, and CSV import wizards that might help.
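To make option 1 more concrete, here is a hedged Python sketch of replaying a request copied from the Network tab. Nothing here comes from the actual site: the endpoint, the `page` and `pageSize` body fields, the `rows` key in the response, and the function names are all hypothetical stand-ins for whatever F12 shows you. The sketch only builds the request and parses a response body; it does not send anything.

```python
import json
from urllib.request import Request


def build_page_request(endpoint, page, page_size=1000, cookie=None):
    """Build (but do not send) a JSON POST like one seen in the Network tab."""
    # Hypothetical body fields; copy the real ones from the observed request.
    body = json.dumps({"page": page, "pageSize": page_size}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Accept": "application/json",
    }
    if cookie:
        # Some sites require the session cookie the browser was using.
        headers["Cookie"] = cookie
    return Request(endpoint, data=body, headers=headers, method="POST")


def rows_from_response(text, rows_key="rows"):
    """Pull the list of row records out of a JSON response body."""
    # Assumption: the rows sit under a single top-level key.
    return json.loads(text)[rows_key]
```

To send a request built this way, pass it to `urllib.request.urlopen` and decode the body before calling `rows_from_response`. If the observed request was a GET, the page number would go in the query string instead of the body.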

Re: Pulling data from a webpage with multiple table pages

If the web page uses JavaScript to build the request, for example an algorithm that converts a timestamp, how can I implement that JavaScript algorithm in JSL so that JSL can download the web data smoothly?

Thanks, Craige!

Re: Pulling data from a webpage with multiple table pages

A proper API will always be the best choice. An API will send back the data, typically as JSON, without other annoying artifacts like popups, <DIV>, etc. APIs are sometimes REST services.

Some websites don't want to be scraped because they lose money when ads are not viewed, because bandwidth costs money, and because they may have to pay for access to the data they pass along. If the site wants to make sure a human is using a real browser to access the data, using Selenium might violate its terms of service on robot scraping. Checking for a browser that can run JavaScript is one of the checks a site can do. A browser controlled by Selenium runs JavaScript just like a normal browser.

Without Selenium, when you use Open() or LoadTextFile() to read a URL, there is no browser. The JavaScript is just part of the text that is returned, not executed (just like the HTML is part of the text that is returned, not rendered to the display.)
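A small Python sketch can show the same point: with no browser involved, the script is just text. The page below is invented for illustration (the `loadRows` function and the `results` table are hypothetical). A plain parser sees only the rows that are literally in the markup, while the script body arrives as unexecuted characters.

```python
from html.parser import HTMLParser


class RowCounter(HTMLParser):
    """Count <tr> elements present in the markup and measure script text."""

    def __init__(self):
        super().__init__()
        self.rows = 0
        self.script_chars = 0
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows += 1
        elif tag == "script":
            self._in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        # Script content is delivered as plain data; nothing runs it.
        if self._in_script:
            self.script_chars += len(data)


# Invented page: only the header row is in the HTML itself.
RAW_PAGE = """
<table id="results"><tr><th>id</th></tr></table>
<script>
  // In a browser this would append rows; a plain fetch never runs it.
  loadRows("results", 1000);
</script>
"""

counter = RowCounter()
counter.feed(RAW_PAGE)
print(counter.rows)              # 1: only the header row exists in the text
print(counter.script_chars > 0)  # True: the script came back as text
```

This is why a page that fills its table with JavaScript can come back with few or no data rows, and why the real data request has to be found in the Network tab or run through a real browser.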

https://www.google.com/search?q=use+selenium+to+run+javascript has a bunch of ideas if you actually need to run some JavaScript: JSL->Python->Selenium->JavaScript.

Re: Pulling data from a webpage with multiple table pages

Thanks, Craige!

Re: Pulling data from a webpage with multiple table pages

Ok, I see, I studied your blog post carefully.

Thanks, Craige!
