Discussions

JMP User Community: solve problems and share tips and tricks with other JMP users.


Pull ZIP Files from HTTP Link

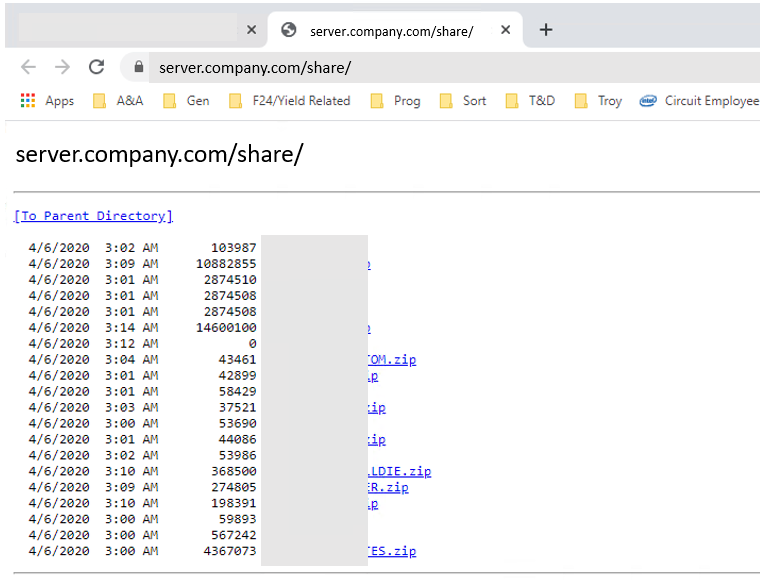

I have published some ZIP files at an HTTP link and want to pull them programmatically with JSL and store them on my PC. I don't know in advance how many files will be present.

Is this possible with JSL?

Accepted Solutions


Re: Pull ZIP Files from HTTP Link

Or maybe this is closer to what you are asking:

```jsl
path = "https://www.vsp.virginia.gov/downloads/"; // a page with an index of files. Yours may use a different format; adjust the pattern below.
html = loadtextfile(path); // get the HTML text so we can scrape the links

// somewhat custom pattern for scraping the links; it may be specific to this page
urls = {}; // this list will collect the urls
rc = patmatch(html,
	patpos(0) + // make sure the pattern matches from the start
	patrepeat( // this is the loop that extracts the urls from the html
		(
			// the urls look like <a href="2017%20Virginia%20Firearms%20Dealers%20Procedrures%20Manual.pdf">
			// and we want just the part between the quotation marks. Quickly scan forward (patbreak)
			// for a <, then see if it matches. >>url grabs the text between the quotation marks.
			(patbreak("<") + "<a href=\!"" + patbreak("\!"") >> url + pattest(insertinto(urls, url); 1))
			| // OR
			patlen(1) // skip forward one character
		)
		+
		patfence() // fence off the successfully matched text; there is no need to backtrack if something goes wrong
	) +
	patrepeat(patnotany("<"), 0) + // any trailing bits of html are consumed here
	patrpos(0) // make sure the pattern matches to the end
);
if(rc == 0, throw("pattern did not match everything"));

show(nitems(urls), urls[6]); // pick item 6; you'll need a different strategy
fullpath = path || regex(urls[6], "%20", " ", GLOBALREPLACE); // minimal effort to fix up the url; it might need more work
pdfblob = loadtextfile(fullpath, blob); // download item 6 (it was a pdf when this was written)
savetextfile("$temp/example.pdf", pdfblob); // save it somewhere
```
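The question says the number of files isn't known up front, so hard-coding `urls[6]` won't generalize. A minimal sketch of one way to handle that, assuming the links on the page are relative to `path` (anything starting with `http` is used as-is) and that saving each download under just its file name is acceptable. `zipOnly` and `downloadAll` are hypothetical helper names, not built-in JMP functions, and `Substitute` is swapped in for the `regex` fix-up used above:

```jsl
// Hypothetical helper: keep only links whose names end in ".zip" (any letter case).
zipOnly = Function( {links},
	{Default Local},
	kept = {};
	For( i = 1, i <= N Items( links ), i++,
		If( Lowercase( Right( links[i], 4 ) ) == ".zip",
			Insert Into( kept, links[i] )
		)
	);
	kept;
);

// Hypothetical helper: download each link into outDir and return the saved file paths.
downloadAll = Function( {baseUrl, links, outDir},
	{Default Local},
	If( !Directory Exists( outDir ), Create Directory( outDir ) );
	saved = {};
	For( i = 1, i <= N Items( links ), i++,
		link = Substitute( links[i], "%20", " " ); // same minimal %20 fix-up idea as the accepted answer
		If( Left( link, 4 ) != "http", link = baseUrl || link ); // assumption: other links are relative
		parts = Words( link, "/" );
		target = outDir || parts[N Items( parts )]; // keep only the file name
		Save Text File( target, Load Text File( link, blob ) ); // binary download, then save
		Insert Into( saved, target );
	);
	saved;
);
```

Continuing from the script above, `saved = downloadAll( path, zipOnly( urls ), "$TEMP/zips/" );` would fetch every ZIP link the pattern found, however many there are. `$TEMP/zips/` is only an example destination.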


Re: Pull ZIP Files from HTTP Link

Here is a zip file from @wilkap's presentation:

```jsl
za = open("https://community.jmp.com/kvoqx44227/attachments/kvoqx44227/virtual-jug/12/1/VJUG%20July%202015.zip", "zip");
zipfiles = za << dir;
show(zipfiles);
blob = za << read(zipfiles[4], format(blob));
dt = open(blob, jmp);
clearglobals(za);
```

Several things to note:

- the file is downloaded to your temp directory; the "zip" option to open returns a zip archive object

- you can get a list of members from the zip archive using <<dir

- you can use the blob format with zip archive for reading binary data like JMP tables

- the `open(blob, jmp)` line uses a 2nd argument to tell open that the blob is a JMP data table

- clearing the za variable is needed if you rerun the whole script; the zip archive object prevents the file in the temp directory from being reused.

- you could use loadtextfile/savetextfile with blobs to download the zip file to a location of your choice (and delete it when done) and then use the zip archive to process that file.

- I had already checked that the 4th item in the archive directory was a JMP data table
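Putting the last two notes together, here is a sketch that downloads the archive to a location you choose, writes out every member (so the number of files doesn't need to be known in advance), then releases the archive and deletes the downloaded copy. The local paths are hypothetical, entries whose names end in `/` are assumed to be folders, and members are flattened into one folder, so two files with the same name in different subfolders would overwrite each other:

```jsl
// Hypothetical locations; change them to suit your machine.
zipUrl = "https://community.jmp.com/kvoqx44227/attachments/kvoqx44227/virtual-jug/12/1/VJUG%20July%202015.zip";
zipPath = "$TEMP/VJUG July 2015.zip"; // where the downloaded archive is stored
outDir = "$TEMP/VJUG July 2015/"; // where the members are extracted

// download the archive as binary data and save it where we want it
Save Text File( zipPath, Load Text File( zipUrl, blob ) );

za = Open( zipPath, "zip" ); // zip archive object for the local copy
members = za << Dir;
If( !Directory Exists( outDir ), Create Directory( outDir ) );
For( i = 1, i <= N Items( members ), i++,
	If( Right( members[i], 1 ) != "/", // assumption: names ending in "/" are folders
		memberBlob = za << Read( members[i], Format( blob ) );
		parts = Words( members[i], "/" );
		Save Text File( outDir || parts[N Items( parts )], memberBlob ); // flattened: file name only
	)
);
Clear Globals( za ); // release the archive, as noted above, before deleting the file
Delete File( zipPath );
Show( N Items( members ) );
```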


Re: Pull ZIP Files from HTTP Link

Thanks, Craig, for the comprehensive reply. I'll take elements of both suggestions and merge them into a generic function to suit my current and future needs.

I knew I'd have to use a regular expression to find the links in the HTTP source, but I was hoping for a '<< saveLink' function for the zip part. :)

This will work perfectly fine though.

Great answer(s)
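Since a regular expression was mentioned for finding the links, here is a hedged alternative to the pattern-matching scrape: a hypothetical `zipHrefs` helper that calls `Regex` repeatedly and collects each `href="....zip"` value. It assumes lowercase, double-quoted `href="..."` attributes with no spaces around the `=`; real pages may need a looser expression:

```jsl
// Hypothetical helper: return every href="....zip" value found in an HTML string.
zipHrefs = Function( {html},
	{Default Local},
	links = {};
	rest = html;
	// Regex returns missing when nothing matches, which ends the loop
	While( !Is Missing( link = Regex( rest, "\[href="([^"]+\.zip)"]\", "\1" ) ),
		Insert Into( links, link );
		matched = "href=\!"" || link || "\!"";
		// drop everything up to and including this match before searching again
		rest = Substr( rest, Contains( rest, matched ) + Length( matched ) );
	);
	links;
);
```

For the page in the accepted solution, `zipHrefs( Load Text File( path ) )` would return the relative ZIP links, ready to pass to a download loop.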


Re: Pull ZIP Files from HTTP Link

Glad you could get something out of it! I'm pretty sure the pattern could be made faster. It probably doesn't make a difference for directories of only a few thousand links, but the patlen(1) part could skip non-link text faster, and a more flexible pattern for the links would be better too.

@bryan_boone @ErnestPasour @paul_vezzetti
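A sketch of that speed-up (untested). As the accepted pattern is written, it appears that when a `<` does not start a link, the `patlen(1)` branch advances only one character and the next iteration's `patbreak("<")` rescans to that same `<`, so each run of text between `<` characters is rescanned once per character. Replacing that branch with `Pat Break( "<" ) + "<"` jumps past the non-link `<` in one step. The `html` string below is made-up sample input:

```jsl
// Made-up sample input; for a real page use html = Load Text File( path );
html = "<html><body><p>Files</p><a href=\!"a.zip\!">a</a> <b>x</b><a href=\!"b.pdf\!">b</a></body></html>";
urls = {};
rc = Pat Match( html,
	Pat Pos( 0 ) +
	Pat Repeat(
		(
			// same link branch as the accepted solution
			(Pat Break( "<" ) + "<a href=\!"" + Pat Break( "\!"" ) >> url + Pat Test( Insert Into( urls, url ); 1 ))
			| // OR, instead of Pat Len( 1 ): skip to and past the next "<" in one step
			(Pat Break( "<" ) + "<")
		) + Pat Fence()
	) +
	Pat Repeat( Pat Not Any( "<" ), 0 ) + // trailing text after the last "<"
	Pat R Pos( 0 )
);
If( rc == 0, Throw( "pattern did not match everything" ) );
Show( N Items( urls ), urls );
```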

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.