- JMP User Community


Multivariate Correlation vs Multivariate Relationship

Dear JMP Community,

I've searched the discussion history, but I couldn't find any topics related to my question.

Let me provide the background:

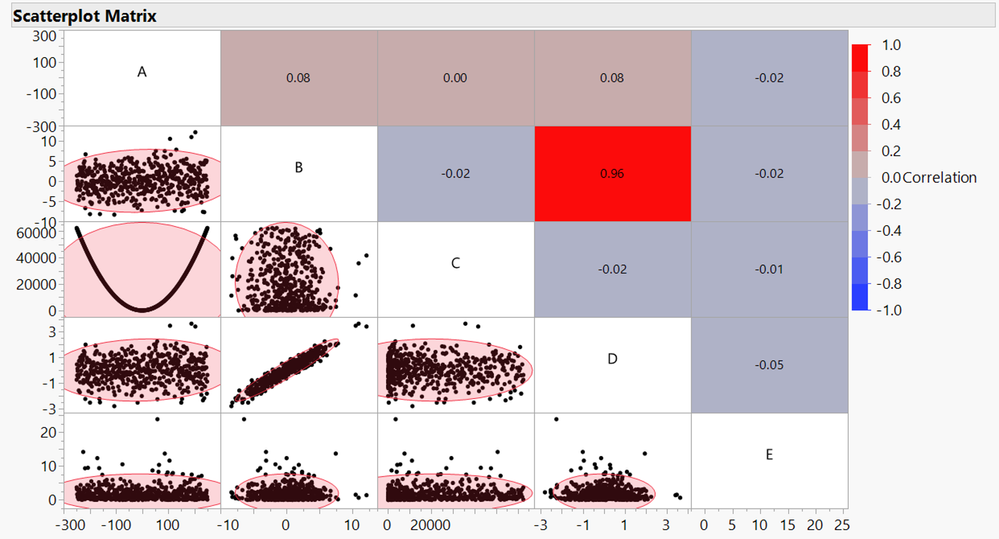

1. There are 5 variables (A, B, C, D, E). I created this data for illustration purposes.

2. If I want to check whether these 5 variables are "related", I can use the Multivariate platform, which provides the correlation values.

3. The correlation values provided are based on the linear relationship between the variables.

Example: B vs D, Correlation = 1.00.

Example: A vs C, Correlation = 0.00. However, there is a relationship between A and C, which is quadratic.
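To illustrate point 3 outside JMP, here is a minimal Python/NumPy sketch using hypothetical stand-in data (the original table was not attached): a perfectly linear pair gives a Pearson r of 1, while a quadratic pair that is symmetric around zero gives r = 0 even though C is fully determined by A.

```python
import numpy as np

# Hypothetical stand-ins for the columns in the question (original data not attached)
a = np.arange(-5, 6, dtype=float)  # A: symmetric around 0
c = a ** 2                         # C: exact quadratic function of A
b = np.arange(11, dtype=float)     # B
d = 3.0 * b + 2.0                  # D: exact linear function of B

# Pearson correlation only measures the *linear* part of a relationship
r_bd = np.corrcoef(b, d)[0, 1]  # equals 1 (up to rounding): perfect linear relationship
r_ac = np.corrcoef(a, c)[0, 1]  # equals 0 (up to rounding): the quadratic link is invisible to r
print(f"r(B, D) = {r_bd:.3f}, r(A, C) = {abs(r_ac):.3f}")
```

The zero for A vs C comes from the symmetry: every positive A value has a matching negative one with the same C, so the linear trends cancel out exactly.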

My question is:

Is there any statistical method that gives a quick look at the relationship (rather than the correlation) between variables?

Alternatively, I could run Fit Model manually for every combination of variables, but this is too time-consuming.

For example, I'd like the same scatterplot matrix as above, but with an option to show the p-value from an F-test (ANOVA) to check whether there is a relationship between the variables, say up to a quadratic term.

Example: B vs D, Correlation = 1.00 & p-value from F-test < 0.0001

Example: A vs C, Correlation = 0.00 & p-value from F-test < 0.0001
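The pairwise screen described above can be sketched in Python with NumPy. This is a hypothetical helper, not a JMP feature: for every ordered pair of columns it fits Y = b0 + b1*X + b2*X^2 by least squares and reports the overall F-test of that model against an intercept-only fit. Because the model has exactly 2 numerator degrees of freedom, the p-value can use the closed-form tail of the F(2, n-3) distribution, (1 + 2F/(n-3))^(-(n-3)/2), so SciPy is not needed. The names `quadratic_f_test` and `screen_pairs` are illustrative assumptions.

```python
import itertools
import numpy as np

def quadratic_f_test(x, y):
    """Overall F-test of y = b0 + b1*x + b2*x**2 versus an intercept-only model."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = y.size
    design = np.column_stack([np.ones(n), x, x ** 2])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    sse = float(resid @ resid)                  # error sum of squares
    sst = float(((y - y.mean()) ** 2).sum())    # total sum of squares
    df_model, df_error = 2, n - 3
    if sst == 0.0:                              # constant y: test undefined
        return float("nan"), float("nan")
    if sse <= 1e-12 * sst:                      # essentially a perfect fit
        return float("inf"), 0.0
    ssm = max(sst - sse, 0.0)                   # model sum of squares
    f_stat = (ssm / df_model) / (sse / df_error)
    # Closed-form upper tail of F(2, df_error); valid only because df_model == 2
    p_value = (1.0 + 2.0 * f_stat / df_error) ** (-df_error / 2.0)
    return f_stat, p_value

def screen_pairs(columns):
    """Run the quadratic F-test for every ordered (X, Y) pair of named columns."""
    results = {}
    for x_name, y_name in itertools.permutations(columns, 2):
        results[(x_name, y_name)] = quadratic_f_test(columns[x_name], columns[y_name])
    return results

# Hypothetical example: C is an exact quadratic function of A
a = np.arange(-5, 6, dtype=float)
cols = {"A": a, "C": a ** 2}
for (x_name, y_name), (f, p) in screen_pairs(cols).items():
    print(f"{y_name} ~ {x_name} + {x_name}^2: F = {f:.3g}, p = {p:.3g}")
```

Ordered pairs are used on purpose: C ~ A + A^2 fits perfectly, while A ~ C + C^2 does not (A is not a function of C). With many columns this runs p(p-1) tests, so some multiple-comparison adjustment of the p-values is worth considering.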

Thanks in advance for your advice.

Best regards,

Chris

Accepted Solutions


Re: Multivariate Correlation vs Multivariate Relationship

Hi @ChrisLooi,

I would recommend plotting the data as you did, using the Multivariate platform, to find unusual patterns. I also see (at least) two ways to identify complex relationships/associations between your variables:

- In the Multivariate platform, you can also use various Nonparametric Measures of Association. In a reproduction of your example, Hoeffding's D is able to detect the quadratic relationship between A and C.

- You may also create a dummy response Y (it doesn't matter whether it contains fixed or random values) and, based on the terms included in the model, display the Variance Inflation Factors in the Parameter Estimates panel (right-click the table, select Columns, and choose VIF if they are not displayed by default). High VIFs indicate collinearity among the terms in the model.
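For readers working outside JMP, Hoeffding's D can be computed from ranks alone. The sketch below follows the tie-adjusted formula documented for SAS PROC CORR, scaled so that D ranges from -0.5 to 1 (1 meaning complete dependence); I am assuming JMP computes the statistic the same way, so small numeric differences are possible. It returns only the statistic, not the p-value, and builds n-by-n comparison matrices, which is fine for small tables. The function name `hoeffding_d` is hypothetical.

```python
import numpy as np

def _half_step(u):
    # 1 where u > 0, 0.5 where u == 0 (a tie), 0 where u < 0
    return (u > 0) + 0.5 * (u == 0)

def hoeffding_d(x, y):
    """Hoeffding's D (scaled by 30), with ties handled via 1/2 and 1/4 contributions."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    if n < 5:
        raise ValueError("Hoeffding's D needs at least 5 observations")
    # cx[i, j] = 1 if x_j < x_i, 0.5 if tied, 0 otherwise (same for cy)
    cx = _half_step(x[:, None] - x[None, :])
    cy = _half_step(y[:, None] - y[None, :])
    np.fill_diagonal(cx, 0.0)  # a point is not compared with itself
    np.fill_diagonal(cy, 0.0)
    r = 1.0 + cx.sum(axis=1)         # midranks of x
    s = 1.0 + cy.sum(axis=1)         # midranks of y
    q = 1.0 + (cx * cy).sum(axis=1)  # bivariate ranks
    d1 = np.sum((q - 1) * (q - 2))
    d2 = np.sum((r - 1) * (r - 2) * (s - 1) * (s - 2))
    d3 = np.sum((r - 2) * (s - 2) * (q - 1))
    num = (n - 2) * (n - 3) * d1 + d2 - 2 * (n - 2) * d3
    den = n * (n - 1) * (n - 2) * (n - 3) * (n - 4)
    return 30.0 * num / den

# Hypothetical data: C = A^2 with A symmetric around 0 (Pearson r = 0 here)
a = np.arange(-10, 11, dtype=float)
print(hoeffding_d(a, a ** 2))
```

Unlike Pearson's r, D compares the joint ranks with what independence would predict, so a V-shaped pattern still gives a positive value.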

In a reproduction of your example, visualizing the VIFs (on a log scale, because they are very high!) makes it possible to identify potential collinearity issues: the terms AxA, A, and C have very high VIF values, indicating collinearity. Many other terms also have large VIFs (above 5-10), so you can easily see that this dataset has collinearity issues.
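A VIF depends only on the model terms, never on Y, which is why any dummy response works. For term j, VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing term j on all the other terms plus an intercept. A minimal NumPy sketch with hypothetical columns (the helper name `vif` is an assumption, not a JMP function):

```python
import numpy as np

def vif(terms):
    """VIF of each column of `terms` (n rows x p model terms), intercept assumed in the model."""
    X = np.asarray(terms, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        ss_res = float(resid @ resid)
        ss_tot = float(((target - target.mean()) ** 2).sum())
        r2 = 1.0 - ss_res / ss_tot
        out[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return out

# Hypothetical terms: A, A*A, and C, where C is A*A plus a small even perturbation
a = np.arange(-5, 6, dtype=float)
a_sq = a ** 2
c = a_sq + 0.1 * np.cos(np.pi * a)
print(vif(np.column_stack([a, a_sq, c])))  # A near 1; A*A and C very large
```

Here A is uncorrelated with both quadratic terms (they are even functions of a symmetric A), so its VIF stays at 1, while A*A and C nearly duplicate each other and get very large VIFs.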

I attached the dataset used to reproduce your use case. Next time, please provide your example dataset; it will save time and help people answer you more easily.

I hope these two options are helpful,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)


- © 2024 JMP Statistical Discovery LLC. All Rights Reserved.
