MODEL COMPARISON TABLE IN JMP PRO - DIFFERENCE IN VALUES

I am carrying out three analyses on a data set: multiple linear regression, bootstrap forest, and neural network. I then use the Model Comparison table to summarize the results at a glance. The neural network results differ between the neural network output itself and the Model Comparison table. For example, the R Square value in the neural network output was 0.9812414, but it appears as 0.9553 in the Model Comparison table for the test set of the validation column. Why does this value change in the Model Comparison table?

Accepted Solutions

Re: MODEL COMPARISON TABLE IN JMP PRO - DIFFERENCE IN VALUES

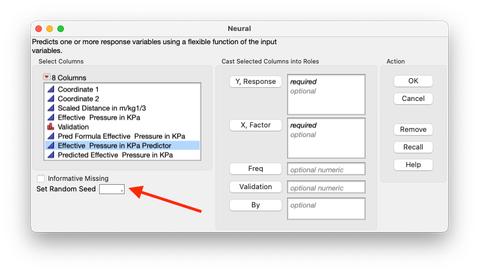

The neural network algorithm starts by randomly assigning a set of weights and then iteratively refitting those weights. This process yields a slightly different set of final weights (and therefore fit statistics) every time the algorithm is run. You saved one specific set of weights when saving the prediction formula for use in Model Comparison, and now when you rerun the analysis by opening the .jrp file, the model is rerun with a new random set of starting weights and produces slightly different numerical results. To obtain the exact same numerical result every time, specify a random seed value before running the model. (See this documentation page.) Setting the random seed will ensure that the random initialization of weights is the same each time.
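To make the effect of the seed concrete, here is a minimal sketch in plain Python. It is not JMP's Neural platform or its fitting algorithm: the function `fit_tiny_network`, the toy sine data, and all parameter values are hypothetical, chosen only to show that random starting weights change the reported R Square unless the seed is fixed.

```python
import math
import random


def fit_tiny_network(xs, ys, seed, hidden=3, epochs=200, lr=0.05):
    """Train a one-hidden-layer tanh network by gradient descent; return R^2.

    Illustrative stand-in only (hypothetical helper, not JMP's algorithm).
    """
    rng = random.Random(seed)  # the seed fixes the random starting weights
    w = [rng.uniform(-1, 1) for _ in range(hidden)]  # input-to-hidden weights
    b = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden biases
    v = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden-to-output weights
    c = 0.0                                          # output bias
    n = len(xs)

    for _ in range(epochs):
        gw = [0.0] * hidden
        gb = [0.0] * hidden
        gv = [0.0] * hidden
        gc = 0.0
        for x, y in zip(xs, ys):
            h = [math.tanh(w[j] * x + b[j]) for j in range(hidden)]
            err = c + sum(v[j] * h[j] for j in range(hidden)) - y
            gc += err
            for j in range(hidden):
                gv[j] += err * h[j]
                d = err * v[j] * (1.0 - h[j] ** 2)  # back-propagate through tanh
                gw[j] += d * x
                gb[j] += d
        # Averaged gradient step on every parameter
        c -= lr * gc / n
        for j in range(hidden):
            w[j] -= lr * gw[j] / n
            b[j] -= lr * gb[j] / n
            v[j] -= lr * gv[j] / n

    preds = [
        c + sum(v[j] * math.tanh(w[j] * x + b[j]) for j in range(hidden))
        for x in xs
    ]
    mean_y = sum(ys) / n
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Toy data (an assumption for illustration): y = sin(x) on [-2, 2]
xs = [i / 10 for i in range(-20, 21)]
ys = [math.sin(x) for x in xs]

r_first = fit_tiny_network(xs, ys, seed=1)
r_repeat = fit_tiny_network(xs, ys, seed=1)  # same seed: same starting weights
r_other = fit_tiny_network(xs, ys, seed=2)   # new seed: new starting weights

print("seed 1:        ", r_first)
print("seed 1 (rerun):", r_repeat)
print("seed 2:        ", r_other)
```

The two runs with the same seed report identical R Square values, while the run with a different seed ends at different weights and a different R Square. That mirrors the situation in the question: the saved prediction formula holds one fit, and rerunning the report without a fixed seed produces another.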

The same concept applies to the Bootstrap Forest (here, the random seed controls the bootstrap sampling), though there the random seed option appears in the specification window rather than in the launch window, as it does for Neural.

JMP Academic Ambassador

Re: MODEL COMPARISON TABLE IN JMP PRO - DIFFERENCE IN VALUES

Thank you for your prompt response, which has clarified the matter.
