

LDA cross-validation

Hello,

I'm a new JMP user (JMP 13.1.0). Is it possible to perform a linear discriminant analysis (LDA) with leave-one-out cross-validation in this version of the software?

Thank you,

Adias
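Independent of what JMP 13.1 offers, it may help to see what leave-one-out cross-validation of an LDA model actually computes: fit the model n times, each time holding out a single row, classify that row, and count the misclassifications. The following is a minimal pure-Python sketch, not JMP's implementation. It assumes numeric predictors, the standard pooled-covariance LDA rule with priors proportional to class frequencies, and a nonsingular pooled covariance matrix. All function names are hypothetical.

```python
import math

def mean(rows):
    """Column means of a list of equal-length numeric rows."""
    return [sum(r[j] for r in rows) / len(rows) for j in range(len(rows[0]))]

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_lda(X, y):
    """Class means, priors, and Sigma^-1 mu_k using the pooled within-class covariance."""
    classes = sorted(set(y))
    p = len(X[0])
    means, priors = {}, {}
    cov = [[0.0] * p for _ in range(p)]
    for k in classes:
        rows = [x for x, lab in zip(X, y) if lab == k]
        mu = mean(rows)
        means[k], priors[k] = mu, len(rows) / len(X)
        for r in rows:
            d = [r[j] - mu[j] for j in range(p)]
            for i in range(p):
                for j in range(p):
                    cov[i][j] += d[i] * d[j]
    dof = len(X) - len(classes)  # pooled covariance divisor n - K
    cov = [[v / dof for v in row] for row in cov]
    w = {k: solve(cov, means[k]) for k in classes}
    return classes, means, priors, w

def predict(model, x):
    """Pick the class with the largest linear discriminant score."""
    classes, means, priors, w = model
    def score(k):
        return (sum(a * b for a, b in zip(x, w[k]))
                - 0.5 * sum(a * b for a, b in zip(means[k], w[k]))
                + math.log(priors[k]))
    return max(classes, key=score)

def loo_error_rate(X, y):
    """Refit without row i, classify row i, and return the fraction misclassified."""
    wrong = 0
    for i in range(len(X)):
        model = fit_lda(X[:i] + X[i + 1:], y[:i] + y[i + 1:])
        wrong += predict(model, X[i]) != y[i]
    return wrong / len(X)

X = [[0, 0], [1, 0], [0, 1], [1, 1], [5, 5], [6, 5], [5, 6], [6, 6]]
y = ["a"] * 4 + ["b"] * 4
print(loo_error_rate(X, y))
```

Because every row is held out exactly once, leave-one-out is the special case of k-fold cross-validation with k equal to the number of rows.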


Re: LDA cross-validation

It is similar to excluding rows, but you can also specify a holdout set for testing the selected model.

You can create multiple data columns for the Validation role, but you can use only one at a time. The idea is that once you decide on the size of the holdout sets, any of them is equally useful. You can also use the same validation column in more than one modeling platform for a fair and valid comparison of models. Why would you need more than one validation column? How would you use multiple validation columns?

I do not know what you mean by "option to select the validation method." There is only one cross-validation method, with holdout sets represented by the Validation analysis role.
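To make the holdout idea concrete, here is a small Python sketch, assuming a 0/1 coding for the validation column (0 = training row, 1 = holdout row). The column is built once and then reused to score two different models on exactly the same holdout rows, which is what makes the comparison fair. `make_validation_column`, `fit_majority`, and `fit_nearest_centroid` are hypothetical stand-ins, not JMP functions or platforms.

```python
import random

def make_validation_column(n_rows, holdout_fraction=0.2, seed=1):
    """0 = training row, 1 = holdout row; exactly round(n * fraction) holdout rows."""
    rng = random.Random(seed)
    n_hold = round(n_rows * holdout_fraction)
    column = [1] * n_hold + [0] * (n_rows - n_hold)
    rng.shuffle(column)
    return column

def holdout_accuracy(fit, X, y, validation):
    """Fit on the 0 rows, then score accuracy on the 1 rows only."""
    train = [(x, lab) for x, lab, v in zip(X, y, validation) if v == 0]
    test = [(x, lab) for x, lab, v in zip(X, y, validation) if v == 1]
    predict = fit([x for x, _ in train], [lab for _, lab in train])
    return sum(predict(x) == lab for x, lab in test) / len(test)

def fit_majority(X, y):
    """Hypothetical baseline model: always predict the most frequent class."""
    top = max(set(y), key=y.count)
    return lambda x: top

def fit_nearest_centroid(X, y):
    """Hypothetical second model: assign each row to the closest class mean."""
    centroids = {}
    for k in set(y):
        rows = [x for x, lab in zip(X, y) if lab == k]
        centroids[k] = [sum(c) / len(rows) for c in zip(*rows)]
    def predict(x):
        return min(centroids,
                   key=lambda k: sum((a - b) ** 2 for a, b in zip(x, centroids[k])))
    return predict

X = [[0.1 * i] for i in range(10)] + [[5 + 0.1 * i] for i in range(10)]
y = ["a"] * 10 + ["b"] * 10
validation = make_validation_column(len(X))  # built once, reused for every model
for name, fit in [("majority", fit_majority), ("nearest centroid", fit_nearest_centroid)]:
    print(name, holdout_accuracy(fit, X, y, validation))
```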


Re: LDA cross-validation

I see, thanks a lot for explaining this!

By 'validation options' I meant different methods of validating models, such as k-fold or Monte Carlo cross-validation, as explained here: https://www.statisticshowto.com/cross-validation-statistics/

As far as I understand, to run 5-fold cross-validation I would need five validation columns. Each column would represent an 80/20 split, with a different 20% held out for testing in each of the five folds. Is there a way to do this in JMP?
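The difference between the two schemes in the linked article can be sketched as follows, assuming the same 0/1 coding (1 = held out) for each validation column. In k-fold cross-validation the held-out 20% sets are disjoint, so every row is held out exactly once across the five columns; in Monte Carlo cross-validation each 80/20 split is drawn independently, so some rows may be held out several times and others never. The function names are hypothetical.

```python
import random

def kfold_validation_columns(n_rows, k=5, seed=1):
    """k columns whose holdout sets (the 1s) are disjoint and cover every row once."""
    rng = random.Random(seed)
    folds = [i % k + 1 for i in range(n_rows)]  # balanced fold labels 1..k
    rng.shuffle(folds)
    return [[1 if f == fold else 0 for f in folds] for fold in range(1, k + 1)]

def monte_carlo_validation_columns(n_rows, n_splits=5, holdout_fraction=0.2, seed=1):
    """Independent random splits; holdout sets may overlap between columns."""
    rng = random.Random(seed)
    n_hold = round(n_rows * holdout_fraction)
    columns = []
    for _ in range(n_splits):
        held = set(rng.sample(range(n_rows), n_hold))
        columns.append([1 if r in held else 0 for r in range(n_rows)])
    return columns

kfold = kfold_validation_columns(100)
mc = monte_carlo_validation_columns(100)
print([sum(c) for c in kfold], [sum(c) for c in mc])
```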


Re: LDA cross-validation

JMP provides K-fold cross-validation in some platforms but not in all of them, so here you would have to implement your own version. Here is one way to do it:

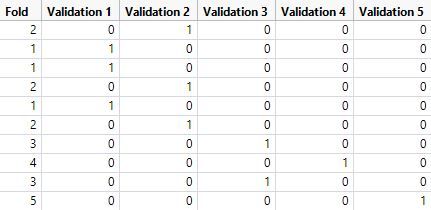

- Create a new data column called Fold that uses the Nominal modeling type and has the formula Random Integer( 1, 5 ). This column assigns each row to one of the five folds.

- Create five more data columns called Validation 1 through Validation 5, also using the Nominal modeling type, each with the formula Fold == i, where i runs from 1 for the first of these columns to 5 for the last. Each formula evaluates to 1 for the rows in fold i (the validation rows) and 0 for all other rows (the training rows).

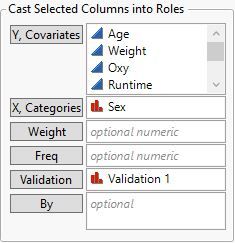

- Launch the Discriminant platform five times, putting a different Validation i column in the Validation analysis role each time.
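The same column recipe can be mirrored outside JMP to check its logic. This Python sketch assumes `random.randint(1, 5)` as a stand-in for `Random Integer( 1, 5 )` (both include the endpoints) and builds one 0/1 indicator column per fold, where 1 marks the validation rows of that fold. The function names are hypothetical.

```python
import random

def make_fold_column(n_rows, k=5, seed=None):
    """Mirror of the Fold formula column: one random integer in 1..k per row."""
    rng = random.Random(seed)
    return [rng.randint(1, k) for _ in range(n_rows)]  # randint includes both ends

def make_validation_columns(fold, k=5):
    """Validation i = (Fold == i): 1 for fold i's validation rows, 0 for training rows."""
    return {f"Validation {i}": [1 if f == i else 0 for f in fold] for i in range(1, k + 1)}

fold = make_fold_column(200, seed=42)
columns = make_validation_columns(fold)
for name, col in columns.items():
    # Each platform launch would train on the 0 rows and validate on the 1 rows.
    print(name, "validation rows:", sum(col), "training rows:", len(col) - sum(col))
```

Because each row's fold is drawn independently, the five folds will generally not be exactly equal in size. If equal sizes matter, one alternative is to assign the labels 1 to 5 in rotation and then shuffle them.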

You should have new columns for 5-fold cross-validation in your data table like this.

This first iteration of your model fitting might look like this:

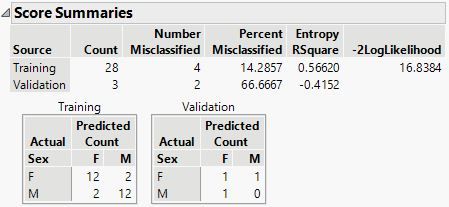

Use the cross-validation information for each of the folds, such as:

You can then combine the five sets of results into overall training and validation results as you see fit, for example as described in your cited reference.
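Once each of the five fits has reported its validation results, two common summaries are a pooled error rate (total misclassified divided by total validation rows) and the mean of the per-fold error rates. They coincide when all folds are the same size, which folds drawn with Random Integer generally are not. The sketch below assumes each fold is summarized as a (misclassified, validation rows) pair; `combine_fold_results` is a hypothetical helper.

```python
import statistics

def combine_fold_results(results):
    """results: one (n_misclassified, n_validation_rows) tuple per fold."""
    rates = [wrong / n for wrong, n in results]
    pooled = sum(w for w, _ in results) / sum(n for _, n in results)
    return {
        "pooled_rate": pooled,                # every validation row weighted equally
        "mean_rate": statistics.mean(rates),  # every fold weighted equally
        "sd_rate": statistics.stdev(rates),   # fold-to-fold variability (needs >= 2 folds)
    }
```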



- © 2025 JMP Statistical Discovery LLC. All Rights Reserved.
