JMP User Community

Interpret prediction profiler confidence intervals for non significant parameters

Hello everyone,

Please excuse my basic question.

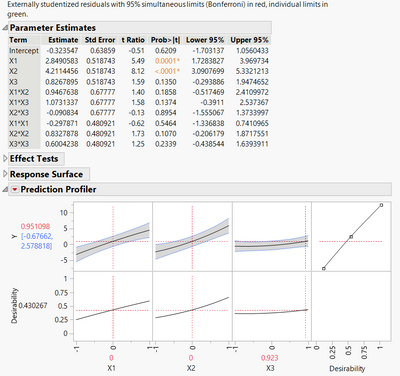

I am fitting a response surface model (a GLM including main effects, interactions, and quadratic effects) to the results of a central composite design with three factors, called X1, X2, and X3 for the sake of the example. In the parameter estimates, some terms are significant and some are not, at an alpha risk of 5%. My question concerns one factor, say X3, for which neither the main effect, the interactions, nor the quadratic effect is significant.

I am interested in analysing the prediction profiler because it indicates the range of predicted Y values across the design space, taking all three factors X1, X2, and X3 into account (at given settings).

As none of the X3 estimates is significant, I am not willing to interpret them (even if it is appropriately stated that any interpretation is unreliable due to random variation and that there is no conclusive evidence that the variable has any effect at all).

My question is then the following one: Does it still make sense to interpret the confidence intervals of a prediction profiler for this parameter X3? Is this confidence interval somehow reliable even when none of the estimates is significant?

Many thanks in advance for your help,

Chris

Accepted Solutions

Re: Interpret prediction profiler confidence intervals for non significant parameters

I would say it makes sense to look at that confidence interval. The non-significance is shown by the confidence interval for X3 including the horizontal (no effect) line. But the interval conveys additional information - about the potential range of the effect consistent with the data. X3 may not be significant but could potentially have a large effect - it's just that you can't say with any confidence the direction of that effect. But a wide confidence interval would indicate it is a noisy effect and one that might deserve further investigation (collect more data, for example). The binary choice of significance/non-significance is not particularly useful (I'd say it is of little use and potentially of much danger). Some effects are more distinguishable from random noise than others and some effects have stronger relationships with your response variable than others. These are different questions.
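To make the point above concrete, here is a minimal sketch in plain Python. It is not JMP's implementation: the data are made up, the model is reduced to a single straight-line term in X3, and the names `fit_line`, `slope_ci`, and `mean_ci` are hypothetical helpers. It computes a 95% interval for the X3 slope and a 95% interval for the mean prediction at three X3 settings, which is the kind of band a profiler trace draws.

```python
import math

# Hypothetical data: 10 runs, X3 coded as -1 / 0 / +1, made-up responses.
x3 = [-1, -1, -1, 0, 0, 0, 0, 1, 1, 1]
y = [8, 12, 10, 14, 9, 11, 13, 15, 9, 12]

# Two-sided 95% t quantile for n - 2 = 8 error degrees of freedom.
T_CRIT = 2.306


def fit_line(x, y):
    """Least-squares fit of y = b0 + b1 * x, returning what the intervals need."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    mse = sse / (n - 2)  # residual variance estimate
    return {"n": n, "x_bar": x_bar, "sxx": sxx, "b0": b0, "b1": b1, "mse": mse}


def slope_ci(fit, t_crit):
    """Confidence interval for the slope b1."""
    se = math.sqrt(fit["mse"] / fit["sxx"])
    return fit["b1"] - t_crit * se, fit["b1"] + t_crit * se


def mean_ci(fit, x0, t_crit):
    """Confidence interval for the mean response at setting x0."""
    se = math.sqrt(fit["mse"] * (1 / fit["n"] + (x0 - fit["x_bar"]) ** 2 / fit["sxx"]))
    pred = fit["b0"] + fit["b1"] * x0
    return pred - t_crit * se, pred + t_crit * se


fit = fit_line(x3, y)
print("slope estimate:", fit["b1"])
print("95% CI for slope:", slope_ci(fit, T_CRIT))
for x0 in (-1, 0, 1):
    print("95% CI for mean response at X3 =", x0, ":", mean_ci(fit, x0, T_CRIT))
```

With these made-up numbers the slope interval is roughly (-1.16, 3.16). It contains zero, so the term is not significant, yet it is also consistent with a change of about 6 response units across the X3 range. A horizontal line fits inside the prediction band at all three settings, which is the "no effect" reading, but the band still shows how large an effect the data cannot rule out.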

Re: Interpret prediction profiler confidence intervals for non significant parameters

Welcome to the community. The first question is: how much of a practical change in the response is associated with X3? You should always evaluate practical significance before statistical significance. Did the response variable change in a meaningful way? This must be judged by the subject matter expert (SME).

On a side note, response surface designs are typically used AFTER the significance of the factors has been established through screening or other sequential designs. These types of designs are meant to build a surface for selecting levels of those factors already found to be significant.

A second note: statistical significance is a conditional statement. It compares (1) the variation created by manipulating factors at specified levels with (2) the random error (noise), under (3) a given set of conditions (the inference space). If any of those three components changes (e.g., in the future), so may the statistical significance.
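As a rough illustration of that comparison, the sketch below holds a two-level effect fixed and changes only the noise. Everything here is an assumption for the example: the numbers are invented, `t_ratio` and `is_significant` are hypothetical helpers, and the test is a plain pooled two-sample t-ratio rather than anything taken from the original model.

```python
import math

# Two-sided 95% t quantile for 6 degrees of freedom,
# i.e. 4 runs per level and a pooled standard deviation: 2 * (4 - 1) = 6.
T_CRIT_DF6 = 2.447


def t_ratio(effect, pooled_sd, n_per_level):
    """t-ratio for the difference between the means at two factor levels."""
    se = pooled_sd * math.sqrt(2 / n_per_level)
    return effect / se


def is_significant(effect, pooled_sd, n_per_level=4, t_crit=T_CRIT_DF6):
    """Compare the t-ratio with the critical value (default matches 4 runs per level)."""
    return abs(t_ratio(effect, pooled_sd, n_per_level)) > t_crit


# Same effect of 2.0 response units, two hypothetical noise levels.
print("quiet conditions:", t_ratio(2.0, 1.0, 4), is_significant(2.0, 1.0))
print("noisy conditions:", t_ratio(2.0, 2.0, 4), is_significant(2.0, 2.0))
```

The effect is identical in both calls; only the noise it is compared against differs, and the verdict flips from significant to not significant.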

Re: Interpret prediction profiler confidence intervals for non significant parameters

One question/comment about this: your first point seems to suggest an initial screening to include statistically significant factors in your model (and so to exclude the others). Indeed, I often work that way myself. But it causes me discomfort when I do so. Omitting factors because they are non-significant does simplify the model (and preserves degrees of freedom) but risks changing the estimated impact of what is left in the model. It is hard to claim that the remaining factors are being assessed more accurately once non-significant factors are removed from the model.

As a result, as the data sets become larger and larger, I have increasingly adopted the practice of leaving all potential factors (to the extent possible, and hopefully governed somewhat by subject matter knowledge about what potential factors make sense) in the model, significant or not. Can you comment on this issue?
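The risk described above depends on how the factors relate to each other. The following minimal sketch uses invented, noise-free data and hypothetical helper names to compare the X1 slope with and without X2 in the model, once when X2 is correlated with X1 and once when it is orthogonal to X1 (as the main effects are in a balanced designed experiment).

```python
def center(v):
    """Subtract the mean from every value."""
    m = sum(v) / len(v)
    return [vi - m for vi in v]


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def slope_x1_alone(x1, y):
    """Slope of x1 when it is the only term in the model."""
    c1, cy = center(x1), center(y)
    return dot(c1, cy) / dot(c1, c1)


def slope_x1_with_x2(x1, x2, y):
    """Slope of x1 when x2 is also in the model (2x2 normal equations)."""
    c1, c2, cy = center(x1), center(x2), center(y)
    s11, s22, s12 = dot(c1, c1), dot(c2, c2), dot(c1, c2)
    s1y, s2y = dot(c1, cy), dot(c2, cy)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det


x1 = [1, 2, 3, 4, 5, 6]
x2_correlated = [1, 1, 2, 2, 3, 3]      # rises with x1
x2_orthogonal = [1, -1, 0, 0, -1, 1]    # uncorrelated with x1

for label, x2 in (("correlated", x2_correlated), ("orthogonal", x2_orthogonal)):
    # Hypothetical true relationship: y = 1 * x1 + 2 * x2, no noise.
    y = [a + 2 * b for a, b in zip(x1, x2)]
    print(label, "| x1 slope with x2:", slope_x1_with_x2(x1, x2, y),
          "| x1 slope alone:", slope_x1_alone(x1, y))
```

In the correlated case, dropping X2 nearly doubles the X1 slope because X1 absorbs part of the X2 effect. In the orthogonal case the X1 slope is unchanged, and in real data with noise only the error estimate and its degrees of freedom would shift.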

Re: Interpret prediction profiler confidence intervals for non significant parameters

Dale, here are my thoughts. I apologize, but they are mostly philosophical. All scientific investigations are iterative (I guess that's why we call it continuous improvement). Why are we constantly "optimizing" and never seem to "get there"? IMHO, there are 2 primary reasons:

1. We never start with ALL factors. We always start with some subset, likely the ones we already have hypotheses about. As for the factors we don't feel strongly about, we seldom collect data to show that they have no effect. It is a typical human bias.

2. The world evolves. New materials and technologies are constantly being developed (and applications for those).

In search of the best manageable (e.g., simple) process, we use constant iteration of induction and deduction. Along this path, we make decisions weighing the effectiveness and the efficiency of the model(s). Ultimately, we are trying to understand causality. We use the methods at our disposal to try to determine which factors should be included in our future studies and what order of model is necessary. This includes both practical evaluation and statistical evaluation. I am extremely cautious about using statistical significance because it is a comparison, and if you don't understand what you are comparing, it is meaningless. On the other hand, if you do have some idea of what is being compared (e.g., what creates the design space and what creates the inference space) and, for example, you know those sources of variation are representative of future conditions, then by all means you can feel confident in that analysis. Finally, we recognize that we live in a multivariate world (e.g., customers want the product to meet multiple criteria), and compromises will likely have to be made to achieve the best result under the current circumstances.
