

Difference between JMP and Excel in dummy variable regression outputs

Hi all,

Newbie question here: I'm running a dummy variable regression in JMP for a school project. When I cross-checked the results against Excel's, I noticed that the coefficients are completely different even though the t-statistics are the same.

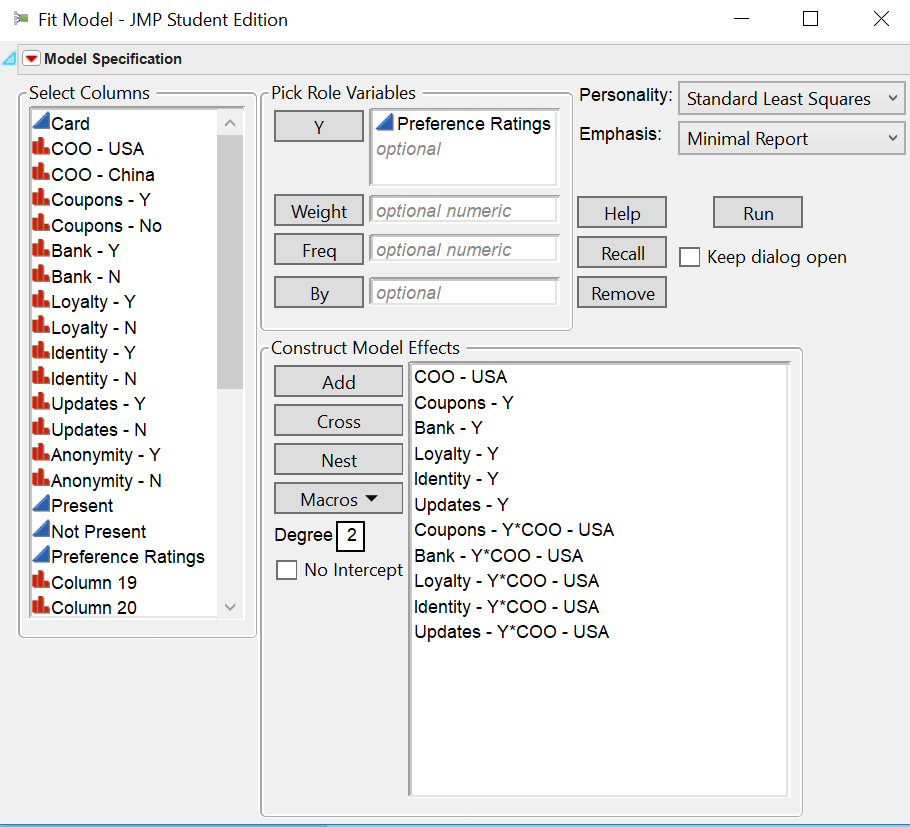

JMP:

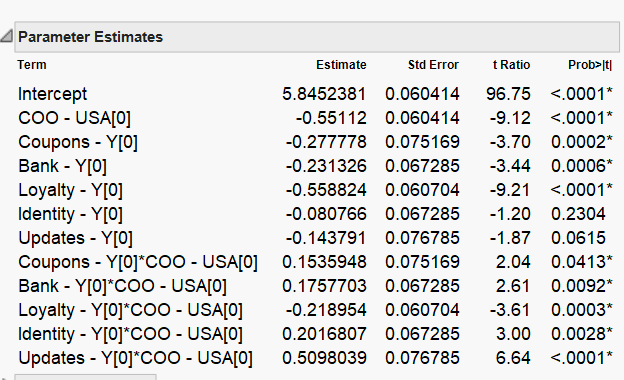

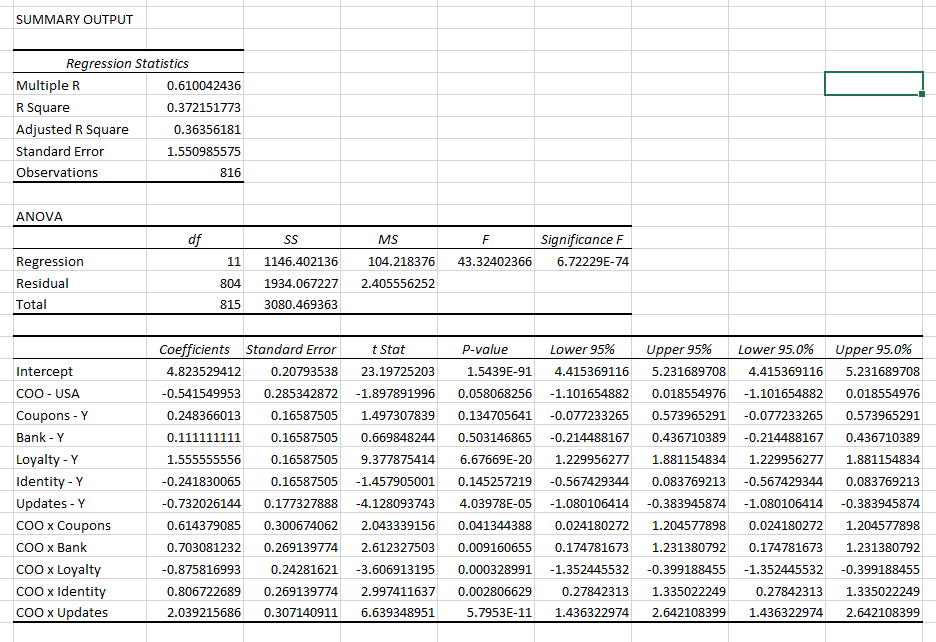

The two images above are from JMP. As you can see, the figures differ from Excel's output even though I'm using the same attributes.

It's not because of the interaction effects, either: I also ran the regression with just the main variables, without the interaction terms (e.g. COO x Coupons), and the results still differ.

Strangely, Excel's version seems more correct than JMP's, aligning more closely with my secondary and other primary research.

Any advice?
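One pattern that produces exactly this symptom, offered as a possible explanation rather than a diagnosis since the screenshots are not reproduced here: JMP's Fit Model codes nominal factors with -1/+1 (sum-to-zero) effect coding, while a regression built by hand in Excel usually uses 0/1 dummy columns. For a two-level factor with no interactions, the two codings give the same t-ratio, but the effect-coded slope is half the dummy-coded slope and the intercepts differ. The sketch below uses made-up data and a hypothetical `coupon` factor to show this; it is not the poster's data set.

```python
import numpy as np

def ols(X, y):
    """Return OLS coefficients, their standard errors, and t-ratios."""
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - p)           # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)      # covariance of the estimates
    se = np.sqrt(np.diag(cov))
    return beta, se, beta / se

# Simulated data (assumption): one two-level factor, 0 = no coupon, 1 = coupon
rng = np.random.default_rng(0)
n = 40
coupon = rng.integers(0, 2, size=n)
y = 5.0 + 2.0 * coupon + rng.normal(scale=1.0, size=n)

ones = np.ones(n)
X_dummy = np.column_stack([ones, coupon])           # 0/1 dummy coding
X_effect = np.column_stack([ones, 2 * coupon - 1])  # -1/+1 effect coding

b_d, se_d, t_d = ols(X_dummy, y)
b_e, se_e, t_e = ols(X_effect, y)

print("dummy  coding: slope", b_d[1], "t-ratio", t_d[1])
print("effect coding: slope", b_e[1], "t-ratio", t_e[1])
```

The sign of the effect-coded slope depends on which level is coded +1, so a sign flip between the two programs is also possible. Both fits give identical predictions; only the meaning of the coefficients changes (difference from the baseline level versus difference from the average of the levels).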


Re: Difference between JMP and Excel in dummy variable regression outputs

Hi Mark,

Thanks for your help! You've helped me so much on how to use JMP.

I'm at the finish line, so just two more questions:

1) I can't seem to find the standard error of estimate for the overall model after running the regression (I can find only the standard error for each independent variable). Can you point me in the right direction for this?

2) Let's say I have coefficient estimates from one regression and estimates from another regression. Can I run a t-test on the coefficient estimates in JMP? If so, do you have any advice on how to approach that?

Really appreciate your help on this! Thanks.
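On question 1: the "standard error of estimate" for the whole model is usually the residual standard deviation, sqrt(SSE / (n - p)), where p counts the estimated parameters including the intercept. JMP reports it as Root Mean Square Error in the Summary of Fit table, and Excel's regression tool labels it Standard Error under Regression Statistics. A minimal sketch of the calculation, using simulated data and hypothetical variable names:

```python
import numpy as np

# Simulated data (assumption): two continuous predictors and a noisy response
rng = np.random.default_rng(1)
n = 30
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 1.0 + 0.5 * x1 - 0.3 * x2 + rng.normal(scale=2.0, size=n)

# Design matrix with an intercept column
X = np.column_stack([np.ones(n), x1, x2])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)

resid = y - X @ beta
sse = float(resid @ resid)       # error sum of squares
df_error = n - X.shape[1]        # n minus number of estimated parameters
rmse = np.sqrt(sse / df_error)   # standard error of estimate (root mean square error)

print("error degrees of freedom:", df_error)
print("root mean square error:", rmse)
```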


Re: Difference between JMP and Excel in dummy variable regression outputs

I need some clarification.

- What do you mean by the "estimate of the overall model?" You estimate parameters. Can you show me an example where it is available? Then I might recognize it.

- We generally do not compare parameter estimates between models. Instead, we use model selection criteria (e.g., adjusted R square, AICc, BIC, Mallows's Cp) to choose a model with minimum bias and variance, and then make inferences about the parameter estimates of the selected model. We would not want to compare two estimates from different models with a hypothesis test for a difference. Estimates are expected to depend on the other terms in the model, except in the rare case that all the estimates are orthogonal (uncorrelated), so different models (i.e., different sets of terms) would usually yield different estimates for any given term.
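The point about orthogonality can be checked numerically. The sketch below, on simulated data with hypothetical names, fits a term alone and then alongside a second term: in a balanced -1/+1 design the estimate does not move, while with correlated predictors it does.

```python
import numpy as np

def fit(cols, y):
    """OLS coefficients for an intercept plus the given predictor columns."""
    X = np.column_stack([np.ones(len(y)), *cols])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

rng = np.random.default_rng(2)

# Orthogonal case: balanced 2x2 factorial in -1/+1 coding, replicated 10 times
a = np.tile([-1.0, -1.0, 1.0, 1.0], 10)
b = np.tile([-1.0, 1.0, -1.0, 1.0], 10)
y_orth = 3 + 1.5 * a + 0.8 * b + rng.normal(size=a.size)
slope_a_alone = fit([a], y_orth)[1]
slope_a_with_b = fit([a, b], y_orth)[1]

# Correlated case: x2 is built to be strongly correlated with x1
x1 = rng.normal(size=200)
x2 = 0.8 * x1 + 0.6 * rng.normal(size=200)
y_corr = 3 + 1.5 * x1 + 0.8 * x2 + rng.normal(size=200)
slope_x1_alone = fit([x1], y_corr)[1]
slope_x1_with_x2 = fit([x1, x2], y_corr)[1]

print("orthogonal: a alone", slope_a_alone, "| a with b", slope_a_with_b)
print("correlated: x1 alone", slope_x1_alone, "| x1 with x2", slope_x1_with_x2)
```

In the correlated case the estimate for `x1` alone absorbs part of the effect of the omitted `x2`, which is why a hypothesis test comparing the two estimates would not be meaningful.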


Re: Difference between JMP and Excel in dummy variable regression outputs

Hi Mark,

Thanks for getting back so promptly.

The attached images show the analysis output after running a regression. My question is whether there is a way to find the "total standard error of estimate", if there is such a thing, since at the moment the only standard errors I can see are those of the individual coefficient estimates.

Thanks. I understand where you're coming from.

One more thing: is there a way to find the standard error of the beta coefficient for each independent variable in the Fit Model analysis? I understand this is the method used to find the beta coefficients: http://www.jmp.com/support/notes/36/009.html

Regards,

Fred

[Edited as I've found the answer to one of the questions]


Re: Difference between JMP and Excel in dummy variable regression outputs

There are no attached images.

The link takes me to a page that says "Timed out."

Which question is answered? What is the answer? (Thanks!)


Re: Difference between JMP and Excel in dummy variable regression outputs

Hi Mark,

All the questions are answered except for "how to find the standard error of the beta coefficient for each independent variable" after running a regression in Fit Model in JMP.

Can you point me in the right direction? Thanks!


Re: Difference between JMP and Excel in dummy variable regression outputs

The standard error is 1 by definition.


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
