JMP User Community Discussions: Solve problems, and share tips and tricks with other JMP users.

Customize Response Surface model picking weird number other than lower/center/upper level.

Hi all,

I ran an initial DSD and am now trying to follow up with a Custom Design that includes some interaction terms, mimicking an RSM.

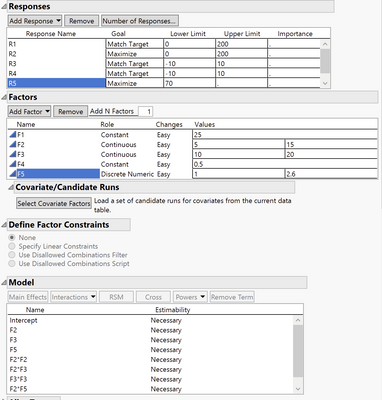

I set the lower and upper limits of each factor (except the "fixed factors"), as you can see below, and added some interaction terms.

Then I made the design with I-optimality and without center points or replicates, which created 15 treatments by default.

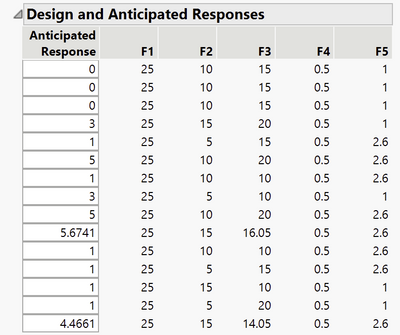

Most of the generated factor levels are as expected. However, the third factor (F3) has two levels that are neither the upper/lower limit nor the center value. You can see the non-integer values (16.05 and 14.05) in the screenshot.

Does anybody know why I get these numbers? What is the theoretical reason behind them, and is there any way to force them to integer values at either the upper/lower end or the center point?

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

My guess is that what you are seeing is related to the distances of the star points in a central composite design (https://www.itl.nist.gov/div898/handbook/pri/section3/pri3361.htm). F2 and F3 are the only continuous factors, and only for them are you seeing "weird" values. I tried reproducing your design and got a similar output. Once you have created your DoE table to fill in with responses, you can change these values, but since I am not familiar with the effects of your factors, it is not clear whether that would "destroy" the design. I also strongly recommend the DoE module of SAS's STIPS (Statistical Thinking for Industrial Problem Solving) course. Also, aren't R3 and R4 fully redundant?

Edit: OK, in your screenshot the "weird" values appear only for F3, not F2.

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

It turns out that the coordinate exchange method often moves a design point away from the expected coordinate (e.g., F3 = 15) to improve optimality, given the factor definitions, the terms in the model, the number of runs, and so on. This result is normal and common. Combinatorial methods such as factorial designs and DSDs produce balanced designs; balance is not a goal of Custom Design.

This design is perfectly acceptable. However, it might not be practical. Feel free to move these points back to F3 = 15 if you like; you won't break anything. Save a copy of the custom design and open it so that you have two copies, change the F3 levels to 15 in one of them, and then select DOE > Design Diagnostics > Compare Designs. The optimality will be slightly reduced.
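To make the coordinate exchange behavior above concrete, here is a minimal toy sketch. It is not JMP's implementation. It assumes a single continuous factor in coded units (-1 = lower limit, 0 = center, +1 = upper limit), a full quadratic model, 5 runs, and a candidate grid spaced 0.01 apart; all of these are hypothetical stand-ins for the F3 setup. The I-criterion is the average prediction variance over the region, trace((X'X)^-1 M), where M holds the moments of the model terms under a uniform distribution on [-1, 1]. The helper `snap` mimics editing the off-level values back to the center, and the ratio of criteria plays the role of a relative I-efficiency in Compare Designs. By hand calculation in this toy setting, the symmetric design {-1, -0.15, 0, 0.15, 1} has a slightly lower I-criterion (about 0.4442) than {-1, 0, 0, 0, 1} (exactly 4/9), which mirrors the symmetric 14.05/16.05 pair around 15.

```python
import random

# Hypothetical stand-in for one continuous factor (like F3) in coded units:
# -1 = lower limit, 0 = center, +1 = upper limit. Full quadratic model.
def model_row(x):
    return [1.0, x, x * x]

def moment(k):
    """E[x**k] for x uniform on [-1, 1]."""
    return 0.0 if k % 2 else 1.0 / (k + 1)

# Region moment matrix M for the terms [1, x, x^2].
M = [[moment(i + j) for j in range(3)] for i in range(3)]

def solve(a, b):
    """Solve A Z = B by Gauss-Jordan elimination; None if A is (near) singular."""
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            return None
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [v / lead for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def i_criterion(design):
    """Average prediction variance over the region (in units of sigma^2):
    trace((X'X)^-1 M). Lower is better; inf if X'X is singular."""
    rows = [model_row(x) for x in design]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    z = solve(xtx, M)
    return float("inf") if z is None else sum(z[i][i] for i in range(3))

def coordinate_exchange(n_runs, grid, seed=1, max_passes=50):
    """Greedy coordinate exchange: for each run in turn, try every grid value
    and keep any value that lowers the I-criterion; repeat until no change."""
    rng = random.Random(seed)
    design = [rng.choice(grid) for _ in range(n_runs)]
    best = i_criterion(design)
    for _ in range(max_passes):
        improved = False
        for i in range(n_runs):
            for value in grid:
                trial = design[:i] + [value] + design[i + 1:]
                crit = i_criterion(trial)
                if crit < best - 1e-12:
                    design, best, improved = trial, crit, True
        if not improved:
            break
    return sorted(design), best

def snap(design, levels=(-1.0, 0.0, 1.0)):
    """Move every run to its nearest standard level (the 'edit it back to 15' step)."""
    return [min(levels, key=lambda lv: abs(x - lv)) for x in design]

if __name__ == "__main__":
    grid = [round(-1 + 0.01 * k, 2) for k in range(201)]  # candidate settings
    design, crit = coordinate_exchange(5, grid)
    snapped = snap(design)
    print("coordinate exchange:", design, round(crit, 5))
    print("snapped to -1/0/+1: ", snapped, round(i_criterion(snapped), 5))
    # Relative I-efficiency of the snapped design versus the exchanged one.
    print("relative I-efficiency:", round(crit / i_criterion(snapped), 4))
```

A relative I-efficiency below 1 means the snapped design has a larger average prediction variance. Because coordinate exchange is a local search, a different seed can land on a different local optimum, which is one reason JMP-style algorithms use multiple random starts.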

Did you consider using optimal augmentation of the original DSD instead of a new custom design?

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

Thanks, @Ressel and @Mark_Bailey.

My laptop won't let me log back in to my original account, so I am using this one.

As @Mark_Bailey said, these non-integer values can appear in any continuous factor (F2 or F3).

I have some follow-up questions.

1. It is a relief that moving those non-integer values back to integers would not break the design. How would you "move" those points, though? Since it will not break the design, I could just edit the values back to the original (integer) values in the generated table. However, that would not show me how changing these points affects the correlations between factors (e.g., the Color Map on Correlations). I am just interested in seeing how the aliasing changes as I change those values. Is there a way to edit these values in the Custom Design tab?

2. I initially wanted to follow up my DSD with Augment Design. Augment Design seems to generate treatments (a "local" design space) that I have not explored, to improve the predictions of the model built from the DSD. But it does not necessarily generate runs in the design space I would like to follow up on. I have only used the standard Augment Design on the DOE tab, which gives me less freedom to play around (e.g., holding a factor constant). I am not familiar with the 'optimal augmentation' you mentioned, and I would like to explore it. Could you point me to the right resources (webinar or posting) to look into, please?

Thanks for your help!

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

1. You cannot change factor settings in Custom Design. Only JMP can do that. Click the Make Table button and edit the values in the resulting data table. Then use DOE > Design Diagnostics > Evaluate Design to get information about the design, both before and after you edit the non-integer values.

2. You should read the documentation about Augment Design. You can change the factor ranges during augmentation before generating new runs. Be careful! Do not narrow the factor ranges just because you expect the desired response in a local region. Narrower ranges are less informative for estimating the model parameters, which is the whole point of an experiment. Save confirmation for separate tests later, after you use the model to predict.
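For item 1 above, one rough way to see what editing values does to the aliasing is to recompute the absolute correlations between model-term columns, which is the kind of information the Color Map on Correlations in Evaluate Design displays. This is a minimal sketch, assuming a hypothetical 8-run design in coded units with terms F2, F3, F2*F3, and F3^2, where 0.21 stands in for an off-center F3 value; JMP's own coding and term construction may differ, so treat the numbers as illustrative only.

```python
import math

def pearson(u, v):
    """Pearson correlation of two columns; 0.0 if either column is constant."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    if su == 0.0 or sv == 0.0:
        return 0.0
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (su * sv)

def term_columns(runs):
    """Hypothetical model terms for two continuous factors in coded units."""
    return {
        "F2": [f2 for f2, _ in runs],
        "F3": [f3 for _, f3 in runs],
        "F2*F3": [f2 * f3 for f2, f3 in runs],
        "F3^2": [f3 * f3 for _, f3 in runs],
    }

def correlation_map(runs):
    """Absolute correlation between every pair of model-term columns."""
    cols = term_columns(runs)
    return {(a, b): abs(pearson(cols[a], cols[b])) for a in cols for b in cols}

# Hypothetical coded runs (F2, F3); 0.21 stands in for an off-center F3 value.
BEFORE = [(-1, -1), (1, -1), (-1, 1), (1, 1), (1, 0.21), (-1, -0.21), (0, 0), (0, 1)]
# The same runs after editing the off-center F3 values back to the center.
AFTER = [(f2, 0.0 if abs(f3) == 0.21 else f3) for f2, f3 in BEFORE]

if __name__ == "__main__":
    before, after = correlation_map(BEFORE), correlation_map(AFTER)
    names = list(term_columns(BEFORE))
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            print(f"{a:>5} vs {b:<5} before={before[(a, b)]:.3f} after={after[(a, b)]:.3f}")
```

In practice, Evaluate Design on the edited data table gives you this comparison directly; the sketch only shows what the map is summarizing.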
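For item 2, the cost of narrowing a range can be made concrete for a straight-line term: the least-squares slope has variance sigma^2 / sum((x - xbar)^2), so halving the range with the same number of runs quadruples that variance. This is a minimal sketch with hypothetical settings for one factor, assuming constant error variance sigma^2 = 1.

```python
def slope_variance(xs, sigma2=1.0):
    """Var(b1) = sigma^2 / sum((x - xbar)^2) for a straight-line fit y = b0 + b1*x."""
    xbar = sum(xs) / len(xs)
    sxx = sum((x - xbar) ** 2 for x in xs)
    return sigma2 / sxx

wide = [-1.0, -1.0, 1.0, 1.0]    # hypothetical: 4 runs at the full range
narrow = [-0.5, -0.5, 0.5, 0.5]  # the same 4 runs with the range halved
print(slope_variance(wide), slope_variance(narrow))  # 0.25 1.0
```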

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

I am just going to add some philosophical comments to the discussion; feel free to ignore me completely.

The idea behind response surface design is to map the response as a function of important factors (model terms). Three things the experimenter needs to keep in mind:

1. The "true" surface already exists and we can't change it.

2. Surfaces are "n" dimensional, although there is strong empirical evidence to suggest that only a subset of the surface is needed to be useful (scarcity of effects principle).

3. It may not be useful or practical to map a surface using design factors when the conditions under which the experiment was run (i.e., inference space as a function of noise) can't be repeated.

There are many different approaches to mapping the surface, but in all cases, iteration is key to an efficient and effective investigation. I am an advocate of sequential experimentation. For example (overly simplified): one may start with directed sampling to determine dominant components or sources of variation and assess measurement errors. Experiment on the dominant components to identify significant factors and the effects of noise. Move and/or augment the space defined by the significant factors and find where those factors are robust to noise.

From a geometric and statistical analysis standpoint, the more balanced the design, the easier the analysis and, hopefully, the less likely it is to be biased (level setting may bias the study). Of course, as computing power has increased, balance has become less necessary. However, when you are mapping the surface, you want to sample it in a way that mitigates your own biases about where to focus. Ironically, once you are near the optimum and fine-tuning the factor levels in that space, statistical significance is no longer as important (it has already been established). You are simply testing different locations on the surface to find the best response values.

“A good model is an approximation, preferably easy to use, that captures the essential features of the studied phenomenon and produces procedures that are robust to likely deviations from assumptions.” G.E.P. Box

“The experimenter is like the person attempting to map the depth of the sea by making soundings at a limited number of places” Box, Hunter & Hunter (p. 299)

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

Hi @statman and @Mark_Bailey,

Thanks for the insightful philosophy and practical help regarding RSM, and DOE in general.

I should say that my initial DSD yielded a fairly good model, although Augment Design could improve it in general. However, I am more interested in scanning the 'sweet spot' where the response is expected to be optimal than in improving the model's predictions. So building a better model is secondary for me.

For instance, I have 5 continuous factors, and I would like to hold two of them constant, as you can see in the screenshot in my initial post. These two factors do not have a big impact on the responses, and they are insensitive to changes in the other factors. I also trust the model predictions from the DSD. That is why I wanted to run Augment Design within a somewhat limited range.

I looked through the Augment Design tab, but I did not find a way to change the 'property' of factors (continuous, constant, categorical), since it augments the design based on the previously built model. I can somewhat restrict the continuous factors that I do not want to vary by using Define Factor Constraints in Augment Design. However, I cannot fix a factor at a specific value (in other words, make it 'constant').

Would Augment Design still be helpful in this case? Or should I use Custom Design, which gives me much more freedom in fixing and changing the factors?

My original purpose in using DOE is to find the optimal conditions (the conditions that maximize the yield) faster and with fewer experimental runs, because time is important and the materials I am working with are expensive. I believe the DSD did a fair job of building a reasonably predictive model, which lets me look at the local region where the responses should be optimal. However, after reading @Mark_Bailey's and @statman's comments, I think it might be worth improving the model first using Augment Design (over a wider range than where the optimum is expected), and then running additional validation runs using Custom Design.

I guess it all depends on the situation, and there is no single answer. Still, I would like to hear more of your comments, practical or philosophical. I started reading about DOE and using JMP only about 10 days ago, so any comments for this newbie would be appreciated.

Thanks!

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

Why keep these two factors in the model and the new design? You can also drop factors when you augment if screening finds them among the 'trivial many,' not among the 'vital few.'

Some hallmarks of DOE are efficiency, economy, and rapid learning. The result is a model that hopefully enjoys a long and productive life in service to you. It is worth the investment. @statman has professed the necessity and the benefit of iteration in the learning process. So screen, augment, optimize, and verify. You are correct, though, that this everyday workflow has myriad variations that depend on the situation.

Keep asking questions.

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

I don't think I made clear why I wanted to keep those two factors in the model.

The responses are insensitive to them in the region 'where responses seem to be optimized,' so they can be dropped when optimizing the responses.

However, they do have significant effects on the responses 'where responses are not optimized.' So for model building (i.e., Augment Design), I do want to include them.

I am pretty sure that I can find optimal and robust conditions where the responses are optimized without Augment Design. However, after discussing this with experts like you, I decided to step back and try to understand the model better using Augment Design. Thanks for all your help and support!

Re: Customize Response Surface model picking weird number other than lower/center/upper level.

Thanks for the clarification.

You might use a feature of the Prediction Profiler for robust process design that is not available when the profiler is used within a fitting platform. Save the model as a column formula. Select Graph > Profiler. Assign the hard-to-control factors to the Noise Factor role. The profiler will simultaneously maximize the desirability of the responses and minimize the first derivatives of the responses with respect to the noise factors.
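The trade-off described above can be illustrated with a minimal sketch. It assumes a made-up fitted model with one control factor x and one noise factor z (both coded -1 to 1); the coefficients are hypothetical. JMP's Profiler handles this through desirability functions; the sketch instead swaps in a simple penalized score (prediction at z = 0 minus |dy/dz|), so treat it as an illustration of why the robust setting moves toward where the slope in the noise factor is small, not as the Profiler's algorithm.

```python
# Hypothetical fitted model saved as a formula: control factor x, noise factor z
# (both coded -1..1). Coefficients are made up for illustration.
def predicted_yield(x, z):
    return 80 + 5 * x - 3 * x ** 2 + 4 * z + 6 * x * z

def d_dz(f, x, z, h=1e-4):
    """Central finite-difference derivative of f with respect to the noise factor."""
    return (f(x, z + h) - f(x, z - h)) / (2 * h)

def robust_setting(f, grid, weight=1.0):
    """Pick the control setting that balances a high prediction at nominal noise (z = 0)
    against sensitivity |df/dz|. A simplified penalty, not JMP's desirability method."""
    def score(x):
        return f(x, 0.0) - weight * abs(d_dz(f, x, 0.0))
    return max(grid, key=score)

if __name__ == "__main__":
    grid = [round(-1 + 0.01 * k, 2) for k in range(201)]
    best_plain = max(grid, key=lambda x: predicted_yield(x, 0.0))
    best_robust = robust_setting(predicted_yield, grid)
    for label, x in (("max yield only", best_plain), ("robust", best_robust)):
        print(f"{label:>14}: x={x:+.2f} yield={predicted_yield(x, 0.0):.2f} "
              f"slope in z={d_dz(predicted_yield, x, 0.0):.2f}")
```

With these hypothetical coefficients, maximizing yield alone pushes x toward the upper end, where the response is most sensitive to z; the penalized score settles on a lower x that gives up a little predicted yield for a much smaller noise slope. The `weight` parameter controls that trade-off.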

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
