Calculating a Pooled Kappa Coefficient in JMP Pro 13

Hi everyone,

I am a fourth-year undergraduate student working on my honors thesis, and I have run into an issue calculating a pooled kappa statistic in JMP.

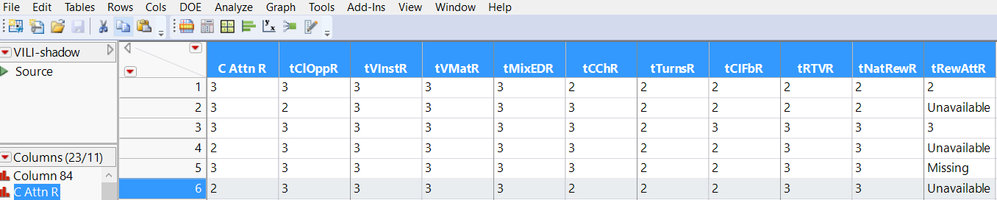

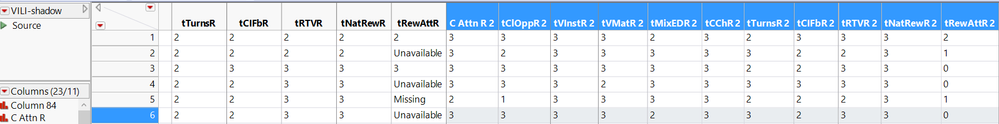

While I understand that Cohen's kappa is normally calculated between two raters whose ratings are laid out in a 2x2 agreement table, what I want to do is calculate a measure of agreement between two groups of raters. The first group, highlighted here, provided ratings on a Likert scale of 1-3 (0-3 for the last criterion) for 11 criteria ("C Attn R, tCLOppR...") listed along the column headers. This scale corresponds to the frequency of a behavior, with 0=Unavailable, 1=not consistently used, 3=consistently used throughout the session.

Group 2

Accepted Solutions

Re: Calculating a Pooled Kappa Coefficient in JMP Pro 13

There are other platforms in JMP that you can use for analysis of agreement. Please check out the example at http://www.jmp.com/support/help/13/Example_of_an_Attribute_Gauge_Chart.shtml#304555, which involves multiple raters and multiple items (i.e., parts). From the data you showed, it seems you would need to restructure your table so that the questions are rows and the ratings are columns.
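To illustrate the reshaping outside of JMP, here is a minimal Python sketch. It assumes the table starts with one row per rater and one column per criterion; the rater names and rating values are made up for illustration, and only the first two criterion names come from the post.

```python
# Hypothetical input: one entry per rater, one rating per criterion.
# Only the two criterion names below appear in the post; the rest are elided.
criteria = ["C Attn R", "tCLOppR"]
wide = {
    "rater_1": [1, 3],  # made-up ratings
    "rater_2": [2, 3],
}


def transpose(wide, criteria):
    """Return one row per criterion, with each rater's rating as a column."""
    raters = list(wide)
    rows = []
    for i, criterion in enumerate(criteria):
        row = {"criterion": criterion}
        for rater in raters:
            row[rater] = wide[rater][i]
        rows.append(row)
    return rows


for row in transpose(wide, criteria):
    print(row)
```

Each output row is one question (criterion), and each rater becomes a column, which is the layout described above.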

As for assessing overall agreement between two groups of raters, I would suggest you sum the ratings across the raters in each group and then calculate the kappa on the group ratings.
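As a rough sketch of that suggestion in Python rather than JMP: sum the ratings per item within each group, then compute an unweighted Cohen's kappa between the two vectors of sums. The ratings below are made up, the function names are hypothetical, and the sketch assumes both groups have the same number of raters so that the sums are comparable.

```python
from collections import Counter


def group_sums(ratings_by_rater):
    """Sum ratings across raters for each item (one inner list per rater)."""
    return [sum(item_ratings) for item_ratings in zip(*ratings_by_rater)]


def cohen_kappa(a, b):
    """Unweighted Cohen's kappa between two equal-length lists of categories."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(a)
    # Observed agreement: share of items where both ratings match exactly.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each side's marginal category frequencies.
    count_a, count_b = Counter(a), Counter(b)
    p_e = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Made-up ratings: two raters per group, four items each.
group_1 = [[1, 3, 2, 3], [2, 3, 2, 3]]
group_2 = [[1, 3, 3, 3], [2, 3, 2, 3]]

kappa = cohen_kappa(group_sums(group_1), group_sums(group_2))
print(round(kappa, 3))
```

Note that unweighted kappa treats each summed score as a separate nominal category, so sums of 4 and 5 count as a full disagreement. Whether that is appropriate for ordinal sums is a judgment call the thread does not address.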
