
Discussions

JMP User Community: Solve problems, and share tips and tricks with other JMP users.


Automate the neural network

Hi all,

I am working on a project that uses a neural network model to predict soil moisture at about 1,000 locations, each defined by its unique coordinates. The same neural network structure in JMP can be applied to all sites, so I am thinking about automating the work. It could be a JMP script that finds each location's coordinates (X, Y), extracts the necessary input data, and runs the neural network. At the end, the script would save the reports of the models. Do you have any pointers or advice you can share with me?

Thank you in advance.

Any suggestion or discussion is appreciated.

Accepted Solutions


Re: Automate the neural network

Hi @giaMSU ,

This sounds very doable, but your question is pretty open-ended. What roadblocks are you running into that you need the community's help with?

I would start with the script for the neural net and see where you will need to make substitutions in the script for each location. Define a function or expression that extracts the location-specific information, performs the neural net analysis, and saves the report/scripts. Then iterate through the locations, running that function/expression each time.
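A minimal sketch of that function-per-location pattern in JSL, assuming a hypothetical toy table so it can run on its own: two made-up locations ("A" and "B") with hypothetical columns ID, X1, X2 and Y. Holdback validation and the `$TEMP/` output folder are placeholders as well; swap in your own table, the Neural script you saved from the platform, and your own folder. Each call subsets one location, fits it, saves a uniquely named PDF, and closes the subset so tables do not pile up.

```jsl
Names Default To Here( 1 );

// Hypothetical toy data: 2 locations x 40 rows (replace with your own table)
dt = New Table( "Toy Sites", Add Rows( 80 ),
	New Column( "ID", Character ),
	New Column( "X1", Numeric, "Continuous" ),
	New Column( "X2", Numeric, "Continuous" ),
	New Column( "Y", Numeric, "Continuous" )
);
For( r = 1, r <= 80, r++,
	dt:ID[r] = If( r <= 40, "A", "B" );
	dt:X1[r] = Random Uniform();
	dt:X2[r] = Random Uniform();
	dt:Y[r] = 2 * dt:X1[r] - dt:X2[r] + Random Normal( 0, 0.1 );
);

// Fit one location: subset its rows, fit, save the report, clean up.
// Returns the path of the saved PDF.
fitOneLocation = Function( {src, locID, outDir},
	{rws, sub, nn, rpt, path},
	// Char() makes the comparison work for numeric ID columns too
	rws = src << Get Rows Where( Char( :ID ) == locID );
	sub = src << Subset(
		Rows( rws ),
		Selected Columns Only( 0 ),
		Output Table Name( "Model Subset " || locID )
	);
	// placeholder launch; paste your own saved Neural() script here
	nn = sub << Neural(
		Y( :Y ),
		X( :X1, :X2 ),
		Validation Method( "Holdback", 0.3333 ),
		Fit( NTanH( 3 ) )
	);
	rpt = nn << Report;
	path = outDir || "model_" || locID || ".pdf"; // unique name per location
	rpt << Save PDF( path );
	rpt << Close Window;
	Close( sub, No Save );
	path
);

// Loop over the distinct locations, one fit per location
Summarize( locs = By( dt:ID ) );
saved = {};
For( j = 1, j <= N Items( locs ), j++,
	Insert Into( saved, fitOneLocation( dt, locs[j], "$TEMP/" ) )
);
Show( saved );
```

Using Get Rows Where inside the function, rather than Select Where, leaves the row selection of the source table untouched between iterations, and returning the path makes it easy to log which file belongs to which location.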


Re: Automate the neural network

@giaMSU, what you plan to do is possible.

1. Use the Summarize function to create a list of the J = 1000+ locations.
2. Index the J locations in the list.
3. Use the Select Where and Subset functions to create a data set for the jth location.
4. Run the neural net model for the jth location.
5. Save the NN platform report in your desired format.
6. Repeat until you reach the last location.

I didn't test it, but the following should work or get you really close.

```jsl
// assumes the data table is "dt" and the location is in a column called "Location"
Summarize( locs = By( dt:Location ) ); // list of the distinct locations

For( j = 1, j <= N Items( locs ), j++,
	// subset the rows of the jth location
	file = dt << Select Where( locs[j] == :Location ) << Subset(
		file,
		Output Table Name( "Model Subset File" )
	);
	// fit the neural net on that subset
	NN = file << Neural(
		Y( :Name( "Y" ) ),
		X( :Name( "X1" ), :Name( "X2" ) ),
		Validation( :Validation ),
		Informative Missing( 0 ),
		Set Random Seed( 1121 ),
		Fit( NTanH( 3 ) )
	);
	// save the report
	NNReport = NN << Report;
	NNReport << Save PDF( "Path" );
);
```


Re: Automate the neural network

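I believe the issue is that you need to add the Char function around :ID in the Select Where function. Summarize's By() returns the location values as strings, so a numeric :ID column has to be converted before the comparison can match:

```jsl
Select Where( locs[j] == Char( :ID ) )
```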
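A small, self-contained illustration of why the Char() is needed, using a hypothetical toy table with a numeric ID column (the values 101 and 102 are made up):

```jsl
Names Default To Here( 1 );

// Hypothetical toy table with a numeric ID column, like :ID in this thread
toy = New Table( "Toy IDs",
	New Column( "ID", Numeric, "Continuous", Set Values( [101, 101, 102] ) )
);

// By() returns the distinct group values as strings, not numbers
Summarize( locs = By( toy:ID ) );
Show( locs );

// Convert the numeric column value with Char() so it can match the string
matched = toy << Get Rows Where( Char( :ID ) == locs[1] );
Show( N Rows( matched ) );

Close( toy, No Save );
```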


Re: Automate the neural network

The script runs well now.

There is a small issue in the script. Since the file is always named "Model Subset File," the script overwrites the PDF at each step, and when it's done there is only one file in the folder. Could you help me add the location ID to the file name so the script saves each report under a unique name?

Thanks


Re: Automate the neural network

It sounds like the report is getting overwritten. Try concatenating the location ID into the file name. In the loop above, the ID for the current step is locs[j]:

```jsl
NNReport << Save PDF( "C:/test/model_" || locs[j] || ".pdf" );
```


Re: Automate the neural network

Hi @giaMSU,

Thanks for posting the smaller data table that you have, and the script you were working with. I modified it a bit, and I got it to work. Give the following a try. I hand't realized that you were creating subsets of data tables and using only those for fitting.

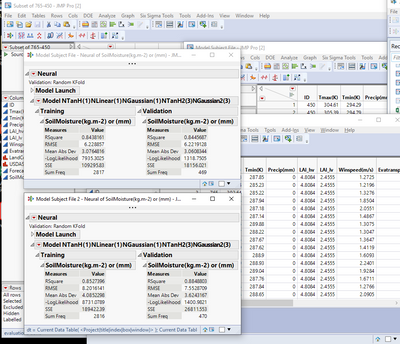

Here's the output I got from running a modified version of your script:

Here's the modified script:

```jsl
Names Default To Here( 1 );
dt = Data Table( "Subset of 765-450" );
Summarize( locs = By( dt:ID ) );

// one subset table per location
For( j = 1, j <= N Items( locs ), j++,
	file = dt << Select Where( locs[j] == Char( :ID ) ) << Subset( file, Output Table Name( "Model Subject File" ) )
);

// collect the names of the subset tables
dt_list = {};
one_name = {};
For( m = 1, m <= N Table(), m++,
	dt_temp = Data Table( m );
	one_name = dt_temp << GetName;
	If( Starts With( one_name, "Model" ),
		Insert Into( dt_list, one_name )
	);
);

// fit the neural net on each subset table
For( i = 1, i <= N Items( dt_list ), i++,
	NN = Data Table( dt_list[i] ) << Neural(
		Y( :Name( "SoilMoisture(kg.m-2) or (mm)" ) ),
		X(
			:Name( "Tmax(K)" ),
			:Name( "Tmin(K)" ),
			:Name( "Precip(mm)" ),
			:LAI_hv,
			:LAI_lv,
			:Name( "Winspeed(m/s)" ),
			:Name( "Evatransporation(kgm-2s-1)" ),
			:LandCover,
			:USDASoilClass,
			:Forecast_Albedo
		),
		Informative Missing( 0 ),
		Transform Covariates( 1 ),
		Validation Method( "KFold", 7 ),
		Set Random Seed( 1234 ),
		Fit(
			NTanH( 1 ),
			NLinear( 1 ),
			NGaussian( 1 ),
			NTanH2( 3 ),
			NLinear2( 0 ),
			NGaussian2( 3 ),
			Transform Covariates( 1 ),
			Robust Fit( 1 ),
			Penalty Method( "Absolute" ),
			Number of Tours( 10 )
		)
	);
	NNReport = NN << Report;
	NNReport << Save PDF( "E:/Ready_for_ANN/result" ) // I actually didn't do this part.
);
```

If you have a lot of different location IDs, you might want to save each result with the unique ID to tell them apart. Maybe modify the "save" line to something like this, which takes the ID from the first row of the current subset table:

```jsl
NNReport << Save PDF( "E:/Ready_for_ANN/result_LocID_" || Char( Data Table( dt_list[i] ):ID[1] ) || ".pdf" );
```

Hope this helps!

DS


Re: Automate the neural network

Earlier in this thread, I posted a section of script I use all the time when running thousands of training sets in order to just save the R^2, RASE, etc.

As @G_M mentioned, you'll want to go into the tree structure of the report to find out where the values are for you to save. At the very bottom of the script, just before the end of the FOR loop, that is where it pulls the values from the tree structure of the report.

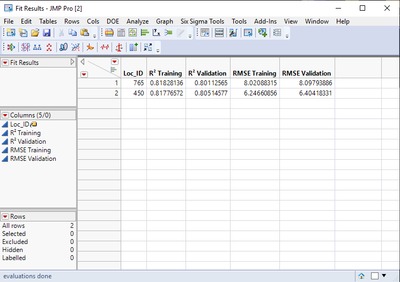

I modified my previous script that automates the NN fitting and included the portion from my previous post that will extract out the fit values (R2 and RMSE). If you want other values, you can add them in as well. The script creates a new data table that has the results of the NN fit for each location ID. I also modified the portion where each data table is generated based on location ID so that the data table has it's own unique identifier based on the location ID, although I just close each data table in the end anyway; in principle you can keep them all open by commenting out the close command at the end of the FOR loop, but then you'll have a massive amount of data tables open.

Before implementing the code, I highly suggest that you read through the comments and understand what the script is doing. Once you become familiar with scripting, it will change your JMP life forever!

On a side note, do you really need to always have the same random seed? I recommend leaving it blank for the fit so that you really get randomness with the fit, which can make it more robust. Also, you have enough observations in your data that you can create a validation column with a training, validation, (and test) set so you can actually find out which of the models is actually the best at prediction. You can stratify this validation column based on your :Y column ("SoilMoisture(kg.m-2) or (mm)"). You can automate this as well and have it in the portion where it creates each of the data tables after summarizing on :ID.

Running the below script on your data table, I get the following output:

```jsl
Names Default To Here( 1 ); //keeps variables local, not global
dt = Data Table( "Subset of 765-450" ); //defines your starting data table
Summarize( locs = By( dt:ID ) ); //creates a list of the different location IDs

//This FOR loop creates all the different data tables based on location ID and also names them based on location ID
For( j = 1, j <= N Items( locs ), j++,
	file = dt << Select Where( locs[j] == Char( :ID ) ) << Subset( file, Output Table Name( "Model Subject File Location ID " || locs[j] ) )
);

//this portion of the code generates the list of data tables that will be used to train the models for each location.
dt_list = {};
one_name = {};
For( m = 1, m <= N Table(), m++,
	dt_temp = Data Table( m );
	one_name = dt_temp << GetName;
	If( Starts With( one_name, "Model" ),
		Insert Into( dt_list, one_name )
	);
);

//this portion creates the fit results data table that will record the statistics for each fit, to be reviewed later. It also adds the Label column property to the Loc_ID column.
dt_fit_results = New Table( "Fit Results", Add Rows( N Items( dt_list ) ), New Column( "Loc_ID", "Continuous" ) );
dt_fit_results << New Column( "R² Training" );
dt_fit_results << New Column( "R² Validation" );
dt_fit_results << New Column( "RMSE Training" );
dt_fit_results << New Column( "RMSE Validation" );
dt_fit_results:Loc_ID << Label;

//this FOR loop records each location ID in the fit results table, taken from the first row of that location's table
For( l = 1, l <= N Items( dt_list ), l++,
	dt_fit_results:Loc_ID[l] = Data Table( dt_list[l] ):ID[1]
);

//this FOR loop is the fitting routine for each location data table.
For( i = 1, i <= N Items( dt_list ), i++,
	NN = Data Table( dt_list[i] ) << Neural(
		Y( :Name( "SoilMoisture(kg.m-2) or (mm)" ) ),
		X(
			:Name( "Tmax(K)" ),
			:Name( "Tmin(K)" ),
			:Name( "Precip(mm)" ),
			:LAI_hv,
			:LAI_lv,
			:Name( "Winspeed(m/s)" ),
			:Name( "Evatransporation(kgm-2s-1)" ),
			:LandCover,
			:USDASoilClass,
			:Forecast_Albedo
		),
		Informative Missing( 0 ),
		Transform Covariates( 1 ),
		Validation Method( "KFold", 7 ),
		Set Random Seed( ), //I took away the random seed, so your fits will be different from mine.
		Fit(
			NTanH( 1 ),
			NLinear( 1 ),
			NGaussian( 1 ),
			NTanH2( 3 ),
			NLinear2( 0 ),
			NGaussian2( 3 ),
			Transform Covariates( 1 ),
			Robust Fit( 1 ),
			Penalty Method( "Absolute" ),
			Number of Tours( 1 ) //I only did one tour just for proof of concept, so you'll want to change this
		)
	);
	NNReport = NN << Report;

	//These next lines pull the actual values from the fit report and save them to the fit results data table.
	Train = NNReport["Training"][Number Col Box( 1 )] << Get;
	Valid = NNReport["Validation"][Number Col Box( 1 )] << Get;
	dt_fit_results:Name( "R² Training" )[i] = Train[1];
	dt_fit_results:Name( "RMSE Training" )[i] = Train[2];
	dt_fit_results:Name( "R² Validation" )[i] = Valid[1];
	dt_fit_results:Name( "RMSE Validation" )[i] = Valid[2];
	Close( dt_list[i], No Save );
);
```

Good luck!

DS


Re: Automate the neural network

Thank you for your help. I wrote a script using a dataset with 2 locations.

First, I used the Neural script saved from an NN run in JMP 14 Pro. I used two hidden layers with six nodes and three nodes, respectively.

```jsl
Neural(
	Y( :Name( "SoilMoisture(kg.m-2) or (mm)" ) ),
	X(
		:Name( "Tmax(K)" ),
		:Name( "Tmin(K)" ),
		:Name( "Precip(mm)" ),
		:LAI_hv,
		:LAI_lv,
		:Name( "Winspeed(m/s)" ),
		:Name( "Evatransporation(kgm-2s-1)" ),
		:LandCover,
		:USDASoilClass,
		:Forecast_Albedo
	),
	Informative Missing( 0 ),
	Transform Covariates( 1 ),
	Validation Method( "KFold", 7 ),
	Set Random Seed( 1234 ),
	Fit(
		NTanH( 1 ),
		NLinear( 1 ),
		NGaussian( 1 ),
		NTanH2( 3 ),
		NGaussian2( 3 ),
		Transform Covariates( 1 ),
		Robust Fit( 1 ),
		Penalty Method( "Absolute" ),
		Number of Tours( 10 )
	)
);
```

This part works well. Then I added a For loop as G_M suggested.

```jsl
dt = Open( "E:/Ready_for_ANN/Subset of 765-450.jmp" );
Summarize(locs=BY(dt:ID))
For (
	j=1, j<=NItems(locs),j++,
	file = dt << Select Where(locs[j])== :ID << Subset(
		file,
		Output Table Name ("Model Subject File"))
);
NN = file << Neural(
	Y( :Name( "SoilMoisture(kg.m-2) or (mm)" ) ),
	X(
		:Name( "Tmax(K)" ),
		:Name( "Tmin(K)" ),
		:Name( "Precip(mm)"),
		:LAI_hv,
		:LAI_lv,
		:Name( "Winspeed(m/s)" ),
		:Name( "Evatransporation(kgm-2s-1)" ),
		:LandCover,
		:USDASoilClass,
		:Forecast_Albedo
	),
	Informative Missing( 0 ),
	Transform Covariates( 1 ),
	Validation Method( "KFold", 7 ),
	Set Random Seed( 1234 ),
	Fit(
		NTanH( 1 ),
		NLinear( 1 ),
		NGaussian( 1 ),
		NTanH2( 3 ),
		NLinear2( 0 ),
		NGaussian2( 3 ),
		Transform Covariates( 1 ),
		Robust Fit( 1 ),
		Penalty Method( "Absolute" ),
		Number of Tours( 10 )
	)
);
NNReport = NN << Report;
NNReport << Save PDF("E:/Ready_for_ANN/result")
```

Then it doesn't work.

As I understand the script, the For loop creates subsets for all the IDs, from j = 1 to the last one, and only then is a subset used by the Neural script. The Neural part is not included in the For loop, so it does not do what I want.

I want each step to create a subset with one location's data and run the Neural platform on that subset. Please correct me if I am wrong.

I have attached my subset of the data (2 locations).

Please help me solve the problem.

Thank you in advance.


Re: Automate the neural network

Hi @giaMSU,

I like the idea of automating the NN fit. I've done something very similar with other modeling platforms (random forest and boosted trees), and I think I'll try my hand at doing the same for the NN platform, to test various fit parameters and find an optimal fit. I'll include my code below, with descriptions of what the major lines do.

In general, though, you're not feeding the new information to the NN fit each time you select a new location: the fit needs to be inside the FOR loop. I keep mine as a script inside my data table because I need to run a very large tuning table to get the best fit. Doing it this way saves a lot of memory on my computer, and you can run upwards of 24k rows in a data table, letting it run overnight.

By the way, the original concept of this, and the idea of putting the fit inside the loop, came to me from a post by gzmorgan0, but I can't remember the original post. I use it ALL the time.

```jsl
Names Default To Here( 1 ); //keeps all variables local
dt = Data Table( "My data table" ); //the data table with your :Y and :X columns
dt_pars = Data Table( "tuning table" ); //for me, the tuning table of modified tuning parameters; for you, it might be the "Model Subject File" data table
dt_results = dt_pars << Subset( All rows, Selected columns only( 0 ) ); //duplicates the table above so I can add columns for recording the fit statistics

//create new columns for recording the fit statistics
dt_results << New Column( "Overall In Bag RMSE" );
dt_results << New Column( "Overall Out of Bag RMSE" );
dt_results << New Column( "R² Training" );
dt_results << New Column( "R² Validation" );
dt_results << New Column( "RMSE Training" );
dt_results << New Column( "RMSE Validation" );

//run the fit once for each row of tuning parameters
For( i = 1, i <= N Row( dt_pars ), i++,
	//pull the NEW fit parameters for this iteration; in your case, each new row would be a new location
	NumTrees = dt_pars:Number Trees[i];
	NumTerms = dt_pars:Number Terms[i];
	PortBoot = dt_pars:Portion Bootstrap[i];
	MinSplpTr = dt_pars:Minimum Splits per Tree[i];
	MaxSplpTr = dt_pars:Maximum Splits per Tree[i];
	MinSplSz = dt_pars:Minimum Size Split[i];

	//build the launch as a string, evaluated below; it represents what you would normally do through the JMP GUI
	str = Eval Insert(
		"report = (dt << Bootstrap Forest( //this is the fit method
			Y( :Y_Response ), //whatever your response variable is
			X(
				:X_1, //all of your x variables (which you may want to change each time)
				:X_2,
				:X_3
			),
			Minimum Size Split( ^MinSplSz^ ), //Eval Insert replaces the caret-delimited name with its current value; you could do the same with the :X's
			Validation Portion( 0.2 ), //this too can be a variable that changes each time
			Set Random Seed( ),
			Method( \!"Bootstrap Forest\!" ), //this again defines the method
			Minimum Splits per Tree( ^MinSplpTr^ ),
			Maximum Splits per Tree( ^MaxSplpTr^ ),
			Portion Bootstrap( ^PortBoot^ ),
			Number Terms( ^NumTerms^ ),
			Number Trees( ^NumTrees^ ),
			Early Stopping( 0 ),
			Go,
			invisible
		)) << Report;"
	); //closes the Eval Insert() command
	Eval( Parse( str ) ); //actually runs the fit for this iteration

	//pull the relevant fit statistics from the report and save them to dt_results, so thousands of fits can be analyzed later without chewing up too much RAM
	Overall = report["Overall Statistics"][Number Col Box( 1 )] << Get;
	R2 = report["Overall Statistics"][Number Col Box( 2 )] << Get;
	RMSE = report["Overall Statistics"][Number Col Box( 3 )] << Get;
	report << Close Window;
	dt_results:Name( "Overall In Bag RMSE" )[i] = Overall[1];
	dt_results:Name( "Overall Out of Bag RMSE" )[i] = Overall[2];
	dt_results:Name( "R² Training" )[i] = R2[1];
	dt_results:Name( "R² Validation" )[i] = R2[2];
	dt_results:Name( "RMSE Training" )[i] = RMSE[1];
	dt_results:Name( "RMSE Validation" )[i] = RMSE[2];
);
```

I imagine you should be able to take the outline above and modify it for your specific needs.

On a side note, besides validating the fit to guard against overfitting, don't forget to test its predictive ability on a completely unused test data set that you've held out from the fit data. Also, if you can afford it, I would recommend increasing the number of tours to 25 or more. The NN platform sometimes needs many more attempts, as in DOE, to find an optimal fit for a given set of parameters.

Good luck! I hope to see the final solution. I might take a crack at it as well.

Thanks for starting this thread.

DS


Re: Automate the neural network

Hi DS,

Thank you for your contribution.

I still have a small issue. I saved a thousand PDF files of the NN reports, and now I would have to read all of them manually to get the R² of each NN.

I want to run a script that extracts these values automatically. Can you help me?

Sincerely

GN


Re: Automate the neural network

@G_M I like your approach.

For @giaMSU, all you have to do is run one case of your neural net and save the script to the script window (or clipboard): top-most red triangle menu, last option on the list, "Save Script".

Take the script that G_M wrote and replace this part:

```jsl
Neural(
	Y( :Name( "Y" ) ),
	X( :Name( "X1" ), :Name( "X2" ) ),
	Validation( :Validation ),
	Informative Missing( 0 ),
	Set Random Seed( 1121 ),
	Fit( NTanH( 3 ) )
);
```

with your Neural script, and you might be good to go, or at least 90% of the way there.


Re: Automate the neural network

I’ve tried your script. It runs, but not exactly as I wish. It seems like the script extracts data from all locations to create a subset and then runs the Neural platform. I tested it with a subset of 2 locations: the Neural platform ran twice, but both reports are the same, so I think the For loop has some issue. Could you please help me? I attached my script and data to this discussion in case you need them.

Thanks


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
