In a previous post, I used the Generalized Regression (Genreg) platform in JMP Pro to analyze data that I collected while playing a board game with my daughter. Since there was only a single effect in my model (Player), I wasn't able to show off the variable selection methods that are available in Genreg.

Having access to variable selection methods like the lasso or forward selection is especially important when your response is non-numeric (like red/blue/green or small/medium/large) because ordinal and nominal logistic regression models can be easy to overfit. Here, we will look at an example where we consider many more effects to model the response.

The Data

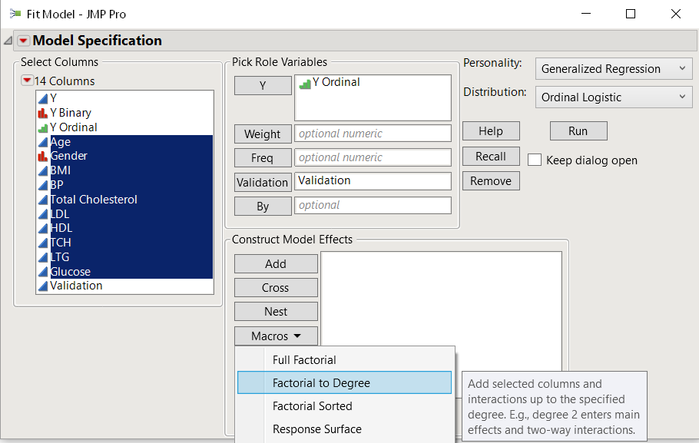

We will use the file Diabetes.jmp, which is available in the JMP sample data folder. The data set measures diabetes progression over the course of a year, with attributes like age, gender, and cholesterol levels for each of the 442 patients in the sample. We want to use those attributes to model "Y Ordinal," which labels the severity of each patient's diabetes as low, medium, or high. As the name of the response column suggests, we'll model it with an ordinal logistic regression model, which is new to Genreg in JMP Pro 14. It is reasonable to suspect interactions between our explanatory variables, so we'll include main effects and two-factor interactions in our model. The "Factorial to Degree" macro makes this model easy to specify in the Fit Model dialog.

Launching an ordinal logistic model for the Diabetes data

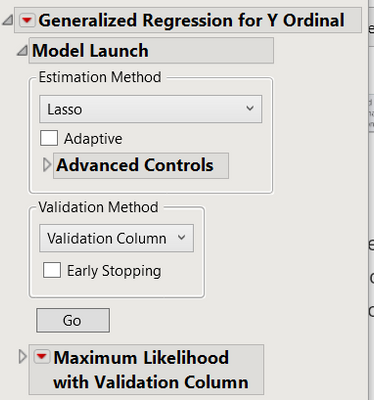
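To see where the candidate count in the lasso output comes from, here is a minimal sketch of what a degree-2 "Factorial to Degree" specification expands to. The column names are an assumption (the ten predictors we believe Diabetes.jmp contains), and the helper names are hypothetical. The parameter count assumes a cumulative-logit model with one slope per term plus k - 1 cutpoints; that 55 + 2 = 57 matches the 57 candidates reported below is our reading, not something taken from Genreg's documentation.

```python
from itertools import combinations

# Assumed predictor names for Diabetes.jmp (hypothetical; check the data table).
FACTORS = ["Age", "Gender", "BMI", "BP", "Total Cholesterol",
           "LDL", "HDL", "TCH", "LTG", "Glucose"]

def factorial_to_degree_2(factors):
    """Main effects plus every two-factor interaction (no squared terms)."""
    main_effects = [(f,) for f in factors]
    interactions = list(combinations(factors, 2))
    return main_effects + interactions

def term_label(term):
    # Interactions are written A*B, as in the Fit Model dialog.
    return "*".join(term)

terms = factorial_to_degree_2(FACTORS)
n_levels = 3  # low, medium, high

# Assumed ordinal parameterization: one slope per term plus k - 1 cutpoints.
ordinal_params = len(terms) + (n_levels - 1)

print(len(terms))             # 55 (10 main effects + 45 interactions)
print(ordinal_params)         # 57
print(term_label(terms[10]))  # Age*Gender
```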

After specifying our model and clicking Run, we land in the Generalized Regression platform. Genreg automatically fits the full model by maximum likelihood (no variable selection) on the training data. But I'm concerned about overfitting, so I don't want the maximum likelihood estimate (MLE). I'd rather use the lasso, since it should give us a more parsimonious model that fits new data better.

Doing a Lasso fit in Genreg

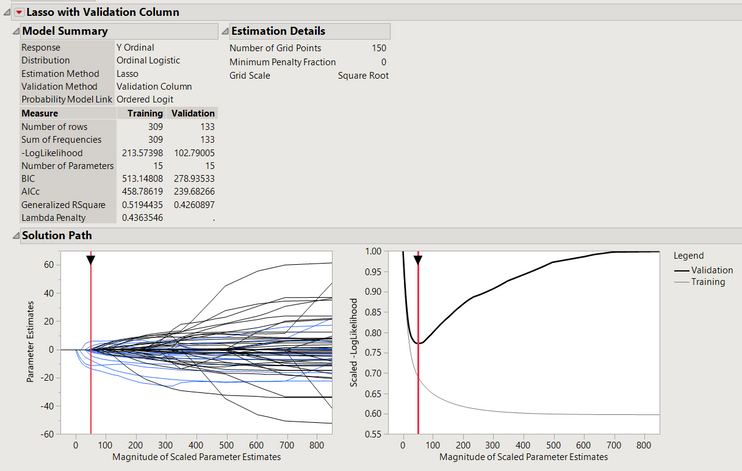

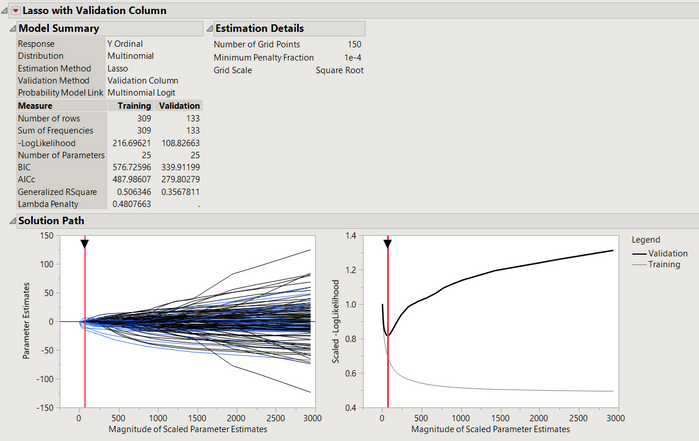
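Conceptually, the lasso fit trades off likelihood against the size of the coefficients. The sketch below writes that objective out for a cumulative-logit (ordinal) model. It assumes the parameterization P(Y <= j | x) = logistic(cutpoint_j - x·beta), averages the negative log-likelihood over rows, and leaves the cutpoints unpenalized; Genreg's actual sign convention, penalty scaling, and solver may differ, and all function names here are hypothetical.

```python
import math

def logistic(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def ordinal_probs(x, cutpoints, beta):
    """Level probabilities under an assumed cumulative-logit model,
    P(Y <= j | x) = logistic(cutpoints[j] - x . beta).
    cutpoints must be strictly increasing (k - 1 of them for k levels)."""
    eta = sum(xj * bj for xj, bj in zip(x, beta))
    cdf = [logistic(a - eta) for a in cutpoints] + [1.0]
    probs, prev = [], 0.0
    for c in cdf:
        probs.append(c - prev)  # P(Y = j) = P(Y <= j) - P(Y <= j - 1)
        prev = c
    return probs

def lasso_ordinal_objective(X, y, cutpoints, beta, lam):
    """Average negative log-likelihood plus lam * sum(|beta|).
    y holds 0-based level indices (0 = low, 1 = medium, 2 = high).
    Cutpoints (intercepts) are not penalized in this sketch."""
    nll = 0.0
    for x, level in zip(X, y):
        nll -= math.log(ordinal_probs(x, cutpoints, beta)[level])
    return nll / len(X) + lam * sum(abs(b) for b in beta)

# Tiny made-up example (not the Diabetes data).
X = [[0.5, -1.0], [1.2, 0.3], [-0.7, 0.8]]
y = [0, 2, 1]
cut = [-0.5, 0.5]
beta = [1.0, 0.0]
base = lasso_ordinal_objective(X, y, cut, beta, lam=0.0)
penalized = lasso_ordinal_objective(X, y, cut, beta, lam=0.1)
```

Increasing `lam` makes nonzero slopes more expensive, which is what pushes the lasso toward a smaller model than the MLE (the `lam = 0` case).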

Lasso output for the Diabetes data


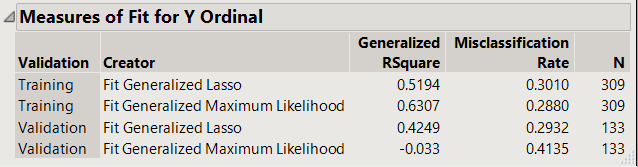

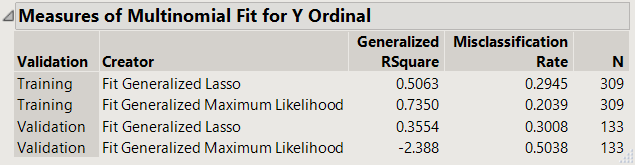

As we can see in the output, the lasso yields a model that uses only 15 parameters out of the original 57 candidates, so it is much easier to interpret and explain than the MLE. How much better does the lasso model perform on new observations than the full 57-term model? That's easiest to see using the Model Comparison platform. The full model fits the training data very well and the validation data terribly (its validation R^2 is negative, which is always a bad sign). This is a classic case of overfitting. Meanwhile, the lasso model has a much better R^2 and misclassification rate on the validation data, so it is the clear favorite here.

Lasso vs MLE using the Model Comparison platform
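How can an R^2 be negative? A minimal sketch, assuming a Nagelkerke-style generalized R^2 computed from the log-likelihood of the fitted model and of an intercept-only (null) model: when a model assigns lower likelihood to the validation rows than the null model does, the numerator turns negative. The exact definitions Genreg and Model Comparison use may differ in detail, and the probabilities below are made up for illustration, not taken from the Diabetes results.

```python
import math

def loglik(prob_rows, y):
    """Log-likelihood of observed levels y given per-row level probabilities."""
    return sum(math.log(probs[level]) for probs, level in zip(prob_rows, y))

def generalized_r2(loglik_model, loglik_null, n):
    """Nagelkerke-style generalized R^2 (assumed form), computed in log space.
    Negative whenever the model's likelihood is below the null model's."""
    num = 1.0 - math.exp((2.0 / n) * (loglik_null - loglik_model))
    den = 1.0 - math.exp((2.0 / n) * loglik_null)
    return num / den

def misclassification_rate(prob_rows, y):
    """Fraction of rows whose most probable level is not the observed level."""
    wrong = 0
    for probs, level in zip(prob_rows, y):
        predicted = max(range(len(probs)), key=probs.__getitem__)
        wrong += predicted != level
    return wrong / len(y)

# Made-up validation rows: observed levels and three sets of predicted probabilities.
y_val = [0, 1, 2, 2]
null_rows = [[1/3, 1/3, 1/3]] * 4
sensible_rows = [[0.7, 0.2, 0.1], [0.2, 0.6, 0.2], [0.1, 0.2, 0.7], [0.2, 0.3, 0.5]]
overfit_rows = [[0.05, 0.05, 0.9], [0.9, 0.05, 0.05], [0.9, 0.05, 0.05], [0.05, 0.9, 0.05]]

ll_null = loglik(null_rows, y_val)
r2_sensible = generalized_r2(loglik(sensible_rows, y_val), ll_null, len(y_val))
r2_overfit = generalized_r2(loglik(overfit_rows, y_val), ll_null, len(y_val))
```

The confidently wrong "overfit" predictions get a large negative R^2 and a misclassification rate of 1.0, while the more modest predictions score well on both measures.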


The Multinomial Case

As in my previous post, our response has a natural ordering. But let's ignore that for now so that we can do variable selection on a multinomial problem. Variable selection is especially important in this case because the multinomial model is so easy to overparameterize or overfit.

Why is this model so easy to overfit? If we have p predictors and our response takes k levels, then the model that we're fitting has p*(k-1) parameters. In the case of our diabetes data, we have 56 predictors and three levels of our response, so that gives us 56*(3-1) = 112 parameters to consider. This can get out of control quickly! For more information about this model, check the JMP Help.
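The parameter count follows from how a baseline-category (multinomial) logit model is built: every non-reference level gets its own full coefficient vector. The sketch below assumes the last level is the reference, with its linear predictor fixed at 0, and that `x` carries a leading 1.0 for the intercept; these are assumptions for illustration, and the helper names are hypothetical.

```python
import math

def multinomial_probs(x, coef_rows):
    """Baseline-category logit probabilities.
    x includes a leading 1.0 for the intercept.
    coef_rows holds k - 1 coefficient vectors (one per non-reference level);
    the last level is assumed to be the reference, with linear predictor 0."""
    etas = [sum(xi * bi for xi, bi in zip(x, row)) for row in coef_rows]
    etas.append(0.0)               # reference level
    m = max(etas)                  # subtract the max for numerical stability
    exps = [math.exp(e - m) for e in etas]
    total = sum(exps)
    return [e / total for e in exps]

def n_multinomial_params(p, k):
    # p coefficients for each of the k - 1 non-reference levels.
    return p * (k - 1)

print(n_multinomial_params(56, 3))  # 112, the count used in the post
```

By contrast, the ordinal model shares one slope per term across all levels, which is why it needs roughly half as many parameters for the same set of effects.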

We launch Genreg exactly as before; we just switch the distribution to Multinomial.

Launching the Multinomial in Genreg

Again we'll use the lasso for estimation and variable selection. This time, the lasso yields a model with only 25 parameters, which means it has dropped almost 90 of the 112 candidates from our model. The lasso model's generalized R^2 on the validation data (about 0.35) may not look great, but the full model's generalized R^2 on the validation set is worse than -2!

Lasso summary

The lasso beats the MLE by an even wider margin in the multinomial case
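Why does the lasso drop parameters outright rather than just shrinking them? One standard way to see it is the soft-thresholding operator, the proximal step for an L1 penalty used by proximal-gradient and coordinate-descent lasso solvers. Any coefficient whose magnitude is at most the threshold lands exactly on zero. This is an illustration of the mechanism only, not a description of Genreg's solver, and the coefficients are made up.

```python
def soft_threshold(b, lam):
    """Proximal operator of lam * |b|: shrink b toward zero by lam,
    returning exactly 0.0 when |b| <= lam."""
    if b > lam:
        return b - lam
    if b < -lam:
        return b + lam
    return 0.0

def count_nonzero(coefs):
    return sum(1 for c in coefs if c != 0.0)

# Made-up unpenalized coefficients and a hypothetical penalty level.
raw = [2.5, -0.3, 0.05, -1.8, 0.4, 0.0, -0.02, 0.9]
lam = 0.5
shrunk = [soft_threshold(b, lam) for b in raw]

print(count_nonzero(raw))     # 7
print(count_nonzero(shrunk))  # 3
```

Small coefficients are zeroed and the survivors are shrunk by `lam`, which is how a lasso fit can end up with far fewer nonzero parameters than the full model.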


Try It Yourself!

I'm excited about support for ordinal and multinomial responses in Genreg in JMP Pro 14. This type of data is very common in practice, but as we've seen with the Diabetes data, we have to be careful when building models. The interactive variable selection tools in Genreg can help you build models that fit better and are easier to interpret. Let me know what you think.
