Isolation Forest has become arguably one of the most popular anomaly detectors in recent years: it is computationally efficient, scales well, and performs well across a range of benchmarks.

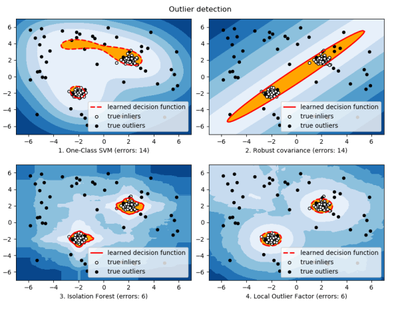

Isolation forest compared to others (src)

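The algorithm isolates observations by randomly selecting a feature and then randomly selecting a split value between the minimum and maximum values of that feature. Because anomalies are few and different, they tend to be isolated after fewer splits than normal points, so a short average path length across many trees signals an anomaly. The implementation is therefore essentially the same as for partition trees and bootstrap trees, with the difference that there is no real target to explain: the target would be uniform (that is, you would be trying to split variables against a constant Y value).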
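To make the random feature / random split idea concrete, here is a minimal pure-Python sketch. It is an illustration only, not JMP's or scikit-learn's implementation. The function names (`fit_forest`, `anomaly_score`, and the helpers) and the defaults of 100 trees and a subsample of 64 rows are assumptions chosen for the example. The score normalization follows the original Isolation Forest formulation, where a score close to 1 suggests an anomaly.

```python
import math
import random


def c_factor(n):
    """Expected path length of an unsuccessful binary-search-tree lookup over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + 0.5772156649  # approximates the harmonic number H(n-1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def build_tree(rows, depth, max_depth, rng):
    """Partition rows recursively using a random feature and a random split value."""
    if depth >= max_depth or len(rows) <= 1:
        return {"size": len(rows)}
    feature = rng.randrange(len(rows[0]))
    values = [row[feature] for row in rows]
    lo, hi = min(values), max(values)
    if lo == hi:
        # Simplification for this sketch: stop if the chosen feature is constant.
        return {"size": len(rows)}
    split = rng.uniform(lo, hi)
    return {
        "feature": feature,
        "split": split,
        "left": build_tree([r for r in rows if r[feature] < split], depth + 1, max_depth, rng),
        "right": build_tree([r for r in rows if r[feature] >= split], depth + 1, max_depth, rng),
    }


def path_length(tree, point):
    """Number of splits needed to reach a leaf, plus an adjustment for unsplit leaves."""
    node, depth = tree, 0
    while "size" not in node:
        branch = "left" if point[node["feature"]] < node["split"] else "right"
        node = node[branch]
        depth += 1
    return depth + c_factor(node["size"])


def fit_forest(rows, n_trees=100, sample_size=64, seed=0):
    """Build n_trees isolation trees, each on a random subsample of the rows."""
    if len(rows) < 2:
        raise ValueError("need at least two rows")
    rng = random.Random(seed)
    psi = min(sample_size, len(rows))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(rows, psi), 0, max_depth, rng) for _ in range(n_trees)]
    return {"trees": trees, "psi": psi}


def anomaly_score(forest, point):
    """Score in (0, 1]; shorter average paths give scores closer to 1."""
    mean_path = sum(path_length(t, point) for t in forest["trees"]) / len(forest["trees"])
    return 2.0 ** (-mean_path / c_factor(forest["psi"]))
```

In use, one would fit the forest on the data rows (as a list of numeric tuples) and then compare `anomaly_score` across points: a point far from the bulk of the data should score noticeably higher than a point near the center. Note that no Y column appears anywhere, which is the key difference from an ordinary partition tree.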

Yet if you try this in JMP with a constant Y value, it doesn't work.

If Isolation Forest were included in JMP, similar to Predictor Screening, users would have access to a powerful tool for detecting anomalies in their data. This could help them identify unusual patterns or behaviors that may warrant further investigation.

This paper studies how IForest works and improves upon its few limitations (i.e., extended isolation forest):

https://hal.science/hal-03537102/document

Scikit-learn documentation

https://scikit-learn.org/stable/modules/outlier_detection.html