JMP Scripters Club Discussions

Graphical User Interface to Automate the Process of Model Tuning

As part of Innovation Management at Evonik, we are interested in developing predictive models for some of our quality performance parameters. JMP Pro’s predictive modeling platforms provide a direct path to just that for practical, real-world problems. However, given the many platforms available, from Neural Nets to Support Vector Machines to the latest addition, the XGBoost platform, it can be daunting not only to find which model predicts best, but also to tune each model. To address this and to simplify the process of tuning models for each platform, I developed a graphical user interface that helps to automate the process of model tuning. The code is written entirely in JSL and uses several modeling platforms as well as the DOE platform within JMP. The code spans everything from simple calls and references to more complicated tasks like clicking an “OK” button, extracting data from reports, and passing data from one window to another. The code would not have been possible without the generous help from the JMP Community pages.

Re: Graphical User Interface to Automate the Process of Model Tuning

Hi @Marco1 ,

**EDIT**: I think I understand what you were asking before -- can the code be changed to allow more than one output to be modeled at a time? I'm sure it can, but I have not written it that way. I thought about it, but I really wrote this for my organization's needs, and we only model one output at a time. It would take some revamping and redesign of the code to get that to work.

Unfortunately, the script I wrote does not have the ability to perform tuning across all the different platforms in one go, like the model screening platform does. Keep in mind that the model screening platform in JMP only fits the default values of the models to the data; it does not tune each model. It's a nice feature to get an idea of how some default models compare to others, but I have found that a well-tuned model can outperform the default model that the model screening platform decides is the best. I end up not using the screening platform all that much because of this.

The primary goal of writing this code was to automate and simplify the process of tuning the many different modeling platforms in JMP, especially for those that might not have as much experience in tuning -- hence the tips for tuning with each platform. I still go through each platform in the GenAT_5.0 code and tune each model, then compare them on a holdout set at the very end to see which ones work best for my data. If one wanted to do an automated tuning over all platforms, it probably would be best to write it in the source code of JMP, which is not something I can do.

I think that Jarmo got it right when he noticed the language. Sorry, I have an English operating system and have English set as the language for JMP. I could imagine that trying to write this code for multiple languages would be very challenging. Hopefully, by changing the language setting in JMP, you can get up and running.

Please reach out if you have more questions, and happy modeling!

Thanks!

DS

Re: Graphical User Interface to Automate the Process of Model Tuning

Hello Diedrich,

I use Google Translate, so I'm not sure I understood everything. In any case, let me restate my questions:

- Could GenAT_5.0 predict 2 or more outputs?

- When selecting NNs with 1 and 2 layers, could GenAT_5.0 include in the report the best models found for both 1 and 2 layers?

I have observed that in most cases, when GenAT_5.0 re-trains the best result, its effectiveness drops. Could GenAT_5.0 have an option to choose whether or not to re-train?

The models found by GenAT_5.0 for 1 output are in most cases superior to those from model screening.

Excellent contribution Diedrich!!!

Cheers,

Marco

Re: Graphical User Interface to Automate the Process of Model Tuning

Hi @Marco1 ,

It is correct that right now GenAT_5.0 cannot predict 2 or more outputs. Depending on the platform, it can handle continuous, ordinal, and nominal responses (2 or more levels, again depending on the modeling platform), but it cannot fit more than one response at a time. Personally, I am not so sure one saves all that much time by fitting more than one response at a time anyway, since you still have to go back and evaluate each model for each response.

In principle, yes, the code could be rewritten to allow a user to select more than one model from the output table and re-run the selected models. However, you can still do that in the current version -- you just can't run them at the same time. After you've run the tuning design and you see the output window with the graph and table, select one model to re-run. Once that fit has finished, go back and select another model that you'd like to test. You can do this as many times as you want. You might want to leave the "Save output data table" check box unchecked, because you don't need to save that table each time you re-run a model.

As for the re-run model not having the same effectiveness (I'm guessing you mean something like R^2 or RASE), that is likely due to the randomness of the starting point for each model fit. If you always want to have the same starting point even when re-running a model, then you need to enter a random seed value for the model fit. This forces the fit to start from the same point each time rather than from a random point in the model space, which ensures that your model evaluation values like R^2 will be the same when it trains and when you re-run the model.

That being said, there is a drawback to this: you lose the randomness that is often very important for a model to be able to manage. For the most part, your response has some kind of average value (depending on its inputs of course), but there is also some randomness to the output, which you run the risk of not capturing if you don't allow the training to start from random points in the model space. Allowing your model to also have this randomness provides for a more realistic situation compared to enforcing the same starting point. The flip side is that you run the risk of just modeling noise and not your actual output. This is why it's so important to have a hold-out test data set that is not used in training or validating the model, so you can compare different models on this test data set to find which one actually performs best on "unused" data.
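To make the seed behavior concrete, here is a minimal sketch in plain Python rather than JSL. It is not taken from GenAT or from any JMP platform: the data are synthetic, and the names `make_data`, `fit_line`, and `r_squared` are made up for illustration. It fits a straight line by stochastic gradient descent from a random starting point, shows that the same seed reproduces the fit exactly while a different seed does not, and then scores the fits on hold-out rows that were never used for training.

```python
import random

def make_data(n, seed):
    """Synthetic data (an assumption for this sketch): y = 2x + 1 plus noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, 0.3) for x in xs]
    return xs, ys

def fit_line(xs, ys, seed=None, epochs=5, lr=0.05):
    """Fit y = w*x + b by stochastic gradient descent from a random start.

    With seed=None the start point and row order differ on every call;
    with a fixed seed they are identical, so the fit is reproducible.
    """
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # row order is also part of the randomness
        for i in order:
            err = (w * xs[i] + b) - ys[i]
            w -= lr * err * xs[i]
            b -= lr * err
    return w, b

def r_squared(model, xs, ys):
    """R^2 of a fitted (w, b) pair on the given rows."""
    w, b = model
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (w * x + b)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

train_x, train_y = make_data(80, seed=1)
test_x, test_y = make_data(40, seed=2)   # hold-out rows, never used in fitting

first = fit_line(train_x, train_y, seed=123)
again = fit_line(train_x, train_y, seed=123)   # same seed as `first`
other = fit_line(train_x, train_y, seed=456)   # different seed

print("same seed gives same fit:", first == again)
print("different seed gives same fit:", first == other)
for name, model in [("seed 123", first), ("seed 456", other)]:
    print(name, "hold-out R^2:", round(r_squared(model, test_x, test_y), 3))
```

The two seeds end up with slightly different coefficients, which is the same effect as a re-run giving a different R^2. Comparing them on the hold-out rows is what tells you whether that difference matters.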

Thanks for your feedback and compliments. If there are any updates to this code, I'll be sure to post them here.

Best wishes,

DS

Re: Graphical User Interface to Automate the Process of Model Tuning

Hello Diedrich,

Thanks for your answer! JMP is a good program, and GenAT_5.0 is an excellent script that partially covers (for 1 output) the gap that JMP has in model screening; in most cases GenAT_5.0 finds better models than model screening. It would be great if GenAT_5.0 could predict 2 or more outputs, for example to apply it to cases where there are sales (outputs) of multiple products, so that the best model found by GenAT_5.0 can detect a pattern or relationship among the multiple outputs (sales).

Regarding the trained neural network models, will there be a script that exports the formula found by GenAT_5.0 to Excel to make predictions? Or is there a script that converts the best NN model found by GenAT_5.0 into Excel VBA code to make predictions?

Cheers,

Marco

Re: Graphical User Interface to Automate the Process of Model Tuning

Hi @Marco1 ,

Well, the whole purpose of writing the code was to automate the process of model tuning rather than necessarily comparing one model to another. As mentioned before, the model screening platform only fits the default values for a particular method. Often the default isn't as good as a well-tuned model, regardless of which method you use to model.

As for modeling multiple outputs (Ys), the code could be rewritten to do this, but one still needs to go back and evaluate each individual model for each Y because each Y will have its own independent model generated for it. So I'm not sure you would actually save time.

In the case that you describe, you might be better off using Multiple Correspondence Analysis under Analyze > Multivariate Methods to detect patterns between the outputs (Ys).

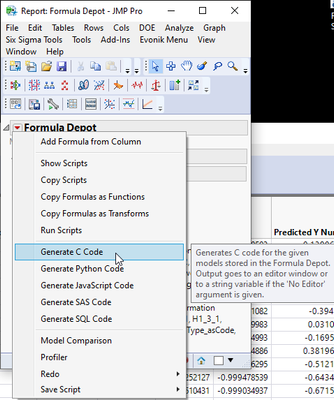

As for your other question about exporting the formula, there is already a way to do that in JMP. Once you've made a model, you can publish the model formula to the Formula Depot platform (see below). Within the Formula Depot platform, you can use the red triangle menu to export the code in different formats, like C, Python, JavaScript, SQL, and so forth. This might get you most of the way toward running the formula in Excel. If your prediction formula is simple, you might be able to manually "transform" it from a JMP formula to an Excel formula. But if it's complicated, then you might want to keep it as a JMP file, as it might perform faster when evaluating large data tables.

DS

Re: Graphical User Interface to Automate the Process of Model Tuning

Hello Diedrich,

Thanks for the information.

Cheers,

Marco

Re: Graphical User Interface to Automate the Process of Model Tuning

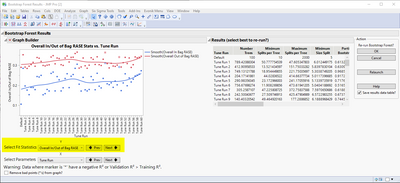

Hi All, I'm happy to share that I have updated the JSL code for automated tuning. I've fixed a couple of strange bugs in it and also added a new feature to the results window that allows the user to see different fit statistics on the y-axis, see below. There is still the ability to step through the different tuning parameters on the x-axis, but now you can also see how the other fit statistics beyond R^2 change. Attached is the latest version, 6.0. I hope it helps everyone. If you do come across any issues in the code, please let me know and I'll try to fix them ASAP.

Thanks!

DS

Re: Graphical User Interface to Automate the Process of Model Tuning

Hi @SDF1 ,

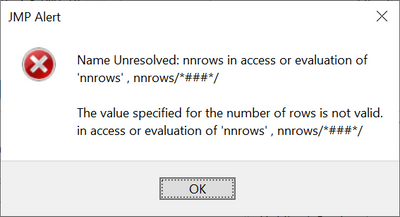

I'm using the tool and works great for random forests, support vector machines, etc. However, I'm having issues when tuning a neural network with a validation column; I get the following error:

Also, when I was tuning a random forest, I was getting an error because the validation column name was "Validation 9 months - 1MT 21", so I changed it to "Validation_9" and it worked.

Best,

Andres

Re: Graphical User Interface to Automate the Process of Model Tuning

Hi @AndresJ89 ,

I'm glad you're using the tool and it's working out for you. Sorry that you're getting an error when running the NN. I think it's because you have not selected a penalty method. By default they aren't checked because when you do a "Recall", it actually un-checks the previously checked items. Anyway, I believe if you select at least one penalty method, then you shouldn't get that error. This is independent of what type of cross-validation you're using.

As for the long validation column name, it could be in how the column is referenced with spaces vs. underscore "_" text, or that it's just too long. The spaces might end up causing an error. I have not tested this for long column names, but at least for a short column name like "V test", it works just fine. My guess is that it has to do with the length of the column name, but I'm not 100% sure on that.

I might update the code to account for the NN and the penalty method so that it first checks to make sure that at least one penalty method is selected prior to performing the fits. It's my understanding that the NN platform requires a penalty method.
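The kind of pre-check described above could look roughly like the sketch below. It is written in Python only to illustrate the logic; it is not the GenAT code, which is JSL. The function name `selected_penalties` and the dictionary of check-box states are hypothetical, and the penalty labels are just illustrative strings.

```python
def selected_penalties(checkboxes):
    """Return the names of the checked penalty methods.

    `checkboxes` maps a penalty label to True/False (checked or not).
    Raises ValueError before any fitting starts if nothing is checked.
    """
    chosen = [name for name, checked in checkboxes.items() if checked]
    if not chosen:
        raise ValueError(
            "Select at least one penalty method before running the fits."
        )
    return chosen

# Hypothetical dialog state right after a "Recall": everything un-checked.
dialog_state = {
    "Squared": False,
    "Absolute": False,
    "Weight Decay": False,
    "NoPenalty": False,
}

try:
    selected_penalties(dialog_state)
except ValueError as err:
    print("Blocked:", err)

# After the user ticks one box, the check passes and the fits can proceed.
dialog_state["Squared"] = True
print("Penalty methods to run:", selected_penalties(dialog_state))
```

Failing early with a readable message is friendlier than letting the platform call error out partway through a tuning design.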

Hope this helps!

DS
