Where should I invest to maximize profits?

You’ve just spent the last year collecting data, restructuring it, analyzing relationships, and fine-tuning parameters. You’ve worked hard and channeled all that expertise into what you believe is a robust prediction model, which you will now use to determine where your company will spend $10 million of its assets. Just how confident are you in your decision? Have you accounted for all possible scenarios, factors and constraints? Is it possible that you might have missed something?

Welcome to the world of uncertainty, where the unknown keeps even the most experienced data practitioners up at night. It’s the fear of not knowing what we don’t know and missing an unforeseen effect or outcome that can turn a simple prediction model into an organization’s worst nightmare.

Whether you’re leveraging the latest artificial intelligence algorithms or using traditional regression methods, some level of uncertainty will always be present in your model.

Since it’s unavoidable, the question now becomes: Can uncertainty be minimized and mitigated? Absolutely. I believe it can be greatly reduced by incorporating a few simple strategies that have been shown to boost the accuracy of predictions.

Uncertainty related to the data sample

To begin, we must recognize that there are many sources of uncertainty in predictive models. Variation in the data, the modeling technique and the choice of predictors all affect model performance. For this discussion, let's focus our attention on the uncertainty related to the data sample.

The quality of the data set plays a vital role in the accuracy of a prediction. Data is the fuel of a prediction model, and in most scenarios, the more we can feed the model, the greater our ability to improve accuracy. But everything comes at a cost, and collecting large data sets can be an expensive and time-consuming effort. Given that constraint, the best strategy on Day Zero is to craft a data collection plan using design of experiments (DOE) to gather critical data upfront that captures the combinations of factors and variation required to accurately model relationships.
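
As a rough illustration of what a DOE-style collection plan looks like, the sketch below enumerates a full factorial design in Python. The factor names and levels are hypothetical and are not taken from my example data set; a real plan would often use a fractional or optimal design to cut the number of runs.

```python
from itertools import product

# Hypothetical factors and levels, for illustration only.
factors = {
    "temperature": [150, 175, 200],
    "pressure": [10, 20],
    "catalyst": ["A", "B"],
}

def full_factorial(factors):
    """Return one run (a dict of factor settings) per combination of levels."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]

design = full_factorial(factors)
for run_number, run in enumerate(design, start=1):
    print(run_number, run)
```

Every combination of levels appears exactly once, so the number of runs is the product of the level counts. That is the cost a designed plan lets you see before any data is collected.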

Unfortunately, most do not take this path and subsequently find themselves in desperate need of additional data after the collection phase to enrich their model. Does that sound like you? What do you do when there’s no budget left to collect more data? No need to worry.

3 techniques to enrich your data

You’ll be happy to know that help is close by in the form of three quick and easy techniques proven to enrich your data and regain accuracy in your model without having to invest additional resources in the collection of new data points: Bootstrapping, Monte Carlo Simulation and Imputation.

Bootstrapping: A simple technique where we repeatedly sample random observations from our data, with replacement, to produce new “bootstrap” data sets, providing a better approximation of the variability in model estimates.

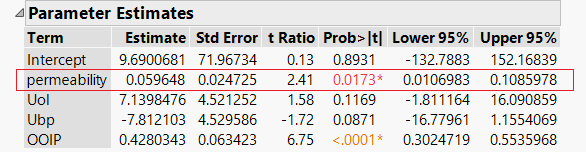

Let’s have a look at this technique in practice. In the example below, I would like to explore one model parameter (permeability) and determine whether its confidence limits of 0.010 and 0.108 are an accurate representation of the theoretical limits for this variable. Once I’ve answered that question, I will have a better understanding of the relationship between permeability and my model response.

Original confidence limits for permeability are 0.010 and 0.108

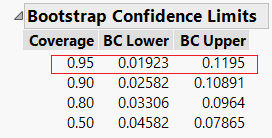

New bootstrap limits of 0.019 and 0.119 reflect a more accurate estimate of actual values

Using JMP, I generate 2500 bootstrap samples to calculate new confidence limits of 0.019 and 0.119. Both bootstrap limits are higher than the original limits of 0.010 and 0.108, suggesting that the relationship between permeability and the predicted response is in fact stronger than previously estimated. With this new insight, I can adjust the coefficients of my model to reduce uncertainty and produce an estimate that’s more in line with actual expected results.
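
Outside of JMP, the same idea can be sketched in a few lines of Python. This is a minimal percentile-bootstrap sketch, not JMP's implementation. The data are synthetic, and the function name `bootstrap_slope_ci`, the simple straight-line model and the 95% level are all assumptions made for illustration. Only the count of 2500 resamples mirrors the example above.

```python
import numpy as np

def bootstrap_slope_ci(x, y, n_boot=2500, alpha=0.05, seed=0):
    """Percentile bootstrap confidence limits for the slope of y ~ x."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    slopes = np.empty(n_boot)
    for b in range(n_boot):
        # Resample whole observations (rows) with replacement.
        idx = rng.integers(0, n, size=n)
        # Refit the straight line on the resampled rows; keep the slope.
        slopes[b] = np.polyfit(x[idx], y[idx], 1)[0]
    lower, upper = np.quantile(slopes, [alpha / 2, 1 - alpha / 2])
    return lower, upper

# Synthetic stand-in data (hypothetical, not the data set in this post).
data_rng = np.random.default_rng(42)
permeability = data_rng.uniform(0.0, 10.0, size=60)
response = 0.06 * permeability + data_rng.normal(0.0, 0.5, size=60)

estimate = np.polyfit(permeability, response, 1)[0]
lower, upper = bootstrap_slope_ci(permeability, response)
print(f"slope estimate: {estimate:.3f}")
print(f"95% bootstrap limits: {lower:.3f} to {upper:.3f}")
```

The percentile method simply reads the limits off the sorted bootstrap estimates, which is why it needs a few thousand resamples to give stable tails.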

Monte Carlo Simulation: Uses random draws from distributions of the input factors to produce a robust estimate of prediction results that better accounts for uncertainty in model data.

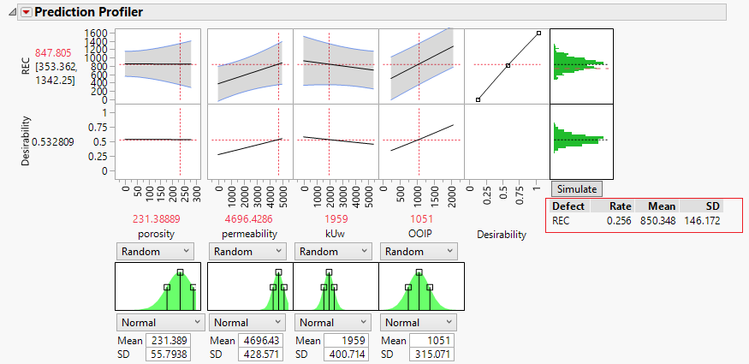

Let’s apply this second technique to our example data set. I’ve created a traditional model where I can estimate the expected response (e.g., REC = 847.805) for a set of conditions. Although I can calculate the predicted response and the confidence intervals of those estimates, what I cannot calculate is a result that incorporates the real-world variation that I would typically observe among my factors. By using the simulator option in JMP, I can now model factors as random distributions and produce more realistic estimates of my expected response values. In my example, Monte Carlo Simulation reveals that approximately 25% of my response values fall below my lower limit of 750. With this more accurately quantified level of risk, I can make a more informed business decision, having simulated multiple scenarios and weighed their respective outcomes accordingly.

Monte Carlo simulation of predictor variables allows for risk analysis under varying conditions
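
For readers without JMP, here is a minimal Python sketch of the same kind of risk analysis. Everything specific in it is an assumption for illustration: the model `predict_response`, its coefficients, the two factors and their normal distributions are hypothetical stand-ins, not my fitted model. Only the lower limit of 750 comes from the example above, so the share it reports will not match the 25% I observed.

```python
import numpy as np

def predict_response(factor_a, factor_b):
    """Hypothetical fitted model; the coefficients are illustrative only."""
    return 500.0 + 30.0 * factor_a + 2.0 * factor_b

def simulate_responses(n_sims=10_000, seed=0):
    """Draw each factor from an assumed distribution and predict the response."""
    rng = np.random.default_rng(seed)
    # Assumed real-world variation in each factor (hypothetical values).
    factor_a = rng.normal(loc=8.0, scale=1.5, size=n_sims)
    factor_b = rng.normal(loc=50.0, scale=10.0, size=n_sims)
    return predict_response(factor_a, factor_b)

lower_limit = 750.0
responses = simulate_responses()
# Share of simulated scenarios that fall below the lower limit.
share_below = np.mean(responses < lower_limit)
print(f"mean simulated response: {responses.mean():.1f}")
print(f"share below {lower_limit:.0f}: {share_below:.1%}")
```

A single prediction at the nominal settings would hide this tail entirely. The simulated distribution is what turns the prediction into a statement about risk.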

Imputation: A data replacement technique used to substitute a calculated result for missing data.

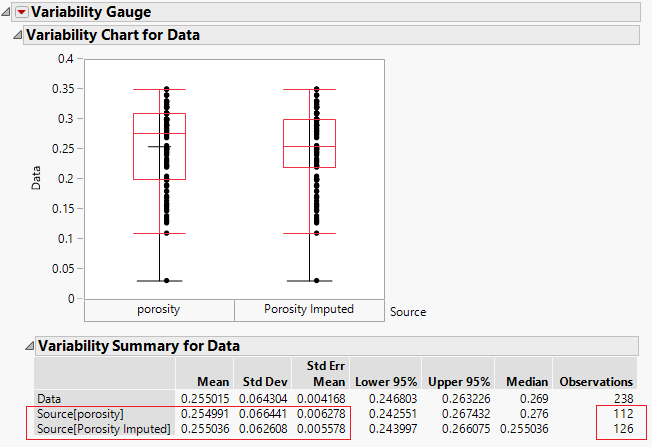

Real data will sometimes have missing values, and my example data set is no different. It contains some entries where data was not collected because there was an issue with a sensor. Unfortunately, since those values are missing, the affected entries are discarded from any analysis, and I lose out on any insight that I could have gained if my data set were complete. By using imputation in JMP, I can now estimate the values of those missing entries and increase the confidence of my projections. Imputation can be performed using many techniques; I opted for a method called Multivariate SVD Imputation to predict the values of the 14 missing entries. It should be noted that imputed data is not real data, but it offers a means to carry out analysis that otherwise could not be performed. By imputing my missing data, I increase the accuracy of predictor variable estimates and can now fully utilize all my collected data.

Results of 14 imputed values allow for estimation of missing data
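
To give a feel for how an SVD-based imputation can work, here is a minimal Python sketch of an iterative low-rank fill. It is not JMP's Multivariate SVD Imputation algorithm, whose details I am not reproducing here. The function name `svd_impute`, the chosen rank and the synthetic sensor table are all assumptions made for illustration. Only the count of 14 missing entries mirrors my example.

```python
import numpy as np

def svd_impute(X, rank=2, n_iter=100, tol=1e-6):
    """Fill NaNs by repeatedly fitting a low-rank SVD approximation."""
    X = np.asarray(X, dtype=float)
    missing = np.isnan(X)
    # Start from column means so the first SVD has no NaNs to deal with.
    filled = np.where(missing, np.nanmean(X, axis=0), X)
    for _ in range(n_iter):
        mu = filled.mean(axis=0)
        U, s, Vt = np.linalg.svd(filled - mu, full_matrices=False)
        low_rank = (U[:, :rank] * s[:rank]) @ Vt[:rank] + mu
        # Only the missing cells are overwritten; observed data never changes.
        updated = np.where(missing, low_rank, X)
        change = np.max(np.abs(updated - filled))
        filled = updated
        if change < tol:
            break
    return filled

# Synthetic, correlated "sensor" columns (hypothetical data).
rng = np.random.default_rng(1)
latent = rng.normal(size=(50, 2))
loadings = np.array([[1.0, 0.5, -0.3, 0.8],
                     [0.2, -1.0, 0.7, 0.4]])
complete = latent @ loadings + rng.normal(scale=0.05, size=(50, 4))

# Knock out 14 entries, at most one per row, to mimic sensor dropouts.
rows = rng.choice(50, size=14, replace=False)
cols = rng.integers(0, 4, size=14)
with_gaps = complete.copy()
with_gaps[rows, cols] = np.nan

gaps = np.isnan(with_gaps)
imputed = svd_impute(with_gaps, rank=2)
mean_filled = np.where(gaps, np.nanmean(with_gaps, axis=0), with_gaps)

rmse_svd = np.sqrt(np.mean((imputed[gaps] - complete[gaps]) ** 2))
rmse_mean = np.sqrt(np.mean((mean_filled[gaps] - complete[gaps]) ** 2))
print(f"RMSE, SVD imputation:  {rmse_svd:.3f}")
print(f"RMSE, column-mean fill: {rmse_mean:.3f}")
```

The comparison against a plain column-mean fill is only possible here because the synthetic table has known true values. With real sensor data the missing values stay unknown, which is exactly why imputed data should be treated as estimates.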

Improve estimates and reduce uncertainty

Bootstrapping, Monte Carlo Simulation, and Imputation. There you go: three quick and easy techniques that can be implemented on any existing data set to enrich the quality of results and greatly improve your predictive accuracy. Prediction models are amazing things, and their use cases keep growing in the era of Big Data. Despite advances in modeling options and algorithms that promote robustness and accuracy, performance continues to be driven by the quality of the data we feed the model.

In an ideal world, uncertainty would be nonexistent, and our data would light the path to a perfect prediction. But we know that real data doesn’t care what we think. It’s messy, flawed and difficult to deal with, so let’s face this challenge by adding a few new tools to our toolbox. Let’s not let the unknowns keep us up at night. Be proactive by utilizing these strategies to improve estimates, reduce uncertainty, and remain confident that you are making the best business decisions possible.