Welcome back to Analytics with Confidence. In this series, I am exploring the key to success with modern data analytics: validation. In the first and second posts we discussed generalizability and saw how to build problematic models that do not generalize. In this post, we continue with the case study on reaction recipes and introduce holdback validation as a simple tool for building models that you can trust.

Remember that in this case study we are looking at recipes for making a high-value chemical product [1]. There are 4,608 possible variations of ingredient choices (4 electrophiles x 3 nucleophiles x 12 ligands x 8 bases x 4 solvents). We want to use the data from just 150 of the possible recipes to build a model to predict the yield of any recipe and to find the best combinations of ingredients.
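The size of the recipe space can be checked by enumerating it. This is only a sketch: the ingredient labels (`E1`, `N1`, and so on) are hypothetical placeholders rather than the names in the dataset, and the seed is arbitrary, so the 150 sampled recipes will not match those in the attached table.

```python
import itertools
import random

# Placeholder labels (hypothetical); the real ingredient names are in the
# attached data tables.
factors = {
    "electrophile": [f"E{i}" for i in range(1, 5)],  # 4 levels
    "nucleophile": [f"N{i}" for i in range(1, 4)],   # 3 levels
    "ligand": [f"L{i}" for i in range(1, 13)],       # 12 levels
    "base": [f"B{i}" for i in range(1, 9)],          # 8 levels
    "solvent": [f"S{i}" for i in range(1, 5)],       # 4 levels
}

# Every combination of one level per factor is one candidate recipe.
all_recipes = list(itertools.product(*factors.values()))

rng = random.Random(0)  # arbitrary seed, for repeatability only
sampled = rng.sample(all_recipes, 150)
unseen = len(all_recipes) - len(sampled)

print(len(all_recipes), len(sampled), unseen)  # 4608 150 4458
```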

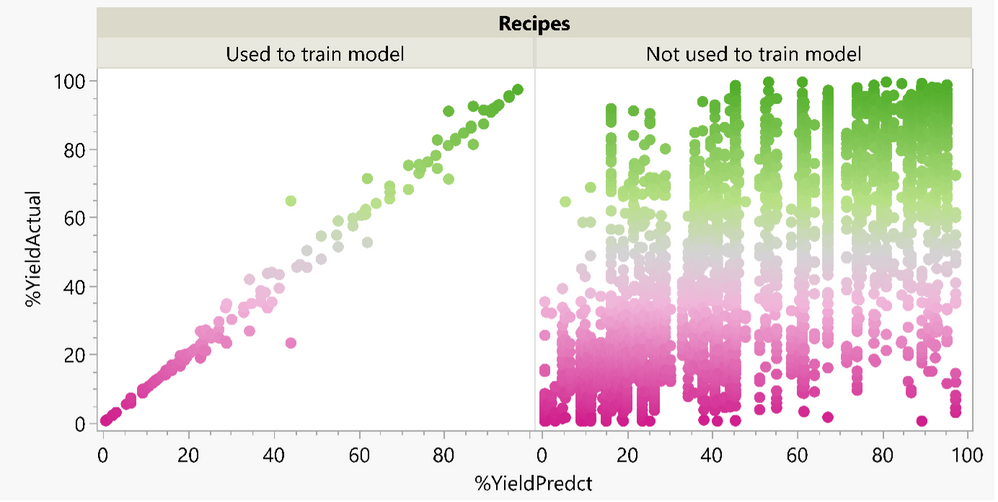

The partition model that we built last time looked great at first sight, with an RSquare of 0.985 and an Actual versus Predicted plot that showed an excellent fit to the data. But then we looked at how the model predictions compared with the actual yields for the 4,458 recipes that were not in the training data. The model predicted yields of close to 100% for several recipes that actually had yields closer to 0%!

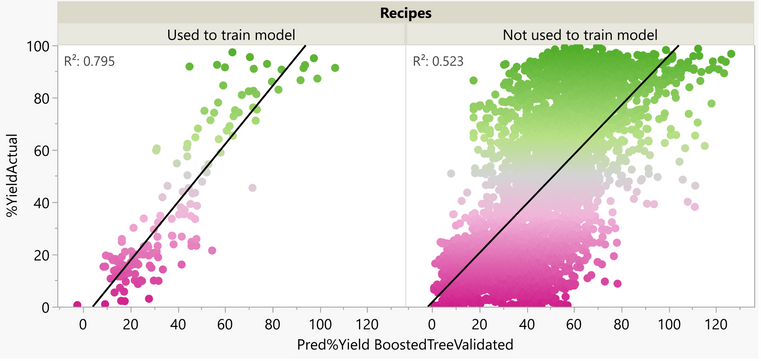

Actual versus Predicted plots for the data that was used to train the model (left) and the data for all the other recipes that were not used to train the model (right). This is an example of an "overfit" model that does not generalize.

How can we avoid building the kinds of models that look good on the training data, only to find that they do not generalize when tested on unseen data? And how do we make sure our models are not unnecessarily complex?

One idea that might have occurred to you is to validate the model against unseen data at each step of the building process. We could have stopped after each split in the decision tree to check the Actual versus Predicted plot (and the RSquare) for the 4,458 recipes that were not used to train the model. We could then select the model with the best fit to the unseen data, and we would know how well to expect it to perform.

Of course, this is an artificial situation. If we had the data for all possible recipes, we would not hold back most of it to validate the model. Can we use the same idea with the data for just the 150 recipes?

Introducing…Make Validation Column

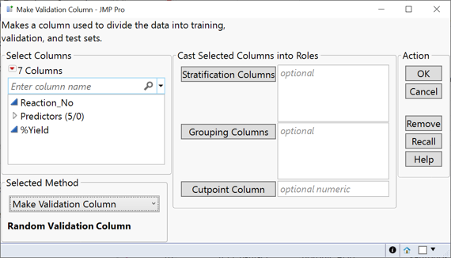

Yes, there is a tool for this. Make Validation Column in JMP Pro will mark a portion of the rows in your data table to be held back and used as validation at each step of the model-building process. There are various useful options that we will explore in later posts, but for this example, we will keep it simple and stick to the defaults.

The Make Validation Column tool in JMP Pro (Analyze > Predictive Modeling). In this example, we stick with the defaults to make a Random Validation Column and click OK.

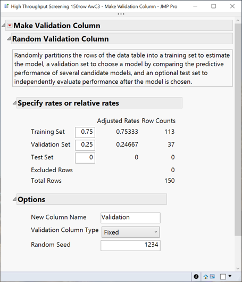

We will go with the suggested 0.75/0.25 training/validation split, which means 37 of the 150 rows of data will be held back for validation.

The default 0.75/0.25 training/validation split in Make Validation Column. Enter “1234” as the Random Seed if you want to follow along in JMP Pro and get the same results.
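Outside JMP, the same idea can be sketched in a few lines. The function name `make_validation_column` is hypothetical, and rounding the validation count down is an assumption chosen to match the 37 rows reported above, not a statement of how JMP computes it. Python's random number generator differs from JMP's, so seed 1234 will not mark the same rows here.

```python
import random


def make_validation_column(n_rows, validation_fraction=0.25, seed=1234):
    """Label each row 'Training' or 'Validation' at random.

    Assumption: the validation count is rounded down (150 * 0.25 = 37.5 -> 37).
    """
    n_validation = int(n_rows * validation_fraction)
    rng = random.Random(seed)
    # Choose the held-back row indices without replacement.
    validation_rows = set(rng.sample(range(n_rows), n_validation))
    return [
        "Validation" if i in validation_rows else "Training"
        for i in range(n_rows)
    ]


column = make_validation_column(150)
print(column.count("Training"), column.count("Validation"))  # 113 37
```

Fixing the seed makes the split repeatable, which matters when you want to compare several models on exactly the same held-back rows.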

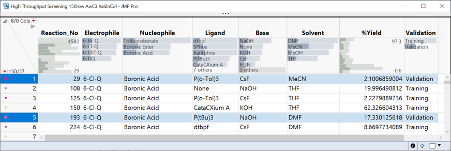

We now have a Validation column in our data table that marks 37 of the rows for validation and the others for training:

Validation column in our data table that marks 37 of the rows for validation and the others for training

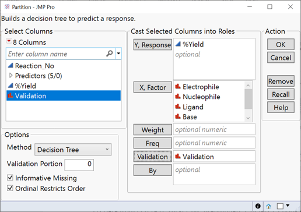

Now, let’s repeat the partition model we created in post #2, but with validation. The key step here is to add the Validation column in the Validation role when you launch Partition.

Partition model with validation

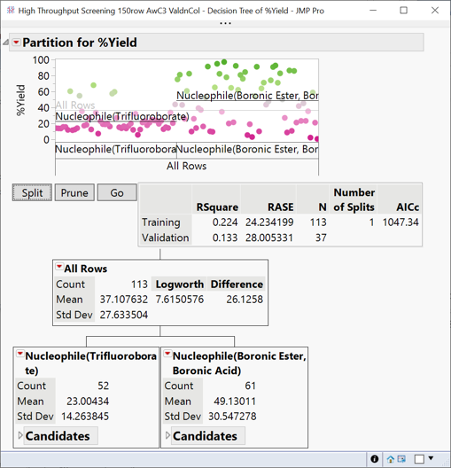

The first split is the same as for our model without validation. But now we can see the RSquare for both the training data and the validation data. The fit to the validation data is not as good as the fit to the training data (RSquare = 0.133 vs. 0.224).
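To make the two RSquare values concrete, here is a minimal sketch of scoring a single split separately on training and validation rows. The rows are made up for illustration and are not taken from the reaction dataset. It assumes RSquare is computed as 1 - SSE/SST, with SST measured around the mean of the rows being scored; JMP's exact definition for the validation set may differ.

```python
def r_square(actual, predicted):
    """1 - SSE/SST, with SST measured around the mean of `actual`."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


# Made-up rows (hypothetical): (branch of the split, yield %, role)
rows = [
    ("A", 10, "Training"), ("A", 20, "Training"),
    ("B", 60, "Training"), ("B", 80, "Training"),
    ("A", 20, "Validation"), ("A", 30, "Validation"),
    ("B", 60, "Validation"),
]

# A one-split tree predicts the mean TRAINING yield of each branch.
yields_by_branch = {}
for branch, y, role in rows:
    if role == "Training":
        yields_by_branch.setdefault(branch, []).append(y)
branch_mean = {b: sum(ys) / len(ys) for b, ys in yields_by_branch.items()}


def score(role):
    """RSquare of the one-split predictions on the rows with this role."""
    actual = [y for _, y, r in rows if r == role]
    predicted = [branch_mean[b] for b, _, r in rows if r == role]
    return r_square(actual, predicted)


train_r2 = score("Training")
valid_r2 = score("Validation")
print(f"training RSquare = {train_r2:.3f}, validation RSquare = {valid_r2:.3f}")
```

The validation rows play no part in computing the branch means, which is why their RSquare is an honest estimate of how the split performs on data the model has not seen.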

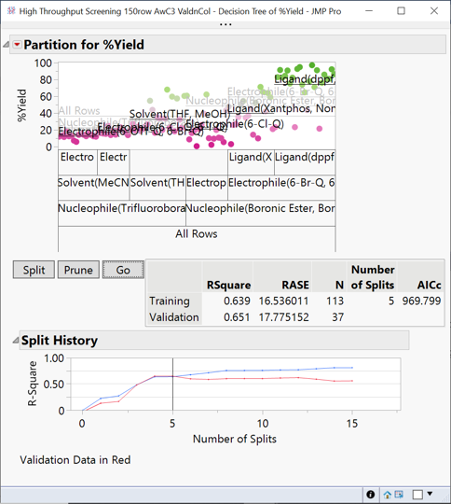

We can hit Go and the algorithm will explore a number of splits and then prune back to the tree that had the maximum Validation RSquare, that is, the model that best predicts the data that we have held back. In this case, Validation RSquare (in red) peaked at five splits. Beyond that point, the model was getting more complex and fitting better to the training data, but it was overfitting to the noise rather than finding patterns that generalize to unseen data. We may even prune back to four splits since the Validation RSquare is the same and the model is simpler.
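The selection rule described above can be sketched as follows. The function name `pick_number_of_splits` and the RSquare values are hypothetical, invented only to show the logic; they are not the values from the JMP report. Preferring the smallest tree among ties mirrors the manual choice of four splits over five, and is not a claim about what the Go button does.

```python
def pick_number_of_splits(validation_r2_by_splits, tolerance=1e-9):
    """Return the smallest number of splits whose validation RSquare
    is within `tolerance` of the maximum."""
    best = max(validation_r2_by_splits.values())
    tied = [
        n_splits
        for n_splits, r2 in validation_r2_by_splits.items()
        if best - r2 <= tolerance
    ]
    return min(tied)


# Hypothetical history: number of splits -> validation RSquare.
history = {1: 0.10, 2: 0.25, 3: 0.40, 4: 0.50, 5: 0.50, 6: 0.47, 7: 0.42}

print(pick_number_of_splits(history))  # 4
```

A larger `tolerance` expresses a stronger preference for simplicity: it accepts a slightly lower validation RSquare in exchange for fewer splits.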

What about other models?

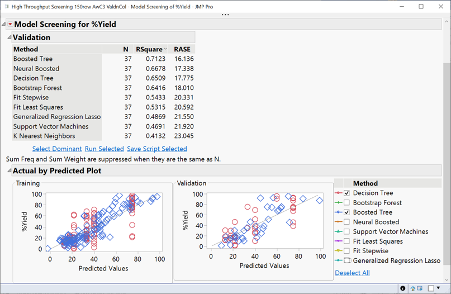

There are a variety of other statistical and machine learning model types that we could use. Another advantage of holdback validation is that it allows us to quickly build and compare validated models and find out which perform best. The Model Screening platform in JMP Pro makes this very easy. In this case, we find that a Boosted Tree model has the highest Validation RSquare:

Model Screening report showing the Boosted Tree has a slightly better Validation RSquare than the Decision Tree and the other statistical and machine learning methods.

Now when we look at how this Boosted Tree model performs, we see that there is much better agreement between the predictions and the actual yields of the 4,458 recipes that were not used to train or validate the model:

Learn more:

[1] Data from Perera D, Tucker JW, Brahmbhatt S, Helal CJ, Chong A, Farrell W, Richardson P, Sach NW. A platform for automated nanomole-scale reaction screening and micromole-scale synthesis in flow. Science. 2018 Jan 26;359(6374):429-434. doi: 10.1126/science.aap9112. PMID: 29371464. The sampled and full datasets are attached as JMP data tables.

High Throughput Screening 150row AwC3.jmp

AwC3 Act_Pred_150vsAll.jmp