Neural Network Tuning

Purpose

The Neural Network Tuning add-in is an alternative Neural Network platform that provides an easy way to generate numerous neural networks to identify the best set of parameters for the hidden layer structure, boosting, and fitting options. The add-in only works with JMP Pro.

How it works

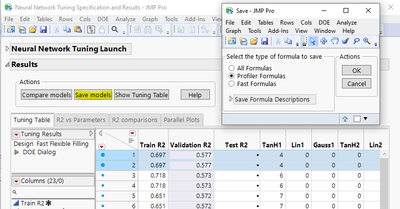

The user specifies the number of Neural Network models that will be run and sets the range of parameters and fitting options. A Fast Flexible Space Filling DOE is generated with the tuning parameters as Factors, and the Training, Validation, and Testing R2 values as Responses.

Each trial in the DOE is passed to the Neural Network platform and the R2 values are recorded in an output DOE table. After all models are run, the table is sorted by Validation R2 and a series of graphs are displayed to help the user identify the most important effects for maximizing the Validation R2 values.
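The workflow above can be sketched in a few lines of Python. This is only an illustration of the idea, not the add-in's code: it swaps in a simple Latin hypercube for JMP's Fast Flexible Filling design, and `toy_fit` is a hypothetical stand-in for the Neural platform that returns made-up R2 values. The factor names `nodes` and `penalty` are also assumptions, and a real node count would need rounding to an integer.

```python
import random

def latin_hypercube(ranges, n_runs, seed=0):
    """Return n_runs design points; each factor range is split into n_runs strata."""
    rng = random.Random(seed)
    columns = {}
    for name, (low, high) in ranges.items():
        # One random draw inside each equal-width stratum, then shuffle the order.
        width = (high - low) / n_runs
        values = [low + (i + rng.random()) * width for i in range(n_runs)]
        rng.shuffle(values)
        columns[name] = values
    return [{name: columns[name][i] for name in ranges} for i in range(n_runs)]

def run_tuning(ranges, n_runs, fit_model, seed=0):
    """Fit one model per design point and sort the results by validation R2."""
    results = []
    for trial in latin_hypercube(ranges, n_runs, seed):
        # fit_model must return a dict with "train", "validation", and "test" keys.
        r2 = fit_model(trial)
        results.append({**trial, **{f"r2_{key}": value for key, value in r2.items()}})
    return sorted(results, key=lambda row: row["r2_validation"], reverse=True)

def toy_fit(params):
    """Hypothetical stand-in for a neural network fit; NOT a real model."""
    score = 1.0 - abs(params["nodes"] - 6) / 20 - params["penalty"]
    return {"train": score + 0.05, "validation": score, "test": score - 0.02}

table = run_tuning({"nodes": (1, 10), "penalty": (0.0, 0.2)}, n_runs=8, fit_model=toy_fit)
for row in table[:3]:
    print(row)
```

The first row of `table` is the trial with the highest validation R2, which mirrors the sorted output table described above.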

Additional models can be generated by adjusting the tuning parameters and re-running the DOE. The new runs will be appended to the original table.

The data table can be saved and re-loaded into the platform to save progress and re-start additional analysis. This is a way to tune Neural Networks that might take considerable computation time.

Video Guide

Notes

The add-in has been tested on Windows and Mac using JMP Pro versions 17.1, 17.2, 18.0, and 18.1.

Known Issues

- The add-in does not currently handle a Response (Y) column that is virtually linked to the factor data table. As a work-around, you can unhide the linked column and then cut/paste the data into a new column.

Updates

November 27, 2023 - Version 1.1 - improvements and bug fixes, details are in a post below

November 28, 2023 - Version 1.11 - minor bug fix

December 15, 2023 - Version 1.2 - improvements and bug fixes, details are in a post below

September 20, 2024 - Version 1.4 - improvements and bug fixes

Hi Scott,

Very handy add-in. Previously I had to manually generate a space filling design to try and explore the NN platform. Will give it a test run! Any plans to expand the number of layers in the NN platform? Also any chance tuning can be implemented in the genreg platform too?

Thanks,

Vins

Hi, @Vins!

Just wanted to chime in on this. GenReg can already be tuned: just set the alpha hyper-parameter to "." and JMP will optimize it. As for more layers in the NN, that's more in the hands of JMP Development than Scott. You should put the request in the JMP Wishlist so that they know you'd like to see deeper NNs available.

Best,

M

@Vins -

Right now, the add-in just passes parameters to the JMP NN platform, which builds NNs with up to two layers. If the platform changes (more layers, activation functions, etc.) and there is sufficient interest, I can update the add-in. I want to second @MikeD_Anderson's suggestion to head over to the wishlist if there are features you would like to see in future JMP versions.

Let me know how your test run goes!

-Scott

This is great. I've been wondering when something like this would make its way into JMP Pro, but great initiative making this add-in to do it. +50 points for Gryffindor!

Great tool, thank you so much. I've just tested it on various datasets. It saves me a lot of time and finds conditions (parameters) that I would not necessarily have tested for the sake of time. Thank you Scott!!

Great work @scott_allen on the nice setup and functionality of this add-in!

Two concerns / warnings:

1. The Neural platform peeks at the validation data while performing its model fitting, and so the validation results are leaky and overly optimistic. As such, I don't think they provide a good estimate of generalization performance nor a good way to compare different models in the tuning design. The only way around this I am aware of is to use a three-level Validation column (Training, Validation, and Test, creatable with Analyze > Predictive Modeling > Make Validation Column) and then only use the Test results to compare models. I just checked that the add-in handles this case, and to verify the general point, plot Validation R2 versus Test R2.

2. Throwing a lot of models at a single test holdout set will very likely lead to overfitting that test set. This happened with the Autotune functionality in XGBoost (which uses the same Fast Flexible Design approach), prompting a change to only output an ensemble model when autotuning and to use nesting within each fold to avoid leakiness.
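To make the first point concrete, here is a minimal sketch of a three-level holdout column and a per-split R2 calculation. It is not JMP's Make Validation Column utility; the 60/20/20 split, the function names, and the seed are all assumptions chosen for illustration.

```python
import random

def make_validation_column(n_rows, fractions=(0.6, 0.2, 0.2), seed=1):
    """Assign each row to Training, Validation, or Test at the given fractions."""
    n_train = round(n_rows * fractions[0])
    n_valid = round(n_rows * fractions[1])
    column = (["Training"] * n_train
              + ["Validation"] * n_valid
              + ["Test"] * (n_rows - n_train - n_valid))
    random.Random(seed).shuffle(column)
    return column

def r_squared(actual, predicted):
    """1 - SS_residual / SS_total for one set of rows."""
    mean = sum(actual) / len(actual)
    ss_total = sum((y - mean) ** 2 for y in actual)
    ss_resid = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    return 1.0 - ss_resid / ss_total

def r2_by_split(actual, predicted, column):
    """Compute R2 separately for the Training, Validation, and Test rows."""
    result = {}
    for label in ("Training", "Validation", "Test"):
        rows = [i for i, value in enumerate(column) if value == label]
        result[label] = r_squared([actual[i] for i in rows],
                                  [predicted[i] for i in rows])
    return result
```

With predictions from each tuned model, comparing the `"Validation"` and `"Test"` entries of `r2_by_split` is the numeric counterpart of the Validation R2 versus Test R2 plot suggested above. It does not address the second point: ranking many models on one Test set can still overfit that set.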

@Vins Arbitrarily deep NNs are available in the new Torch Deep Learning add-in; please sign up to try it at JMP 18 Early Adopter. It does not yet have autotuning but may in the future.

Great points. I agree with both and hope to continue developing this Add-In to address these.

My first goal was to automate the current model generation workflow. Now I would like to start thinking about including "guard rails" or finding ways to include additional validation methods.

I also like the idea of ensemble models. I am considering the addition of a simple way for the user to pass models to the Compare Models platform. But it might be better to automate this as well based on some model fit statistic. Food for thought!

@scott_allen I have added to the wish list for more layers to NN.

@russ_wolfinger, I have already taken the Torch Deep Learning add-in for several test runs in EA5. I do get better models than with the xgboost add-in; however, I always go back to your xgboost add-in, where I save a table of "gain", "splits", and "cover" scores for each variable. With this table I select only the variables with non-zero values and rerun xgboost with a smaller set of predictors. Usually after 2-3 rounds, no predictors with zero values remain. This way I filter out unimportant variables, and I can get the profiler to visualise a smaller model. I routinely have 60-300K predictors. I would like to see an option in the Torch Deep Learning add-in that gives me access to a table with values I can use to kick out unimportant variables.

Is there going to be an expanded Fast Flexible Design in xgboost that also includes the other ~30 advanced options, which include categorical parameters? And lastly @russ_wolfinger, is the next EA release going to have a tuning table for the Torch Deep Learning add-in? Can't wait, the models are significantly better!

@Vins Thanks much for the feedback. I think your variable reduction approach in XGBoost is reasonable (I've done the same myself) since those variables with 0 importances are not used in any of the models. Both variable importances and autotuning are on the "to-do" list for Torch.
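For readers who want to script this kind of reduction, the loop can be sketched as follows. This is not the XGBoost add-in's code: `importance_fn` is a hypothetical callable that refits a model on the current predictors and returns one importance score per predictor, and `toy_importance` is a made-up stand-in so the example runs on its own.

```python
def prune_predictors(predictors, importance_fn, max_rounds=10):
    """Refit until every remaining predictor has a non-zero importance."""
    current = list(predictors)
    for round_number in range(1, max_rounds + 1):
        importance = importance_fn(current)
        kept = [name for name in current if importance.get(name, 0.0) != 0.0]
        # Stop when nothing was dropped, or when dropping would leave no predictors.
        if len(kept) == len(current) or not kept:
            return kept or current, round_number
        current = kept
    return current, max_rounds

def toy_importance(names):
    """Hypothetical stand-in for a refit: signal variables always score, and one
    noise variable is picked up only while the candidate set is large."""
    scores = {name: (1.0 if name.startswith("s") else 0.0) for name in names}
    noise = [name for name in names if name.startswith("n")]
    if len(names) > 5 and noise:
        scores[noise[0]] = 0.1
    return scores

selected, rounds = prune_predictors(
    ["s1", "s2", "s3", "n1", "n2", "n3", "n4", "n5"], toy_importance
)
print(selected, rounds)  # ['s1', 's2', 's3'] 3
```

The toy run takes three rounds, which matches the "2-3 rounds" experience described above only because the stand-in was built that way; real importances depend on the model and data.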

XGBoost autotuning over all of the advanced options would require a much larger and messier interface, and we can think about it. In the meantime, there is a Tuning Design Table option in which you can specify a secondary JMP table with design points, e.g. created by invoking DOE FFF directly on the parameters you want to tune.

We are also looking more now at Bayesian Optimization approaches. There is an add-in .

Update

The Neural Network Tuning add-in has been updated. Version 1.1 is now available for download and version 1 has been removed. This version has a number of bug fixes and overall improvements based on feedback from users:

Bug fixes

- Informative Missing works.

- Various warnings are thrown to avoid JMP Error messages when problems are encountered in the column specification or parameter specification steps.

- Multi-level nominal and ordinal Responses are now supported.

Improvements

- General interface updates

- KFold validation is now available

- A Help window can be launched by clicking the "Help" button. The Help window has additional information on how Model Validation, Comparing Models, and Saving Models are handled in version 1.1.

- Model validation is now always carried out with a column to help with model reproducibility.

- Model Comparison is now carried out using the Compare Model platform in a duplicate (and hidden) data table.

- Save models directly from the tuning results window using the Save Models button.

- When a previously saved data table is loaded, the add-in will warn you if the X variables in the saved data table are different than the X variables specified when the add-in was launched.

- The graph builder output now has a column switcher toggle to look at Training, Validation, or Testing R2 values versus each parameter.

- Two more model visualization graphs are added as tabs in the results window. These are also interactive with the data table to help identify the best models.

- Model Progress window has a few updates:

  - Overall modeling time is shown

  - Average time per model is shown

  - A Cancel button is available in the model progress window in case the overall modeling time is longer than desired. Any model that was completed during the modeling step will be saved to the results window. Note that when modeling time is long, this button can "lag" and it may require a few clicks to register the command.

- Models saved to the data table have an updated Notes section with all the parameters used to generate the model.

Update

Version 1.2 is now available. This version has a number of bug fixes and additional improvements based on feedback from users:

Bug fixes

- Compare Models will only bring recently prepared NN models into the Compare Models platform instead of all previously generated models

- Model comparisons with nominal and ordinal Y values now work

- Other minor fixes

Improvements

- You can now recall tuning parameters

- Added different model saving options

- Showing the tuning table generates a copy of the table

- The data table is now saved as a tab in the results window

- Added unique model IDs to facilitate comparison of models with different random seeds

- New graph is available when multiple random seeds are used

Hi Scott,

Excellent add-in; it is what the NN platform is missing. A query: how do I get it to analyze 2 or more outputs (Y)?

Greetings,

Marco

Hi @Marco2024

To analyze two or more variables, you will need to create a model for each and then load the prediction expressions in the Profiler:

- Build a NN model for your first output (Y) and save the prediction expression by clicking the "Save models" button. The "Profiler Formulas" option will save a single formula column to your data table.

- Repeat this for all the Y-variables you would like to compare.

- Launch the Profiler (Graph > Profiler) and load your prediction expressions.

This will provide a profiler for each of your Y variables stacked on top of each other to help you compare and co-optimize your system.
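The "one model per response, then view them together" idea can be sketched outside JMP as well. In this illustration each saved prediction formula is replaced by a simple least-squares line, which is purely a placeholder for an NN formula; the response names `Y1` and `Y2` and the data are invented.

```python
def fit_line(x, y):
    """Ordinary least-squares line; a placeholder for a saved NN prediction formula."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    intercept = mean_y - slope * mean_x
    return lambda value: intercept + slope * value

def fit_per_response(x, responses):
    """Build one independent model for each response column."""
    return {name: fit_line(x, y) for name, y in responses.items()}

def profile(models, x_value):
    """Evaluate every response model at the same input setting."""
    return {name: model(x_value) for name, model in models.items()}

x = [0.0, 1.0, 2.0, 3.0]
models = fit_per_response(x, {"Y1": [1.0, 3.0, 5.0, 7.0], "Y2": [10.0, 9.0, 8.0, 7.0]})
print(profile(models, 1.5))
```

Evaluating all models at one shared input is what the stacked profiler does visually: moving a factor setting updates every response at once.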

Hi Scott,

I could not log in to the blog with my username to respond to you earlier. I appreciate your response to my query! I have noticed the following:

1. Observations:

- The plugin does not work in Spanish

- The plugin does not save the chosen model or models in the table

2. Queries:

- Could there be an option for the plugin to search for 1 and 2 layer neural networks in 1 step... according to the range of parameters indicated by the user?

Greetings,

Marco

Hi Scott,

Please disregard Observation 2 (the add-in does not save models); the add-in does save the models.

Greetings,

Marco

Hi Scott,

A question: Could the plugin have the option to generate neural networks that learn in real time from the inputs (incremental learning), that is, that are not static neural networks?

Greetings,

Marco

Thanks for all the comments and suggestions.

1. I reproduced the issue when running the add-in in non-English versions and I have found the issue. I will make the fix and post the updated version in the next few weeks.

2. The models will be saved to your table if you use the Save Models button. Select the models in the tab with the data table, click Save Models, and then select the type of formula column to save. Are you saying that this is not working for you?

3. I considered building a way to screen one- and two-layer NNs at the same time, but it became overly complicated. To screen these models, I suggest running the single-layer NNs first, then updating the tuning parameters and running the two-layer NNs. You can then compare one-layer versus two-layer NNs in the resulting graphs.

4. The suggestion for a "dynamic" neural network is very interesting. Currently, the add-in automates the generation of NN models using the JMP Pro NN platform and is aimed at making it easier to explore tuning parameters. If the JMP Pro NN platform is updated with other capabilities, I will look to incorporate those into the add-in.

For now, you can consider saving models and then testing new data against them. If a model does not predict the new data well, you might consider updating it with results from the new data.

Hi Scott,

Thank you for your response! I can't understand point 3.

Greetings

Marco

Hi @scott_allen,

This is a really great tool to start working with ANN. Thank you so much!

One question: Is it possible to model multiple responses at the same time (for example, different dissolution time points)?

Thank you,

Sofia

Hi @SofiaSousa2412 -

Currently the add-in supports a single Y response. If you want to build models for multiple responses, you can build them independently, save their prediction expressions as column formulas, then combine the results on a single profiler.

@SofiaSousa2412 As another option, the Torch Deep Learning Add-In can cross-validate a single NN architecture to predict multiple Ys simultaneously. Yet another idea is to first analyze the dissolution profiles in Functional Data Explorer, export meaningful summary estimates, and then use NN or other models like XGBoost to predict them.

Update

Version 1.4 is now available. This version has a number of bug fixes and additional improvements.

Bug fixes

- Random Seeds are now remembered when replicates are run. The same random seeds will be applied when adding to a design table with replicates.

Improvements

- Brought back the original way of running selected models.

- GUI improvements.

- A simple Help window was created to describe how various comparing and saving functions work. Access the Help menu with Red Triangle > Help.

- You can now specify the number of levels between the Min and Max values for all continuous variables. For instance, if you set Min = 0 and Max = 20, the DOE previously gave you a table with every integer value between 0 and 20. Now, if you specify 5 levels with Min = 0 and Max = 20, you will get 5 levels: 0, 5, 10, 15, and 20. You can find this in the Additional Options box.

- Replicates and Random Seed values are locked in after the first trial or after a tuning table is loaded. This helps with model reproducibility.
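The level-spacing example in the list above (5 levels between 0 and 20) corresponds to evenly spaced values that include both endpoints. A minimal sketch of that arithmetic follows; the function name is hypothetical, and how the add-in handles rounding for integer-valued parameters is not stated in the post.

```python
def evenly_spaced_levels(minimum, maximum, n_levels):
    """Return n_levels evenly spaced values from minimum to maximum, inclusive."""
    if n_levels < 2:
        raise ValueError("n_levels must be at least 2")
    step = (maximum - minimum) / (n_levels - 1)
    return [minimum + i * step for i in range(n_levels)]

print(evenly_spaced_levels(0, 20, 5))  # [0.0, 5.0, 10.0, 15.0, 20.0]
```

Fewer levels mean fewer distinct settings per parameter, which makes it easier to see replicated settings in the tuning table.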

Hi @scott_allen,

Congratulations! It now also works in Spanish... It would be very helpful if the plugin could do the following:

- Save the weights and model(s), to then continue training and improving

- Predict 2 or more outputs

- Have the range of hidden layers to optimize be 3 or more

- Be able to learn the inputs in real time (incremental learning).

Regards,

Marco

Hi @Marco2024 -

Thanks for your note. It took a little longer than I expected to post an update that works in other languages. I am glad to hear it is working for you.

Regarding your questions:

- You can save the tuning table using the Red Triangle > Show Tuning Table and then saving a copy. If you would like to load it you can use the "Load" button in the Tuning Interface. You must select a table to load before you run any additional models. You can see an example of this in the updated video around 8:20.

- For now, the add-in will be limited to a single Y variable. Russ Wolfinger made a suggestion a few comments up that you can evaluate the Torch Deep Learning add-in for running multiple Responses using the same NN structure.

- The hidden layer structure is based on the Neural Network platform in JMP, which currently has a maximum of two layers. If the JMP NN platform changes, the add-in can be updated to reflect those changes.

- Building an autotune option is on my personal "wish-list", but there is no timeline for this.

-Scott

I tried to use this for the first time in JMP Pro 19 beta and got the following error message (see attachment), regardless of which options I selected. Is this add-in not yet supported in the beta version of JMP?

Hi @abmayfield - Thanks for the heads up on the issue. I have not tested the add-in in JMP 19 yet. When there is an updated version, you will find it in the Marketplace.

- © 2025 JMP Statistical Discovery LLC. All Rights Reserved.
