Want advice on implementing Statistical Process Control in a maturing manufacturing organization

The scenario: We have a LIMS that pushes QC test data from manufacturing to a data warehouse, and a handful of JMP users. We want to finally introduce a systematic approach to SPC and are discussing different strategies for doing so. One disappointment that came up is that we cannot tap directly into our LIMS and will most likely have to pull the data for trending from the data warehouse. Because we have not had a LIMS for long, our data sources are relatively dirty and require a lot of cleaning. Yet cleaning is necessary even for data coming from the LIMS, because the LIMS itself changes continually (new products requiring new QC tests are introduced, discontinued products and their QC tests are retired, test names change, new sample types appear, configurations change, etc.). My impression is that this is just how it is: things change, data literacy levels vary, data discipline is not rigidly enforced, LIMS super users are most often not data warehouse users, and things often work out differently than planned.

One key comment by a team member was the suspicion that Big Pharma is much better organized in terms of data and the question was asked whether our strategy is potentially too complex (LIMS --> data warehouse --> data analytical tools tapping into data warehouse).

Questions:

- Is the approach of pushing data from LIMS to the data warehouse and retrieving it from there again for trend analysis uncommon?

- Are data sources in Big Pharma on average cleaner and better managed than in other areas of manufacturing?

- Are there any standard "one size fits all" approaches of getting where we want to get?

- Isn't JMP just a gem when it comes to database connections, automation and SPC?

- How to best publish SPC graphs without resorting to JMP Live?

- What other important information can I feed back to the team?

Thank you very much in advance!

Accepted Solutions

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

Here's my seven cents (one for each question and a bonus thought):

1. No. During my tenure as a JMP senior systems engineer, working with many users in Big Pharma, I found this to be a common data pathway.

2. No. It's always 'dirty', and data cleanup and surveillance are a big part of the job.

3. No.

4. IMO, JMP is world class.

5. There's always JMP Public or creating a Dashboard application where you internally control who has read/write access.

6. Begin your system design with the end in mind. Then reverse engineer the data acquisition, clean up, reporting, and distribution.

Bonus thought: At the risk of making work for the JMP systems engineer on your sales team, engage that person with these questions to pick their brain. Who knows...maybe there's even a JMP customer that would be willing to talk to you as well.

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

Here are my thoughts/questions:

SPC is an analytical tool. In order to use it correctly, you must understand rational subgrouping and sampling strategies. How you get the data is a very important decision.

“The engineer who is successful in dividing his data initially into rational subgroups based on rational theories is therefore inherently better off in the long run. . .”

Shewhart

SPC and the control chart method were invented by Shewhart to answer two questions:

1. Is the variation associated with the within subgroup sources of variation (the x's varying within subgroup) stable and consistent? If not, why not? This is consistent with Deming's special cause/common cause model. If you are looking at data after the "special cause event" happened, you are missing the opportunity to learn about what happened in real time.

2. Which sources of variation have greater leverage (are dominant), the within or the between? The X-bar chart is a comparison chart. It compares the variation due to the within subgroup sources (the control limits) to the between subgroup sources of variation (the plotted means). It helps the investigator understand where to focus their work.
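To make the comparison in question 2 concrete, here is a minimal sketch of how X-bar and R chart limits are computed. It assumes equal subgroups of size 4 and uses the commonly tabulated constants for that size (A2 = 0.729, D4 = 2.282); the function names and the data in the usage note are made up for illustration and do not come from this thread.

```python
from statistics import mean

# Commonly tabulated control chart constants for subgroup size n = 4.
# Assumption: every subgroup in this sketch has exactly 4 values.
A2 = 0.729
D4 = 2.282  # the lower R-chart constant D3 is 0 for n = 4


def xbar_r_limits(subgroups):
    """Compute X-bar and R chart limits from equal-size subgroups (n = 4)."""
    if any(len(g) != 4 for g in subgroups):
        raise ValueError("this sketch hard-codes the constants for n = 4")
    means = [mean(g) for g in subgroups]            # between-subgroup signal
    ranges = [max(g) - min(g) for g in subgroups]   # within-subgroup variation
    grand_mean = mean(means)
    r_bar = mean(ranges)
    return {
        "means": means,
        "ranges": ranges,
        "xbar_center": grand_mean,
        # The limits are built only from within-subgroup variation (r_bar).
        "xbar_lcl": grand_mean - A2 * r_bar,
        "xbar_ucl": grand_mean + A2 * r_bar,
        "r_center": r_bar,
        "r_ucl": D4 * r_bar,
    }


def out_of_limits(result):
    """Indices of subgroup means that fall outside the X-bar limits."""
    return [
        i
        for i, m in enumerate(result["means"])
        if not result["xbar_lcl"] <= m <= result["xbar_ucl"]
    ]


if __name__ == "__main__":
    # Hypothetical data: four subgroups of four measurements each.
    demo = [
        [10.1, 9.9, 10.0, 10.2],
        [10.0, 10.1, 9.8, 10.1],
        [10.9, 11.0, 10.8, 11.1],
        [9.9, 10.0, 10.2, 10.1],
    ]
    result = xbar_r_limits(demo)
    print(result["xbar_lcl"], result["xbar_ucl"], out_of_limits(result))
```

Because the limits come only from the within-subgroup ranges, subgroup means that land outside them suggest that the between-subgroup sources dominate, which is the comparison described above.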

With this in mind, I'm not sure what you are trying to do. It seems you are interested in plotting data and making it visible to many. This is not a bad idea, but it is only part of the intended purpose of SPC. SPC was intended to help you understand causal structure (analytics).

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

@statman, thank you for your questions! These questions are very useful.

By "causal structure", I assume you mean the identification of assignable or special causes. In our case, it can take a very long time to manufacture a single batch. Each batch is subject to a comprehensive testing program, resulting in a number of measurement results which we for now assume are representative of the true quality of each batch.

Personally, I don't think we have any reason yet to group the data for multiple batches into rational subgroups. We'd probably only see a lot of variation within each subgroup and have little data to plot along the horizontal axis. So we are really only thinking of individuals and moving range charts for now, since what we are trying to learn is what causes variation in quality from one batch to the next.
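For reference, a minimal sketch of how the limits of an individuals and moving range (I-MR) chart are usually computed, assuming one result per batch in manufacturing order. The constants 2.66 and 3.267 are the commonly tabulated values for moving ranges of two consecutive points; the function name and the numbers in the usage note are hypothetical.

```python
from statistics import mean


def imr_limits(values):
    """Compute I-MR chart limits from one measurement per batch, in order."""
    if len(values) < 2:
        raise ValueError("need at least two batches to form a moving range")
    # Moving range: absolute difference between consecutive batches.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    mr_bar = mean(moving_ranges)
    center = mean(values)
    return {
        "moving_ranges": moving_ranges,
        "i_center": center,
        "i_lcl": center - 2.66 * mr_bar,  # 2.66 = 3 / d2, with d2 = 1.128
        "i_ucl": center + 2.66 * mr_bar,
        "mr_center": mr_bar,
        "mr_ucl": 3.267 * mr_bar,         # D4 for a moving range of 2
    }


if __name__ == "__main__":
    # Hypothetical batch results, one value per batch.
    batch_results = [50.0, 52.0, 51.0, 53.0, 52.0]
    limits = imr_limits(batch_results)
    print(limits["i_lcl"], limits["i_center"], limits["i_ucl"])
```

With a single value per batch, every source of variation is folded into the batch-to-batch moving range, so this chart can indicate whether the process is consistent but not which source is responsible.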

It is also unclear whether we know and fully understand all sources of variation. Presumably, we'll start off by interviewing process engineers about what their thoughts on individual sources of variation are. Next, I am thinking we'd be trying to control these sources of variation individually to test whether we are able to obtain a more consistent process output, i.e., whether batches manufactured in sequence become more similar than they are now. As a result, we'll probably need to define different phases in these control charts, associated with the different initiatives targeted at controlling the individual sources of variation. I imagine that documenting these phases can be problematic: What should we call them, and is a specific nomenclature required? Where and how should we log and document them for posterity?
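One possible way to handle the phase question, offered only as a sketch: keep a small phase log next to the data and recompute individuals chart limits within each phase. The `Phase` record, its field names, and the example entries are assumptions for illustration; there is no required nomenclature implied here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Phase:
    """Hypothetical phase log entry; field names are illustrative only."""
    name: str         # short label shown on the chart
    first_batch: int  # zero-based index of the first batch in this phase
    note: str         # what changed and why, kept for posterity


def individuals_limits(values):
    """Return (lcl, center, ucl) for an individuals chart."""
    mr_bar = mean([abs(b - a) for a, b in zip(values, values[1:])])
    center = mean(values)
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar


def limits_by_phase(values, phases):
    """Compute individuals chart limits separately for each logged phase."""
    ordered = sorted(phases, key=lambda p: p.first_batch)
    result = {}
    for i, phase in enumerate(ordered):
        # A phase ends where the next one starts, or at the end of the data.
        end = ordered[i + 1].first_batch if i + 1 < len(ordered) else len(values)
        segment = values[phase.first_batch:end]
        if len(segment) < 2:
            raise ValueError(f"phase {phase.name!r} has fewer than two batches")
        result[phase.name] = individuals_limits(segment)
    return result


if __name__ == "__main__":
    # Hypothetical batch results and phase log.
    results = [50.0, 52.0, 51.0, 53.0, 48.0, 48.5, 48.0, 48.5]
    log = [
        Phase("Baseline", 0, "No deliberate changes"),
        Phase("Change 1", 4, "First source of variation brought under control"),
    ]
    for name, (lcl, center, ucl) in limits_by_phase(results, log).items():
        print(name, lcl, center, ucl)
```

Keeping the log as plain records (a table in the data warehouse would work equally well) means the reason for each phase travels with the chart instead of living in someone's memory.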

As things are now, we have no choice but to look at the data after the "special cause event" has happened, and, as mentioned above, we have little to no understanding of what the specific special causes are in each case.

Are these adequate answers to your questions, or am I overlooking something?

What else do you suggest we think about?

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

A few more thoughts for you. I'll keep my comments limited to your statements, "It is also unclear whether we know and fully understand all sources of variation. Presumably, we'll start off by interviewing process engineers about what their thoughts on individual sources of variation are. Next, I am thinking we'd be trying to control these sources of variation individually to test whether we are able to obtain a more consistent process output, i.e., whether batches manufactured in sequence become more similar than they are now."

Interviewing process engineers is a good start...but nothing can replace data. In my years working in chemical batch manufacturing, urban myths sometimes predominated because somebody (usually a senior person) 'said so when the batch went bad a while ago.' In my company we often had machine process control systems from which we could download the process data to SAS or JMP and then use modeling methods to try to ferret out causal structure. We used PLS and PCA a lot. It's been a few years since I left industry, and now with the Gen Reg and Functional Data Explorer capabilities in JMP Pro...boy, it almost makes me want to unretire! So I suggest using these methods if you have the opportunity.

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

Again, this is much appreciated. I had thought about mentioning the Functional Data Explorer, since it fits nicely with our scenario of long batch manufacturing times vs. one set of different QC tests characterizing the whole lot. Yet, the cost for JMP Pro is high enough to attract attention from management, making it difficult to defend the investment. We'll keep trying, of course.

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

And now you've discovered the biggest challenge you may encounter...management indifference. Far more frequently than I would have liked, I worked with managers who willingly accepted the costs of poor quality rather than paying to avoid it in the first place. All the topics and issues you raise in your original post pale in comparison. Good luck.

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

My responses:

"By "causal structure", I assume you mean the identification of assignable or special causes."

Not exactly. (Realize there are two models: the Shewhart model separates variation into assignable and random causes, and the Deming model separates it into special and common causes. While often treated as synonymous, they are not identical.) I mean understanding the relationships between input variables (X's) and response variables (Y's), symbolically represented by Y = f(X).

The process of understanding causal structure starts with hypotheses (these are likely to come from the engineers and scientists familiar with the process/product). These are hypotheses, not facts, and are often developed as a result of questioning the process, observation, data mining, etc. There should be a multitude of hypotheses. Once you have a set of hypotheses, you then get data to provide insight into those hypotheses. How you get the data is an important decision. If you are low on the knowledge continuum, the hypotheses might be fairly general. This usually suggests sampling (components of variation studies) to get clues as to where to focus your efforts. This is the intent of SPC.

Based on the little information provided, your process appears to be a batch process. Some questions I would have include: How capable and consistent is the measurement component? Is it adequate to discriminate sources of variation both within and between batch? How much variation is there within batch? Is the within batch variation consistent? Is there any systematic variation within batch (e.g., stratification)? How much variation is there between batch? Is that variation consistent? How do these sources of variation compare to each other (e.g., what is the rank order of these components)?

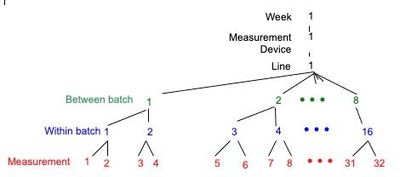

While Moving range charts can provide insight into consistency, they do very little to separate and assign the different components of variation. After all, over time, all of the x's change within subgroup in a moving range chart. If on the other hand your sampling consists of multiple batches, multiple samples within batch and multiple measures of the same sample, you have three layers of components that can be separated. Here is an example of a nested sampling tree:

I suggest using hypotheses to determine how many layers you want in your tree (i.e., how many components you want to separate). KISS, that is, Keep It Simple and Sequential. The purpose of the first sampling plan is to help develop a better, more specific sampling plan directed by the data (like perhaps an experiment).
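As a rough illustration of separating three layers (batch, sample within batch, measurement within sample), here is a sketch of the classical ANOVA method for a balanced nested design. It assumes every batch has the same number of samples and every sample the same number of repeat measurements; the function name and the numbers in the usage note are invented for the example, and negative estimates are simply truncated at zero.

```python
from statistics import mean


def nested_variance_components(data):
    """Estimate variance components for a balanced 3-level nested design.

    data[i][j][k] is measurement k of sample j from batch i.
    """
    b, s, m = len(data), len(data[0]), len(data[0][0])
    if b < 2 or s < 2 or m < 2:
        raise ValueError("need at least 2 batches, 2 samples, 2 measurements")
    if any(len(batch) != s or any(len(smp) != m for smp in batch) for batch in data):
        raise ValueError("this sketch requires a balanced design")

    sample_means = [[mean(smp) for smp in batch] for batch in data]
    batch_means = [mean(row) for row in sample_means]
    grand_mean = mean(batch_means)

    # Sums of squares for each layer of the tree.
    ss_meas = sum(
        (y - sample_means[i][j]) ** 2
        for i, batch in enumerate(data)
        for j, smp in enumerate(batch)
        for y in smp
    )
    ss_sample = m * sum(
        (sample_means[i][j] - batch_means[i]) ** 2
        for i in range(b)
        for j in range(s)
    )
    ss_batch = s * m * sum((bm - grand_mean) ** 2 for bm in batch_means)

    # Mean squares: sum of squares divided by its degrees of freedom.
    ms_meas = ss_meas / (b * s * (m - 1))
    ms_sample = ss_sample / (b * (s - 1))
    ms_batch = ss_batch / (b - 1)

    return {
        "measurement": ms_meas,
        "sample_within_batch": max(0.0, (ms_sample - ms_meas) / m),
        "batch": max(0.0, (ms_batch - ms_sample) / (s * m)),
    }


if __name__ == "__main__":
    # Hypothetical data: 2 batches x 2 samples x 2 repeat measurements.
    demo = [
        [[10.0, 12.0], [14.0, 16.0]],
        [[20.0, 22.0], [24.0, 26.0]],
    ]
    components = nested_variance_components(demo)
    for name, value in sorted(components.items(), key=lambda kv: -kv[1]):
        print(f"{name}: {value}")
```

Sorting the estimates gives the rank order of the components, which is the kind of clue the first sampling plan is meant to provide. A real study would need far more than two batches for the between-batch estimate to be trustworthy.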

I suggest you read the following to get a better idea of the methodology:

Wheeler, Donald, and Chambers, David (1992) “Understanding Statistical Process Control” SPC Press (ISBN 0-945320-13-2)

Wheeler, Donald (2015) “Rational Sampling”, Quality Digest

Wheeler, Donald (2015) “Rational Subgrouping”, Quality Digest

Shewhart, Walter A. (1931) “Economic Control of Quality of Manufactured Product”, D. Van Nostrand Co., NY

Re: Want advice on implementing Statistical Process Control in a maturing manufacturing organization

No worries, glad it makes sense. I know plenty of statisticians who do not understand the methodology.


- © 2025 JMP Statistical Discovery LLC. All Rights Reserved.
