
Discussions

Solve problems, and share tips and tricks with other JMP users. (JMP User Community)


Scripting and very large files

I have a script that imports many files and concatenates them.

The resulting file can be over 41 million rows and tens of gigabytes in size (I have hundreds of GB of free disk space and 128 GB of memory, using JMP 16.2).

I then have scripts to select rows and columns and so on. My question is not really about scripting per se, but about why JMP "hangs" when one script includes all the other scripts, while running each script in order works.

Everything works if I run each JSL file in order:

1. Concat_files.JSL

2. Change_Test_Instance_Name.JSL

3. Summarize_Test_Instance.JSL

versus a single script that runs each of the above for me, for example Run_all_scripts.JSL:

```jsl
Include( "$ADDIN_HOME(my_scripts)/Concat_files.jsl" );
Include( "$ADDIN_HOME(my_scripts)/Change_Test_Instance_Name.JSL" );
Include( "$ADDIN_HOME(my_scripts)/Summarize_Test_Instance.JSL" );
```

This just hangs my system. I have the log open, and I can tell it is stuck in the next script (the log shows a Show statement such as `Show( "Entering Change_Test_Instance name" );`).

I have tried putting Wait() statements between the includes (and within each of the scripts), but that still does not help. Is there anything else I should try?


Re: Scripting and very large files

Are you opening and closing report windows, perhaps hundreds or thousands of them? Not opening the windows at all might help a lot. (Possibly the OS isn't getting a chance to clean something up.)
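A minimal sketch of the "don't open the windows" idea, assuming the input tables are opened with `Open()`: the `Invisible` option loads a data table without creating a window. Big Class is a JMP sample table used here only as a stand-in for one of the real input files.

```jsl
Names Default To Here( 1 );
// Big Class is a JMP sample table standing in for one of the real input files
dt = Open( "$SAMPLE_DATA/Big Class.jmp", Invisible ); // no window is created
// Work with the table through its reference instead of Current Data Table()
nr = N Rows( dt );
Show( nr );
// Close without saving so nothing is left open
Close( dt, NoSave );
```

Holding the reference in `dt` also avoids depending on which table happens to be current when one script is included from another.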

Are you printing 1000s of lines to the JMP 16 log? There is a pref to use the old log format that might help.

Task Manager might provide some insight. Look at handles and GDI objects as well as the obvious memory and disk culprits.

Or check the disk light on the front of the computer, if you have one. It doesn't sound like you should be paging, that's a nice computer!

I'd like to know what was happening when you get an answer; if nothing above helps, tech support can help too.


Re: Scripting and very large files

I let the script run to see whether it was stuck. It appears it was running, but it takes hours rather than minutes when run from within another script.

I only have show statements in the log.

Here is the log output for running the scripts from within a script. (The "Changing to one test instance" step takes 3.65 hours, versus 3.65 minutes if I run the same script myself on the same file.) All 12 cores (CPU0 to CPU11) ran at about 20% for the whole 3.65 hours.

Here are the logs, and a snippet of the JSL is below.

```
N Items(files_open) = 12;
"Files are Concatenated ";
As Date(Today()) = 14Feb2022:13:00:32;
start_time - As Date(Today()) = -492;
"Saving Digital_shmoo_all";
As Date(Today()) = 14Feb2022:13:00:54;
"Done Saving Digital_shmoo_all";
As Date(Today()) = 14Feb2022:13:01:24;
"Changing to one test instance";
N Rows(dt) = 41929065;
"In Reduce_Test_Instance";
As Date(Today()) = 14Feb2022:13:01:39;
"Reducing Test Instances Ended";
As Date(Today()) = 14Feb2022:16:41:13;
start_time - As Date(Today()) = -13174;
"Transposing";
"File has been transposed";
As Date(Today()) = 14Feb2022:16:42:23;
start_time - As Date(Today()) = -69;
```

If I run the reduce test instance script by itself with the same large file, here is the log:

```
As Date(Today()) = 14Feb2022:13:01:24;
"Changing to one test instance";
N Rows(dt) = 41929065;
"In Reduce_Test_Instance";
As Date(Today()) = 14Feb2022:13:01:39;
"Reducing Test Instances Ended";
As Date(Today()) = 14Feb2022:16:41:13;
start_time - As Date(Today()) = -13174;
"Transposing";
"File has been transposed";
As Date(Today()) = 14Feb2022:16:42:23;
start_time - As Date(Today()) = -69;
```

The basic idea of this script is to find names that start with "Char_Char" and change them so they no longer have "Char_Char". I do this by creating a summary table, selecting the rows in the summary table that have "Char_Char", getting the rows in the main table that are now selected, and then changing the name in the main table. Here is a snippet of the slow script.

One_Test_Instance.JSL

```jsl
Names Default To Here( 1 );
dt = Current Data Table();
Show( N Rows( dt ), "In Reduce_Test_Instance" );
Show( As Date( Today() ) );
start_time = As Date( Today() );
dt << Clear Select;
dt << Clear Column Selection;
Wait();
// Summary table of the distinct Test_Instance values (linked to dt);
// keep the returned reference rather than relying on Current Data Table()
dt_summary = dt << Summary(
	Group( :Test_Instance ),
	Freq( "None" ),
	Weight( "None" ),
	Output Table Name( "Summary_of_Table" )
);
Wait();
// All distinct names as a list
Test_names = dt_summary:Test_Instance << Get Values;
Wait();
// Keep only names containing "CHAR_char" (iterate backwards so removals don't skip items)
For( i = N Items( Test_names ), i > 0, i--,
	If( !Contains( Test_names[i], "CHAR_char" ),
		Remove From( Test_names, i )
	)
);
// Selecting rows in the linked summary table selects the matching rows in dt
dt_summary << Select Where( Contains( Test_names, :Test_Instance ) );
Wait();
aa = dt << Get Selected Rows; // Get selected rows in main table
Wait();
Close( dt_summary, NoSave );
If( N Rows( aa ) > 0,
	dt:Test_Instance[aa] = "CHAR_char_meas_TI";
	dt:Setup[aa] = "meas_TI"; // Change names in main table
);
dt << Clear Select;
dt << Clear Column Selection;
Wait();
```
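The summary-table round trip described above could also be expressed as one row query on the main table. This is a swapped-in technique, not the poster's method, and only a sketch: Big Class's `:name` column stands in for `:Test_Instance`, and the prefix "J" stands in for "CHAR_char". `Get Rows Where()` evaluates the condition once per row of the main table, so whether it beats the summary-table route on 41 million rows would need timing.

```jsl
Names Default To Here( 1 );
// Hypothetical stand-ins: Big Class's :name column plays the role of :Test_Instance,
// and the prefix "J" plays the role of "CHAR_char"
dt = Open( "$SAMPLE_DATA/Big Class.jmp", Invisible );
// One pass over the main table returns the matching row numbers as a matrix
rows = dt << Get Rows Where( Starts With( :name, "J" ) );
nMatched = N Rows( rows );
If( nMatched > 0,
	// Same subscripted assignment the script above uses
	dt:name[rows] = "J_relabeled"
);
// Record one relabeled value before closing the table
firstLabel = If( nMatched > 0, dt:name[rows[1]], "" );
Show( nMatched, firstLabel );
Close( dt, NoSave );
```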


Re: Scripting and very large files

Looks like I pasted the same log twice.

Here is the log when running the script alone: 200 s, or about 3.33 minutes.

```
N Rows(dt) = 41929065;
"In Reduce_Test_Instance";
As Date(Today()) = 15Feb2022:10:11:57;
"Reducing Test Instances Ended";
As Date(Today()) = 15Feb2022:10:15:17;
start_time - As Date(Today()) = -200;
```


Re: Scripting and very large files

You might be able to run the code with the JSL debugger/profiler, or just instrument it with finer-grained time_start..time_end like you are doing. My guess is the code has an N^2 behavior (4X time for doubling the data) for some reason with the includes. Maybe a namespace issue with some name that collides with a name in the data table.
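The "4X time for doubling the data" rule of thumb is what quadratic cost predicts. A sketch, assuming the list length and the number of rows grow together as $N$ and that a found item sits, on average, halfway down the list:

$$
T(N) \propto N \cdot \frac{N}{2} = \frac{N^2}{2}, \qquad \frac{T(2N)}{T(N)} = \frac{(2N)^2/2}{N^2/2} = 4
$$

With $N = 10^6$, that is about $5 \times 10^{11}$ string comparisons.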

One place that could be bad is this line:

```jsl
dt_summary << Select Where( Contains( Test_names, :Test_Instance ) );
```

It is hard to know without timing it. If Test_names might have 1e6 items and dt_summary might have 1e6 rows, then on average 500,000 test names would be examined for each of the 1e6 rows. Or maybe there are only 10 or so, and it isn't an issue. You might want to rewrite it, approximately like this:

```jsl
// untested. combine two loops and use an aa rather than a list for lookup
Test_names = Associative Array();
For Each Row(
	If( Contains( dt_summary:Test_Instance, "CHAR_char" ),
		Test_names[dt_summary:Test_Instance] = 1
	)
);
dt_summary << Select Where( Test_names << Contains( :Test_Instance ) );
```

The associative array will be much faster if the list has several hundred items.
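To see the list-versus-associative-array difference on a given machine, a small benchmark along these lines could help. It is a sketch only: the key format `"CHAR_char_" || Char( i )` and the size `n` are made up for illustration, and timings will vary. `Contains()` on a list scans it item by item, while `<< Contains` on an associative array is a keyed lookup.

```jsl
Names Default To Here( 1 );
n = 5000; // hypothetical number of distinct test names
keyList = {};
keyAA = Associative Array();
// Build the same made-up keys in both a list and an associative array
For( i = 1, i <= n, i++,
	k = "CHAR_char_" || Char( i );
	Insert Into( keyList, k );
	keyAA[k] = 1;
);
// Linear search: Contains() on a list returns the item's position, or 0
t0 = HP Time();
hitsList = 0;
For( i = 1, i <= n, i++,
	If( Contains( keyList, "CHAR_char_" || Char( i ) ), hitsList++ )
);
tList = (HP Time() - t0) / 1e6; // HP Time() counts microseconds
// Keyed lookup: << Contains on an associative array returns 1 or 0
t0 = HP Time();
hitsAA = 0;
For( i = 1, i <= n, i++,
	If( keyAA << Contains( "CHAR_char_" || Char( i ) ), hitsAA++ )
);
tAA = (HP Time() - t0) / 1e6;
Show( hitsList, tList, hitsAA, tAA );
```

Doubling `n` should roughly quadruple `tList`, while `tAA` should grow much more slowly.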

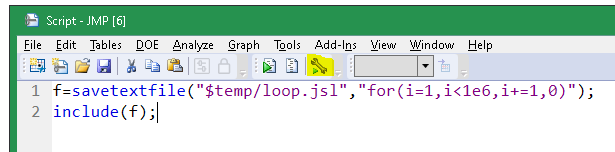

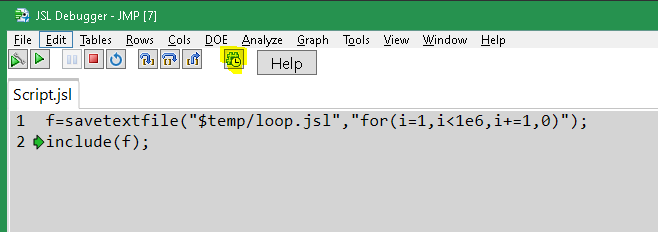

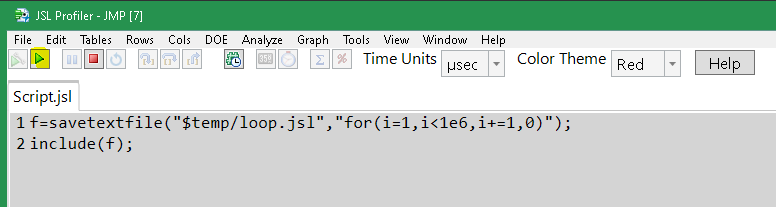

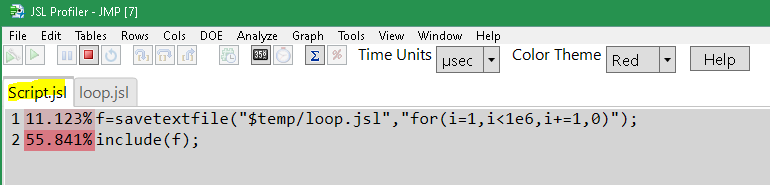

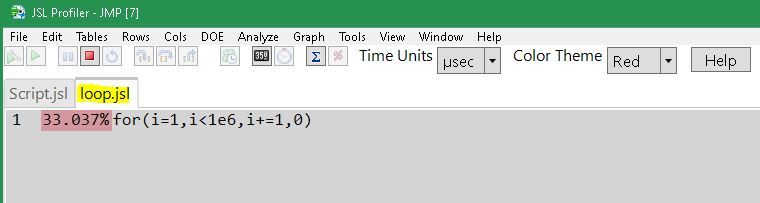

If you want to use the JSL profiler, don't do the 3 hour run for your first profiling experience.Try a small program, using an include, and make sure you play with these buttons:

you might be able to use the blue pause button or wait for it to end, then examine the hot spots:
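If the profiler is not an option, the finer-grained instrumentation and the namespace idea suggested earlier can be tried together. A sketch, assuming one wants to time a single include and run it in its own namespace: `HP Time()` returns a microsecond counter, and `Include()` accepts `<<New Context` and `<<Names Default To Here`. The temporary file `step_demo.jsl` and its contents are hypothetical stand-ins for one of the real scripts.

```jsl
Names Default To Here( 1 );
// Hypothetical stand-in for one of the real scripts, written to a temp file
path = "$TEMP/step_demo.jsl";
Save Text File( path, "Names Default To Here( 1 ); x = 0; For( i = 1, i <= 100000, i++, x += i );" );
// Time the include; HP Time() counts microseconds
t0 = HP Time();
// <<New Context gives the included file its own namespace, so names it
// defines cannot collide with names in this script
Include( path, <<New Context, <<Names Default To Here );
elapsed = (HP Time() - t0) / 1e6;
Show( elapsed );
```

Wrapping each `Include()` in Run_all_scripts.JSL this way would show which step slows down, and whether isolating its namespace changes anything.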


Re: Scripting and very large files

Sorry for the late reply (busy with work).

Thanks for the ideas. I have never used the debugger.

I will look at the namespace idea as well. I have also found that if the main data table is already open (already concatenated) and I then run the script with the Include statement, it runs as fast as when I run the script directly (no Include). So it seems to be something about the main data table having been in memory for a while, versus concatenating and then immediately running the included script.


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
