JMP User Community: Discussions

Reason against using Custom Design DOE for everything

Hi All,

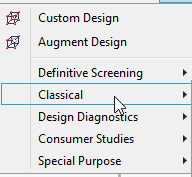

I need someone to play devil's advocate for me. There's a lot of different DOE functionality in JMP. For example, there are over 10 different traditional DOE designs in the DOE drop-down menu. And there's also the Custom Design function.

This might be a naive question, but is there any argument for using any other DOE design instead of a Custom Design? Can any of these designs do anything that Custom Design cannot?

Thanks,

Donald

Accepted Solutions

Re: Reason against using Custom Design DOE for everything

Hi Donald,

I would be interested to know why you ask this question. Is it for your own understanding? Or are you thinking about how to advise your colleagues?

For most scientists and engineers, I recommend that they focus on Definitive Screening and Custom Design.

Why Definitive Screening? In many situations a Definitive Screening Design (DSD) will be the most efficient and easy-to-use experiment. I would say that the majority of experiments by scientists and engineers are screening experiments - they have 5 or more continuous factors and they don't know that all factors are important. DSDs are great in these cases. If a small number of effects are found to be important you can obtain a useful model for optimisation. Even if all factors are important, a DSD will have been a good first step in the experimentation process and you can follow up with Augment Design. DSD in JMP has a simple-to-use fitting method that makes it very easy to analyse the results. DSDs can't be recreated using Custom Design, hence why it is a separate platform in JMP.
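To make the run-count argument concrete, here is a minimal sketch of how a DSD for six continuous factors can be laid out in 13 runs. It assumes the conference-matrix construction described in the DSD literature (a conference matrix, its mirror image, and one centre run). The function names are hypothetical and this is not JMP's implementation; the design JMP actually generates may also include extra runs.

```python
# Illustrative sketch only: a 6-factor definitive screening design built
# from a Paley conference matrix. Function names are hypothetical.

def conference_matrix_6():
    """Return a 6x6 conference matrix: zero diagonal, +/-1 elsewhere."""
    q = 5
    residues = {(k * k) % q for k in range(1, q)}  # nonzero squares mod 5

    def chi(a):
        # Quadratic character mod q: 0 for 0, +1 for squares, -1 otherwise.
        a %= q
        if a == 0:
            return 0
        return 1 if a in residues else -1

    first_row = [0] + [1] * q
    rest = [[1] + [chi(i - j) for j in range(q)] for i in range(q)]
    return [first_row] + rest


def definitive_screening_design(conf):
    """Stack the matrix, its fold-over (sign-flipped copy), and a centre run."""
    m = len(conf)
    fold_over = [[-x for x in row] for row in conf]
    centre = [[0] * m]
    return conf + fold_over + centre


def column(design, j):
    return [row[j] for row in design]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


dsd = definitive_screening_design(conference_matrix_6())
n_runs, n_factors = len(dsd), len(dsd[0])

# Main-effect columns are mutually orthogonal.
main_effects_orthogonal = all(
    dot(column(dsd, i), column(dsd, j)) == 0
    for i in range(n_factors)
    for j in range(i + 1, n_factors)
)

# Main effects are also orthogonal to every pure quadratic column.
main_vs_quadratic_orthogonal = all(
    dot(column(dsd, i), [x * x for x in column(dsd, j)]) == 0
    for i in range(n_factors)
    for j in range(n_factors)
)

print(n_runs, n_factors)
print(main_effects_orthogonal, main_vs_quadratic_orthogonal)
```

Each factor is run at three levels (-1, 0, +1), which is what makes it possible to estimate curvature for the few factors that turn out to matter.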

Custom Design covers most other experiment situations.

The options under the Classical menu are all tried and tested, and have been used successfully many times. For the most part though they can be recreated using Custom Design because they are special cases of optimal designs. I would only recommend the Classical options to someone that has been schooled in the older methods of DOE, so that they can recreate the designs that they have learned. I don't know if you could recreate Taguchi designs using Custom Design, but I don't know that you would want to - I have never come across anyone using JMP for Taguchi designs.

The options under Consumer Studies and Special Purpose all have their own uses. They can definitely do things that Custom Design cannot. However, they are specialised or niche situations that will be relevant for a smaller subgroup of experimenters. For example, space-filling designs for computer experiments on very nonlinear systems in engineering. Or Max-Diff designs for studies to understand consumer preferences.

I hope that helps,

Phil

Re: Reason against using Custom Design DOE for everything

Hi Phil,

Thanks for the detailed reply. I'm a formulation scientist and want to try and help my colleagues get into JMP more. Most of our DOEs would be mixture designs.

I wanted to sense-check whether recommending Custom Design as a go-to made sense or not. It looks like it does, but DSDs should also be carefully considered for screening purposes.

I'd appreciate it if anyone else adds an opinion as well. The more the merrier.

Thanks

Donald

Re: Reason against using Custom Design DOE for everything

For mixture designs, I would certainly recommend Custom Designs. In fact, you will not be able to use a Definitive Screening Design for a mixture scenario.

I do agree with Phil's recommendations. Each design type has advantages and trade-offs depending on the situation. For example, the power of a Definitive Screening Design (DSD) is slightly lower than what you would get from a Custom Design for a main-effects-only model, and the prediction variance of a DSD is not as good as that of an I-optimal custom design. The advantage of a DSD, though, is that a single design can be used for optimization as well as screening.

The classical designs offer orthogonality which is not always guaranteed (but achieved most of the time for the same number of runs) with a custom design.
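As a rough illustration of what orthogonality means here, the sketch below checks whether every pair of factor columns in a coded (-1/+1) design has a zero dot product. The helper names and the three small designs are made up for the example; the same check could be applied to any design table exported from JMP.

```python
from itertools import combinations, product


def full_factorial(k):
    """All 2**k runs of a two-level design in coded units (-1, +1)."""
    return [list(run) for run in product((-1, 1), repeat=k)]


def is_orthogonal(design):
    """True if every pair of factor columns has a zero dot product."""
    cols = list(zip(*design))
    return all(
        sum(a * b for a, b in zip(u, v)) == 0
        for u, v in combinations(cols, 2)
    )


# Classical 2^3 full factorial: 8 runs.
full = full_factorial(3)

# Classical 2^(3-1) half fraction: 4 runs, third factor set to C = A*B.
half = [[a, b, a * b] for a, b in product((-1, 1), repeat=2)]

# An arbitrary 4-run subset of the full factorial, for contrast.
arbitrary = full[:3] + [full[7]]

print(is_orthogonal(full))
print(is_orthogonal(half))
print(is_orthogonal(arbitrary))
```

The two classical designs pass the check by construction, while the arbitrary subset does not, which is the guarantee being described above.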

Essentially, each design has an advantage, which is how/why they were created in the first place. In some cases those advantages may not be valuable to you. But that is exactly why JMP offers them all. You may have a situation where you want that particular advantage.

Re: Reason against using Custom Design DOE for everything

Here are my thoughts:

1. The selection of which experiments to run is, IMHO, a personal choice. You must understand the situation (context) and the questions you are trying to answer, and weigh the information you could get from the experimental strategy against the resources required. In some cases, I would be willing to sacrifice efficiency for effectiveness.

2. Convincing engineers, scientists and managers to run ANY experiment can be the biggest battle. It can be more challenging if they think of the design matrix as a "black box" and they don't understand how it works. It is incredible how many folks are impacted by the OFAT (one-factor-at-a-time) mentality. It is, after all, so fundamentally intuitive... Classical orthogonal designs are easy to explain and provide comfort for de-aliasing if necessary, particularly for those with no statistical background.

3. The focus of design selection is biased toward the factor effects. There is not enough emphasis on strategies to handle noise. Short-term noise (e.g., measurement error, within sample, between sample) can be handled via repetition or nesting. Long-term noise (e.g., ambient conditions, lot-to-lot raw materials, operator technique) can be handled via RCBD, BIB and split-plot designs. I use cross-product arrays in robust design situations and I have yet to find an easy way to set these up using the Custom Design platform.

4. "The purpose of the first experiment is to design a better experiment". We experiment to gain insight into, and validate or invalidate, our understanding of causal structure. The likelihood that we have all of the important factors, tested at optimal levels, in our first experiment is questionable. Iteration is how we learn. The first experiment is merely the start of the journey.
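For readers unfamiliar with the cross-product arrays mentioned in point 3, here is a minimal sketch of the layout: every run of an inner array of control factors is repeated at every run of an outer array of noise factors. The factor names (A, B, C, N1, N2) and the choice of arrays are assumptions made for the example, not a recommendation and not a JMP feature.

```python
from itertools import product

control_factors = ("A", "B", "C")  # hypothetical control factors
noise_factors = ("N1", "N2")       # hypothetical noise factors

# Inner array: 2^(3-1) half fraction of the control factors, with C = A*B.
inner = [(a, b, a * b) for a, b in product((-1, 1), repeat=2)]

# Outer array: full factorial in the noise factors.
outer = list(product((-1, 1), repeat=len(noise_factors)))

# Crossed array: each control setting is run under every noise condition.
crossed = [
    dict(zip(control_factors + noise_factors, c + n))
    for c in inner
    for n in outer
]

print(len(inner), len(outer), len(crossed))
for run in crossed[:4]:
    print(run)
```

With 4 inner runs and 4 outer runs this gives 16 runs in total, and the responses for each control setting can then be summarised across its four noise conditions.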
