Discussions

JMP User Community: Solve problems, and share tips and tricks with other JMP users.

Predictor screener vs. DOE?

Just curious whether Predictor Screening can be used to identify important variables the way a screening DOE does, so that you could basically go straight into optimization? I'm also curious if/how people combine Predictor Screening and DOE (if at all), and what recommendations or best practices people have.

Accepted Solutions

Re: Predictor screener vs. DOE?

I'm not sure I completely understand your question, but here are my thoughts:

What questions you can answer, the information revealed by the data, the conclusions you draw, the tools you use for analysis, your confidence in extrapolating the results, and the appropriate reaction to the data all depend entirely on how the data were acquired, and on how representative and comprehensive the data set is.

That being said, you did not indicate where you are getting the data for predictor screening. If it is historical data, you may not have the proper context for it. You could call this data mining. There is nothing wrong with that, but data mining is done to develop hypotheses that are then tested in an experiment (the scientific method is a continuous cycle of induction and deduction). For example, your historical data may be affected by influential variables that were not recorded but were changing during data collection. There may also have been measurement errors that were never estimated. There are too many unknowns.
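To make the point about unrecorded variables concrete, here is a hypothetical simulation in plain Python (every variable name and number is invented for illustration): an unrecorded ambient temperature drifts over 30 days, operators adjust line speed to follow it, and yield depends only on temperature.

```python
# Hypothetical historical data: temperature is NOT recorded in the table,
# line speed is recorded, and yield depends on temperature only.

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

days = range(30)
temperature = [20 + 0.5 * d for d in days]  # drifting, unrecorded
# Operators follow the temperature drift, plus a small day-to-day adjustment.
line_speed = [100 + 2 * t + (1 if d % 2 else -1) for d, t in zip(days, temperature)]
yield_pct = [50 + 1.5 * t for t in temperature]  # no dependence on line speed

r = pearson(line_speed, yield_pct)
print(f"correlation(line_speed, yield) = {r:.3f}")
```

Line speed ends up strongly correlated with yield even though it has no effect on it, so a screening of this table would likely rank it as important. Only an experiment that varies line speed independently of temperature could reveal that.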

Hopefully the DOE is well planned. You should have identified the factors to be manipulated (and why you want to manipulate them) as well as the factors you will not be manipulating (noise). You likely have some model in mind to evaluate with your experiment. You have also studied the measurement system, or plan to nest measurement within the treatments.

Re: Predictor screener vs. DOE?

Hi @mjz5448,

There is no single preferred option for handling time in experiments; it depends on how critical you judge time to be as a noise factor in your experiment, and on its "scope"/sensitivity. For example, if you expect day-to-day variability and can run several experiments per day, a blocking factor may be enough. If there may be a time trend within the day (due to varying temperature, humidity, lighting, etc.), then using time as a covariate may help you create a robust design.

Using time as a covariate to create a design that is robust to a time trend is explained in the book "Optimal Design of Experiments: A Case Study Approach" by Peter Goos and Bradley Jones (Chapter 9: Experimental Design in the Presence of Covariates).

There is already a topic dealing with a time covariate here: https://community.jmp.com/t5/Discussions/Covariates-in-defined-order-in-custom-design/m-p/596777/hig...

Bradley Jones also wrote a blog post about using time/order as a covariate: "How to create an experiment design that is robust to a linear time trend in the response"

It is also covered in JMP Help: https://www.jmp.com/support/help/en/17.2/index.shtml#page/jmp/design-with-a-linear-time-trend.shtml
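The linear-time-trend designs referenced above aim to keep factor effects uncorrelated with run order. As a rough illustration of that idea only (a brute-force search in plain Python, not how JMP's Custom Design constructs these designs; the function names are hypothetical), the sketch below looks for a run order of a 2^3 full factorial in which every main-effect column is orthogonal to a linear trend over the run positions:

```python
from itertools import permutations, product

def is_trend_free(order, n_factors):
    """True if every factor column is orthogonal to a linear trend over run positions."""
    n = len(order)
    # Centered integer trend (-7, -5, ..., 7 for 8 runs); integers keep the check exact.
    trend = [2 * i - (n - 1) for i in range(n)]
    return all(
        sum(t * run[j] for t, run in zip(trend, order)) == 0
        for j in range(n_factors)
    )

def find_trend_free_order(n_factors):
    """Return the first run order of the 2^k full factorial (levels -1/+1)
    whose main effects are all orthogonal to a linear time trend, or None."""
    runs = list(product([-1, 1], repeat=n_factors))
    for order in permutations(runs):
        if is_trend_free(order, n_factors):
            return list(order)
    return None

order = find_trend_free_order(3)
for position, run in enumerate(order, start=1):
    print(position, run)
```

Brute force is only practical for tiny designs (8! = 40,320 orders here). For a 2^2 factorial in 4 runs it returns None: with only four runs, the two main-effect columns cannot both be orthogonal to the trend.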

Hope this answer will help you,

PS: None of the discussions you have started has received an "Accepted Solution". If some answers in your posts solved your question/problem, please mark them with "Accept as Solution". This is a good sign of acknowledgement for the people who take the time to answer you, and it helps other JMP users find solutions to similar problems more easily.

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: Predictor screener vs. DOE?

Hi @mjz5448,

As stated by @statman, Predictor Screening is a nice data mining tool for exploring potentially important factors in historical data, but it won't replace a proper study done with DoE and clear objectives.

Predictor Screening is a method based on the Random Forest algorithm that helps you identify important variables for your response(s) in historical data, by calculating feature importances. Depending on the representativeness of your data, the coverage of the experimental space, missing values, the presence of outliers, interactions, and correlations or multicollinearity between your factors, this platform may have some shortcomings and many unknowns, and it shouldn't be trusted blindly.

- The analysis and insights you get are limited to the historical experimental space (which might be too narrow and/or not representative enough for a good understanding of the system). You can see this as production experimental space (historical data) vs. R&D (DoE): in production, only small variations of the factors are allowed (and some factors are fixed, so you won't be able to determine their importance) in order to make robust, quality products, whereas in R&D the objective is different: explore an experimental space and optimize the factors of a system, using larger ranges for the factors considered.

- The Predictor screening platform doesn't give you a model (unlike the Bootstrap Forest available in JMP Pro), only feature/factor importances, so you don't have access to individual trees and branches, which could help identify interactions between factors.

- Since you don't know the quality of the underlying model (through R², RMSE or other metrics relevant to your objectives), you don't know how much variability it explains (or how accurate it is), nor whether the feature importance calculations are relevant/adequate for your problem.

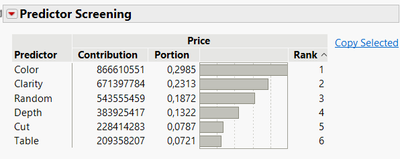

- Since all factors are ranked, the threshold/limit between important and non-important factors is not trivial. To help you with, you can add a random factor (with random values). An example of this technique is shown here with the Diamonds Dataset from JMP with Price as response, adding a random factor and omitting Carat Weight as possible input (since it is a very important predictor, so I wanted to show you an example where it's hard to sort out the other predictors) :

- This platform doesn't give you any indication of possible correlations or multicollinearity between factors. You can use the Multivariate platform to explore correlations between inputs, between outputs, and between inputs and outputs. You can also fit auxiliary ("dummy") regression models to calculate VIF scores for your factors, in order to spot multicollinearity.

- High-cardinality categorical factors may also bias the feature importance calculations. The higher the cardinality (number of levels), the higher the bias: the data are split into many small groups that the model tries to learn individually, so it fails to generalize (to "understand the logic"). This increases the risk of overfitting and gives the feature an inflated importance.
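Two of the points above (a random probe as a cut-off, and bias from high-cardinality categoricals) can be illustrated with a small plain-Python sketch. It does not reproduce Predictor Screening's Random Forest importance calculation; as a simpler stand-in it uses the in-sample share of variance explained by group means (eta squared), which for a purely random categorical with k levels and n rows averages about (k - 1)/(n - 1). The helper `factors_above_probe` is hypothetical and works on whatever importance values you export.

```python
import random

def eta_squared(labels, y):
    """In-sample share of the variance of y explained by the group means of `labels`
    (between-group sum of squares / total sum of squares)."""
    grand_mean = sum(y) / len(y)
    groups = {}
    for label, value in zip(labels, y):
        groups.setdefault(label, []).append(value)
    ss_between = sum(
        len(vals) * (sum(vals) / len(vals) - grand_mean) ** 2 for vals in groups.values()
    )
    ss_total = sum((value - grand_mean) ** 2 for value in y)
    return ss_between / ss_total

def factors_above_probe(importances, probe):
    """Factors whose importance is strictly greater than the random probe's, most important first."""
    cutoff = importances[probe]
    kept = [name for name, imp in importances.items() if name != probe and imp > cutoff]
    return sorted(kept, key=lambda name: importances[name], reverse=True)

rng = random.Random(42)
n_rows = 200
y = [rng.gauss(0, 1) for _ in range(n_rows)]  # pure-noise response

# Random categorical "factors" with more and more levels: none is related to y.
for n_levels in (2, 11, 50):
    labels = [rng.randrange(n_levels) for _ in range(n_rows)]
    print(f"{n_levels:>2} levels: eta^2 = {eta_squared(labels, y):.3f}")
```

For instance, with invented importances `{"X1": 0.30, "X2": 0.25, "X3": 0.10, "Random": 0.12}`, `factors_above_probe(importances, "Random")` returns `["X1", "X2"]`.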

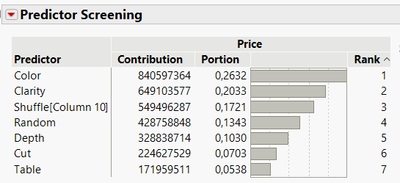

An example here with the same dataset, same conditions but adding a random categorical factors with 11 levels (from A to K, named "Shuffle[Column10]). This categorical random feature with high cardinality is ranked higher than the random numerical variable seen before :
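For the multicollinearity check mentioned in the list above: the VIF of factor j is 1 / (1 - R_j²), where R_j² comes from regressing factor j on all the other factors. An equivalent formula is the j-th diagonal element of the inverse of the factors' correlation matrix, which the dependency-free Python sketch below uses (small tables only, columns must not be constant; the function names are illustrative, not a JMP API):

```python
def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equal-length numeric columns."""
    n = len(columns[0])
    centered = []
    for col in columns:
        mean = sum(col) / n
        centered.append([v - mean for v in col])
    norms = [sum(v * v for v in c) ** 0.5 for c in centered]
    p = len(columns)
    return [[sum(a * b for a, b in zip(centered[i], centered[j])) / (norms[i] * norms[j])
             for j in range(p)] for i in range(p)]

def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting (small matrices only)."""
    p = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(p)] for i, row in enumerate(matrix)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular correlation matrix: perfect multicollinearity")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[p:] for row in aug]

def vif(columns):
    """Variance inflation factors: diagonal of the inverse correlation matrix,
    equivalent to 1 / (1 - R_j^2) from regressing factor j on the others."""
    inverse = invert(correlation_matrix(columns))
    return [inverse[j][j] for j in range(len(columns))]

x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 5]  # correlation with x1 is 0.8
print([round(v, 2) for v in vif([x1, x2])])  # [2.78, 2.78], i.e. 1 / (1 - 0.8**2)
```

Values near 1 mean a factor is nearly uncorrelated with the others; the usual warning levels of 5 or 10 are rules of thumb, not hard limits.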

DoE has a broader scope than an exploratory data analysis/data mining technique; it is more of an end-to-end methodology: from defining your objectives, responses and factors in order to create the most informative dataset (adapted to your experimental budget), to analyzing the results and refining the model so you know how to proceed next or reach your objectives. Always use domain expertise (along with other tools and visualizations if you have historical data) to guide the selection of factors for your DoE.

I would encourage you to use the Augment Design platform if you have historical data that is relevant to your study (in terms of factors): you can still expand or reduce the factors' ranges if needed, and you have a lot of flexibility in how you augment your initial historical dataset into a bigger design. As a good practice (particularly relevant when augmenting historical data into a DoE), make sure to check the "Group new runs into separate block" option in this platform. Using Augment Design can save some experimental budget in the initial screening phase, which you can then allocate to the optimization phase (by augmenting the DoE a second time, either with a Space-Filling method in a narrower experimental space or by defining a more precise model with higher-order terms for the relevant factors only).

I hope this complementary answer will help you understand the differences,

Re: Predictor screener vs. DOE?

Thank you both for commenting.

statman: you are correct that I'm looking at historical data and trying to see whether any variables affect my one response variable.

Victor_G: thanks for the advice about the random variable. I actually did include one, to set a cut-off point (so to speak) between important and unimportant factors.

One other question I couldn't find documentation on: is it okay to include "Date" as a variable in predictor screening? Or should I instead look at a time-series analysis with "Date" separately from my predictor screening? Is there any use in including Date in predictor screening?

Thanks again!

Re: Predictor screener vs. DOE?

Technically, it is possible to include a time variable like Date in the Predictor Screening platform, and Random Forests can be used for time series analysis.

However, based on what you described, I would consider comparing the results of this platform with and without the time variable.

I fear that your experimental variables might be time-sensitive, and that including time as a possible predictor may hide other interesting predictors (time can act as a proxy for factors that drift along with it and absorb their importance).

Depending on the format and data type used for the date (continuous or discrete?), you might also bias the platform's outcomes by introducing a high-cardinality predictor.

Finally, in an experimental context, time is not a factor you can fully and independently vary in your DoE (unlike other factors). You may be able to create a time-robust design by adding time as a covariate (and including it in the model as main and quadratic effects) or as a blocking factor, but you cannot set it as you wish like the other factors.
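To make "time as a covariate with main and quadratic effects" concrete, here is a minimal Python sketch, assuming each run's time is known as a number (for example hours since the study started). It codes time to [-1, 1], as is usual for continuous DoE factors, and adds a centered quadratic column; the function name and the centering choice are illustrative assumptions, not JMP's internal coding:

```python
def time_covariate_columns(times):
    """Code run times to [-1, 1] and return (linear, quadratic) covariate columns.

    `times` is assumed to be numeric (e.g. hours since the study started).
    The quadratic column is centered so it is orthogonal to the intercept column.
    """
    lo, hi = min(times), max(times)
    if hi == lo:
        raise ValueError("times must not all be equal")
    linear = [2 * (t - lo) / (hi - lo) - 1 for t in times]
    squares = [x * x for x in linear]
    mean_square = sum(squares) / len(squares)
    quadratic = [s - mean_square for s in squares]
    return linear, quadratic

# Hypothetical run times (hours) for five runs.
linear, quadratic = time_covariate_columns([0, 1, 2, 3, 4])
print(linear)     # [-1.0, -0.5, 0.0, 0.5, 1.0]
print(quadratic)  # [0.5, -0.25, -0.5, -0.25, 0.5]
```

These two columns would then enter the model next to the factor terms; unlike the factors, their values are observed (or dictated by the schedule), not chosen.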

So I would rather analyze the relationships between the response and the factors with the Predictor Screening platform, and then separately analyze how the factors depend on time (through visualizations, time series analysis and/or the Functional Data Explorer), but probably not include time directly, in the same way as the other factors, in the exploratory analysis.
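As a quick complement to those tools, a check of which factors drift with time can be sketched in plain Python. It assumes dates are `datetime.date` objects and flags factors by Pearson correlation with an arbitrary |r| > 0.5 threshold; the function name, threshold, and example data are all illustrative assumptions, and the visual and time series tools mentioned above give a much richer view.

```python
from datetime import date

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0  # constant column: correlation undefined, treated as 0 here
    return sxy / (sxx * syy) ** 0.5

def time_sensitive_factors(dates, factors, threshold=0.5):
    """Return {factor name: r} for factors whose |Pearson r| with time exceeds `threshold`.

    Dates are converted to day numbers with date.toordinal(), so time enters as one
    continuous column instead of a high-cardinality categorical.
    """
    day_numbers = [d.toordinal() for d in dates]
    flagged = {}
    for name, values in factors.items():
        r = pearson(day_numbers, values)
        if abs(r) > threshold:
            flagged[name] = r
    return flagged

# Hypothetical example: one factor drifts over ten days, the other just alternates.
dates = [date(2024, 1, day) for day in range(1, 11)]
factors = {
    "drifting_factor": [10.0 + 0.3 * i for i in range(10)],
    "alternating_factor": [1.0, 2.0] * 5,
}
print(time_sensitive_factors(dates, factors))
```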

Re: Predictor screener vs. DOE?

Thank you Victor_G.

Are there preferences for how you include time in your DOE, i.e. including it as a covariate vs. blocking your runs by time? Date/time always seems to play a role in my experiments as a plant engineer, but I've found it burdensome to block out a time factor since I don't control our production schedule. I've done it before, but I would prefer to use time as a covariate, although I have never used a covariate in a DOE before. Curious if you have any experience with this? Thank you again.

Re: Predictor screener vs. DOE?

Because your data may contain time series, you might also consider treating your data as functions. I cannot tell from the descriptions and answers whether that approach would help, but you can learn more here. Note that functions (reduced to functional principal component scores) can be used like any other variable in an X or Y analysis role.

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
