Discussions

JMP User Community. Solve problems, and share tips and tricks with other JMP users.

Neural Networks

How reliable are neural networks? When I didn't set the seed I got different results, so I set the seed. But when I change the seed from 1234 to 100, I again get different results. I'm interested in variable importance, which changes completely each time I run the model. I've also tried increasing the number of tours, but variable importance still changes. I'm using K-fold validation, 3 for the hidden layer structure, 10 for the number of models, 0.1 for the learning rate, transform covariates and robust fit, the squared penalty method, and 20 for the number of tours. RSquare is around 0.9 for both training and validation.

Thank you,

Shanice Krombeen


Re: Neural Networks

Neural networks are reliable predictive models. You are using k-fold cross-validation, which assigns rows to the training and validation folds at random, so each time you fit the model you will get different estimates and results. The fact that you are getting practically the same R square for the training and validation sets is a good indication that the model is a good fit.

You can save the fitted model as a column formula. Then you don't have to fit the model again and the estimates won't change when you use the model.
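To make the point about random fold assignment concrete, here is a minimal sketch in Python. It is not JMP code and does not reproduce how the Neural platform assigns folds; the `kfold_assignment` helper, the 60 rows, and the 5 folds are assumptions chosen for illustration. It only shows that a different seed yields a different split of the same rows, while the same seed repeats the split.

```python
import random

def kfold_assignment(n_rows, k, seed):
    """Assign each row index to one of k folds using a seeded shuffle.

    Hypothetical helper for illustration; not the JMP algorithm.
    """
    rng = random.Random(seed)
    rows = list(range(n_rows))
    rng.shuffle(rows)
    folds = [0] * n_rows
    # Deal the shuffled rows into folds round-robin so fold sizes are equal.
    for position, row in enumerate(rows):
        folds[row] = position % k
    return folds

a = kfold_assignment(60, 5, seed=1234)
b = kfold_assignment(60, 5, seed=100)
a_again = kfold_assignment(60, 5, seed=1234)

print("same seed, same folds:", a == a_again)
print("different seed, same folds:", a == b)
```

Because the model is trained on different rows under each split, the estimates, and anything derived from them such as variable importance, can differ between seeds even when the overall fit is similar.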


Re: Neural Networks

Should I simply choose the model with the highest R square? Each time I fit the model the R square is always high (between .90 and .94) but the variable importance under prediction profiler changes significantly.


Re: Neural Networks

Hi Mark,

Thanks for your reply! That's super useful for me to understand the neural networks model.

If I do not set the random seed, is there any way I can get the seed number for the current NN model?

Additionally, is there any way to get the historical seed number? I got a good model but I did not know the seed and I want to repeat the model next time.

Thanks!

Best,

Jiaping


Re: Neural Networks

The default seed is 0 for the first fit. You may specify an alternative seed at this point. The fitting uses a series of pseudo-random numbers, which changes the seed along the way. Subsequent fits use the last seed. You cannot specify it after the first fit.

A user-specified seed is captured if you save a script for Neural. This action does not occur if you use the default seed, but you can always reproduce the fits by using the default the next time.

There is no way to obtain the starting seed from an old fit.


Re: Neural Networks

Hi Mark,

Thanks for your reply!

When we set the random seed, are we determining how the data are split for cross-validation?

When I set the random seed to 1 and fit a model, I get a "Go" option at the top under "Model Launch". After I click Go, another model with a slightly different R square is obtained. What causes the difference in R square compared with the previous model when I click "Go"?

If I do not specify the random seed and click "Go", will it give different models with different random seeds?

Thanks!


Re: Neural Networks

The random seed determines the start of the sequence of random numbers used by the platform algorithm. The default seed of 0 tells Neural that you do not want to determine the sequence. Launching Neural more than once and fitting with a default seed of 0 will, therefore, not lead to reproducible results. You can change the seed to restart the sequence. If you launch Neural again and change the seed to the same non-zero value, you will get the same fit.

You can only set the seed at the beginning. To initiate the same sequence using the same initial seed, you would have to re-launch Neural.
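The behavior described above is typical of seeded pseudo-random generators in general. As a rough analogy only, here is a sketch using Python's standard `random` module, which is not the generator JMP uses; the seed value 1 and the three draws per "fit" are assumptions for illustration. Re-creating the generator with the same seed stands in for re-launching the platform, and drawing again from the same generator stands in for clicking Go a second time.

```python
import random

def draws(seed, count):
    """Start a fresh generator from `seed` and return `count` draws."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(count)]

# Two separate "launches" with the same non-zero seed give the same sequence.
first_launch = draws(1, 3)
second_launch = draws(1, 3)
print("relaunch reproduces:", first_launch == second_launch)

# One "launch", two consecutive fits: the second fit continues the sequence
# where the first stopped, so it sees different random numbers.
rng = random.Random(1)
first_fit = [rng.random() for _ in range(3)]
second_fit = [rng.random() for _ in range(3)]
print("second fit differs:", first_fit != second_fit)
```

In this analogy, the only way to get the first fit's numbers again is to start over from the same seed, which matches the advice to re-launch the platform.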


Re: Neural Networks

How many total observations do you have in your data set?


Re: Neural Networks

There are 209 total observations in the data set but I'm fitting the model by day. Day 70 = 60, Day 90 = 91, and Day 110 = 58.


Re: Neural Networks

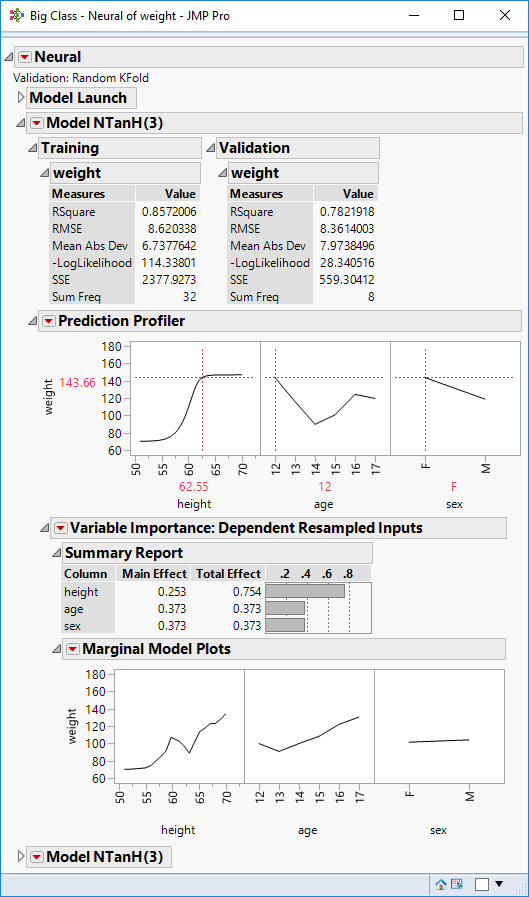

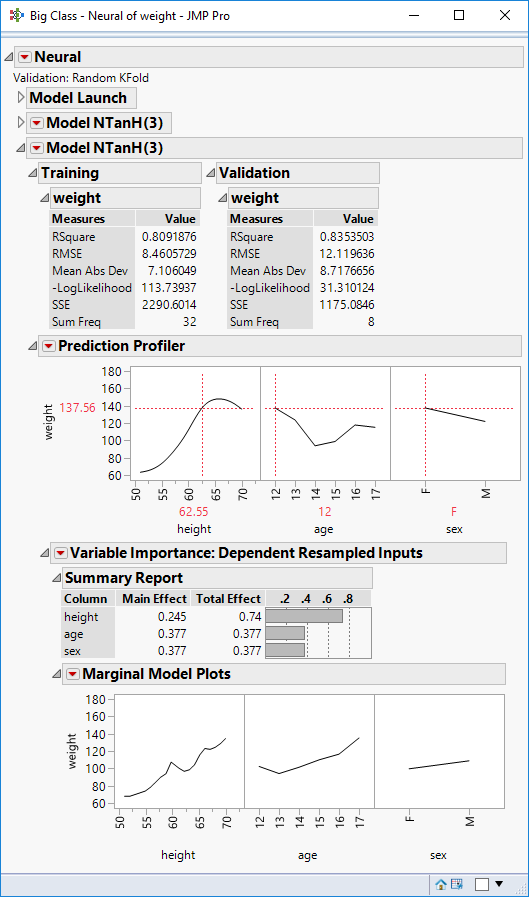

So the sample size ranges from 58 to 91? That seems like sufficient data for k-fold CV. Still, if effects are small, then the estimates and accompanying variable importance could change a lot with each random assignment to the training and validation sets. Here is a simple case based on the Big Class example and a NN using the default settings, except that validation uses 5-fold CV.

(First run)

(Second run)

These two runs use the same data set and settings but involve different random assignments to the five folds. Is this variability similar to what you see?
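For readers without JMP at hand, the same effect can be sketched with a much simpler stand-in. The Python below uses synthetic data and an ordinary least-squares slope rather than a neural network, so it is an illustration under stated assumptions and not a reproduction of the runs above: the 60 rows, the true slope of 0.2, the noise level, and the `training_slope` helper are all hypothetical.

```python
import random

def slope(xs, ys):
    """Ordinary least-squares slope of y on x."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(xs, ys))
    sxx = sum((xi - mx) ** 2 for xi in xs)
    return sxy / sxx

# Synthetic data (assumed, not from the thread): a weak effect buried in noise.
data_rng = random.Random(0)
x = [data_rng.gauss(0, 1) for _ in range(60)]
y = [0.2 * xi + data_rng.gauss(0, 1) for xi in x]

def training_slope(split_seed, k=5):
    """Hold out one randomly chosen fold and fit on the remaining rows."""
    rows = list(range(len(x)))
    random.Random(split_seed).shuffle(rows)
    held_out = set(rows[: len(rows) // k])
    train = [i for i in range(len(x)) if i not in held_out]
    return slope([x[i] for i in train], [y[i] for i in train])

# Same data, same settings, ten different random splits.
slopes = [training_slope(seed) for seed in range(10)]
print("smallest estimate:", min(slopes))
print("largest estimate:", max(slopes))
```

The data never change here; only the rows held out of training do. The spread between the smallest and largest estimate is the kind of split-to-split variability that can reshuffle a variable importance ranking when effects are small.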

Why not include Day as a predictor and then the data set size will be 209?


- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
