
JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

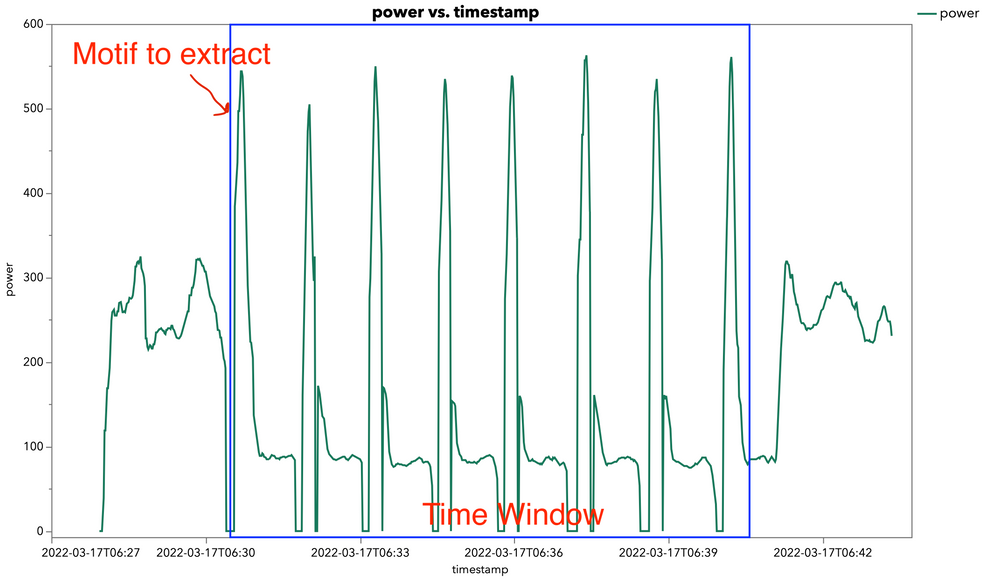

Calling JMP workflow and JSL wizards: here's a Thursday challenge for you. The attached data set is a set of power data from my running power meter. The goal is to identify and correctly tag the 8 motifs that occur in this ~1000-point signal, create a new column with the function ID, and write the solution so that it can automate the tagging of future data that follows the same envelope.

The data collection assumptions for this data set and the future are:

- Prior to the start of a motif, the power is 0.

- There is a non-zero period before and after the motifs (warm-up and warm-down). This is not part of the system under test and should not be tagged.

- Each motif has an attack, sustain, decay and release, and the shape of the function is the same from motif to motif. Power rises from 0 to some peak over a number of samples and then ramps down to the baseline noise floor (walking power). The motif ends when the power reads 0 again.

- Future data will contain an unknown number of functions to identify (there are 8 in this data set), so the solution must not depend on that count.

- The overall length of the functions as well as the peak can vary.

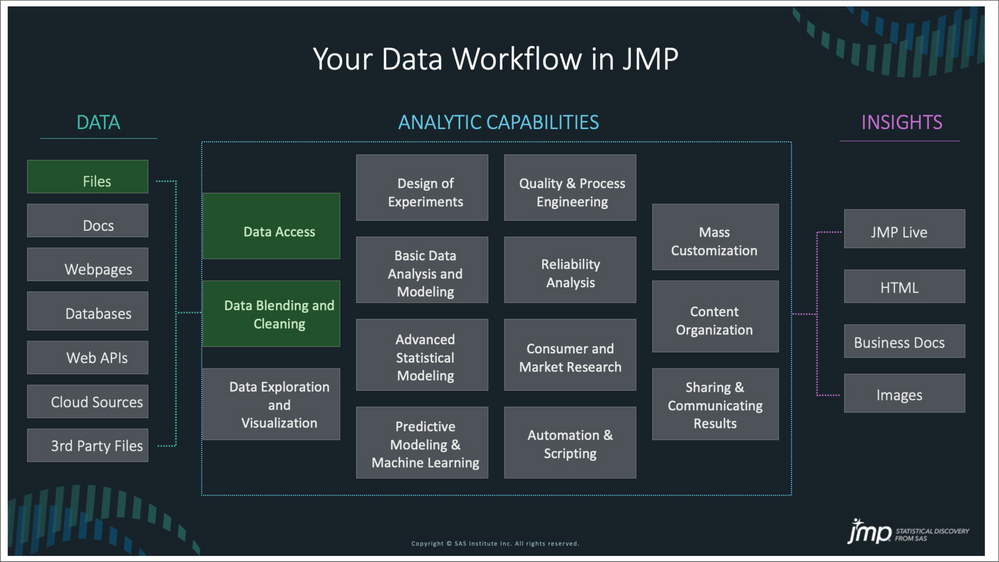

We are working on the left edge of the analytic workflow today (shown in green in the workflow diagram). The data currently comes from a single .csv file; once the workflow is automated, it will come from a folder of n .csv files. Data Access is already handled, so today's task is to perform the Data Blending and Cleaning steps on this example file, leaving the data ready to extend the workflow to other analytic capabilities in the future.

Leave your solutions in the comments.


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

I changed the simple column formula approach to include an AI model, so now the table has one fantastically complex formula along with some simpler ones.

The attached script adds columns and a graph. The model scores the curve segments as signal or noise.

I'm interested to see how it will score a unique data set.


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

Nice, Byron. This looks pretty good; it's picking out those two noise curves. I'll collect some additional data over the weekend and see how it fares.


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

Nice! That sounds like a good solution too. Post the JSL if you can dig it up and we'll try it.


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

Here is my attempt at the challenge.

My approach is to identify features at the beginning and end of the motif, identify the entire run region (between the beginning and the end), then assign a motif ID to each.

Here is my code for the column formulas that I used:

```jsl
names default to here(1);

dt = current data table();

// date from time stamp
dt << New Column( "Date[timestamp]",
	Numeric,
	Nominal,
	Format( "yyyy-mm-dd", 19 ),
	Input Format( "yyyy-mm-dd" ),
	Formula( :timestamp - Time Of Day( :timestamp ) )
);

// rate of change
dt << New Column( "rate of change",
	Numeric,
	Continuous,
	Formula(
		Col Moving Average(
			Dif(
				Col Moving Average(
					:power / Col Maximum( :power, :Name( "Date[timestamp]" ) ),
					0.6,
					5,
					5,
					Empty(),
					:Name( "Date[timestamp]" )
				)
			),
			0.95,
			5,
			5,
			Empty(),
			:Name( "Date[timestamp]" )
		)
	)
);

// Start point
dt << New Column( "Start Point",
	Numeric,
	Continuous,
	Formula(
		If( Row() == 1,
			0,
			checkZero = 1 * (:power[Row()] > 0 & :power[Row() - 1] == 0);
			foundPeak = 0;
			For( k = Row() + 1, k <= N Rows(), k++,
				If( :power[k] > Col Quantile( :power, 0.93, :Name( "Date[timestamp]" ) ),
					foundPeak = 1;
					Break();
				);
				If( :power[k] == 0, Break() );
			);
			If( checkZero == 1 & foundPeak == 1,
				1,
				0
			);
		)
	)
);

// steady state
dt << New Column( "Steady State",
	Numeric,
	Continuous,
	Formula(
		ans = 1;
		For( k = 0, k <= 10, k++,
			ans = Minimum(
				ans,
				1 * (:rate of change[Row() - k] < 0.003 &
				:rate of change[Row() - k] > -0.003)
			)
		);
		ans;
	)
);

// Run Region
dt << New Column( "Run Region",
	Numeric,
	Continuous,
	Format( "Best", 12 ),
	Formula(
		ans = 0;
		For( k = Row(), k >= 1, k--,
			If( :Start Point[k] == 1,
				ans = 1;
				Break();
			);
			If( :Steady State[k] == 0 & :Steady State[k - 1] == 1,
				ans = 0;
				Break();
			);
		);
		ans;
	)
);

// motif ID
dt << New Column( "Motif ID",
	Numeric,
	Nominal,
	Formula(
		If(
			:Run Region == 1,
			Col Cumulative Sum( :Start Point )
		)
	)
);

// rank timestamp, Motif ID - helps plot each motif
dt << New Column( "Rank[timestamp]",
	Numeric,
	Continuous,
	Formula( Col Rank( :timestamp, :Motif ID ) )
);
```
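For readers who want to check the logic outside JMP, here is a minimal Python sketch of the same idea: a start point is a 0-to-nonzero transition followed by a spike before the next zero; steady state means the current and previous 10 rows all have a rate of change within ±0.003; a run region opens at a start point and closes when steady state ends; and the motif ID is the running count of start points inside run regions. It is not a translation of the formulas above. The rate of change here is a plain first difference of max-normalized power with no moving-average smoothing, the spike threshold is passed in directly (the formulas use the 0.93 quantile of power per date), rows without a full 10-row window are treated as not steady, and all function names and the synthetic test signal are hypothetical.

```python
def start_points(power, threshold):
    """Flag rows where power leaves zero and exceeds `threshold` before the next zero."""
    flags = [0] * len(power)
    for i in range(1, len(power)):          # the first row is never a start point
        if power[i] > 0 and power[i - 1] == 0:
            for k in range(i + 1, len(power)):
                if power[k] > threshold:    # spike found: treat as a real motif
                    flags[i] = 1
                    break
                if power[k] == 0:           # back to zero first: not a motif
                    break
    return flags


def rate_of_change(power):
    """Simplified stand-in: first difference of max-normalized power (no smoothing)."""
    peak = max(power)
    norm = [p / peak for p in power]
    return [None] + [norm[i] - norm[i - 1] for i in range(1, len(norm))]


def steady_state(rate, window=10, tol=0.003):
    """1 when the current row and the previous `window` rows all have |rate| < tol."""
    flags = []
    for i in range(len(rate)):
        ok = i >= window and all(
            rate[i - k] is not None and -tol < rate[i - k] < tol
            for k in range(window + 1)
        )
        flags.append(1 if ok else 0)
    return flags


def run_region(start, steady):
    """Scan backward: a start point opens the region; leaving steady state closes it."""
    region = []
    for i in range(len(start)):
        ans = 0
        for k in range(i, -1, -1):
            if start[k] == 1:
                ans = 1
                break
            if k > 0 and steady[k] == 0 and steady[k - 1] == 1:
                break
        region.append(ans)
    return region


def motif_ids(start, region):
    """Running count of start points, kept only inside run regions (None elsewhere)."""
    ids, total = [], 0
    for s, r in zip(start, region):
        total += s
        ids.append(total if r == 1 else None)
    return ids


# synthetic signal: low warm-up, then two motifs (spike, flat baseline) separated by zeros
power = ([60] * 5 + [0] * 12 + [200, 600, 900, 600, 300] + [100] * 15
         + [0] * 12 + [150, 700, 400] + [90] * 14 + [0] * 12)
start = start_points(power, threshold=500)
ids = motif_ids(start, run_region(start, steady_state(rate_of_change(power))))
```

The warm-up rows never reach the threshold before returning to zero, so they stay untagged, which matches the challenge's rule that warm-up and warm-down are not part of the system under test.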


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

This is a really nice solution. I posted the output on JMP Public with a Local Data Filter to look at the new data set, the three training runs and your feature column of binary steady state:


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

Here are all the solutions so far on JMP Public:


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

Here is another approach, which interpolates across the dropouts using several (relatively simple) formula columns. Many of these columns could be eliminated with a more complex interpolation formula, at the expense of easy troubleshooting and formula writing. (See the attached table for the formulas and graph scripts.)

The results seem consistent with what we want, at least in the sample data set (red points will be dropped):

Here is how it works so you can judge whether the assumptions made hold for your data:

First, the zeros caused by "dropouts" are interpolated. I chose linear interpolation; splines and quadratics were considered, but to my eye the results were inferior.

Note the "Cum Zeros Behind" through "Interpolated Power" columns in rows 4-6 below:

- "Cum Zeros Behind" is an inclusive look-back count of zeros.

- "Cum Zeros Ahead" is an inclusive look-ahead count of zeros.

- "Zero Block" is the number of contiguous zeros in the block.

- "Groups for Zeros" starts at 1 and increases whenever a new group of zeros occurs.

Interpolation is applied to any short block of zeros (fewer than 7 rows) that does not start on row 1.

Note: In the sample data, the longest interpolated block spanned 4 zeros, while the shortest "true" break between intervals was 7 zeros. This is a slim margin for error and a potentially significant drawback of this approach: the shortest "true" break must be longer than the longest "dropout".

As mentioned previously, you don't need most of these columns, although the formula for the interpolation will grow much uglier without them, and troubleshooting will be (much) more difficult.

After interpolation, only blocks of at least 7 consecutive zeros remain in the data (except possibly for zeros in the first few rows).
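A minimal Python sketch of the interpolation rule (not Brady's JMP formulas, which split the work across the helper columns listed above): interior runs of fewer than 7 zeros are filled by linear interpolation between the neighboring nonzero values, and a run that starts on the first row is left alone. How the table treats a short run at the very end of the data is not stated, so leaving it untouched is an assumption here; `interpolate_dropouts` and `max_gap` are hypothetical names.

```python
def interpolate_dropouts(power, max_gap=6):
    """Fill interior runs of at most `max_gap` zeros by linear interpolation."""
    out = list(power)
    n = len(out)
    i = 0
    while i < n:
        if out[i] != 0:
            i += 1
            continue
        j = i
        while j < n and out[j] == 0:   # find the end of this run of zeros
            j += 1
        length = j - i                 # zeros occupy rows i .. j-1
        # skip runs that start on the first row, reach the last row (assumption),
        # or are long enough to be true breaks between intervals
        if i > 0 and j < n and length <= max_gap:
            left, right = out[i - 1], out[j]
            for m in range(i, j):
                out[m] = left + (m - i + 1) * (right - left) / (length + 1)
        i = j
    return out


filled = interpolate_dropouts([0, 0, 100, 0, 0, 130, 0, 0, 0, 0, 0, 0, 0, 50, 0])
```

Here the 2-zero dropout between 100 and 130 becomes 110 and 120, while the leading zeros, the 7-zero break and the trailing zero are kept.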

The "Interval" column groups the data. It starts at 1 and increments whenever:

- the timestamp jumps by more than 100 seconds from the previous row, or

- interpolated power moves from 0 to some nonzero value.
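The "Interval" rule can be sketched the same way. This assumes timestamps are in seconds (as JMP stores date-time values) and that the power passed in has already been interpolated; `interval_ids` and `max_step` are hypothetical names.

```python
def interval_ids(timestamps, power, max_step=100):
    """Number intervals from 1; start a new one on a time gap or a 0-to-nonzero move."""
    ids = []
    current = 1
    for i in range(len(power)):
        if i > 0:
            jumped = timestamps[i] - timestamps[i - 1] > max_step  # assumed seconds
            restarted = power[i - 1] == 0 and power[i] != 0
            if jumped or restarted:
                current += 1
        ids.append(current)
    return ids


ids = interval_ids([0, 1, 2, 3, 4, 200, 201, 202], [5, 0, 0, 7, 8, 9, 0, 3])
```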

The "Post-spike" column is a flag that is set during power's ramp-down from the spike to a steady(ish) state.

It is (re)set to zero:

- at row 1

- whenever the interval column changes

- whenever a new block of zeros occurs

It is set to 1 whenever interpolated power drops from a value above 90 to a value less than or equal to 90. Based on the data in the sample, this seemed reasonable.
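A sketch of the "Post-spike" flag follows. Two details are assumptions that the description above does not spell out: the flag carries forward from the previous row when no rule fires, and a reset wins if it coincides with a drop below 90 on the same row. "A new block of zeros" is read as the first zero after a nonzero value; `post_spike` and `threshold` are hypothetical names.

```python
def post_spike(power, interval, threshold=90):
    """Flag rows after power falls from above `threshold` to at or below it."""
    flags = []
    for i, p in enumerate(power):
        if i == 0:
            flag = 0                                   # reset at row 1
        elif interval[i] != interval[i - 1]:
            flag = 0                                   # reset on a new interval
        elif p == 0 and power[i - 1] != 0:
            flag = 0                                   # reset on a new block of zeros
        elif power[i - 1] > threshold and p <= threshold:
            flag = 1                                   # ramp-down past the threshold
        else:
            flag = flags[i - 1]                        # assumption: carry forward
        flags.append(flag)
    return flags


flags = post_spike([50, 400, 200, 80, 85, 120, 0, 0], [1] * 8)
```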

We can now decide which data to ignore; this is the purpose of the "Ignore" column. We ignore data when any of the following is true:

- The maximum over the interval is < 400 (this is the "background noise"). Again, this threshold is based on the provided data.

- Interpolated power moves above 100, post-spike. (Indicating we've moved into "background noise", rather than ramping down towards 0)

- Interpolated power is 0

"Final Power" is the same as "Interpolated Power", but set to missing when "Ignore" == 1.

"Interval Time" computes time relative to the interval (i.e., starts at 0 and increments from there). Like "Final Power", it is set to missing whenever "Ignore" == 1.

I did not disable formula evaluations and subset out the non-ignored data, but this is a likely next step.
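"Ignore", "Final Power" and "Interval Time" can be sketched together. Assumptions: "moves above 100, post-spike" is read as "power above 100 while the Post-spike flag is 1", and interval time is measured from the first timestamp in each interval. `finalize` and its parameters are hypothetical names, and `None` stands in for JMP's missing value.

```python
def finalize(timestamps, power, interval, post_spike, peak_min=400, noise_max=100):
    """Return (final_power, interval_time), with None where a row is ignored."""
    peak, start = {}, {}
    for t, p, g in zip(timestamps, power, interval):
        peak[g] = max(peak.get(g, p), p)   # maximum power in each interval
        start.setdefault(g, t)             # first timestamp in each interval
    final_power, interval_time = [], []
    for t, p, g, flag in zip(timestamps, power, interval, post_spike):
        ignore = (
            peak[g] < peak_min                # background-noise interval
            or (flag == 1 and p > noise_max)  # climbed back up after the spike
            or p == 0                         # zero power
        )
        final_power.append(None if ignore else p)
        interval_time.append(None if ignore else t - start[g])
    return final_power, interval_time


final_power, interval_time = finalize(
    list(range(10)),
    [500, 300, 80, 120, 0, 60, 70, 0, 0, 0],
    [1] * 5 + [2] * 5,
    [0, 0, 1, 1, 0, 0, 0, 0, 0, 0],
)
```

Subsetting the rows where `final_power` is not `None` corresponds to the "likely next step" mentioned above.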

It will be interesting to see how Dan breaks this next ;)

Cheers,

Brady


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

Nice, Brady.

Good point about how short some of the true breaks before an interval are compared with a drop-out. The drop-outs do seem to last 2-3 seconds at most, however. We will see how this holds up with the new data being collected this week.

Attached is some additional training data: about 1:40 of very steady-state running, but closer to race pace (nearly 100% of my critical power). It might help @Byron_JMP with his ML model, though. Maybe add a feature to this training data set to bring it closer to the warm-up/warm-down range?

Also, who wants to tackle getting the data directly from the Strava API using New HTTP Request() in JMP? I know @dieter_pisot has done this before. I can tag the workouts with a keyword such as "sprints" so that the data can be easily retrieved through the API.


Re: JMP Workflow Challenge 1: Motif Extraction and Identification from Continuous Power Data

I took a shot at getting data straight from the Strava API. I am successfully getting several measurements, including distance, velocity, longitude, latitude, altitude, and grade. However, I am not getting power. I suspect this is due to my Strava account and/or my lack of power-measuring equipment. This solution may or may not be what is needed for someone with power data in their Strava account, but if it's not correct, I can't imagine it's too far off.

To get started with the Strava API, I found this YouTube video very helpful (the link is in the code comments); it clearly demonstrates the steps to get your access token, which is required before this code will get any data from Strava. In the code below, replace your_access_token_here with your actual access token once you have it.

```jsl
names default to here(1);

accessToken = "your_access_token_here"; // access token from the Strava API
// helpful link to get started with the API: https://www.youtube.com/watch?v=sgscChKfGyg

//////////////////////////////////////////
//////////////////////////////////////////

// get list of activities from Strava
s = New HTTP Request(
	URL( "https://www.strava.com/api/v3/athlete/activities?access_token=" || accessToken ),
	Method( "GET" ),
	Headers( {"Accept: application/json"} )
) << Send;
dtx = json to data table(s);
dtx << set name("Activities List");

// keep only activities marked as sprint
r = dtx << get rows where( !contains(lowercase(:name), "sprint") );
dtx << delete rows(r);
if( n rows(dtx) == 0,
	close(dtx, no save);
	throw();
);

// get list of unique activity ids
summarize( activityList = by(dtx:id) );

// get and combine the data streams from each activity
dty = new table();
dty << set name("Strava Data_temp");
for( k = 1, k <= n items(activityList), k++,
	actStream = New HTTP Request(
		URL( "https://www.strava.com/api/v3/activities/" || activityList[k] || "/streams?keys=latlng,watts,distance,velocity,altitude,velocity_smooth,cadence,temp,moving,grade_smooth&access_token=" || accessToken ),
		Method( "GET" ),
		Headers( {"Accept: application/json"} )
	) << Send;
	dtz = json to data table(actStream);
	dtz << new column("Activity ID", numeric, nominal, set each value(num(activityList[k])));
	dty << concatenate(dtz, append to first table);
	close(dtz, no save);
);

// add rank column for x value
dty << New Column( "x",
	Numeric,
	"Continuous",
	Formula( Col Rank( :Activity ID, :type, :Activity ID ) )
);

// split up latitude and longitude pairs
myCount = 0;
for( k = 1, k <= n rows(dty), k++,
	if( dty:type[k] == "latlng",
		myCount++;
		if( modulo(myCount, 2) == 0,
			dty:type[k] = "lat",
			dty:type[k] = "lng"
		)
	);
);

// split tall table to wider table
dt = dty << Split(
	Split By( :type ),
	Split( :data ),
	Group( :x, :Activity ID ),
	Remaining Columns( Drop All ),
	Sort by Column Property
);

// done with this data table
close(dty, no save);

// update start time from activities table
dt << Update(
	With( dtx ),
	Match Columns( :Activity ID = :id ),
	Add Columns from Update Table( :start_date, :name ),
	Replace Columns in Main Table( :start_date, :name )
);

// close activity list
close(dtx, no save);

// clean up time stamp
dt << Begin Data Update;
dt << Recode Column(
	dt:start_date,
	{Regex( _rcNow, ".*^(.*)Z.*", "\1", GLOBALREPLACE )},
	Target Column( :start_date )
);
dt << End Data Update;
dt:start_date << data type(numeric) << Format( "yyyy-mm-ddThh:mm:ss", 19, 0 ) << Input Format( "yyyy-mm-ddThh:mm:ss", 0 );

dt << New Column( "timestamp",
	Numeric,
	"Continuous",
	Format( "yyyy-mm-ddThh:mm:ss", 19, 0 ),
	Input Format( "yyyy-mm-ddThh:mm:ss", 0 ),
	Formula( (:start_date + :x) - 1 )
);
wait(0);
dt:timestamp << delete formula;
dt << delete column("start_date");

// arrange columns
dt << Move Selected Columns( {:timestamp}, To first );
dt << Move Selected Columns( {:Activity ID, :name}, after(:timestamp) );

// set name
dt << set name("Strava Data");

// sort chronologically
dt << Sort( By( :timestamp ), Order( Ascending ), replace table );
dt << sort( by(:Activity ID), order( ascending ), replace table );
```

The code:

- only includes data for activities that contain "sprint", not case sensitive, in the name

- tries to get power (watts), among many other measurements including distance, velocity, position, altitude, etc.

- arranges the data in a format similar to the original data tables presented with this challenge

I do hope that this code works for a user that has power information in their Strava account.
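To make the reshaping step easier to check, here is a Python sketch of the same pivot on a single streams response. It assumes (from my reading of the Strava API; worth verifying against Strava's documentation) that the streams endpoint returns a JSON array of objects, each with a `type` and a `data` array, and that each `latlng` sample is a `[latitude, longitude]` pair. Like the JSL, it assumes one sample per second when building timestamps from `start_date`. The function name and the sample payload are hypothetical, and no network call is made. Keeping each pair intact makes it explicit which element becomes `lat` and which becomes `lng`, which is worth confirming in the alternating-counter step of the JSL.

```python
import json
from datetime import datetime, timedelta


def streams_to_rows(streams_json, start_date):
    """Pivot one activity's stream list into one dict per sample (hypothetical helper)."""
    streams = json.loads(streams_json)
    start = datetime.strptime(start_date, "%Y-%m-%dT%H:%M:%SZ")  # e.g. "2018-02-16T14:52:54Z"
    columns = {}
    for stream in streams:
        if stream["type"] == "latlng":
            # keep each [lat, lng] pair together instead of relying on row parity
            columns["lat"] = [pair[0] for pair in stream["data"]]
            columns["lng"] = [pair[1] for pair in stream["data"]]
        else:
            columns[stream["type"]] = stream["data"]
    n = max((len(v) for v in columns.values()), default=0)
    rows = []
    for x in range(n):
        # assumes 1 sample per second, mirroring (:start_date + :x) - 1 in the JSL
        row = {"timestamp": start + timedelta(seconds=x)}
        for name, values in columns.items():
            row[name] = values[x] if x < len(values) else None
        rows.append(row)
    return rows


# hypothetical payload shaped like a streams response
sample = json.dumps([
    {"type": "latlng", "data": [[35.78, -78.64], [35.79, -78.65]]},
    {"type": "watts", "data": [250, 260]},
    {"type": "distance", "data": [0.0, 3.1]},
])
rows = streams_to_rows(sample, "2018-02-16T14:52:54Z")
```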



© 2026 JMP Statistical Discovery LLC. All Rights Reserved.