
JMP User Community, Discussions: Solve problems, and share tips and tricks with other JMP users.


Is there an algorithm that can quickly find such highly distributed data?

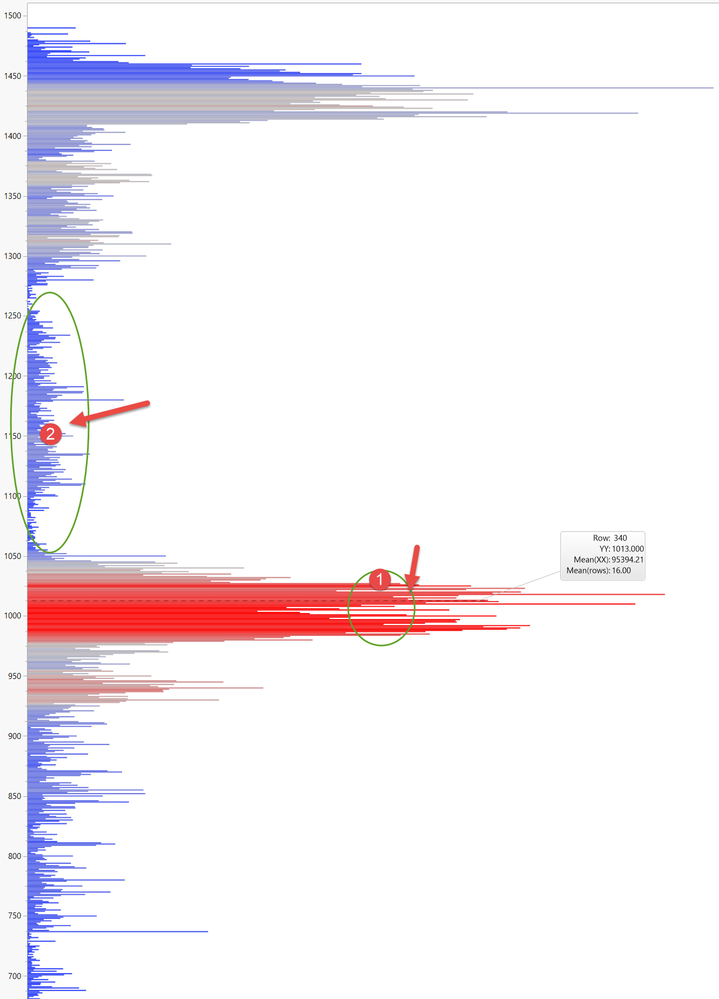

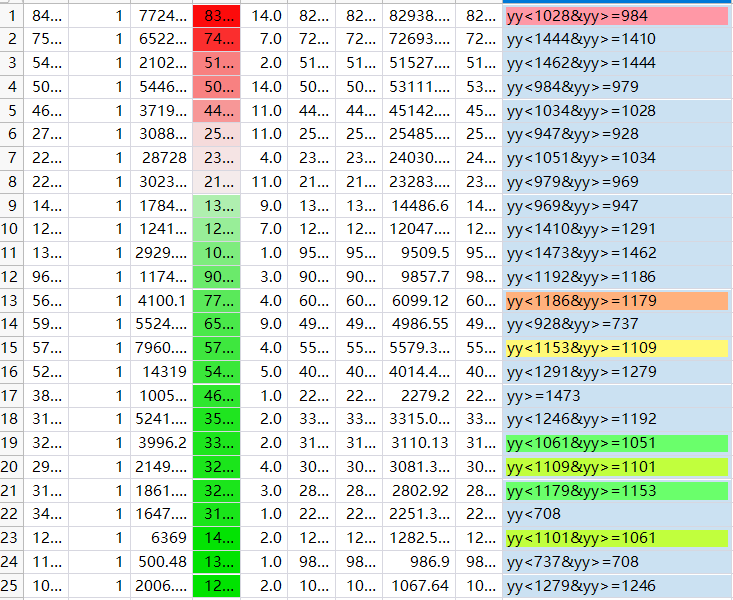

For example, the values in the range marked 1 in the figure are larger, while the wider range above it (marked 2) has smaller values.

How can I automatically find the ranges marked 1 and 2 through calculation?


Accepted Solutions


Re: Is there an algorithm that can quickly find such highly distributed data?

Hi @lala,

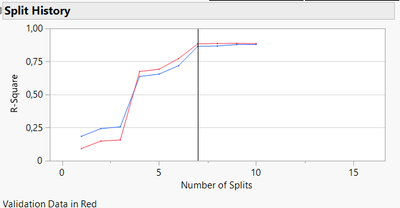

I just tried the Partition platform and I think the outcomes could be what you're looking for.

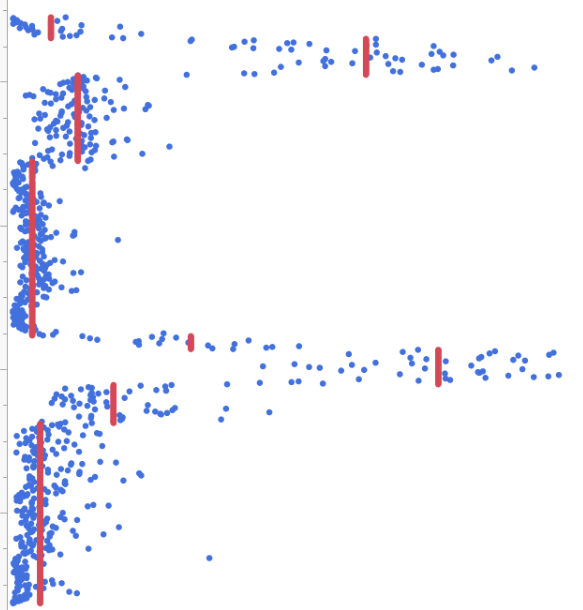

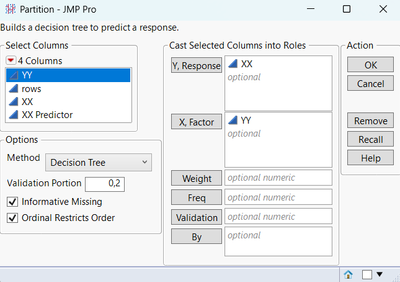

I used it on your data with a 20% validation set (specified in the launch dialog window) to know when to stop splitting (when training and validation results remain stable) and to avoid creating too many splits:

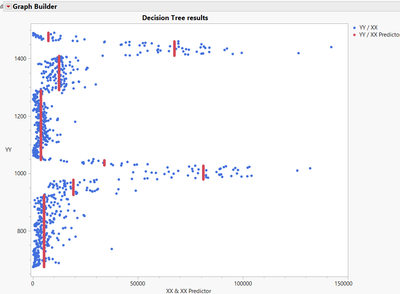

When the results are displayed with the prediction formula saved from the platform, it's easier to spot areas with similar values:

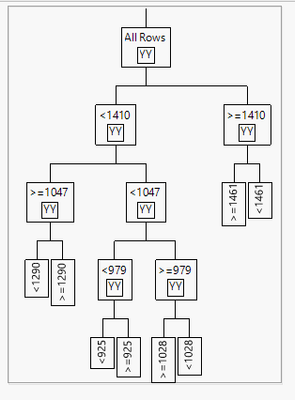

With Graph Builder you can identify the ranges of similar values; you can also write a small script that creates groups based on the predicted values in the data table, and/or use the small tree view to identify the ranges in your data:

Please find attached the data table with the analysis and graph scripts included. I hope this complementary answer makes the use of the Partition platform clearer for you.
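A minimal sketch of the "small script that creates groups based on predicted values" idea, assuming a data table named "pic" with factor YY and a saved prediction column "XX Predictor" (names taken from later in this thread; adjust them to your data). It builds a summary table with one row per distinct predicted value and the YY range that value covers. With a single factor each tree leaf is one interval of YY, so each summary row is one range; with several factors a group need not be a contiguous range.

```jsl
// Assumed names: data table "pic", factor YY, and the column "XX Predictor"
// created with Partition > Save Columns > Save Prediction Formula.
dt = Data Table( "pic" );

// One row per distinct predicted value (one tree leaf): how many rows it
// holds and the smallest and largest YY it covers.
ranges = dt << Summary(
    Group( :Name( "XX Predictor" ) ),
    Min( :YY ),
    Max( :YY ),
    Freq( "None" ),
    Weight( "None" )
);
```

Sorting the summary table by the predicted value then shows which YY range has the highest (range 1) or lowest average response.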

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)


Re: Is there an algorithm that can quickly find such highly distributed data?

Hi @lala,

If you have only a few independent, uncorrelated X's and one response Y, I think a Decision Tree should be able to uncover the X ranges associated with different mean values of Y.

Decision Trees are available in the Partition platform.

Hope this answer will help you,


Re: Is there an algorithm that can quickly find such highly distributed data?

Thanks Experts!

Partition


Re: Is there an algorithm that can quickly find such highly distributed data?

Thanks Experts!

Thank you for the script file. I learned more about the decision tree method.

How can this JSL be modified to analyze other files with the same structure?

Also, how is the red vertical line in the following figure added, and how can I get its value?

Thank you for spending a lot of time helping me.


Re: Is there an algorithm that can quickly find such highly distributed data?

Hi @lala,

If you want to reproduce the analysis on another file, you can launch the platform manually with the corresponding settings:

- Your response (here XX) in the "Y, Response" panel

- Your factor(s) (here YY) in the "X, Factor" panel

- Finally, you can set a validation portion (between 10 and 20%) in the corresponding panel (bottom left):

The JSL code corresponding to this analysis would be:

```jsl
// Launch platform: Partition
Data Table( "pic" ) << Partition(
    Y( :XX ),                   // response
    X( :YY ),                   // factor(s)
    Validation Portion( 0.2 ),  // hold out 20% of rows for validation
    Informative Missing( 1 )
);
```

However, I don't know how you could automate the decision of when to stop splitting. You may have to create a metric that indicates where to stop, based on the difference between training R² and validation R², or on the slope of a metric between the previous split and the new one (for example, training and validation R²).
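One way to turn that suggestion into a rule, as a sketch only: `ChooseSplits`, `minGain` and `maxGap` are hypothetical names, not JMP built-ins, and the R² values are assumed to have been collected per split (for example from the Split History) into column vectors. It stops at the first split where one more split improves validation R² by less than `minGain`, or where training R² exceeds validation R² by more than `maxGap`.

```jsl
// Hypothetical helper (not a JMP built-in). r2Train and r2Valid are column
// vectors: element k is the R² after k splits. Returns the number of splits to keep.
ChooseSplits = Function( {r2Train, r2Valid, minGain, maxGap},
    {n, k, best},
    n = N Row( r2Valid );
    best = n; // keep every split unless a stopping rule fires
    For( k = 1, k < n, k++,
        // rule 1: the next split barely improves validation R²
        // rule 2: training R² pulls away from validation R² (overfitting)
        If( (r2Valid[k + 1] - r2Valid[k] < minGain) | (r2Train[k + 1] - r2Valid[k + 1] > maxGap),
            best = k;
            Break()
        )
    );
    best
);

// Toy values for illustration only (not from the thread's data).
r2Train = [0.40; 0.62; 0.71; 0.74; 0.76];
r2Valid = [0.38; 0.60; 0.68; 0.685; 0.67];
Show( ChooseSplits( r2Train, r2Valid, 0.01, 0.1 ) ); // 3 for these toy values
```

The two thresholds are judgment calls; the `Go` message of the platform already applies its own validation-based stopping rule, so this is only useful if you want a different one.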

The red vertical lines come from the prediction formula of the Decision Tree and are directly linked to the inner workings of tree-based methods. A Decision Tree splits the factor values according to a criterion, so as to create more homogeneous subsets of data. For regression trees, this criterion is often MSE (Mean Squared Error), MAE (Mean Absolute Error) or SSE (Sum of Squared Errors).

The split value is determined by testing every value of every factor and choosing the one that minimizes the sum of squared errors (SSE). By splitting your data into "chunks" and predicting each chunk by its mean value, you minimize the difference between predicted and actual values, and so reduce SSE. This explains the "stairway" (step) profile of the prediction formula.
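To make the SSE search above concrete, here is a sketch of a single split on one factor. `BestSplit` is a hypothetical helper, not JMP's own code: JMP's implementation may rank candidate splits differently in detail, and this sketch reports the midpoint between the two neighbouring x values as the cut. It sorts the rows by x, tries every cut between distinct x values, predicts each side by its mean, and keeps the cut with the smallest total SSE.

```jsl
// Hypothetical sketch: one regression-tree split on a single factor.
// x and y are column vectors of equal length. Returns {cut, SSE}.
BestSplit = Function( {x, y},
    {idx, xs, ys, n, k, i, sL, sR, mL, mR, sse, found, bestSSE, bestCut},
    idx = Rank Index( x ); // row order that sorts x ascending
    xs = x[idx];
    ys = y[idx];
    n = N Row( xs );
    found = 0;
    bestSSE = 0;
    bestCut = .;
    For( k = 1, k < n, k++,
        If( xs[k] < xs[k + 1], // only cut between distinct x values
            // mean of y on each side of the candidate cut
            sL = 0;
            For( i = 1, i <= k, i++, sL += ys[i] );
            sR = 0;
            For( i = k + 1, i <= n, i++, sR += ys[i] );
            mL = sL / k;
            mR = sR / (n - k);
            // SSE when each side is predicted by its own mean
            sse = 0;
            For( i = 1, i <= k, i++, sse += (ys[i] - mL) ^ 2 );
            For( i = k + 1, i <= n, i++, sse += (ys[i] - mR) ^ 2 );
            If( (!found) | (sse < bestSSE),
                found = 1;
                bestSSE = sse;
                bestCut = (xs[k] + xs[k + 1]) / 2
            )
        )
    );
    Eval List( {bestCut, bestSSE} )
);

// Toy data: y jumps from 1 to 5 once x passes 3 (rows deliberately unsorted).
result = BestSplit( [4; 1; 6; 2; 5; 3], [5; 1; 5; 1; 5; 1] );
Show( result ); // cut 3.5 with SSE 0
```

Applying the same search again inside each side produces further splits, and predicting each final piece by its mean gives the step-shaped prediction formula described above.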

In the data table, the predicted values of this formula are in the saved column "XX Predictor". To create it from the platform, click the red triangle next to "Partition", then choose "Save Columns" > "Save Prediction Formula". As you can see, the values are identical within each range/split and correspond to the average of the actual values in that split/group. This is quite helpful in your use case, as you can directly identify groups of similar values (rows with the same predicted value from the Decision Tree).
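Because each step of the saved prediction is constant, the positions of the red lines can be recovered from the "XX Predictor" column itself. A sketch, assuming a single factor: `StepPositions` is a hypothetical helper that sorts rows by the factor and reports the midpoint between neighbouring factor values wherever the predicted value changes. The exact split value lies somewhere between those two observed values, and the tree report shows the value JMP uses.

```jsl
// Hypothetical helper: x = factor values, pred = saved tree predictions
// (column vectors). Returns a list of x positions where the step changes.
StepPositions = Function( {x, pred},
    {idx, xs, ps, n, k, cuts},
    idx = Rank Index( x ); // sort rows by the factor
    xs = x[idx];
    ps = pred[idx];
    n = N Row( xs );
    cuts = {};
    For( k = 1, k < n, k++,
        If( ps[k] != ps[k + 1],
            // a new step starts between these two observed x values
            Insert Into( cuts, (xs[k] + xs[k + 1]) / 2 )
        )
    );
    cuts
);

// Toy data for illustration: one step between x = 3 and x = 4.
Show( StepPositions( [1; 2; 3; 4; 5; 6], [1; 1; 1; 5; 5; 5] ) );

// On the thread's table (assumed names "pic", YY and "XX Predictor"):
// dt = Data Table( "pic" );
// Show( StepPositions( Column( dt, "YY" ) << Get Values, Column( dt, "XX Predictor" ) << Get Values ) );
```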

Some resources about regression trees:

StatQuest (YouTube): https://youtu.be/g9c66TUylZ4?si=-oyWbyieIlfwxLoH

Interpretable Machine Learning (Christoph Molnar): https://christophm.github.io/interpretable-ml-book/tree.html

Hope this answer will help you,


Re: Is there an algorithm that can quickly find such highly distributed data?

Thanks!

I use the Partition platform heavily, but only for basic, repetitive tasks, without its further functionality.

I run JMP using scripts.

Sending the Go message makes it split automatically:

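```jsl
// Runs on the current data table; xF is the list of factor columns
// defined earlier in the script (not shown in the post).
p = Partition(
    Y( Column( 3 ) ),           // response: third column of the table
    X( Eval( xF ) ),
    Method( "Decision Tree" ),
    Validation Portion( 0.2 ),
    Informative Missing( 1 ),
    Column Contributions( 1 )
);

p << Go; // split automatically, using the validation set to decide when to stop
```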
