Functional Data Explorer with validation column

I have a data set with 40 NIR spectra, with rows as functions. The data set is split into a training set and a validation set using a cutpoint validation column; the last 10 rows are validation.

When running the FPCA analysis, only the training set is fitted. How do we know whether the training model fits the validation set well?

I assume the diagnostic plot is also for the training set only?

FPCA scores: are the results for the validation set computed with the training model?

Thanks for input!

- Tags:

- windows

Accepted Solutions

Re: Functional Data Explorer with validation column

Hi @frankderuyck,

I agree, the documentation on validation sets in Functional Data Explorer is a bit confusing, both in its terminology and in how the sets are used: Launch the Functional Data Explorer Platform (jmp.com)

Functional Data Explorer uses only two sets (unlike other machine learning algorithms, which may use three: training, validation and test sets): a Training set (coded as 0, or the smallest value) and a Validation set (coded as 1, or higher values).

The validation set is in fact used here as a test set (to apply the naming convention rigorously): it is not used to fit the functional model, but you can extract the FPCA scores for this set to see how well the model fits new functional data: Solved: Functional Data Analysis and Classification: How to calculate FPC of new data (u... - JMP Us...
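To make "scores computed with the training model" concrete, here is a minimal Python sketch of the idea, not of JMP's implementation. It assumes the spectra are sampled on a common grid and approximates FPCA by ordinary PCA on the raw samples, skipping the basis-function smoothing step that Functional Data Explorer performs. The data are synthetic, and the names `fit_fpca` and `fpc_scores` are hypothetical.

```python
import numpy as np

def fit_fpca(train_curves, n_components):
    """Estimate the mean curve and leading components from training rows only."""
    mean_curve = train_curves.mean(axis=0)
    centered = train_curves - mean_curve
    # Rows of vt are orthonormal component shapes sampled on the grid.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_curve, vt[:n_components]

def fpc_scores(curves, mean_curve, components):
    """Project any curves (training or held-out) onto the training components."""
    return (curves - mean_curve) @ components.T

# Synthetic stand-in for 40 spectra on 50 grid points (assumption, not real NIR data).
rng = np.random.default_rng(0)
grid = np.linspace(0.0, 1.0, 50)
a = rng.normal(size=(40, 1))
b = rng.normal(size=(40, 1))
spectra = (1.0 + a * np.sin(2 * np.pi * grid)
           + 0.5 * b * np.cos(2 * np.pi * grid)
           + 0.01 * rng.normal(size=(40, 50)))

# Cutpoint-style validation column: 0 = training, 1 = validation (last 10 rows).
validation = np.r_[np.zeros(30, dtype=int), np.ones(10, dtype=int)]
train = spectra[validation == 0]
held_out = spectra[validation == 1]

mean_curve, components = fit_fpca(train, n_components=2)
train_scores = fpc_scores(train, mean_curve, components)
val_scores = fpc_scores(held_out, mean_curve, components)
print(train_scores.shape, val_scores.shape)
```

The point of the sketch is that the validation rows never enter `fit_fpca`: their scores come purely from projecting onto the mean and components estimated from the training rows.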

The training set is used both to fit the model and to evaluate its fit, and all plots in the report you mention are based on the training set.

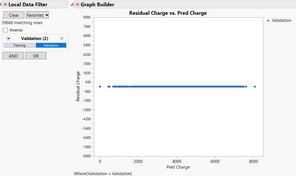

You can evaluate how well the model fits the validation data by saving the prediction formula to a new data table (red triangle next to "[Model] on [Initial data]", then "Save Data"). Formula columns are added (Prediction and Residuals), and with Graph Builder you can plot Actual vs. Predicted for your validation data, or plot the residuals, to reproduce the results seen in the report.
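Outside JMP, the same Actual vs. Predicted check can be sketched in Python. This is only an illustration under stated assumptions: PCA on the sampled curves stands in for the functional model, the data are synthetic, and `holdout_residuals` and `rmse` are hypothetical helper names, not JMP functions.

```python
import numpy as np

def holdout_residuals(train, held_out, n_components):
    """Fit mean and components on training rows, then return residuals for both sets."""
    mean_curve = train.mean(axis=0)
    _, _, vt = np.linalg.svd(train - mean_curve, full_matrices=False)
    components = vt[:n_components]

    def predict(curves):
        # Score the curves with the training model, then rebuild them from the scores.
        scores = (curves - mean_curve) @ components.T
        return mean_curve + scores @ components

    return train - predict(train), held_out - predict(held_out)

def rmse(residuals):
    """Root mean squared residual over all curves and grid points."""
    return float(np.sqrt(np.mean(residuals ** 2)))

# Synthetic stand-in for 40 spectra; the last 10 rows play the validation set.
rng = np.random.default_rng(0)
grid = np.linspace(0.0, 1.0, 50)
a = rng.normal(size=(40, 1))
b = rng.normal(size=(40, 1))
spectra = (1.0 + a * np.sin(2 * np.pi * grid)
           + 0.5 * b * np.cos(2 * np.pi * grid)
           + 0.01 * rng.normal(size=(40, 50)))
train, held_out = spectra[:30], spectra[30:]

results = {}
for k in (1, 2):
    train_res, val_res = holdout_residuals(train, held_out, k)
    results[k] = (rmse(train_res), rmse(val_res))
    print(f"components={k}  train RMSE={results[k][0]:.4f}  validation RMSE={results[k][1]:.4f}")
```

A validation RMSE close to the training RMSE suggests the training model describes the held-out curves about as well as the curves it was fitted on; a much larger validation RMSE would point to overfitting or to validation curves that differ from the training ones.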

But I agree with you, having the results for the validation data directly in the platform would be a lot easier. This might be a good idea to add to the JMP Wish List, if it is not already there.

I hope this answer will help you,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: Functional Data Explorer with validation column

Great reply again, thanks Victor!
