
Distribution Nonconformance Statistics vs. Distribution Profiler

Hello JMP Community,

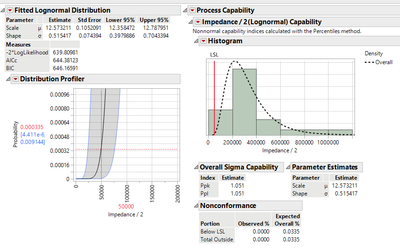

I have a set of data that is best fit with a lognormal distribution. I cannot seem to find what is going on behind the scenes for the Nonconformance statistics vs. the 95% confidence intervals of the distribution profiler. When I run the capability analysis, I get a nonconformance table. The Observed % is clear: none of my actual data fall below the LCL. But what statistics are used to calculate the Expected Overall %? I assume it uses the lognormal distribution and makes some judgment as to how well it fits the data; is it a 3 sigma approach or something else? How is it calculated, and what useful information does it provide compared with the confidence intervals of the distribution profiler?

I think the confidence intervals are interpreted as follows: since I only have an LCL, I would look at the upper 95% confidence limit and make a statement along the lines of 'with 95% confidence, one can expect 0.91% of values to fall below the LCL.'

Thank you for any help on this!

Greg

Accepted Solutions


Re: Distribution Nonconformance Statistics vs. Distribution Profiler

The difference between Observed and Expected is that Observed looks at your data (in the table), while Expected calculates the percentage of the fitted lognormal curve that falls below the LSL. So one describes the sample and the other predicts HVM from the fitted model.
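To make the Observed vs. Expected distinction concrete, here is a minimal Python sketch. It assumes the Expected % is simply the fitted lognormal CDF evaluated at the LSL, with the lognormal parameters estimated by maximum likelihood on the logged data; JMP's exact estimator may differ. The sample values, the `lsl` value, and the function names are made up for illustration.

```python
import math
from statistics import NormalDist, fmean, pstdev


def fit_lognormal(values):
    """Return (mu, sigma) of log(values), the maximum likelihood lognormal fit."""
    logs = [math.log(v) for v in values]
    return fmean(logs), pstdev(logs)


def observed_pct_below(values, lsl):
    """Percentage of the actual data points that fall below the limit."""
    return 100.0 * sum(v < lsl for v in values) / len(values)


def expected_pct_below(mu, sigma, lsl):
    """Percentage of the fitted lognormal curve that lies below the limit."""
    # If X is lognormal(mu, sigma), then log(X) is normal(mu, sigma).
    return 100.0 * NormalDist(mu, sigma).cdf(math.log(lsl))


# Hypothetical measurements and limit (not the poster's data).
sample = [82000, 95000, 101000, 110000, 123000, 140000, 158000, 171000, 205000, 260000]
lsl = 50000

mu, sigma = fit_lognormal(sample)
print("Observed % below LSL:", observed_pct_below(sample, lsl))
print("Expected % below LSL:", expected_pct_below(mu, sigma, lsl))
```

With data like this the Observed % is zero because no point is below the limit, while the Expected % is small but nonzero because the fitted curve has a tail that extends below the limit.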

Capability analysis answers the question: How good is this product at meeting spec limits?

The CI on the curve of the prediction profiler says something about the certainty of your predicted probability (of whatever your response is). So at 50000 Impedance/2 you predict a probability of 0.0003 (based on the sample), with 95% confidence that the HVM value is somewhere between 4.4e-6 and 0.009.
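One way to see why a single predicted probability comes with an interval around it is to refit the model on resampled data and watch the prediction move. The sketch below uses a percentile bootstrap for this. That is a swapped-in technique chosen for illustration, not necessarily how JMP computes the profiler intervals, and the data, the limit, and the function names are hypothetical.

```python
import math
import random
from statistics import NormalDist, fmean, pstdev


def prob_below(values, limit):
    """Fitted lognormal probability of a value falling below the limit."""
    logs = [math.log(v) for v in values]
    mu, sigma = fmean(logs), pstdev(logs)
    if sigma == 0:
        # Degenerate resample (all values equal): the fit is a point mass.
        return 1.0 if math.log(limit) >= mu else 0.0
    return NormalDist(mu, sigma).cdf(math.log(limit))


def bootstrap_interval(values, limit, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the fitted probability below the limit."""
    rng = random.Random(seed)
    n = len(values)
    estimates = sorted(
        prob_below([rng.choice(values) for _ in range(n)], limit)
        for _ in range(n_boot)
    )
    lo_idx = max(0, int((1 - level) / 2 * n_boot))
    hi_idx = min(n_boot - 1, int((1 + level) / 2 * n_boot) - 1)
    return estimates[lo_idx], estimates[hi_idx]


# Hypothetical measurements and limit (not the poster's data).
sample = [82000, 95000, 101000, 110000, 123000, 140000, 158000, 171000, 205000, 260000]
limit = 50000

point = prob_below(sample, limit)
lower, upper = bootstrap_interval(sample, limit)
print("Predicted probability below limit:", point)
print("Approximate 95% interval:", lower, "to", upper)
```

The point estimate corresponds to the single predicted probability, and the interval shows how much that prediction could plausibly vary given the size of the sample.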

So in short they are two very different pieces of information!
